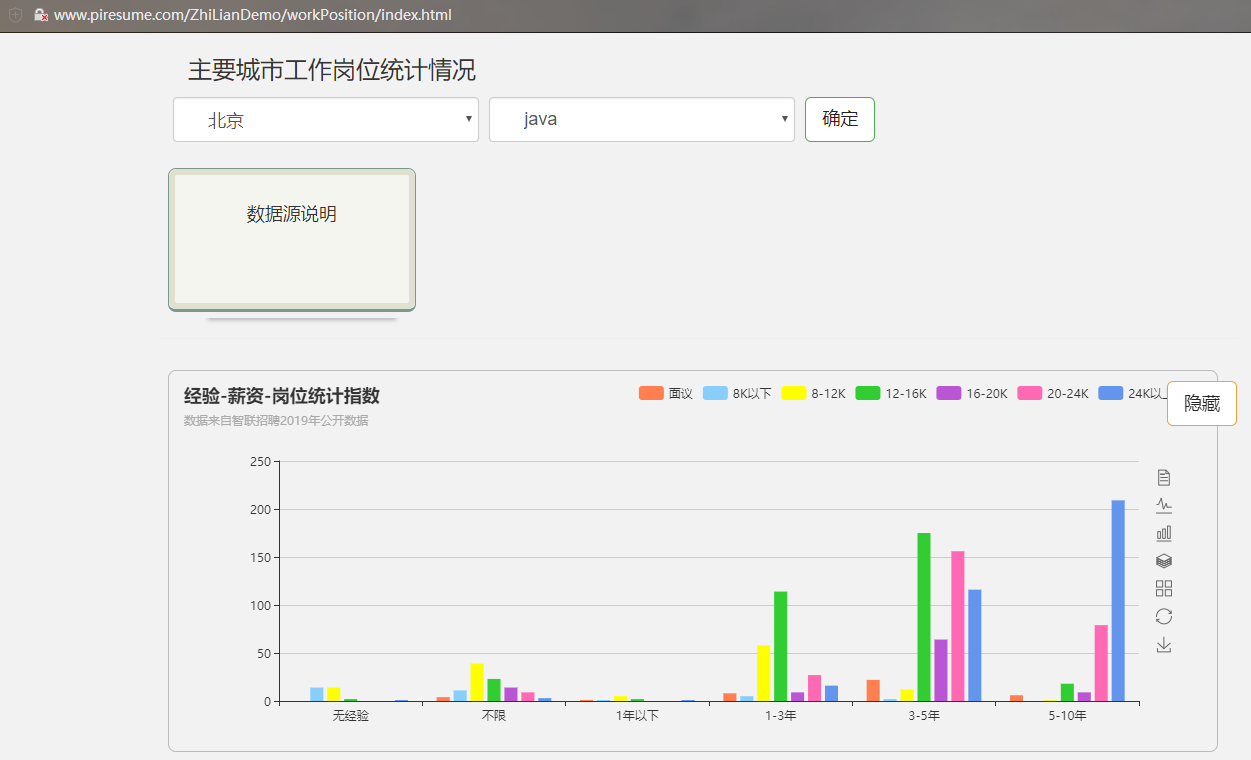

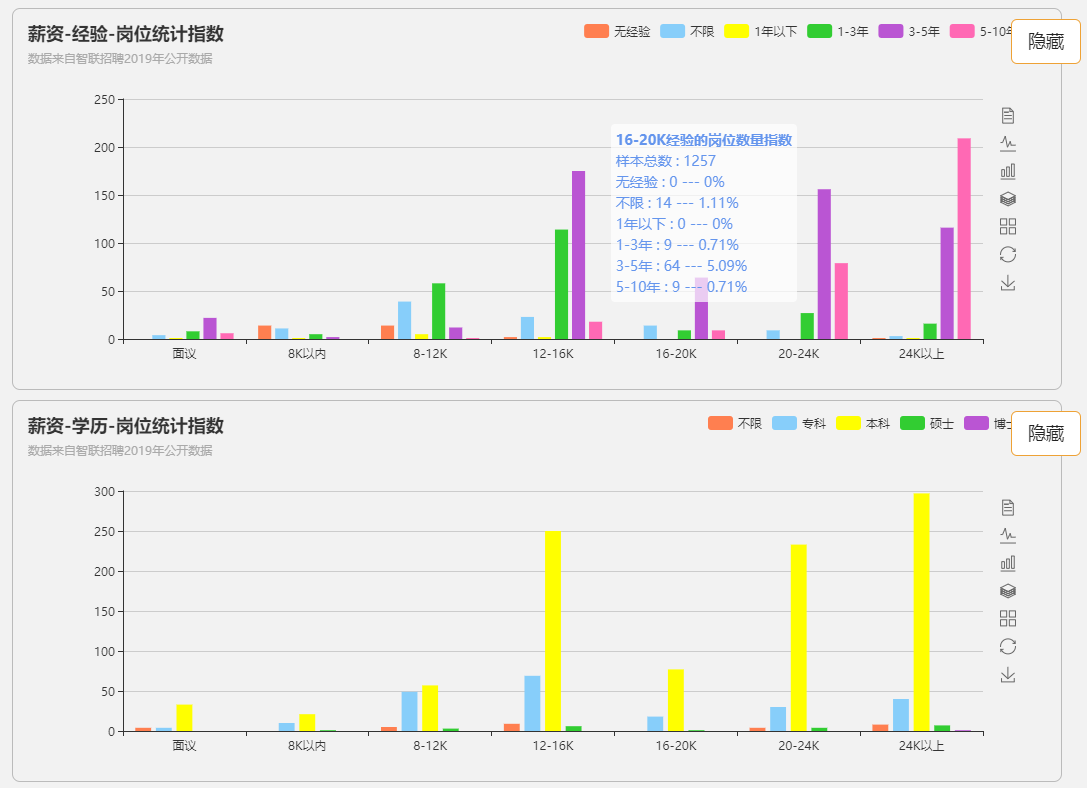

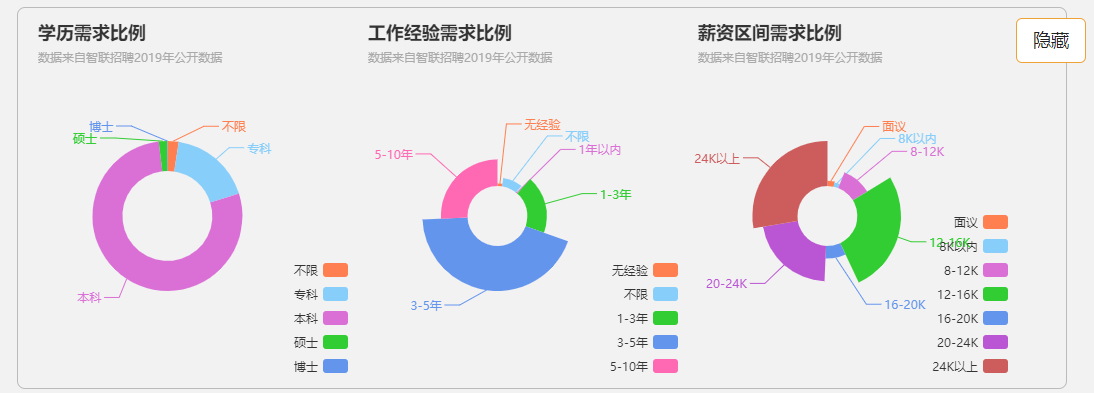

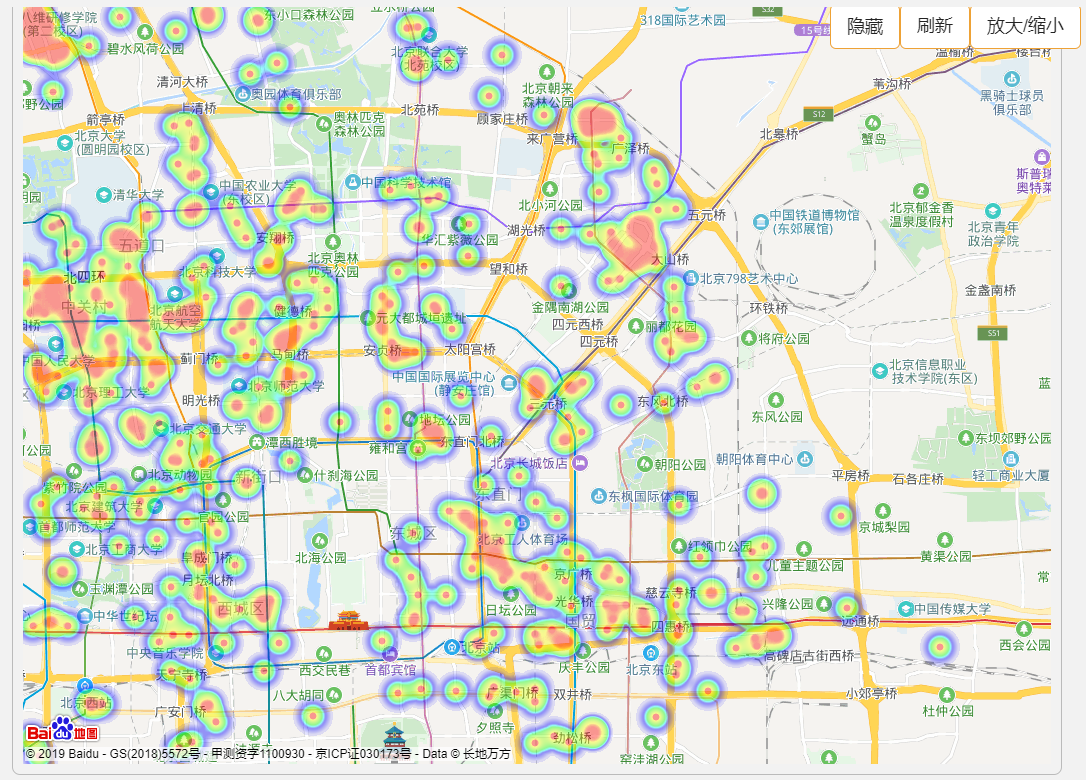

How programmers fare in different cities: a data survey

Original work by liuyuhang. Reproduction strictly prohibited!

Phone & WeChat: 13501043063

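I recently left Beijing and moved back to my hometown. I wanted to survey how programmers are doing in different cities and combine the results with housing prices, so I could decide which city to move to.

That is how this project started.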

Live site: http://www.piresume.com/ZhiLianDemo/workPosition/index.html

The front-end code is not posted here. If you want it, grab it from your browser.

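Notes:

The dataset is not very large yet, and only Beijing is reasonably complete. In my view, data for a single position is only meaningful once it exceeds about 600 records. Zhilian returns just under 1,200 records per query.

The data is still being updated (only about 20,000 records can be added per day) and should be fairly complete in a month or two. Until then, please judge the sample size and reliability for yourself.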

Screenshots of the project results:


I. Project approach

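① On Zhilian Zhaopin, a GET request for a given city and keyword returns up to 90 × 12 job postings (12 pages of 90). The content and format look like this: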

    {
      "code": 200,
      "data": {
        "chatTotal": 0,
        "chatWindowState": 0,
        "count": 1000,
        "informationStream": null,
        "jobLabel": null,
        "method": "",
        "typeSearch": 0,
        "results": [{
          "number": "CC223880420J00328951203",
          "jobName": "web前端开发工程师",
          "company": {
            "name": "四川中疗网络科技有限公司",
            "number": "CZ223880420",
            "type": { "name": "股份制企业" },
            "size": { "name": "20-99人" },
            "url": "https://company.zhaopin.com/CZ223880420.htm"
          },
          "city": { "items": [{ "name": "成都", "code": "801" }], "display": "成都" },
          "updateDate": "2019-07-12 11:27:19",
          "salary": "7K-14K",
          "distance": 0,
          "eduLevel": { "name": "大专" },
          "jobType": { "items": [{ "name": "软件/互联网开发/系统集成" }] },
          "feedbackRation": 0.93,
          "workingExp": { "name": "3-5年" },
          "industry": "160000",
          "emplType": "全职",
          "applyType": "1",
          "saleType": true,
          "positionURL": "https://jobs.zhaopin.com/CC223880420J00328951203.htm",
          "companyLogo": "https://zhaopin-rd5-pub.oss-cn-beijing.aliyuncs.com/imgs/company/6ee298d97aef480d26c096b7827afa70.png",
          "tags": [],
          "expandCount": 0,
          "score": "13",
          "vipLevel": 1002,
          "positionLabel": "{\"qualifications\":null,\"role\":null,\"chatWindow\":null,\"refreshLevel\":0,\"skillLabel\":[{\"state\":0,\"value\":\"VUE\"},{\"state\":0,\"value\":\"Javascript\"},{\"state\":0,\"value\":\"Bootsrap\"}]}",
          "welfare": ["餐补", "加班补助", "弹性工作", "带薪年假", "大牛带队"],
          "businessArea": "桐梓林",
          "futureJob": false,
          "futureJobUrl": "",
          "tagIntHighend": 0,
          "rootOrgId": 22388042,
          "staffId": 1037726905,
          "chatWindow": 0,
          "selected": false,
          "applied": false,
          "collected": false,
          "isShow": false,
          "timeState": "招聘中",
          "rate": "93%"
        }, {
          // the remaining entries have the same format, omitted......

② Each entry contains the URL of that posting's static page, e.g. "positionURL": "https://jobs.zhaopin.com/CC223880420J00328951203.htm".

③ If that page comes back normally (abnormal cases are covered later), the text describing the job's actual location can be parsed out of it.

④ The Baidu Maps JavaScript API can geocode that address text in bulk into longitude and latitude.

⑤ Visualize the collected data with ECharts.
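Step ③ parses the address text out of each posting page. The article's `getPosition` helper is not shown. Below is a minimal sketch that assumes the address text follows the `<i class="iconfont icon-locate"></i>` icon tag and runs up to the next tag. Section V strips exactly that tag from `getPosition`'s result, which suggests this layout. The class name `LocationExtractor` is hypothetical, and the real page markup may differ.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class LocationExtractor {
    // Assumption: the address text comes right after the locate icon tag
    // and ends at the next '<' (the next HTML tag).
    private static final Pattern LOCATION = Pattern.compile(
            "<i class=\"iconfont icon-locate\"></i>\\s*([^<]+)");

    /** Returns the trimmed address text following the locate icon, if present. */
    public static Optional<String> extract(String html) {
        if (html == null) {
            return Optional.empty();
        }
        Matcher m = LOCATION.matcher(html);
        if (!m.find()) {
            return Optional.empty();
        }
        String text = m.group(1).trim();
        return text.isEmpty() ? Optional.empty() : Optional.of(text);
    }
}
```

Returning `Optional.empty()` for a missing marker is deliberate. A blocked response (a slider-captcha page, for example) then comes back as "no address" instead of throwing from a `substring` call.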

II. Problems in collecting the data

① Zhilian's anti-crawler defense works per IP. After roughly 40 requests per minute from one IP, the pages we want can no longer be fetched.

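② Zhilian also blacklists IPs that request too often, apparently with at least two levels:

First comes a slider captcha.

Then come 403 errors, EOF errors and the like.

A ban lasts roughly 5 hours.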

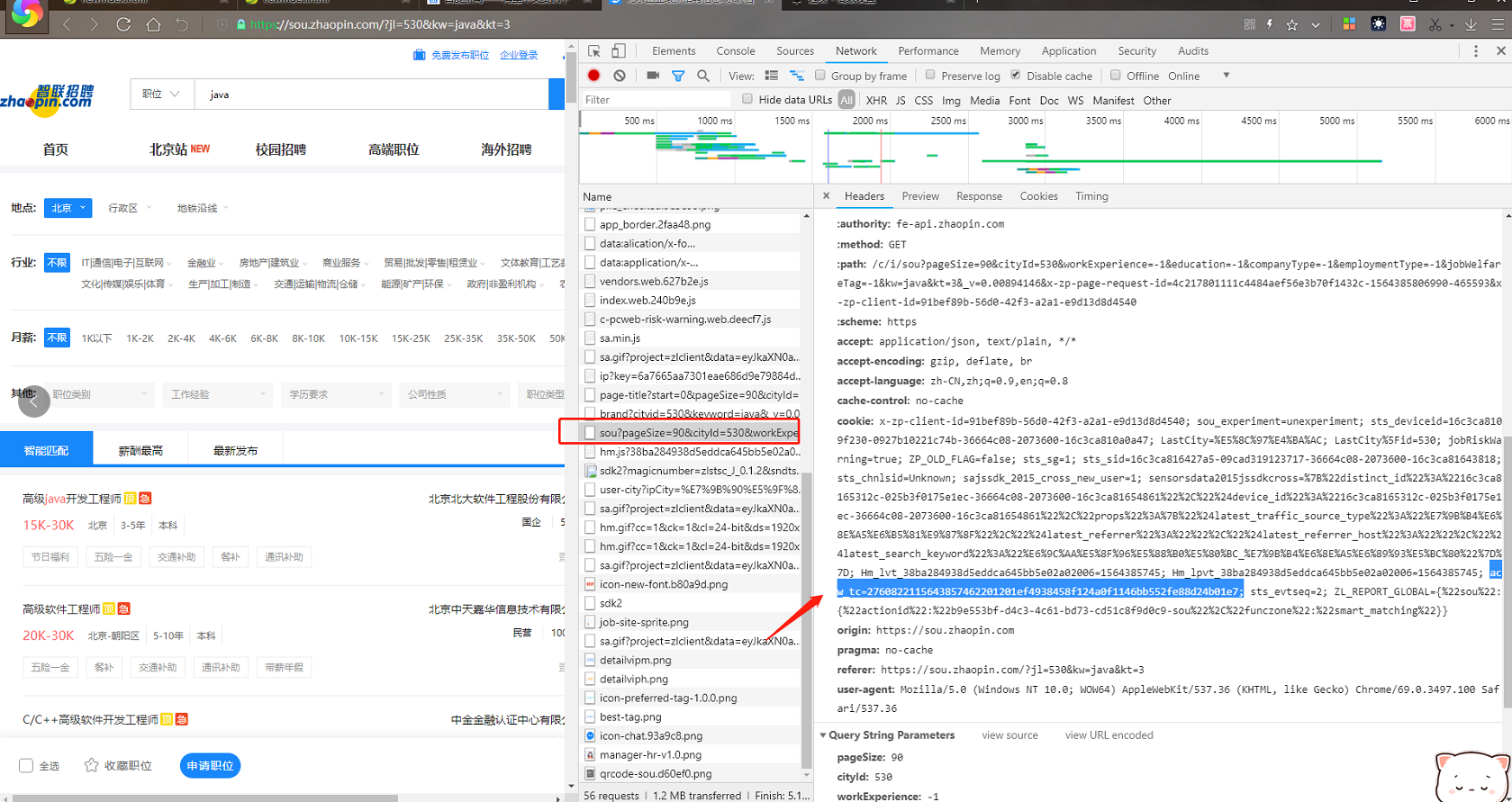

③ Zhilian defends against clients that impersonate a browser with a cookie check. The strategy works like this:

a.

b.

Explanation:

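On the first visit there is no cookie, but the response carries a Set-Cookie header. The browser writes that value into its cookie store itself. The header also marks the cookie HttpOnly, so JavaScript cannot read or set it. A scraper therefore cannot inject the value from script; the client has to process the Set-Cookie header itself.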

The value is also a very long random string, and the other header contents differ from response to response.

After the next refresh, the value requested by that Set-Cookie header appears in the Cookie of the following request. The browser does this, not JavaScript.

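The header above declares the HttpOnly attribute. This means the cookie can only be handled through HTTP headers by the client itself, not through JavaScript.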

So there is no shortcut around this check.

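Moreover, once acw_tc detects impersonation, it switches to different headers, such as x-zp-client. Values it has already placed in the cookie get taken from the headers and put back in again. Handling all of that takes quite a bit of code, and eventually I couldn't be bothered to write it.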

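Even if you get the headers and cookies right, you still can't escape the limit of about 40 requests per minute per IP, and the IP still gets banned. Handling the cookies only avoids the 403 errors and the slider captcha.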

So I simply bought a proxy pool and brute-forced it.

In the first figure (the first visit), the response header contains:

acw_tc=2760822115643857462201201ef4938458f124a0f1146bb552fe88d24b01e7

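On the second refresh, the value is in the request headers (as a cookie) and the response no longer sets it. If, on that second refresh, the IP has not added the value to its cookie, the server will not send it again.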

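After 2-3 consecutive requests in which no Set-Cookie is sent and the cookie still lacks the value, the server answers that IP with a 403 error or a slider captcha.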
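The exchange described above is ordinary cookie handling. HttpOnly only hides the cookie from page JavaScript, so any HTTP client with a cookie store will save `acw_tc` and send it back on the next request. Below is a minimal sketch using the JDK 11 `java.net.http` client with `java.net.CookieManager`, not the Apache `HttpClientUtil` used in this article. `CookieAwareClient` and its helper methods are hypothetical names. As noted above, this only avoids the 403/captcha path; it does nothing about the per-IP rate limit.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.CookieManager;
import java.net.CookiePolicy;
import java.net.URI;
import java.net.http.HttpClient;
import java.util.List;
import java.util.Map;

public class CookieAwareClient {
    // One CookieManager per exit IP, so each proxy keeps its own acw_tc session.
    public static CookieManager newCookieManager() {
        return new CookieManager(null, CookiePolicy.ACCEPT_ORIGINAL_SERVER);
    }

    // A client that stores Set-Cookie values (HttpOnly included) and replays them.
    public static HttpClient newClient(CookieManager cookies) {
        return HttpClient.newBuilder()
                .cookieHandler(cookies)
                .followRedirects(HttpClient.Redirect.NORMAL)
                .build();
    }

    // Feeds a Set-Cookie header into the manager, as the client does with a response.
    public static void acceptSetCookie(CookieManager cookies, URI uri, String setCookie) {
        try {
            cookies.put(uri, Map.of("Set-Cookie", List.of(setCookie)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Returns the Cookie header values the client would send on its next request to uri.
    public static List<String> cookieHeaderFor(CookieManager cookies, URI uri) {
        try {
            return cookies.get(uri, Map.of()).getOrDefault("Cookie", List.of());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In a real crawler you would only call `newClient(...)` and send requests. The two helper methods exist to show, without any network access, that an HttpOnly cookie set on one response is sent back on the next request.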

Because of these problems, crawling the data requires proxy IPs.

For what a proxy IP is and how proxies work, look it up online.
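The limit is per IP: roughly 40 requests per minute, with bans of about 5 hours. A pool of N proxies therefore allows roughly N × 40 requests per minute, as long as each proxy stays under its own budget. Below is a minimal sketch of such a rotator. It assumes the 40-per-minute figure observed above and takes the current time in milliseconds from the caller, so it can be tested without sleeping. `ProxyRotator` and its methods are hypothetical, not part of any proxy provider's API.

```java
import java.util.ArrayList;
import java.util.List;

public class ProxyRotator {
    // Assumed budget, taken from the ~40 requests/minute per IP observed on Zhilian.
    public static final int REQUESTS_PER_MINUTE = 40;
    public static final long MIN_INTERVAL_MS = 60_000L / REQUESTS_PER_MINUTE; // 1500 ms

    private final List<String> proxies;   // entries like "host:port"
    private final long[] nextAllowedAt;   // earliest time each proxy may be used again
    private int cursor = 0;               // round-robin starting point

    public ProxyRotator(List<String> proxies) {
        if (proxies.isEmpty()) {
            throw new IllegalArgumentException("need at least one proxy");
        }
        this.proxies = new ArrayList<>(proxies);
        this.nextAllowedAt = new long[proxies.size()];
    }

    /** Returns a proxy usable at nowMillis, or null if every proxy is still cooling down. */
    public synchronized String acquire(long nowMillis) {
        for (int k = 0; k < proxies.size(); k++) {
            int i = (cursor + k) % proxies.size();
            if (nextAllowedAt[i] <= nowMillis) {
                nextAllowedAt[i] = nowMillis + MIN_INTERVAL_MS;
                cursor = (i + 1) % proxies.size();
                return proxies.get(i);
            }
        }
        return null;
    }

    /** Takes a proxy out of rotation, e.g. after a 403 or a slider-captcha page. */
    public synchronized void ban(String proxy, long nowMillis, long banMillis) {
        int i = proxies.indexOf(proxy);
        if (i >= 0) {
            nextAllowedAt[i] = nowMillis + banMillis;
        }
    }
}
```

A crawler loop would call `acquire(System.currentTimeMillis())` and sleep briefly when it returns `null`. When a response is a 403 or a captcha page, it would call `ban(proxy, now, 5 * 60 * 60 * 1000L)` to match the ban duration observed above.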

III. Crawling the Xici proxy IP pool with Java

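To collect enough data for the statistics and to save time (Xici offers only a limited number of free proxy IPs per day), I bought the paid 花生XX proxy pool... The initial tests, however, used Xici proxies.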

With the paid proxy, there is no need to change the global proxy settings in the Java code, which saved a fair amount of code.
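Global proxy settings in Java usually means JVM-wide system properties such as `http.proxyHost`, which affect every connection in the process. Below is a minimal per-client alternative, sketched with the JDK 11 `java.net.http.HttpClient`: each client is bound to one proxy from the pool, so different threads can use different exit IPs at the same time. `ProxiedClient` is a hypothetical name, and the host is assumed to be an IP literal, as proxy lists provide.

```java
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.http.HttpClient;
import java.time.Duration;

public class ProxiedClient {
    /**
     * Builds a client that sends all HTTP/HTTPS requests through one HTTP proxy.
     * host is expected to be an IP literal, so no DNS lookup is needed for the proxy itself.
     */
    public static HttpClient forProxy(String host, int port) {
        return HttpClient.newBuilder()
                .proxy(ProxySelector.of(new InetSocketAddress(host, port)))
                .connectTimeout(Duration.ofSeconds(20))
                .build();
    }
}
```

Requests are then sent as usual, e.g. `client.send(HttpRequest.newBuilder(URI.create(url)).build(), HttpResponse.BodyHandlers.ofString())`. Keeping one client per proxy also keeps each proxy's cookies separate when a `CookieManager` is attached.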

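To solve the proxy problem, my first thought was a paid proxy IP pool. Then I figured free ones must exist and found Xici proxies. Since Xici offers high-anonymity (elite) proxies, I simply used its high-anonymity IP pool.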

The key parts of the code for fetching that IP pool:

① The HttpClient utility:

    package com.FM.tool;

    import java.io.File;
    import java.io.IOException;
    import java.security.KeyManagementException;
    import java.security.KeyStoreException;
    import java.security.NoSuchAlgorithmException;
    import java.util.Iterator;
    import java.util.List;
    import java.util.Map;

    import org.apache.http.HttpEntity;
    import org.apache.http.HttpStatus;
    import org.apache.http.client.config.RequestConfig;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.client.methods.HttpPost;
    import org.apache.http.config.Registry;
    import org.apache.http.config.RegistryBuilder;
    import org.apache.http.conn.socket.ConnectionSocketFactory;
    import org.apache.http.conn.socket.PlainConnectionSocketFactory;
    import org.apache.http.conn.ssl.SSLConnectionSocketFactory;
    import org.apache.http.conn.ssl.SSLContextBuilder;
    import org.apache.http.conn.ssl.TrustSelfSignedStrategy;
    import org.apache.http.entity.ContentType;
    import org.apache.http.entity.StringEntity;
    import org.apache.http.entity.mime.MultipartEntityBuilder;
    import org.apache.http.entity.mime.content.FileBody;
    import org.apache.http.entity.mime.content.StringBody;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.DefaultHttpRequestRetryHandler;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
    import org.apache.http.util.EntityUtils;

    /**
     * Sends GET/POST requests with httpclient.
     *
     * @author YF02
     */
    public class HttpClientUtil {
        // UTF-8 character encoding
        public static final String CHARSET_UTF_8 = "utf-8";
        // HTTP content type for XML bodies (used by sendHttpPostXml despite the constant's name)
        public static final String CONTENT_TYPE_TEXT_HTML = "text/xml";
        // HTTP content type: form submission
        public static final String CONTENT_TYPE_FORM_URL = "application/x-www-form-urlencoded";
        // HTTP content type: JSON
        public static final String CONTENT_TYPE_JSON_URL = "application/json;charset=utf-8";
        // Shared connection manager
        private static PoolingHttpClientConnectionManager pool;
        // Shared request configuration
        private static RequestConfig requestConfig;

        static {
            try {
                // Trust self-signed certificates
                SSLContextBuilder builder = new SSLContextBuilder();
                builder.loadTrustMaterial(null, new TrustSelfSignedStrategy());
                SSLConnectionSocketFactory sslsf = new SSLConnectionSocketFactory(builder.build());
                // Support both HTTP and HTTPS
                Registry<ConnectionSocketFactory> socketFactoryRegistry = RegistryBuilder
                        .<ConnectionSocketFactory> create()
                        .register("http", PlainConnectionSocketFactory.getSocketFactory())
                        .register("https", sslsf)
                        .build();
                // Initialize the connection manager
                pool = new PoolingHttpClientConnectionManager(socketFactoryRegistry);
                // Raise the maximum total connections to 200; a real project should read this from config
                pool.setMaxTotal(200);
                // Maximum connections per route
                pool.setDefaultMaxPerRoute(2);
            } catch (NoSuchAlgorithmException | KeyStoreException | KeyManagementException e) {
                e.printStackTrace();
            }
            // Timeouts (ms) for leasing a pooled connection, connecting, and reading
            requestConfig = RequestConfig.custom()
                    .setConnectionRequestTimeout(50000)
                    .setSocketTimeout(50000)
                    .setConnectTimeout(50000)
                    .build();
        }

        public static CloseableHttpClient getHttpClient() {
            return HttpClients.custom()
                    // Use the shared connection pool
                    .setConnectionManager(pool)
                    // Default request configuration
                    .setDefaultRequestConfig(requestConfig)
                    // Retry count: 0 (no retries)
                    .setRetryHandler(new DefaultHttpRequestRetryHandler(0, false))
                    .build();
        }

        /**
         * Sends a POST request.
         *
         * @param httpPost the prepared request
         * @return the response body, or null if the status is not 200 or the request fails
         */
        private static String sendHttpPost(HttpPost httpPost) {
            CloseableHttpResponse response = null;
            String responseContent = null;
            try {
                CloseableHttpClient httpClient = getHttpClient();
                httpPost.setConfig(requestConfig);
                // Execute the request
                response = httpClient.execute(httpPost);
                HttpEntity entity = response.getEntity();
                int status = response.getStatusLine().getStatusCode();
                // Treat any status >= 300 as a failure
                if (status >= 300) {
                    throw new Exception("HTTP Request is not success, Response code is " + status);
                }
                if (HttpStatus.SC_OK == status) {
                    responseContent = EntityUtils.toString(entity, CHARSET_UTF_8);
                    // Fully consume the entity stream
                    EntityUtils.consume(entity);
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                try {
                    // Release the connection back to the pool
                    if (response != null) {
                        response.close();
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            return responseContent;
        }

        /**
         * Sends a GET request with browser-like headers.
         *
         * @param httpGet the prepared request
         * @return the response body, or null if the status is not 200 or the request fails
         */
        private static String sendHttpGet(HttpGet httpGet) {
            CloseableHttpResponse response = null;
            String responseContent = null;
            try {
                CloseableHttpClient httpClient = getHttpClient();
                httpGet.setConfig(requestConfig);
                httpGet.addHeader("accept", "*/*");
                httpGet.addHeader("connection", "Keep-Alive");
                httpGet.addHeader("Content-Type", "application/json");
                httpGet.addHeader("user-agent", "Mozilla/5.0 (compatible; MSIE 6.0; Windows NT 5.1;SV1)");
                // Alternative UA: "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36
                // (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36"
                response = httpClient.execute(httpGet);
                HttpEntity entity = response.getEntity();
                int status = response.getStatusLine().getStatusCode();
                // Treat any status >= 300 (403, captcha redirects, ...) as a failure
                if (status >= 300) {
                    throw new Exception("HTTP Request is not success, Response code is " + status);
                }
                if (HttpStatus.SC_OK == status) {
                    responseContent = EntityUtils.toString(entity, CHARSET_UTF_8);
                    EntityUtils.consume(entity);
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                try {
                    if (response != null) {
                        response.close();
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            return responseContent;
        }

        /** Sends a POST request with no body. */
        public static String sendHttpPost(String httpUrl) {
            return sendHttpPost(new HttpPost(httpUrl));
        }

        /** Sends a GET request. */
        public static String sendHttpGet(String httpUrl) {
            return sendHttpGet(new HttpGet(httpUrl));
        }

        /**
         * Sends a multipart POST request with text fields and file attachments.
         *
         * @param httpUrl   URL
         * @param maps      text fields
         * @param fileLists attachments, sent as "files" parts
         */
        public static String sendHttpPost(String httpUrl, Map<String, String> maps, List<File> fileLists) {
            HttpPost httpPost = new HttpPost(httpUrl);
            MultipartEntityBuilder meBuilder = MultipartEntityBuilder.create();
            if (maps != null) {
                for (String key : maps.keySet()) {
                    meBuilder.addPart(key, new StringBody(maps.get(key), ContentType.TEXT_PLAIN));
                }
            }
            if (fileLists != null) {
                for (File file : fileLists) {
                    meBuilder.addPart("files", new FileBody(file));
                }
            }
            httpPost.setEntity(meBuilder.build());
            return sendHttpPost(httpPost);
        }

        /**
         * Sends a form-encoded POST request.
         *
         * @param httpUrl URL
         * @param params  parameters in the form key1=value1&key2=value2
         */
        public static String sendHttpPost(String httpUrl, String params) {
            HttpPost httpPost = new HttpPost(httpUrl);
            try {
                if (params != null && params.trim().length() > 0) {
                    StringEntity stringEntity = new StringEntity(params, "UTF-8");
                    stringEntity.setContentType(CONTENT_TYPE_FORM_URL);
                    httpPost.setEntity(stringEntity);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
            return sendHttpPost(httpPost);
        }

        /** Sends a form-encoded POST request built from a map. */
        public static String sendHttpPost(String httpUrl, Map<String, String> maps) {
            return sendHttpPost(httpUrl, convertStringParamter(maps));
        }

        /**
         * Sends a POST request with a JSON body.
         *
         * @param httpUrl    URL
         * @param paramsJson JSON body
         */
        public static String sendHttpPostJson(String httpUrl, String paramsJson) {
            HttpPost httpPost = new HttpPost(httpUrl);
            try {
                if (paramsJson != null && paramsJson.trim().length() > 0) {
                    StringEntity stringEntity = new StringEntity(paramsJson, "UTF-8");
                    stringEntity.setContentType(CONTENT_TYPE_JSON_URL);
                    httpPost.setEntity(stringEntity);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
            return sendHttpPost(httpPost);
        }

        /**
         * Sends a POST request with an XML body.
         *
         * @param httpUrl   URL
         * @param paramsXml XML body
         */
        public static String sendHttpPostXml(String httpUrl, String paramsXml) {
            HttpPost httpPost = new HttpPost(httpUrl);
            try {
                if (paramsXml != null && paramsXml.trim().length() > 0) {
                    StringEntity stringEntity = new StringEntity(paramsXml, "UTF-8");
                    stringEntity.setContentType(CONTENT_TYPE_TEXT_HTML);
                    httpPost.setEntity(stringEntity);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
            return sendHttpPost(httpPost);
        }

        /**
         * Converts a map into key1=value1&key2=value2 (values are not URL-encoded; null becomes "").
         */
        public static String convertStringParamter(Map<String, String> parameterMap) {
            StringBuilder parameterBuffer = new StringBuilder();
            if (parameterMap != null) {
                Iterator<String> iterator = parameterMap.keySet().iterator();
                while (iterator.hasNext()) {
                    String key = iterator.next();
                    String value = parameterMap.get(key) != null ? parameterMap.get(key) : "";
                    parameterBuffer.append(key).append("=").append(value);
                    if (iterator.hasNext()) {
                        parameterBuffer.append("&");
                    }
                }
            }
            return parameterBuffer.toString();
        }
    }

② Code to fetch the Xici proxy IP list:

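    // Needs: import java.util.ArrayList; import java.util.HashMap; import java.util.List; import java.util.Map;

    /**
     * Fetches free proxy IPs from Xici (high-anonymity list at https://www.xicidaili.com/nn/).
     * Space out calls to this method: Xici has its own anti-crawler measures. If your own IP
     * gets banned and the Xici page no longer opens (usually a 403), disconnect and restart
     * the router; the ISP will assign a new IP, which should be able to access it again.
     *
     * @param num page number; each page lists about fifty IPs
     * @return a list of maps with "host" and "port" keys
     */
    public List<Map<String, String>> getIps(Integer num) {
        StringBuilder buffer = new StringBuilder();
        buffer.append("https://www.xicidaili.com/nn/"); // Xici proxy list URL
        if (null != num) {
            buffer.append(num);
        }
        String result = HttpClientUtil.sendHttpGet(buffer.toString());
        List<Map<String, String>> hostAndPortList = new ArrayList<>();
        if (result == null) {
            // sendHttpGet returns null on a non-200 status or an exception
            System.out.println("Failed to fetch the proxy list page");
            return hostAndPortList;
        }
        // In each table row the IP cell comes right after this flag image
        String indexFlag = "<img src=\"//fs.xicidaili.com/images/flag/cn.png\" alt=\"Cn\" /></td>";
        List<Integer> indexList = new ArrayList<>();
        int index = 0;
        while (true) {
            int indexs = result.indexOf(indexFlag, index + 1);
            if (indexs > index) {
                indexList.add(indexs);
                index = indexs;
            } else {
                break;
            }
        }
        for (int i = 0; i < indexList.size(); i++) {
            int start = indexList.get(i) + indexFlag.length();
            // The next 55 characters should contain <td>host</td> ... <td>port</td>
            String hostAndPort = result.substring(start, Math.min(start + 55, result.length()));
            Map<String, String> temp = new HashMap<>();
            // host: between the first <td> and the first </td>
            temp.put("host", hostAndPort.substring(hostAndPort.indexOf("<td>") + 4, hostAndPort.indexOf("</td>")));
            // port: between the last <td> and the last </td>
            temp.put("port", hostAndPort.substring(hostAndPort.lastIndexOf("<td>") + 4, hostAndPort.lastIndexOf("</td>")));
            hostAndPortList.add(temp);
        }
        System.out.println("Fetched proxy IPs successfully: " + hostAndPortList.size() + " in total");
        return hostAndPortList;
    }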
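`getIps` relies on a fixed 55-character window after the flag image, which breaks if the row markup gains whitespace or attributes. A somewhat sturdier option is to match the IPv4 cell and the port cell that follows it with a regular expression. Below is a minimal sketch that assumes each row has `<td>IP</td>` followed by `<td>port</td>`, the same layout `getIps` expects. `ProxyListParser` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class ProxyListParser {
    // Assumption: an IPv4 cell immediately followed (after optional whitespace) by a port cell.
    private static final Pattern ROW = Pattern.compile(
            "<td>\\s*(\\d{1,3}(?:\\.\\d{1,3}){3})\\s*</td>\\s*<td>\\s*(\\d{1,5})\\s*</td>");

    /** Returns "host:port" strings for every IP/port cell pair found in the page. */
    public static List<String> parse(String html) {
        List<String> result = new ArrayList<>();
        if (html == null) {
            return result;
        }
        Matcher m = ROW.matcher(html);
        while (m.find()) {
            int port = Integer.parseInt(m.group(2));
            // Skip values that cannot be TCP ports
            if (port > 0 && port <= 65535) {
                result.add(m.group(1) + ":" + port);
            }
        }
        return result;
    }
}
```

Cells that are not an IP followed by a port (location, anonymity, speed) simply do not match, so the parser does not need to know how many columns the table has.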

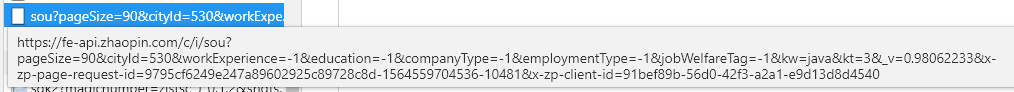

IV. Crawling Zhilian job lists with Java

Fetching Zhilian's job list is relatively easy: you only need to build the correct URL, which you can see in the browser's developer console.

The parameters are explained online, but most of them don't matter. The request code I used:

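    // Needs: java.math.BigInteger, java.net.URLEncoder, java.nio.charset.StandardCharsets,
    // java.security.MessageDigest, java.security.NoSuchAlgorithmException, java.util.ArrayList,
    // java.util.Locale, java.util.Map, java.util.concurrent.ThreadLocalRandom,
    // and com.alibaba.fastjson.JSON (JSON.parse is assumed to be fastjson's)

    /**
     * Collects all Zhilian list pages for a keyword and city into one list.
     *
     * @param keyWord search keyword
     * @param city    Zhilian city id
     * @return one parsed JSON map per page (null for a page that failed)
     * @throws NoSuchAlgorithmException if MD5 is unavailable
     */
    public ArrayList<Map> getListAllInZhiLian(String keyWord, String city) throws NoSuchAlgorithmException {
        ArrayList<Map> list = new ArrayList<>();
        for (int i = 0; i < 12; i++) {
            list.add(getList(keyWord, city, i));
        }
        return list;
    }

    /**
     * Fetches one Zhilian list page (12 pages in total, 90 postings each).
     *
     * @param keyWord search keyword
     * @param city    Zhilian city id
     * @param page    page index, 0-11
     * @return the parsed JSON response, or null if the request failed
     * @throws NoSuchAlgorithmException if MD5 is unavailable
     */
    public Map getList(String keyWord, String city, int page) throws NoSuchAlgorithmException {
        StringBuilder buffer = new StringBuilder();
        buffer.append("https://fe-api.zhaopin.com/c/i/sou?");
        if (page > 0) {
            buffer.append("&start=" + (90 * page)); // offset; at most 12 pages
        }
        buffer.append("&pageSize=90");              // postings per page
        buffer.append("&cityId=" + city);           // city
        buffer.append("&workExperience=-1");        // work experience
        buffer.append("&education=-1");             // education
        buffer.append("&companyType=-1");           // company type
        buffer.append("&employmentType=-1");        // ...
        buffer.append("&jobWelfareTag=-1");         // ...
        // Search keyword, URL-encoded so Chinese keywords and spaces are safe
        // (Java 10+; on Java 8 use URLEncoder.encode(keyWord, "UTF-8"))
        buffer.append("&kw=" + URLEncoder.encode(keyWord, StandardCharsets.UTF_8));
        buffer.append("&kt=3");                                 // ...
        buffer.append("&rt=e7e586269e414dd8a2906eb55808a780");  // ...
        // _v: a random number with 8 decimal places, e.g. 0.34575205
        buffer.append("&_v=" + String.format(Locale.ROOT, "%.8f", Math.random()));
        // x-zp-page-request-id: MD5 of a random digit string (32 hex chars) + timestamp + 6 random digits
        ThreadLocalRandom rnd = ThreadLocalRandom.current();
        String str = String.format("%08d%08d%08d",
                rnd.nextInt(100_000_000), rnd.nextInt(100_000_000), rnd.nextInt(100_000_000));
        MessageDigest md = MessageDigest.getInstance("MD5");
        md.update(str.getBytes(StandardCharsets.UTF_8));
        // %032x keeps leading zeros, so the hash is always 32 characters
        String mdStr = String.format("%032x", new BigInteger(1, md.digest()));
        String time = String.valueOf(System.currentTimeMillis());
        String random = String.format("%06d", rnd.nextInt(1_000_000));
        buffer.append("&x-zp-page-request-id=" + mdStr + "-" + time + "-" + random);
        buffer.append("&x-zp-client-id=91a68428-2f82-47fc-aaa7-3056aeaf4062"); // ...
        String result = HttpClientUtil.sendHttpGet(buffer.toString());
        // sendHttpGet returns null on failure
        return result == null ? null : (Map) JSON.parse(result);
    }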
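`getListAllInZhiLian` always requests 12 pages, and every request counts against the per-IP limit. One option is to read `data.count` from the first page (1000 in the sample response) and request only as many pages as that count needs. Below is a minimal sketch of the paging arithmetic. It assumes `count` reflects the number of retrievable postings and keeps the 90-per-page, 12-page cap used by `getList`. `PagePlanner` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

public class PagePlanner {
    public static final int PAGE_SIZE = 90;  // pageSize used in getList
    public static final int MAX_PAGES = 12;  // the list API serves at most 12 pages

    /** Number of pages worth requesting for a given result count, capped at MAX_PAGES. */
    public static int pagesFor(int count) {
        if (count <= 0) {
            return 0;
        }
        int pages = (count + PAGE_SIZE - 1) / PAGE_SIZE; // ceiling division
        return Math.min(pages, MAX_PAGES);
    }

    /** The start= offsets to request: 0, 90, 180, ... */
    public static List<Integer> startOffsets(int count) {
        List<Integer> offsets = new ArrayList<>();
        int pages = pagesFor(count);
        for (int page = 0; page < pages; page++) {
            offsets.add(page * PAGE_SIZE);
        }
        return offsets;
    }
}
```

For a city and keyword with only a few hundred postings, this trims the 12 list requests down to a handful.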

V. Getting each posting's work address from the job list

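The flow is: get the Xici proxy pool, fetch the job lists from Zhilian, request each posting page in those lists, parse the address from the page, and store it in the database. The main structure of the code: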

    // Fragment of the controller method. session, sessionFactory (assumed to be a MyBatis
    // SqlSessionFactory), paramsSimple, resultMap and getPosition are defined elsewhere.
    String keyWord = (String) paramsSimple.get("keyWord");
    String city = (String) paramsSimple.get("city");
    // Validate the input before firing 12 list requests
    if (keyWord == null || keyWord.isEmpty() || city == null || city.isEmpty()) {
        resultMap.put("list", "No keyword entered");
        resultMap.put("status", "error");
        resultMap.put("message", "City or keyword missing");
        return resultMap; // converted to JSON automatically
    }
    ArrayList list = getListAllInZhiLian(keyWord, city);
    System.out.println("Fetching addresses: " + list.size() + " list pages to process");
    for (int i = 0; i < list.size(); i++) {
        List<Map<String, Object>> li = null;
        try {
            li = (List) ((Map) ((Map) list.get(i)).get("data")).get("results");
        } catch (Exception e2) {
            System.out.println("li error, may be null");
        }
        if (li != null && !li.isEmpty()) {
            System.out.println(li.size() + " links to query");
            for (int j = 0; j < li.size(); j++) {
                Map<String, Object> job = li.get(j);
                String positionUrl = (String) job.get("positionURL");
                // Check whether this URL is already in the database
                Map paramCheck = new HashMap();
                paramCheck.put("positionUrl", positionUrl);
                Map checkUrl = null;
                session = sessionFactory.openSession();
                try {
                    checkUrl = session.selectOne("com.FM.otherMapper.kwMapper.getJobByUrl", paramCheck);
                } catch (Exception e1) {
                    System.out.println("checkUrl error");
                } finally {
                    session.close();
                }
                if (checkUrl != null) {
                    System.out.println("url:" + positionUrl + " already in the database, skipping");
                    continue;
                }
                // getPosition (not shown) fetches the posting page and returns the location
                // snippet, which starts with the locate icon tag
                String location = getPosition(positionUrl);
                if (location == null) {
                    continue;
                }
                String loc = location.substring("<i class=\"iconfont icon-locate\"></i>".length());
                job.put("location", loc);
                Map<String, Object> param = new HashMap<>();
                param.put("companyName", ((Map) job.get("company")).get("name"));
                param.put("jobName", job.get("jobName"));
                param.put("keyWord", keyWord);
                String salary = (String) job.get("salary");
                if (salary != null && salary.contains("-")) {
                    // e.g. "7K-14K" -> salaryLow "7", salaryTop "14"
                    param.put("salaryLow", salary.split("-")[0].split("K")[0]);
                    param.put("salaryTop", salary.split("-")[1].split("K")[0]);
                } else {
                    // No range in the value: store 0
                    param.put("salaryLow", 0);
                    param.put("salaryTop", 0);
                }
                param.put("positionUrl", job.get("positionURL"));
                param.put("location", loc);
                param.put("workingExp", ((Map) job.get("workingExp")).get("name"));
                param.put("eduLevel", ((Map) job.get("eduLevel")).get("name"));
                param.put("emplType", job.get("emplType"));
                param.put("updateBy", new Date());
                // Coordinates are filled in later by the geocoding step
                param.put("x", "0");
                param.put("y", "0");
                param.put("city", city);
                session = sessionFactory.openSession();
                try {
                    session.insert("com.FM.otherMapper.kwMapper.createJob", param);
                    // MyBatis openSession() does not auto-commit by default
                    session.commit();
                    System.out.println("url:" + positionUrl + " address:" + loc + " saved to the database");
                } catch (Exception e) {
                    System.out.println("insert error");
                } finally {
                    session.close();
                }
            }
        } else {
            System.out.println("li is empty; failed to get the job list");
            System.out.println(li);
        }
        System.out.println("Finished a page of 90, continuing!");
    }
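The salary handling in the loop splits on `-` and `K` inline and would throw on an unexpected value, such as a string that ends in `-`. A small parser keeps that logic in one place and returns numbers ready for statistics (for example, the midpoint of each range). Below is a minimal sketch that assumes ranges look like the sample `7K-14K`, with an optional lowercase `k`. Anything else falls back to 0-0, as the loop does. `SalaryRange` is a hypothetical name.

```java
public class SalaryRange {
    public final double low;   // lower bound, in the K units Zhilian writes (e.g. 7 for "7K")
    public final double high;  // upper bound

    private SalaryRange(double low, double high) {
        this.low = low;
        this.high = high;
    }

    /** Parses "7K-14K"-style ranges; returns 0-0 for anything it cannot read. */
    public static SalaryRange parse(String salary) {
        if (salary == null) {
            return new SalaryRange(0, 0);
        }
        String[] parts = salary.trim().split("-");
        if (parts.length != 2) {
            return new SalaryRange(0, 0);
        }
        try {
            return new SalaryRange(Double.parseDouble(stripK(parts[0])),
                                   Double.parseDouble(stripK(parts[1])));
        } catch (NumberFormatException e) {
            return new SalaryRange(0, 0);
        }
    }

    // Removes a trailing "K"/"k" unit marker
    private static String stripK(String s) {
        String t = s.trim();
        if (t.endsWith("K") || t.endsWith("k")) {
            t = t.substring(0, t.length() - 1);
        }
        return t;
    }

    /** Midpoint of the range, a common single number for averaging salaries. */
    public double midpoint() {
        return (low + high) / 2;
    }
}
```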

I triggered this code with Spring MVC: entering city and key_word on a page kicked it off. I changed this later.

VI. Geocoding the work addresses

The addresses are converted to coordinates by Baidu. I adapted the geocoding example from the Baidu Maps JavaScript API; the modified code is:

    /*
     * Entry point: fetch the addresses to geocode and process each one
     */
    function getGis() {
        $.ajax({
            type: 'POST',
            // returns the addresses to geocode; `local` is defined elsewhere (presumably the app's base URL)
            url: local + "KeyWord/getGis.do",
            data: {},
            async: true,
            success: function (resultMap) {
                if (resultMap.status == 'success') {
                    for (var i = 0; i < resultMap.gisList.length; i++) {
                        updateGis(resultMap.gisList[i]); // geocode each address in the list
                    }
                } else {
                    console.error(resultMap);
                }
                console.log(resultMap);
            },
            error: function (resultMap) {
                console.error(resultMap);
            }
        });
    }

    // Objects needed for geocoding
    var map = new BMap.Map("map0");
    var myGeo = new BMap.Geocoder();

    // Geocodes one record
    function updateGis(li) {
        geocodeSearch(li);
    }

    // Calls Baidu's geocoder (address text -> point), searching within li.city
    function geocodeSearch(li) {
        myGeo.getPoint(li.location, function (point) {
            if (point) {
                li.x = point.lng;
                li.y = point.lat;
                console.log(li.id);
                modifyGis(li); // save x/y to the database
            } else {
                console.log('error');
            }
        }, li.city);
    }

    /*
     * Save the x/y (longitude/latitude) to the database
     */
    function modifyGis(li) {
        $.ajax({
            type: 'POST',
            url: local + "KeyWord/updateGis.do",
            data: {
                id: li.id,
                x: li.x,
                y: li.y
            },
            async: true,
            success: function (resultMap) {
                // console.log(resultMap)
            },
            error: function (resultMap) {
                console.error(resultMap);
            }
        });
    }
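The script geocodes every row in `gisList` separately, even when many postings share the same office address, and each call may count against the map API's usage quota. One option is to group rows by city and address on the server before returning `gisList`. Each distinct address would then be geocoded once, and its coordinates written back to every id in the group. Below is a minimal sketch of that grouping step. `GeocodeBatcher` and its `Job` fields are hypothetical, mirroring the `id`/`city`/`location` fields the script reads.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class GeocodeBatcher {
    /** Minimal job row: database id, city and address text (hypothetical shape of a gisList item). */
    public static final class Job {
        final long id;
        final String city;
        final String location;

        public Job(long id, String city, String location) {
            this.id = id;
            this.city = city;
            this.location = location;
        }
    }

    /** Groups job ids by "city|normalized address" so each distinct address is geocoded once. */
    public static Map<String, List<Long>> groupByAddress(List<Job> jobs) {
        Map<String, List<Long>> groups = new LinkedHashMap<>();
        for (Job job : jobs) {
            String key = job.city + "|" + normalize(job.location);
            groups.computeIfAbsent(key, k -> new ArrayList<>()).add(job.id);
        }
        return groups;
    }

    // Trims and collapses runs of whitespace so trivially different spellings share a key
    static String normalize(String location) {
        return location == null ? "" : location.trim().replaceAll("\\s+", " ");
    }
}
```

The city stays part of the key because the script passes `li.city` to the geocoder, so the same street name in two cities can resolve to different points.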

VII. Statistics and analysis

The results are available at:

http://www.piresume.com/ZhiLianDemo/workPosition/index.html

That's all!