Background

一. 什么是Presto

Presto通过使用分布式查询,可以快速高效的完成海量数据的查询。如果你需要处理TB或者PB级别的数据,那么你可能更希望借助于Hadoop和HDFS来完成这些数据的处理。作为Hive和Pig(Hive和Pig都是通过MapReduce的管道流来完成HDFS数据的查询)的替代者,Presto不仅可以访问HDFS,也可以操作不同的数据源,包括:RDBMS和其他的数据源(例如:Cassandra)。

Presto被设计为数据仓库和数据分析产品:数据分析、大规模数据聚集和生成报表。这些工作经常通常被认为是线上分析处理操作。

Presto是FaceBook开源的一个开源项目。Presto在FaceBook诞生,并且由FaceBook内部工程师和开源社区的工程师公共维护和改进。

二. 环境和应用准备

- 环境

macbook pro

- application

Docker for mac: https://docs.docker.com/docker-for-mac/#check-versions

jdk-1.8: http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

hadoop-2.7.5

hive-2.3.3

presto-cli-0.198-executable.jar

三. 构建images

我们使用Docker来启动三台Centos7虚拟机,三台机器上安装Hadoop和Java。

1. 安装Docker,Macbook上安装Docker,并使用仓库账号登录。

docker login

2. 验证安装结果

docker version

3. 拉取Centos7 images

docker pull centos

4. 构建具有ssh功能的centos

mkdir ~/centos-ssh cd centos-ssh vi Dockerfile

# 选择一个已有的os镜像作为基础 FROM centos # 镜像的作者 MAINTAINER crxy # 安装openssh-server和sudo软件包,并且将sshd的UsePAM参数设置成no RUN yum install -y openssh-server sudo RUN sed -i 's/UsePAM yes/UsePAM no/g' /etc/ssh/sshd_config #安装openssh-clients RUN yum install -y openssh-clients # 添加测试用户root,密码root,并且将此用户添加到sudoers里 RUN echo "root:root" | chpasswd RUN echo "root ALL=(ALL) ALL" >> /etc/sudoers # 下面这两句比较特殊,在centos6上必须要有,否则创建出来的容器sshd不能登录 RUN ssh-keygen -t dsa -f /etc/ssh/ssh_host_dsa_key RUN ssh-keygen -t rsa -f /etc/ssh/ssh_host_rsa_key # 启动sshd服务并且暴露22端口 RUN mkdir /var/run/sshd EXPOSE 22 CMD ["/usr/sbin/sshd", "-D"]

构建

docker build -t=”centos-ssh” .

5. 基于centos-ssh镜像构建有JDK和Hadoop的镜像

mkdir ~/hadoop cd hadoop vi Dockerfile

FROM centos-ssh ADD jdk-8u161-linux-x64.tar.gz /usr/local/ RUN mv jdk-8u161-linux-x64.tar.gz /usr/local/jdk1.7 ENV JAVA_HOME /usr/local/jdk1.8 ENV PATH $JAVA_HOME/bin:$PATH ADD hadoop-2.7.5.tar.gz /usr/local RUN mv hadoop-2.7.5.tar.gz /usr/local/hadoop ENV HADOOP_HOME /usr/local/hadoop ENV PATH $HADOOP_HOME/bin:$PATH

jdk包和hadoop包要放在hadoop目录下

docker build -t=”centos-hadoop” .

四. 搭建Hadoop集群

1. 集群规划

搭建有三个节点的hadoop集群,一主两从

主节点:hadoop0 ip:172.18.0.2 从节点1:hadoop1 ip:172.18.0.3 从节点2:hadoop2 ip:172.18.0.4

但是由于docker容器重新启动之后ip会发生变化,所以需要我们给docker设置固定ip。

Docker安装后,默认会创建下面三种网络类型:

docker network ls jinhongliu@Jinhongs-MacBo NETWORK ID NAME DRIVER SCOPE 085be4855a90 bridge bridge local 177432e48de5 host host local 569f368d1561 none null local

启动 Docker的时候,用 --network 参数,可以指定网络类型,如:

~ docker run -itd --name test1 --network bridge --ip 172.17.0.10 centos:latest /bin/bash

bridge:桥接网络

默认情况下启动的Docker容器,都是使用 bridge,Docker安装时创建的桥接网络,每次Docker容器重启时,会按照顺序获取对应的IP地址,这个就导致重启下,Docker的IP地址就变了.

none:无指定网络

使用 --network=none ,docker 容器就不会分配局域网的IP

host: 主机网络

使用 --network=host,此时,Docker 容器的网络会附属在主机上,两者是互通的。

例如,在容器中运行一个Web服务,监听8080端口,则主机的8080端口就会自动映射到容器中。

创建自定义网络:(设置固定IP)

启动Docker容器的时候,使用默认的网络是不支持指派固定IP的,如下:

~ docker run -itd --net bridge --ip 172.17.0.10 centos:latest /bin/bash 6eb1f228cf308d1c60db30093c126acbfd0cb21d76cb448c678bab0f1a7c0df6 docker: Error response from daemon: User specified IP address is supported on user defined networks only.

因此,需要创建自定义网络,下面是具体的步骤:

步骤1: 创建自定义网络

创建自定义网络,并且指定网段:172.18.0.0/16

➜ ~ docker network create --subnet=172.18.0.0/16 mynetwork ➜ ~ docker network ls NETWORK ID NAME DRIVER SCOPE 085be4855a90 bridge bridge local 177432e48de5 host host local 620ebbc09400 mynetwork bridge local 569f368d1561 none null local

步骤2: 创建docker容器。启动三个容器,分别作为hadoop0 hadoop1 hadoop2

➜ ~ docker run --name hadoop0 --hostname hadoop0 --net mynetwork --ip 172.18.0.2 -d -P -p 50070:50070 -p 8088:8088 centos-hadoop

➜ ~ docker run --name hadoop0 --hostname hadoop1 --net mynetwork --ip 172.18.0.3 -d -P centos-hadoop

➜ ~ docker run --name hadoop0 --hostname hadoop2 --net mynetwork --ip 172.18.0.4 -d -P centos-hadoop

使用docker ps 查看刚才启动的是三个容器:

5e0028ed6da0 hadoop "/usr/sbin/sshd -D" 16 hours ago Up 3 hours 0.0.0.0:32771->22/tcp hadoop2 35211872eb20 hadoop "/usr/sbin/sshd -D" 16 hours ago Up 4 hours 0.0.0.0:32769->22/tcp hadoop1 0f63a870ef2b hadoop "/usr/sbin/sshd -D" 16 hours ago Up 5 hours 0.0.0.0:8088->8088/tcp, 0.0.0.0:50070->50070/tcp, 0.0.0.0:32768->22/tcp hadoop0

这样3台机器就有了固定的IP地址。验证一下,分别ping三个ip,能ping通就说明没问题。

五. 配置Hadoop集群

1. 先连接到hadoop0上, 使用命令

docker exec -it hadoop0 /bin/bash

下面的步骤就是hadoop集群的配置过程

1:设置主机名与ip的映射,修改三台容器:vi /etc/hosts

添加下面配置

172.18.0.2 hadoop0 172.18.0.3 hadoop1 172.18.0.4 hadoop2

2:设置ssh免密码登录

在hadoop0上执行下面操作

cd ~ mkdir .ssh cd .ssh ssh-keygen -t rsa(一直按回车即可) ssh-copy-id -i localhost ssh-copy-id -i hadoop0 ssh-copy-id -i hadoop1 ssh-copy-id -i hadoop2 在hadoop1上执行下面操作 cd ~ cd .ssh ssh-keygen -t rsa(一直按回车即可) ssh-copy-id -i localhost ssh-copy-id -i hadoop1 在hadoop2上执行下面操作 cd ~ cd .ssh ssh-keygen -t rsa(一直按回车即可) ssh-copy-id -i localhost ssh-copy-id -i hadoop2

3:在hadoop0上修改hadoop的配置文件

进入到/usr/local/hadoop/etc/hadoop目录

修改目录下的配置文件core-site.xml、hdfs-site.xml、yarn-site.xml、mapred-site.xml

(1)hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.8

(2)core-site.xml

<configuration> <property> <name>fs.defaultFS</name> <value>hdfs://hadoop0:9000</value> </property> <property> <name>hadoop.tmp.dir</name> <value>/usr/local/hadoop/tmp</value> </property> <property> <name>fs.trash.interval</name> <value>1440</value> </property> </configuration>

(3)hdfs-site.xml

<configuration> <property> <name>dfs.replication</name> <value>1</value> </property> <property> <name>dfs.permissions</name> <value>false</value> </property> </configuration>

(4)yarn-site.xml

<configuration> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <property> <name>yarn.log-aggregation-enable</name> <value>true</value> </property> </configuration>

(5)修改文件名:mv mapred-site.xml.template mapred-site.xml

vi mapred-site.xml

<configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> </configuration>

(6)格式化

进入到/usr/local/hadoop目录下

执行格式化命令

bin/hdfs namenode -format 注意:在执行的时候会报错,是因为缺少which命令,安装即可 执行下面命令安装 yum install -y which

格式化操作不能重复执行。如果一定要重复格式化,带参数-force即可。

(7)启动伪分布hadoop

命令:sbin/start-all.sh

第一次启动的过程中需要输入yes确认一下。 使用jps,检查进程是否正常启动?能看到下面几个进程表示伪分布启动成功

3267 SecondaryNameNode 3003 NameNode 3664 Jps 3397 ResourceManager 3090 DataNode 3487 NodeManager

(8)停止伪分布hadoop

命令:sbin/stop-all.sh

(9)指定nodemanager的地址,修改文件yarn-site.xml

<property> <description>The hostname of the RM.</description> <name>yarn.resourcemanager.hostname</name> <value>hadoop0</value> </property>

(10)修改hadoop0中hadoop的一个配置文件etc/hadoop/slaves

删除原来的所有内容,修改为如下

hadoop1

hadoop2

(11)在hadoop0中执行命令

scp -rq /usr/local/hadoop hadoop1:/usr/local scp -rq /usr/local/hadoop hadoop2:/usr/local

(12)启动hadoop分布式集群服务

执行sbin/start-all.sh

注意:在执行的时候会报错,是因为两个从节点缺少which命令,安装即可

分别在两个从节点执行下面命令安装

yum install -y which

再启动集群(如果集群已启动,需要先停止)

(13)验证集群是否正常

首先查看进程:

Hadoop0上需要有这几个进程

4643 Jps 4073 NameNode 4216 SecondaryNameNode 4381 ResourceManager

Hadoop1上需要有这几个进程

715 NodeManager 849 Jps 645 DataNode

Hadoop2上需要有这几个进程

456 NodeManager 589 Jps 388 DataNode

使用程序验证集群服务

创建一个本地文件

vi a.txt hello you hello me

上传a.txt到hdfs上

hdfs dfs -put a.txt /

执行wordcount程序

cd /usr/local/hadoop/share/hadoop/mapreduce hadoop jar hadoop-mapreduce-examples-2.4.1.jar wordcount /a.txt /out

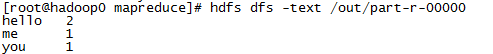

查看程序执行结果

这样就说明集群正常了。

通过浏览器访问集群的服务

由于在启动hadoop0这个容器的时候把50070和8088映射到宿主机的对应端口上了

所以在这可以直接通过宿主机访问容器中hadoop集群的服务

六. 安装Hive

我们使用Presto的hive connector来对hive中的数据进行查询,因此需要先安装hive.

1. 本地下载hive,使用下面的命令传到hadoop0上

docker cp ~/Download/hive-2.3.3-bin.tar.gz 容器ID:/

2. 解压到指定目录

tar -zxvf apache-hive-2.3.3-bin.tar.gz mv apache-hive-2.3.3-bin /hive cd /hive

3、配置/etc/profile,在/etc/profile中添加如下语句

export HIVE_HOME=/usr/local/hive

export PATH=$HIVE_HOME/bin:$PATH

source /etc/profile

4、安装MySQL数据库

我们使用docker容器来进行安装,首先pull mysql image

docker pull mysql

启动mysql容器

docker run --name mysql -e MYSQL_ROOT_PASSWORD=111111 --net mynetwork --ip 172.18.0.5 -d

登录mysql容器

5、创建metastore数据库并为其授权

create database metastore;

6、 下载jdbc connector

下载完成之后将其解压,并把其中的mysql-connector-java-5.1.41-bin.jar文件拷贝到$HIVE_HOME/lib目录

7、修改hive配置文件

cd /hive/conf

7.1复制初始化文件并重改名

cp hive-env.sh.template hive-env.sh cp hive-default.xml.template hive-site.xml cp hive-log4j2.properties.template hive-log4j2.properties cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties

7.2修改hive-env.sh

export JAVA_HOME=/usr/local/jdk1.8 ##Java路径 export HADOOP_HOME=/usr/local/hadoop ##Hadoop安装路径 export HIVE_HOME=/usr/local/hive ##Hive安装路径 export HIVE_CONF_DIR=/hive/conf ##Hive配置文件路径

7.3在hdfs 中创建下面的目录 ,并且授权

hdfs dfs -mkdir -p /user/hive/warehouse hdfs dfs -mkdir -p /user/hive/tmp hdfs dfs -mkdir -p /user/hive/log hdfs dfs -chmod -R 777 /user/hive/warehouse hdfs dfs -chmod -R 777 /user/hive/tmp hdfs dfs -chmod -R 777 /user/hive/log

7.4修改hive-site.xml

<property> <name>hive.exec.scratchdir</name> <value>/user/hive/tmp</value> </property> <property> <name>hive.metastore.warehouse.dir</name> <value>/user/hive/warehouse</value> </property> <property> <name>hive.querylog.location</name> <value>/user/hive/log</value> </property> ## 配置 MySQL 数据库连接信息 <property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://172.18.0.5:3306/metastore?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false</value> </property> <property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> </property> <property> <name>javax.jdo.option.ConnectionUserName</name> <value>root</value> </property> <property> <name>javax.jdo.option.ConnectionPassword</name> <value>111111</value> </property>

7.5 创建tmp文件

mkdir /home/hadoop/hive/tmp

并在hive-site.xml中修改:

把{system:java.io.tmpdir} 改成 /home/hadoop/hive/tmp/

把 {system:user.name} 改成 {user.name}

8、初始化hive

schematool -dbType mysql -initSchema

9、启动hive

hive

10. hive中创建表

新建create_table文件

REATE TABLE IF NOT EXISTS `default`.`d_abstract_event` ( `id` BIGINT, `network_id` BIGINT, `name` STRING) COMMENT 'Imported by sqoop on 2017/06/27 09:49:25' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_bumper` ( `front_bumper_id` BIGINT, `end_bumper_id` BIGINT, `content_item_type` STRING, `content_item_id` BIGINT, `content_item_name` STRING) COMMENT 'Imported by sqoop on 2017/06/27 09:31:05' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_tracking` ( `id` BIGINT, `network_id` BIGINT, `name` STRING, `creative_id` BIGINT, `creative_name` STRING, `ad_unit_id` BIGINT, `ad_unit_name` STRING, `placement_id` BIGINT, `placement_name` STRING, `io_id` BIGINT, `io_ad_group_id` BIGINT, `io_name` STRING, `campaign_id` BIGINT, `campaign_name` STRING, `campaign_status` STRING, `advertiser_id` BIGINT, `advertiser_name` STRING, `agency_id` BIGINT, `agency_name` STRING, `status` STRING) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_tree_node_frequency_cap` ( `id` BIGINT, `ad_tree_node_id` BIGINT, `frequency_cap` INT, `frequency_period` INT, `frequency_cap_type` STRING, `frequency_cap_scope` STRING) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_tree_node_skippable` ( `id` BIGINT, `skippable` BIGINT) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_tree_node` ( `id` BIGINT, `network_id` BIGINT, `name` STRING, `internal_id` STRING, `staging_internal_id` STRING, `budget_exempt` INT, `ad_unit_id` BIGINT, `ad_unit_name` STRING, `ad_unit_type` STRING, `ad_unit_size` STRING, `placement_id` BIGINT, `placement_name` STRING, `placement_internal_id` STRING, `io_id` BIGINT, `io_ad_group_id` BIGINT, `io_name` STRING, `io_internal_id` STRING, `campaign_id` BIGINT, `campaign_name` STRING, `campaign_internal_id` STRING, `advertiser_id` BIGINT, `advertiser_name` STRING, `advertiser_internal_id` STRING, `agency_id` BIGINT, `agency_name` STRING, `agency_internal_id` STRING, `price_model` STRING, `price_type` STRING, `ad_unit_price` DECIMAL(16,2), `status` STRING, `companion_ad_package_id` BIGINT) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_tree_node_staging` ( `ad_tree_node_id` BIGINT, `adapter_status` STRING, `primary_ad_tree_node_id` BIGINT, `production_ad_tree_node_id` BIGINT, `hide` INT, `ignore` INT) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_tree_node_trait` ( `id` BIGINT, `ad_tree_node_id` BIGINT, `trait_type` STRING, `parameter` STRING) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_unit_ad_slot_assignment` ( `id` BIGINT, `ad_unit_id` BIGINT, `ad_slot_id` BIGINT) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_ad_unit` ( `id` BIGINT, `name` STRING, `ad_unit_type` STRING, `height` INT, `width` INT, `size` STRING, `network_id` BIGINT, `created_type` STRING) COMMENT 'Imported by sqoop on 2017/06/27 09:31:03' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY"); CREATE TABLE IF NOT EXISTS `default`.`d_advertiser` ( `id` BIGINT, `network_id` BIGINT, `name` STRING, `agency_id` BIGINT, `agency_name` STRING, `advertiser_company_id` BIGINT, `agency_company_id` BIGINT, `billing_contact_company_id` BIGINT, `address_1` STRING, `address_2` STRING, `address_3` STRING, `city` STRING, `state_region_id` BIGINT, `country_id` BIGINT, `postal_code` STRING, `email` STRING, `phone` STRING, `fax` STRING, `url` STRING, `notes` STRING, `billing_term` STRING, `meta_data` STRING, `internal_id` STRING, `active` INT, `budgeted_imp` BIGINT, `num_of_campaigns` BIGINT, `adv_category_name_list` STRING, `adv_category_id_name_list` STRING, `updated_at` TIMESTAMP, `created_at` TIMESTAMP) COMMENT 'Imported by sqoop on 2017/06/27 09:31:22' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS ORC tblproperties ("orc.compress"="SNAPPY");

cat create_table | hive

11. 启动metadata service

presto需要使用hive的metadata service

nohup hive --service metadata &

至此hive的安装就完成了。

七. 安装presto

1. 下载presto-server-0.198.tar.gz

2. 解压

cd presto-service-0.198 mkdir etc cd etc

3. 编辑配置文件:

Node Properties

etc/node.properties

node.environment=production node.id=ffffffff-0000-0000-0000-ffffffffffff node.data-dir=/opt/presto/data/discovery/

JVM Config

etc/jvm.config

-server -Xmx16G -XX:+UseG1GC -XX:G1HeapRegionSize=32M -XX:+UseGCOverheadLimit -XX:+ExplicitGCInvokesConcurrent -XX:+HeapDumpOnOutOfMemoryError -XX:+ExitOnOutOfMemoryError

Config Properties

etc/config.properties

coordinator=true node-scheduler.include-coordinator=true http-server.http.port=8080 query.max-memory=5GB query.max-memory-per-node=1GB discovery-server.enabled=true discovery.uri=http://hadoop0:8080

catalog配置:

etc/catalog/hive.properties

connector.name=hive-hadoop2 hive.metastore.uri=thrift://hadoop0:9083 hive.config.resources=/usr/local/hadoop/etc/hadoop/core-site.xml,/usr/local/hadoop/etc/hadoop/hdfs-site.xml

4. 启动hive service

./bin/launch start

5. Download presto-cli-0.198-executable.jar, rename it to presto, make it executable with chmod +x, then run it:

./presto --server localhost:8080 --catalog hive --schema default

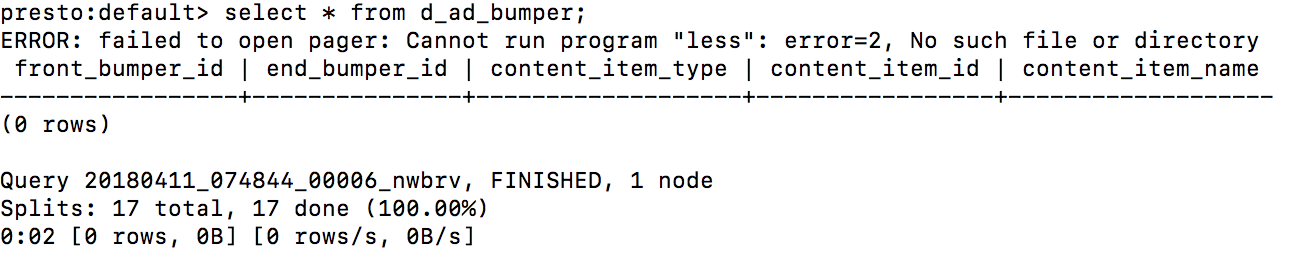

这样整个配置就完成啦。看一下效果吧,通过show tables来查看我们在hive中创建的表。

参考:

https://blog.csdn.net/xu470438000/article/details/50512442‘

http://www.jb51.net/article/118396.htm

https://prestodb.io/docs/current/installation/cli.html