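When Flink runs in Standalone mode, the JobManager (JM below) or a TaskManager (TM below) may exit abnormally. systemd, which ships with most Linux distributions, can manage starting and stopping the JM and TM and restart them promptly when they fail.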

Since version 1.11, the Flink distribution no longer ships the Hadoop dependency jars. If you need Hadoop features, there are two options:

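[Note] The rest of this post assumes that java, flink and hadoop are all installed under /opt, each behind a symbolic link (/opt/java, /opt/flink, /opt/hadoop).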

I. Solutions

1.1 Option 1 (recommended): set the HADOOP_CLASSPATH environment variable

On every node where Flink is installed, add the following to /etc/profile:

    # Hadoop Env
    export HADOOP_HOME=/opt/hadoop
    export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
    export HADOOP_CLASSPATH=`hadoop classpath`

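Then reload the profile in the current shell so that the variables take effect. source is a shell builtin, so it has to be run directly rather than through sudo:

    source /etc/profile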

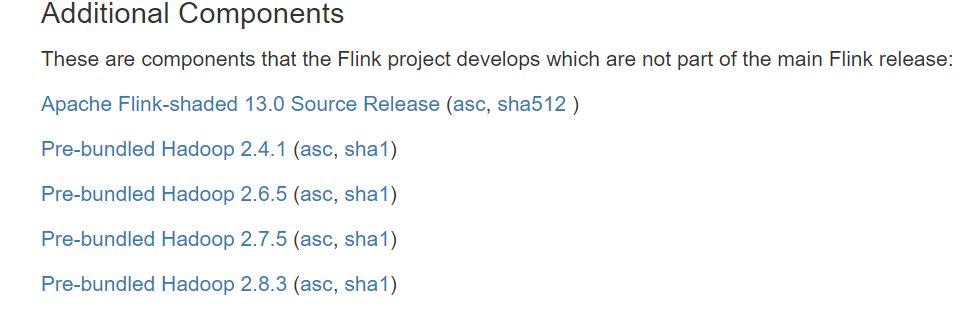
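A quick way to confirm that the variable is visible in the current shell is sketched below. The helper name check_hadoop_classpath is made up for this illustration; it only inspects the environment and does not call Hadoop.

```shell
# Hypothetical helper: report whether HADOOP_CLASSPATH is set in this shell.
check_hadoop_classpath() {
    if [ -n "${HADOOP_CLASSPATH:-}" ]; then
        # Count the colon-separated entries instead of printing the whole value.
        count=$(printf '%s\n' "$HADOOP_CLASSPATH" | tr ':' '\n' | wc -l | tr -d '[:space:]')
        echo "HADOOP_CLASSPATH is set ($count entries)"
    else
        echo "HADOOP_CLASSPATH is not set; run: source /etc/profile" >&2
        return 1
    fi
}
```

Calling check_hadoop_classpath after source /etc/profile should report a non-zero number of entries; a failure usually means the profile was not reloaded in this shell.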

1.2 Option 2: download the flink-shaded-hadoop-2-uber jar that matches your Hadoop version and copy it into the lib directory of the Flink installation

Download page: https://flink.apache.org/downloads.html#additional-components
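The copy step for Option 2 can be sketched as a small function. The function name is hypothetical, and the jar file name depends on the version you download, so it is passed in as an argument rather than hard-coded.

```shell
# Hypothetical helper: copy a downloaded uber jar into Flink's lib directory.
# $1 = path to the downloaded jar, $2 = Flink installation directory.
install_hadoop_uber_jar() {
    jar=$1
    flink_home=${2:-/opt/flink}
    [ -f "$jar" ] || { echo "jar not found: $jar" >&2; return 1; }
    [ -d "$flink_home/lib" ] || { echo "no lib directory under $flink_home" >&2; return 1; }
    cp "$jar" "$flink_home/lib/" && echo "copied $(basename "$jar") to $flink_home/lib"
}
```

Run it on every node, then restart the JM and TM so that the new jar in lib is picked up at startup.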

II. Detailed configuration

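A service started by systemd does not inherit the variables exported for login shells (for example in /etc/profile), so the environment variables have to be set explicitly in the .service file: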

1. /usr/lib/systemd/system/flink-jobmanager.service

    [Unit]
    Description=Flink Job Manager
    After=syslog.target network.target remote-fs.target nss-lookup.target network-online.target
    Requires=network-online.target

    [Service]
    User=teld
    Group=teld
    # jobmanager.sh start launches the JVM in the background and then returns
    Type=forking
    # systemd does not read /etc/profile, so every variable is set explicitly
    Environment=PATH=/opt/java/bin:/opt/flink/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin
    Environment=JAVA_HOME=/opt/java
    Environment=FLINK_HOME=/opt/flink
    Environment=HADOOP_CLASSPATH=/opt/hadoop/etc/hadoop:/opt/hadoop/share/hadoop/common/lib/*:/opt/hadoop/share/hadoop/common/*:/opt/hadoop/share/hadoop/hdfs:/opt/hadoop/share/hadoop/hdfs/lib/*:/opt/hadoop/share/hadoop/hdfs/*:/opt/hadoop/share/hadoop/yarn/lib/*:/opt/hadoop/share/hadoop/yarn/*:/opt/hadoop/share/hadoop/mapreduce/lib/*:/opt/hadoop/share/hadoop/mapreduce/*:/opt/hadoop/contrib/capacity-scheduler/*.jar
    ExecStart=/opt/flink/bin/jobmanager.sh start
    ExecStop=/opt/flink/bin/jobmanager.sh stop
    # Restart the process when it exits abnormally
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

[Note] The value of HADOOP_CLASSPATH was obtained by running the following command:

hadoop classpath

2. /usr/lib/systemd/system/flink-taskmanager.service

    [Unit]
    Description=Flink Task Manager
    After=syslog.target network.target remote-fs.target nss-lookup.target network-online.target
    Requires=network-online.target

    [Service]
    User=teld
    Group=teld
    # taskmanager.sh start launches the JVM in the background and then returns
    Type=forking
    # systemd does not read /etc/profile, so every variable is set explicitly
    Environment=PATH=/opt/java/bin:/opt/flink/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin
    Environment=JAVA_HOME=/opt/java
    Environment=FLINK_HOME=/opt/flink
    Environment=HADOOP_CLASSPATH=/opt/hadoop/etc/hadoop:/opt/hadoop/share/hadoop/common/lib/*:/opt/hadoop/share/hadoop/common/*:/opt/hadoop/share/hadoop/hdfs:/opt/hadoop/share/hadoop/hdfs/lib/*:/opt/hadoop/share/hadoop/hdfs/*:/opt/hadoop/share/hadoop/yarn/lib/*:/opt/hadoop/share/hadoop/yarn/*:/opt/hadoop/share/hadoop/mapreduce/lib/*:/opt/hadoop/share/hadoop/mapreduce/*:/opt/hadoop/contrib/capacity-scheduler/*.jar
    ExecStart=/opt/flink/bin/taskmanager.sh start
    ExecStop=/opt/flink/bin/taskmanager.sh stop
    # Restart the process when it exits abnormally
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

[Note] As in the JobManager unit, the value of HADOOP_CLASSPATH is the output of hadoop classpath.

After loading the two unit files above with sudo systemctl daemon-reload, the JM and TM can be managed through systemd, and systemd will restart them promptly if they exit abnormally:

sudo systemctl start flink-jobmanager.service

sudo systemctl stop flink-jobmanager.service

sudo systemctl status flink-jobmanager.service

sudo systemctl start flink-taskmanager.service

sudo systemctl stop flink-taskmanager.service

sudo systemctl status flink-taskmanager.service
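The six commands above differ only in the action and the unit name, so they can be wrapped in a small loop. This is a sketch: the function name flink_services is made up, and enable is a standard systemctl action that is not in the list above; it makes the units start at boot through their [Install] sections.

```shell
# Hypothetical helper: apply one systemctl action to both Flink units.
# $1 = systemctl action, e.g. start, stop, status, restart, enable.
flink_services() {
    action=$1
    rc=0
    for unit in flink-jobmanager.service flink-taskmanager.service; do
        # Keep going after a failure so both units are always handled,
        # but remember the last non-zero exit code.
        sudo systemctl "$action" "$unit" || rc=$?
    done
    return "$rc"
}

# Examples:
#   flink_services start
#   flink_services status
#   flink_services enable
```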

III. Pitfalls

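1. If checkpointing is enabled in Flink with checkpoint storage on HDFS, but the HADOOP_CLASSPATH environment variable is not set, submitting a job fails with the following exception: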

    Caused by: org.apache.flink.util.FlinkRuntimeException: Failed to create checkpoint storage at checkpoint coordinator side.
        at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:304)
        at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:223)
        at org.apache.flink.runtime.executiongraph.ExecutionGraph.enableCheckpointing(ExecutionGraph.java:483)
        at org.apache.flink.runtime.executiongraph.ExecutionGraphBuilder.buildGraph(ExecutionGraphBuilder.java:338)
        at org.apache.flink.runtime.scheduler.SchedulerBase.createExecutionGraph(SchedulerBase.java:269)
        at org.apache.flink.runtime.scheduler.SchedulerBase.createAndRestoreExecutionGraph(SchedulerBase.java:242)
        at org.apache.flink.runtime.scheduler.SchedulerBase.<init>(SchedulerBase.java:229)
        at org.apache.flink.runtime.scheduler.DefaultScheduler.<init>(DefaultScheduler.java:119)
        at org.apache.flink.runtime.scheduler.DefaultSchedulerFactory.createInstance(DefaultSchedulerFactory.java:103)
        at org.apache.flink.runtime.jobmaster.JobMaster.createScheduler(JobMaster.java:284)
        at org.apache.flink.runtime.jobmaster.JobMaster.<init>(JobMaster.java:272)
        at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:98)
        at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:40)
        at org.apache.flink.runtime.jobmaster.JobManagerRunnerImpl.<init>(JobManagerRunnerImpl.java:140)
        at org.apache.flink.runtime.dispatcher.DefaultJobManagerRunnerFactory.createJobManagerRunner(DefaultJobManagerRunnerFactory.java:84)
        at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$createJobManagerRunner$6(Dispatcher.java:388)
        ... 7 more
    Caused by: org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Could not find a file system implementation for scheme 'hdfs'. The scheme is not directly supported by Flink and no Hadoop file system to support this scheme could be loaded. For a full list of supp
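To check whether a failed submission has this cause, one can search a log file for the message from the last Caused by line. The sketch below uses a made-up helper name, and the log file location depends on the installation, so the path is passed in explicitly.

```shell
# Hypothetical helper: succeed if the log contains the missing-HDFS-scheme error.
# $1 = path to a JobManager log file.
has_hdfs_scheme_error() {
    # -F matches the text literally, -q suppresses output and only sets the exit code.
    grep -qF "Could not find a file system implementation for scheme 'hdfs'" "$1"
}

# Example (the log path is an assumption):
#   has_hdfs_scheme_error /opt/flink/log/some-jobmanager.log && echo "Hadoop classpath is missing"
```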

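2. When assigning HADOOP_CLASSPATH in flink-jobmanager.service and flink-taskmanager.service, I tried backticks, expecting the output of the enclosed command to be assigned to the variable. systemd does not run unit files through a shell, however, so the content of the backticks is never executed: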

Environment=HADOOP_CLASSPATH=`/opt/hadoop/bin/hadoop classpath`

In the end the only option was to run hadoop classpath by hand and paste its output into the .service file:

Environment=HADOOP_CLASSPATH=/opt/hadoop/etc/hadoop:/opt/hadoop/share/hadoop/common/lib/*:/opt/hadoop/share/hadoop/common/*:/opt/hadoop/share/hadoop/hdfs:/opt/hadoop/share/hadoop/hdfs/lib/*:/opt/hadoop/share/hadoop/hdfs/*:/opt/hadoop/share/hadoop/yarn/lib/*:/opt/hadoop/share/hadoop/yarn/*:/opt/hadoop/share/hadoop/mapreduce/lib/*:/opt/hadoop/share/hadoop/mapreduce/*:/opt/hadoop/contrib/capacity-scheduler/*.jar
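One possible alternative to pasting, which was not part of the setup described here: systemd's EnvironmentFile= directive reads NAME=value lines from a file, so the classpath can be generated by a script instead of copied by hand. The sketch below assumes a hypothetical file path /etc/default/flink-hadoop and a made-up helper name.

```shell
# Hypothetical helper: write a systemd EnvironmentFile holding the Hadoop classpath.
# $1 = classpath string (e.g. the output of `hadoop classpath`), $2 = target file.
write_hadoop_env_file() {
    printf 'HADOOP_CLASSPATH=%s\n' "$1" > "$2"
}

# Example (run as root on each node; the target path is an assumption):
#   write_hadoop_env_file "$(/opt/hadoop/bin/hadoop classpath)" /etc/default/flink-hadoop
# Then, in both .service files, replace the Environment=HADOOP_CLASSPATH=... line with:
#   EnvironmentFile=/etc/default/flink-hadoop
# and reload the units:
#   sudo systemctl daemon-reload
```

The command substitution runs in the shell that generates the file, not in systemd, which is why this works where the backticks inside the unit file did not.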