I. Introduction

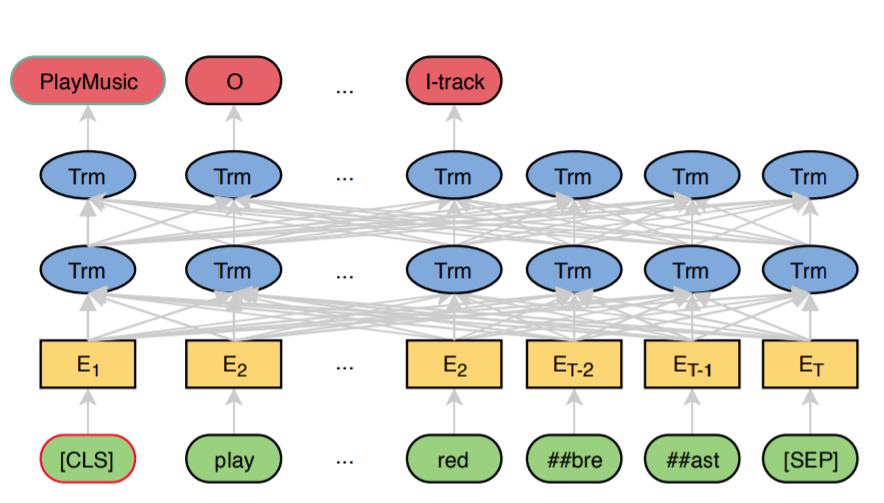

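This model follows *BERT for Joint Intent Classification and Slot Filling* and uses BERT for both intent detection and slot filling. Its architecture is shown in the figure.

The figure shows that:

1. Intent detection uses the output at the [CLS] position.

2. Slot filling is treated as sequence labeling: the output at each token position is classified directly into a slot label. The [MASK] tokens of BERT's masked-language-model (MLM) pre-training are not used here.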

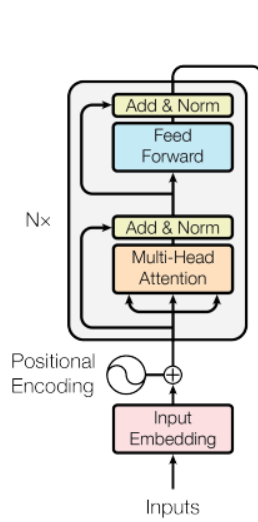

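3. Trm denotes a Transformer encoder layer. BERT is a stack of such layers; each one consists of a multi-head self-attention sublayer followed by add & norm, then a feed-forward network followed by add & norm, where "add" is the residual connection (see the Transformer-encoder figure).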
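Points 1 to 3 can be sketched with PyTorch's built-in `nn.TransformerEncoderLayer` as a stand-in for the hand-written encoder in Section II. With `norm_first=False` (the default) it applies the post-norm order described above: add & norm after self-attention, then add & norm after the feed-forward network. The sizes below are arbitrary assumptions and the two heads are plain linear layers, so this is an illustration of the data flow rather than the author's model.

```python
import torch
import torch.nn as nn

# Assumed toy sizes, for illustration only
batch_size, seq_len, hid_dim, n_heads = 2, 7, 32, 4
intent_size, slot_size = 5, 9

# One "Trm" block in post-norm form: x = LN(x + MHA(x)); x = LN(x + FFN(x))
layer = nn.TransformerEncoderLayer(
    d_model=hid_dim, nhead=n_heads, dim_feedforward=4 * hid_dim,
    dropout=0.1, activation="gelu", batch_first=True,
)
encoder = nn.TransformerEncoder(layer, num_layers=2)  # BERT = stack of Trm blocks

intent_head = nn.Linear(hid_dim, intent_size)
slot_head = nn.Linear(hid_dim, slot_size)

x = torch.randn(batch_size, seq_len, hid_dim)  # stands in for token + position embeddings
h = encoder(x)                                  # [batch_size, seq_len, hid_dim]

# 1. intent: classify the [CLS] vector (position 0)
intent_logits = intent_head(h[:, 0])            # [batch_size, intent_size]
# 2. slots: label every remaining position (sequence labeling, no [MASK] involved)
slot_logits = slot_head(h[:, 1:])               # [batch_size, seq_len - 1, slot_size]
print(intent_logits.shape, slot_logits.shape)
```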

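Steps:

1. The input is a single sentence: [CLS] is prepended (its output is used for intent detection) and [SEP] is appended at the end of the sentence.

2. Position information is added (the code uses learned position embeddings).

3. The sequence then goes through embedding -> Transformer encoder -> output.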
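The three steps can be sketched on a toy batch. The vocabulary, the special-token ids, the padding id and `hid_dim` are all hypothetical; the real program builds its own vocabulary. The padding mask has shape `[batch_size, 1, 1, src_len]` so that it broadcasts over attention heads and query positions.

```python
import torch

# Hypothetical toy vocabulary; the real program builds its own
vocab = {"[PAD]": 0, "[CLS]": 1, "[SEP]": 2, "play": 3, "some": 4, "jazz": 5, "music": 6}
PAD_IDX = vocab["[PAD]"]

def encode(tokens, max_len):
    """Step 1: wrap one sentence as [CLS] tokens [SEP], then right-pad to max_len."""
    ids = [vocab["[CLS]"]] + [vocab[t] for t in tokens] + [vocab["[SEP]"]]
    return ids + [PAD_IDX] * (max_len - len(ids))

batch = [["play", "some", "jazz"], ["play", "music"]]
max_len = max(len(s) for s in batch) + 2                  # +2 for [CLS] and [SEP]
src = torch.tensor([encode(s, max_len) for s in batch])   # [batch_size, src_len]

# Step 2: position ids 0..src_len-1, one row per sentence
pos = torch.arange(src.shape[1]).unsqueeze(0).expand(src.shape[0], -1)

# Padding mask, broadcastable over heads and queries: [batch_size, 1, 1, src_len]
src_mask = (src != PAD_IDX).unsqueeze(1).unsqueeze(2)

# Step 3: token embedding + learned position embedding (hid_dim is an assumed size)
hid_dim = 8
tok_embedding = torch.nn.Embedding(len(vocab), hid_dim)
pos_embedding = torch.nn.Embedding(max_len, hid_dim)
emb = tok_embedding(src) + pos_embedding(pos)             # [batch_size, src_len, hid_dim]
```

The encoder in Section II additionally multiplies the token embeddings by sqrt(hid_dim) before adding the position embeddings.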

II. Code (a simple BERT implementation; the Transformer-encoder part is adapted from https://github.com/bentrevett)

The complete program is available at: https://github.com/jiangnanboy/intent_detection_and_slot_filling/tree/master/model6

```python
import math

import torch
import torch.nn as nn


class Encoder(nn.Module):
    def __init__(self, input_dim, hid_dim, n_layers, n_heads, pf_dim, dropout, max_length=100):
        super(Encoder, self).__init__()
        self.tok_embedding = nn.Embedding(input_dim, hid_dim)
        self.pos_embedding = nn.Embedding(max_length, hid_dim)  # src_len must not exceed max_length
        # stack of encoder layers
        self.layers = nn.ModuleList([EncoderLayer(hid_dim, n_heads, pf_dim, dropout)
                                     for _ in range(n_layers)])
        self.dropout = nn.Dropout(dropout)
        self.scale = math.sqrt(hid_dim)

    def forward(self, src, src_mask):
        # src: [batch_size, src_len]
        # src_mask: [batch_size, 1, 1, src_len]
        batch_size, src_len = src.shape
        # position ids, created on the same device as the input
        pos = torch.arange(0, src_len, device=src.device).unsqueeze(0).repeat(batch_size, 1)
        # token embedding + position embedding
        src = self.dropout((self.tok_embedding(src) * self.scale) + self.pos_embedding(pos))
        # [batch_size, src_len, hid_dim]
        for layer in self.layers:
            src = layer(src, src_mask)
        # [batch_size, src_len, hid_dim]
        return src


class EncoderLayer(nn.Module):
    def __init__(self, hid_dim, n_heads, pf_dim, dropout):
        super(EncoderLayer, self).__init__()
        self.self_attn_layer_norm = nn.LayerNorm(hid_dim)
        self.ff_layer_norm = nn.LayerNorm(hid_dim)
        self.self_attention = MultiHeadAttentionLayer(hid_dim, n_heads, dropout)
        self.positionwise_feedforward = PositionwiseFeedforwardLayer(hid_dim, pf_dim, dropout)
        self.dropout = nn.Dropout(dropout)

    def forward(self, src, src_mask):
        # src: [batch_size, src_len, hid_dim]
        # src_mask: [batch_size, 1, 1, src_len]
        # 1. multi-head self-attention, then add & norm
        _src = self.self_attention(src, src, src, src_mask)
        src = self.self_attn_layer_norm(src + self.dropout(_src))
        # [batch_size, src_len, hid_dim]
        # 2. position-wise feed-forward network, then add & norm
        _src = self.positionwise_feedforward(src)
        src = self.ff_layer_norm(src + self.dropout(_src))
        # [batch_size, src_len, hid_dim]
        return src


class MultiHeadAttentionLayer(nn.Module):
    def __init__(self, hid_dim, n_heads, dropout):
        super(MultiHeadAttentionLayer, self).__init__()
        assert hid_dim % n_heads == 0
        self.hid_dim = hid_dim
        self.n_heads = n_heads
        self.head_dim = hid_dim // n_heads
        self.fc_q = nn.Linear(hid_dim, hid_dim)
        self.fc_k = nn.Linear(hid_dim, hid_dim)
        self.fc_v = nn.Linear(hid_dim, hid_dim)
        self.fc_o = nn.Linear(hid_dim, hid_dim)
        self.dropout = nn.Dropout(dropout)
        self.scale = math.sqrt(self.head_dim)

    def forward(self, query, key, value, mask=None):
        batch_size = query.shape[0]
        # query: [batch_size, query_len, hid_dim]
        # key:   [batch_size, key_len, hid_dim]
        # value: [batch_size, value_len, hid_dim]
        Q = self.fc_q(query)
        K = self.fc_k(key)
        V = self.fc_v(value)
        # split into heads: [batch_size, n_heads, seq_len, head_dim]
        Q = Q.view(batch_size, -1, self.n_heads, self.head_dim).permute(0, 2, 1, 3)
        K = K.view(batch_size, -1, self.n_heads, self.head_dim).permute(0, 2, 1, 3)
        V = V.view(batch_size, -1, self.n_heads, self.head_dim).permute(0, 2, 1, 3)
        # scaled dot-product scores: [batch_size, n_heads, query_len, key_len]
        energy = torch.matmul(Q, K.permute(0, 1, 3, 2)) / self.scale
        if mask is not None:
            # padded key positions get a very large negative score before softmax
            energy = energy.masked_fill(mask == 0, -1e10)
        attention = torch.softmax(energy, dim=-1)
        # [batch_size, n_heads, query_len, key_len]
        x = torch.matmul(self.dropout(attention), V)
        # [batch_size, n_heads, query_len, head_dim]
        x = x.permute(0, 2, 1, 3).contiguous()
        # [batch_size, query_len, n_heads, head_dim]
        x = x.view(batch_size, -1, self.hid_dim)
        # [batch_size, query_len, hid_dim]
        x = self.fc_o(x)
        # [batch_size, query_len, hid_dim]
        return x


class PositionwiseFeedforwardLayer(nn.Module):
    def __init__(self, hid_dim, pf_dim, dropout):
        super(PositionwiseFeedforwardLayer, self).__init__()
        self.fc_1 = nn.Linear(hid_dim, pf_dim)
        self.fc_2 = nn.Linear(pf_dim, hid_dim)
        self.dropout = nn.Dropout(dropout)
        self.gelu = nn.GELU()

    def forward(self, x):
        # x: [batch_size, seq_len, hid_dim]
        x = self.dropout(self.gelu(self.fc_1(x)))
        # [batch_size, seq_len, pf_dim]
        x = self.fc_2(x)
        # [batch_size, seq_len, hid_dim]
        return x


class BERT(nn.Module):
    def __init__(self, input_dim, hid_dim, n_layers, n_heads, pf_dim, dropout,
                 slot_size, intent_size, src_pad_idx):
        super(BERT, self).__init__()
        self.src_pad_idx = src_pad_idx
        self.encoder = Encoder(input_dim, hid_dim, n_layers, n_heads, pf_dim, dropout)
        self.gelu = nn.GELU()
        # intent head on the [CLS] vector
        self.fc = nn.Sequential(nn.Linear(hid_dim, hid_dim), nn.Dropout(dropout), nn.Tanh())
        self.intent_out = nn.Linear(hid_dim, intent_size)
        # slot head on the remaining positions; slot_out keeps its own [slot_size, hid_dim]
        # weight, because tying it to tok_embedding ([input_dim, hid_dim]) would produce
        # vocabulary-size logits instead of slot_size logits
        self.linear = nn.Linear(hid_dim, hid_dim)
        self.slot_out = nn.Linear(hid_dim, slot_size, bias=False)

    def make_src_mask(self, src):
        # src: [batch_size, src_len]
        src_mask = (src != self.src_pad_idx).unsqueeze(1).unsqueeze(2)
        # [batch_size, 1, 1, src_len]
        return src_mask

    def forward(self, src):
        src_mask = self.make_src_mask(src)
        encoder_out = self.encoder(src, src_mask)
        # [batch_size, src_len, hid_dim]
        # intent classification from the [CLS] token
        cls_hidden = self.fc(encoder_out[:, 0])
        # [batch_size, hid_dim]
        intent_output = self.intent_out(cls_hidden)
        # [batch_size, intent_size]
        # slot prediction on all positions except [CLS]
        other_hidden = self.gelu(self.linear(encoder_out[:, 1:]))
        # [batch_size, src_len-1, hid_dim]
        slot_output = self.slot_out(other_hidden)
        # [batch_size, src_len-1, slot_size]
        return intent_output, slot_output
```
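The model returns two sets of logits, but the training objective is not shown in this section. A common choice for joint models, assumed here, is to add the intent cross-entropy to a token-level slot cross-entropy that skips padded positions. `joint_loss` and `slot_pad_idx` are hypothetical names, the slot labels are assumed to be aligned with the positions after [CLS] (length `src_len - 1`), and the complete program linked above may compute or weight the losses differently.

```python
import torch
import torch.nn.functional as F

def joint_loss(intent_output, slot_output, intent_labels, slot_labels, slot_pad_idx):
    """Intent cross-entropy plus token-level slot cross-entropy.

    intent_output: [batch_size, intent_size]
    slot_output:   [batch_size, src_len-1, slot_size]  (positions after [CLS])
    intent_labels: [batch_size]
    slot_labels:   [batch_size, src_len-1]; padded positions hold slot_pad_idx
    """
    intent_loss = F.cross_entropy(intent_output, intent_labels)
    slot_loss = F.cross_entropy(
        slot_output.reshape(-1, slot_output.shape[-1]),  # [batch_size*(src_len-1), slot_size]
        slot_labels.reshape(-1),                         # [batch_size*(src_len-1)]
        ignore_index=slot_pad_idx,                       # padded positions do not contribute
    )
    return intent_loss + slot_loss

# Usage with random logits and hypothetical labels
torch.manual_seed(0)
batch_size, src_len, intent_size, slot_size, SLOT_PAD = 2, 5, 3, 4, 0
intent_output = torch.randn(batch_size, intent_size)
slot_output = torch.randn(batch_size, src_len - 1, slot_size)
intent_labels = torch.tensor([0, 2])
slot_labels = torch.tensor([[1, 2, 3, 1], [1, 3, 1, SLOT_PAD]])
loss = joint_loss(intent_output, slot_output, intent_labels, slot_labels, SLOT_PAD)
```

Summing the two losses lets a single backward pass update the shared encoder for both tasks.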