1. Choose a topic or website that interests you:

Guangzhou Metro official website: http://www.gzmtr.com/ygwm/xwzx/gsxw/

2. Write a crawler in Python to scrape data on that topic from the web.

    from bs4 import BeautifulSoup
    import requests
    import re

    indexUrl = "http://www.gzmtr.com/ygwm/xwzx/gsxw/"


    def Url(url):
        # Fetch a page and parse it into a BeautifulSoup tree
        res = requests.get(url)
        res.encoding = "utf-8"
        soup = BeautifulSoup(res.text, "html.parser")
        return soup


    aList = []   # relative URLs of every news article found
    newArr = []  # one dict per scraped article


    def onePage(url):
        # Collect the href of every article link on one listing page
        soup = Url(url)
        page = soup.select(".pages")[0]
        ulList = soup.select(".ag_h_w")[0].select("li")
        for li in ulList:
            href = li.select("a")[0].attrs["href"]
            href = href.lstrip("./")  # drops the leading "./" of a relative link
            aList.append(href)
        return page


    def pageNumber(page):
        # Read the total page count from the pager's inline script
        for tag in page.find_all(re.compile("script")):
            match = re.search("var countPage = ([0-9]+)", tag.text)
            if match:
                return int(match.group(1))  # total number of pages
        return 1  # no counter found: treat the index as the only page


    def allPage(k):
        # The first listing page was already collected by the initial
        # onePage(indexUrl) call, so only index_1.html onward is fetched here.
        for i in range(1, k):
            onePage(indexUrl + "index_{}.html".format(i))
        onePageText()


    def onePageText():
        # Visit every collected article and extract title, source, date and body
        for link in aList:
            dict1 = {}
            newsUrl = indexUrl + link
            soup = Url(newsUrl)
            for s in soup("style"):  # remove the style tags
                s.extract()
            newsText = soup.select(".right_slide_bar_c_c")[0]
            if newsText.select(".TRS_Editor"):
                if newsText.select(".TRS_PreAppend"):
                    if newsText.select(".TRS_PreAppend")[0].name == "p":
                        newsaa = soup.select(".MsoNormal")
                        newsText = ""
                        for p in newsaa:
                            newsText = newsText + p.text
                        newsText = newsText.lstrip()
                    else:
                        newsText = newsText.select(".TRS_PreAppend")[0].text.strip().replace(" ", "")
                else:
                    newsText = newsText.select(".TRS_Editor")[0].text
            elif newsText.select(".TRS_PreAppend"):
                newsText = newsText.select(".TRS_PreAppend")[0].text.strip()
            else:
                newsaa = soup.select(".MsoNormal")
                newsText = ""
                for p in newsaa:
                    newsText = newsText + p.text
            newstitle = soup.select(".right_slide_bar_c_c")[0].select("h2")[0].text
            newsTextSource = soup.select(".text_title")[0].select(".source")[0].text.lstrip()
            newsTextTime = soup.select(".text_title")[0].select(".time")[0].text
            dict1["新闻标题"] = newstitle       # news title
            dict1["新闻来源"] = newsTextSource  # news source
            dict1["新闻日期"] = newsTextTime    # news date
            dict1["新闻内容"] = newsText        # news body
            newArr.append(dict1)


    page = onePage(indexUrl)
    k = pageNumber(page)
    allPage(k)

    # Concatenate all article bodies and append them to a text file
    content = "".join(item["新闻内容"] for item in newArr)
    with open("SchoolNews.txt", "a+", encoding="utf-8") as f:
        f.write(content)

    # Per-character frequency count (disabled):
    # dict = {}
    # for i in content:
    #     dict[i] = content.count(i)
    # dictList = list(dict.items())
    # for i in dictList:
    #     print(i)

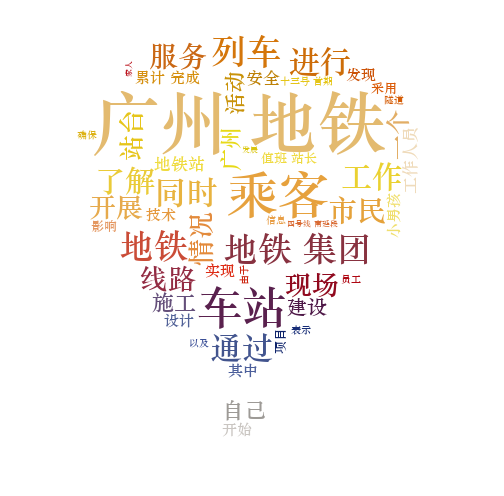

3. Run text analysis on the scraped data and generate a word cloud:
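
The code for this step is not shown in the post. The following is a minimal sketch of one way to do it, not the author's method: it counts adjacent pairs of Chinese characters as a crude stand-in for a real word segmenter (such as jieba), then passes the counts to WordCloud. The function names, the default output file name and the `font_path` argument are illustrative assumptions; a font containing Chinese glyphs has to be supplied, otherwise the words render as empty boxes.

```python
import re
from collections import Counter


def char_bigram_frequencies(text, top_n=50):
    """Count adjacent pairs of CJK characters (a rough substitute for word segmentation)."""
    freqs = Counter()
    # Work on runs of CJK ideographs only, so a pair never spans punctuation or spaces.
    for run in re.findall(r"[\u4e00-\u9fff]+", text):
        for i in range(len(run) - 1):
            freqs[run[i:i + 2]] += 1
    return dict(freqs.most_common(top_n))


def save_word_cloud(freqs, font_path, out_path="wordcloud.png"):
    """Render the frequencies as an image. Requires the third-party wordcloud package."""
    from wordcloud import WordCloud  # imported lazily so the counting step works without it

    cloud = WordCloud(font_path=font_path, width=800, height=400,
                      background_color="white")
    cloud.generate_from_frequencies(freqs)
    cloud.to_file(out_path)
```

A typical call would read the saved text file, pass its contents to `char_bigram_frequencies`, and give the result to `save_word_cloud` together with the path of a local Chinese font.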

4. Explanation of the code behind the analysis:

4.1 Main function: reading the content of each article

    def onePageText():
        # Visit every collected article and extract title, source, date and body
        for link in aList:
            dict1 = {}
            newsUrl = indexUrl + link
            soup = Url(newsUrl)
            for s in soup("style"):  # remove the style tags
                s.extract()
            newsText = soup.select(".right_slide_bar_c_c")[0]
            if newsText.select(".TRS_Editor"):
                if newsText.select(".TRS_PreAppend"):
                    if newsText.select(".TRS_PreAppend")[0].name == "p":
                        newsaa = soup.select(".MsoNormal")
                        newsText = ""
                        for p in newsaa:
                            newsText = newsText + p.text
                        newsText = newsText.lstrip()
                    else:
                        newsText = newsText.select(".TRS_PreAppend")[0].text.strip().replace(" ", "")
                else:
                    newsText = newsText.select(".TRS_Editor")[0].text
            elif newsText.select(".TRS_PreAppend"):
                newsText = newsText.select(".TRS_PreAppend")[0].text.strip()
            else:
                newsaa = soup.select(".MsoNormal")
                newsText = ""
                for p in newsaa:
                    newsText = newsText + p.text
            newstitle = soup.select(".right_slide_bar_c_c")[0].select("h2")[0].text
            newsTextSource = soup.select(".text_title")[0].select(".source")[0].text.lstrip()
            newsTextTime = soup.select(".text_title")[0].select(".time")[0].text
            dict1["新闻标题"] = newstitle       # news title
            dict1["新闻来源"] = newsTextSource  # news source
            dict1["新闻日期"] = newsTextTime    # news date
            dict1["新闻内容"] = newsText        # news body
            newArr.append(dict1)

4.2 Collecting the a-tag links on one HTML page

    def onePage(url):
        # Collect the href of every article link on one listing page
        soup = Url(url)
        page = soup.select(".pages")[0]
        ulList = soup.select(".ag_h_w")[0].select("li")
        for li in ulList:
            href = li.select("a")[0].attrs["href"]
            href = href.lstrip("./")  # drops the leading "./" of a relative link
            aList.append(href)
        return page
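
One caveat with `lstrip("./")`: it removes any run of leading `.` and `/` characters, not the literal prefix `./`, so a link such as `../other/page.html` would silently lose its parent-directory part. A possible alternative, sketched below, resolves each href against the listing URL with the standard library's `urljoin`. The helper name `resolve_links` and the sample href values are hypothetical and only illustrate the idea.

```python
from urllib.parse import urljoin

INDEX_URL = "http://www.gzmtr.com/ygwm/xwzx/gsxw/"


def resolve_links(base_url, hrefs):
    """Turn relative href values into absolute URLs, dropping duplicates but keeping order."""
    seen = set()
    resolved = []
    for href in hrefs:
        absolute = urljoin(base_url, href)
        if absolute not in seen:
            seen.add(absolute)
            resolved.append(absolute)
    return resolved


# Hypothetical href values, for illustration only.
sample_hrefs = [
    "./201905/t20190501_1.html",
    "../tzgg/t20190502_2.html",
    "./201905/t20190501_1.html",  # duplicate of the first entry
]
for url in resolve_links(INDEX_URL, sample_hrefs):
    print(url)
```

With absolute URLs stored in the list, the article loop can request each entry directly instead of prepending the index URL.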

4.3 Total number of listing pages on the site

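    def pageNumber(page):
        # Read the total page count from the pager's inline script
        for tag in page.find_all(re.compile("script")):
            match = re.search("var countPage = ([0-9]+)", tag.text)
            if match:
                return int(match.group(1))  # total number of pages
        return 1  # no counter found: treat the index as the only page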
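
The parsing step can be tried without any network access. The sketch below isolates it: a capture group pulls the digits out of the `var countPage = ...` assignment, and a second helper builds the listing URLs from the count using the `index_{i}.html` pattern seen in the crawler. The helper names and the sample script text are assumptions made for illustration; the real inline script may contain other statements.

```python
import re

# Tolerates variable spacing around "=" and requires at least one digit.
COUNT_PAGE_RE = re.compile(r"var\s+countPage\s*=\s*(\d+)")


def parse_count_page(script_text):
    """Return the page count found in the script text, or None if it is absent."""
    match = COUNT_PAGE_RE.search(script_text)
    return int(match.group(1)) if match else None


def listing_urls(index_url, total_pages):
    """First page is the index itself; later pages are index_1.html, index_2.html, ..."""
    urls = [index_url]
    urls += ["{}index_{}.html".format(index_url, i) for i in range(1, total_pages)]
    return urls


# Hypothetical script content, for illustration only.
sample_script = "var currentPage = 0; var countPage = 12;"
total = parse_count_page(sample_script)
print(total, len(listing_urls("http://www.gzmtr.com/ygwm/xwzx/gsxw/", total)))
```

Requiring `\d+` instead of `[0-9]*` avoids matching an empty string, which would make `int()` raise a `ValueError`.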

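5. Problems encountered and their solutions; data analysis approach and conclusions

(1) The site's news templates use inconsistent class names

Solution: list every class that occurs and check for each one with if statements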

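(2) The markup contains many script and style tags

Solution: match the script tags with re.compile("script") in find_all, and remove the style tags with extract() before reading the text

(3) Using word clouds and installing WordCloud

Solution: searched Baidu for instructions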
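
For problem (1), the nested if statements could also be expressed as an ordered list of selectors that are tried one after another. The sketch below is a simplified variant and does not reproduce the exact branching of onePageText: it returns the text of the first selector that matches anything. The function name `extract_body` and the priority order are assumptions. It works with any object that offers a `select()` method returning nodes with `get_text()`, which is what a BeautifulSoup tree provides.

```python
# Class names taken from the crawler above; the order is an assumed priority.
DEFAULT_SELECTORS = (".TRS_Editor", ".TRS_PreAppend", ".MsoNormal")


def extract_body(soup, selectors=DEFAULT_SELECTORS):
    """Return the joined text of the first selector that matches, or "" if none does."""
    for css in selectors:
        nodes = soup.select(css)
        if nodes:
            return "".join(node.get_text() for node in nodes).strip()
    return ""
```

Supporting a newly discovered template then means adding one selector to the tuple instead of another if branch.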