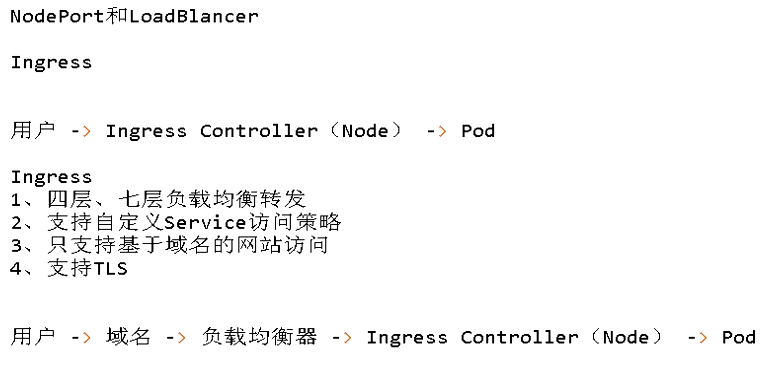

I. Introduction to Ingress

Ways to expose services in K8s: LoadBalancer Service, ExternalName, NodePort Service, and Ingress.

This article uses nginx-ingress-controller as the example. Official reference: https://kubernetes.io/docs/concepts/services-networking/ingress/

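How it works: the Ingress Controller talks to the Kubernetes API and dynamically detects changes to the Ingress rules in the cluster. It reads those rules, generates a piece of Nginx configuration from its own template, writes it into the nginx-ingress-controller Pod, and finally reloads Nginx.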
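The watch, render, write, reload loop described above can be sketched in Python. This is a simplified illustration, not the controller's real code: the `NginxReloader` class and its `reload_fn` callback are hypothetical, and the sketch only shows the idea of reloading nginx when the rendered configuration has actually changed. (The generated nginx.conf shown later in this article does begin with a `# Configuration checksum` line.)

```python
import hashlib
from typing import Callable, Optional


class NginxReloader:
    """Hypothetical sketch: reload nginx only when the rendered config changes."""

    def __init__(self, reload_fn: Callable[[], None]) -> None:
        self._reload_fn = reload_fn          # e.g. something that runs "nginx -s reload"
        self._last_checksum: Optional[str] = None

    def sync(self, rendered_config: str) -> bool:
        """Called after every Ingress change event; returns True if a reload happened."""
        checksum = hashlib.sha256(rendered_config.encode("utf-8")).hexdigest()
        if checksum == self._last_checksum:
            return False                     # same config as last time: skip the reload
        # A real controller would write /etc/nginx/nginx.conf here before reloading.
        self._reload_fn()
        self._last_checksum = checksum
        return True
```

Hashing the whole rendered file keeps the comparison cheap and avoids reloading nginx (which restarts its worker processes) for events that do not change the output.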

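A Layer-4 load balancer does not terminate the session (how traffic flows depends on the working mode: NAT, DR, FULLNAT, TUN); the client establishes the session with the backend itself.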

A Layer-7 load balancer: the client only needs to connect to the load balancer, and the load balancer manages the sessions.

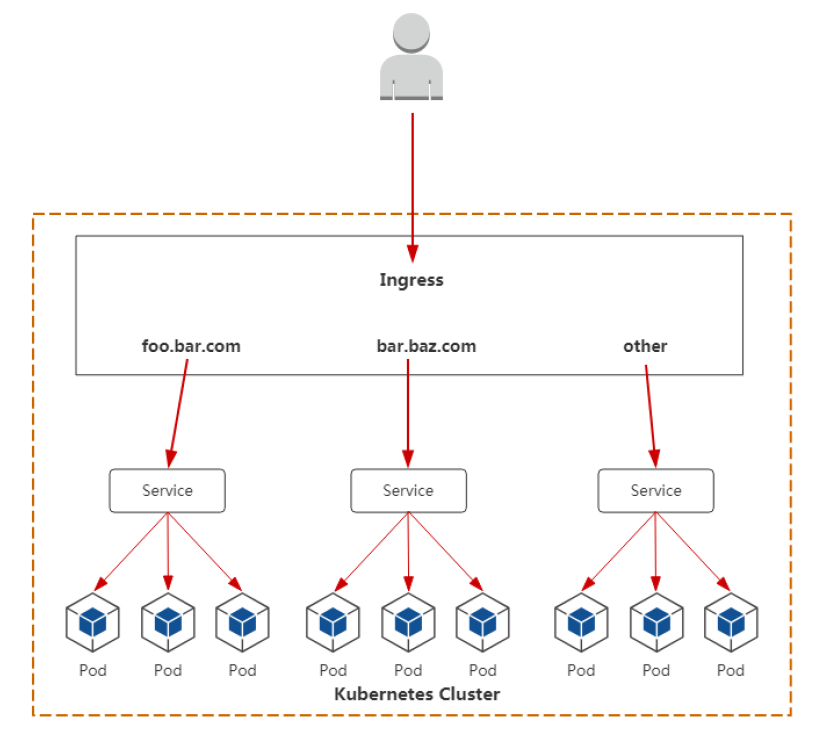

Ingress resources come in the following types:

1. Single-Service Ingress: only spec.backend is set, with no other rules.

2. URL path-based routing (fan-out): spec.rules.http.paths distinguishes requests for different URLs of the same site and forwards them to different backend Services.

3. Name-based virtual hosting: spec.rules.host uses different hosts to distinguish different sites.

4. TLS Ingress: the TLS private key and certificate are taken from a Secret (stored under the keys tls.crt and tls.key).
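How types 1 to 3 decide where a request goes can be sketched with a small routing function. This is a hypothetical model, not ingress-nginx's actual matching code: `IngressSpec` and `pick_backend` are illustrative names, an exact host rule is tried before a catch-all rule (`""`), the longest matching path prefix wins, and prefixes are matched per path element (the way `pathType: Prefix` behaves in `networking.k8s.io/v1`), which is an assumption for this sketch. The service names come from the example Ingress used later in this article.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class IngressSpec:
    """Simplified stand-in for an Ingress object (not the real API types)."""
    default_backend: Optional[str] = None                          # type 1: spec.backend
    rules: Dict[str, List[Tuple[str, str]]] = field(default_factory=dict)
    # host -> [(path prefix, service name)]; "" means "any host"


def _prefix_match(prefix: str, path: str) -> bool:
    # "/foo" matches "/foo" and "/foo/bar", but not "/foobar"
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def pick_backend(spec: IngressSpec, host: str, path: str) -> Optional[str]:
    """Return the Service a request for host+path would be sent to."""
    for rule_host in (host, ""):                                   # type 3: match by host
        paths = spec.rules.get(rule_host)
        if not paths:
            continue
        hits = [(p, svc) for p, svc in paths if _prefix_match(p, path)]
        if hits:                                                   # type 2: longest path wins
            return max(hits, key=lambda hit: len(hit[0]))[1]
    return spec.default_backend                                    # fall back to type 1
```

For example, with `foo.bar.com` routing `/` to `nginx-service-mxxl` and `/foo` to `service1`, a request for `foo.bar.com/foo/x` goes to `service1`, while an unknown host falls through to the default backend.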

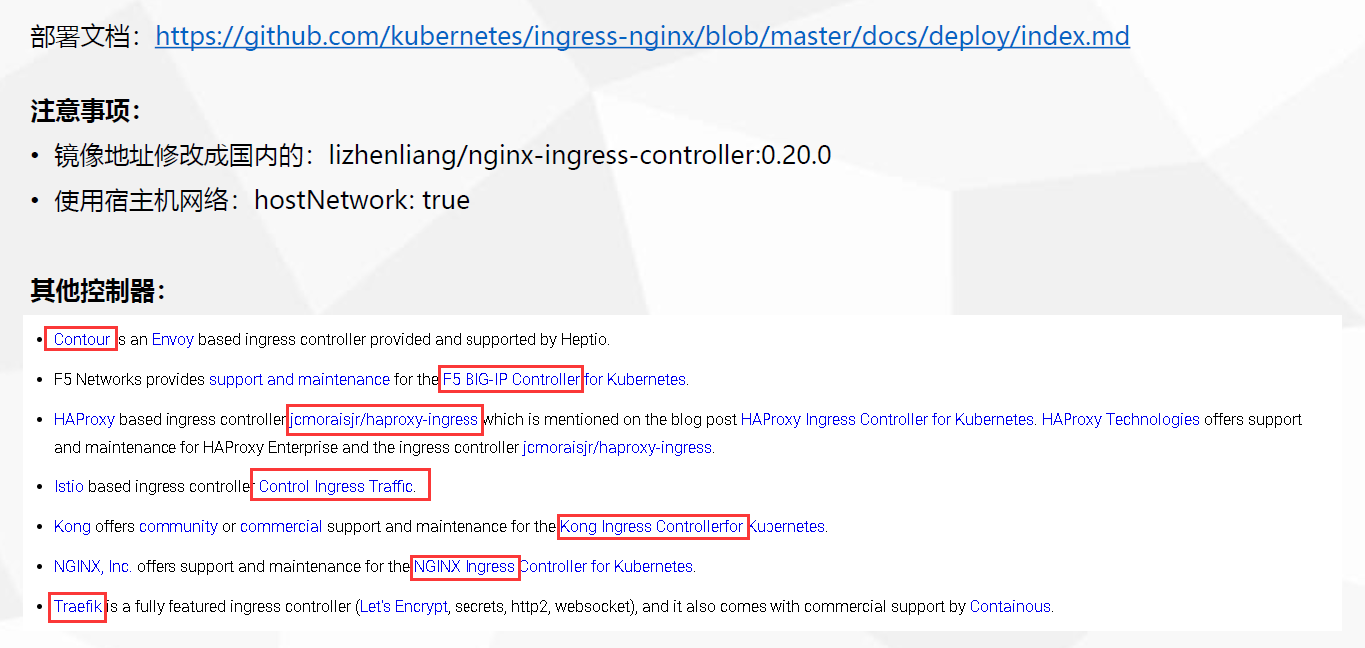

Ingress controller implementations: HAProxy / nginx / Traefik / Envoy (service mesh)

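There is almost always more than one service to route. Host names and URLs distinguish the different virtual hosts (nginx `server` blocks), and each server directs traffic to its own group of pods.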

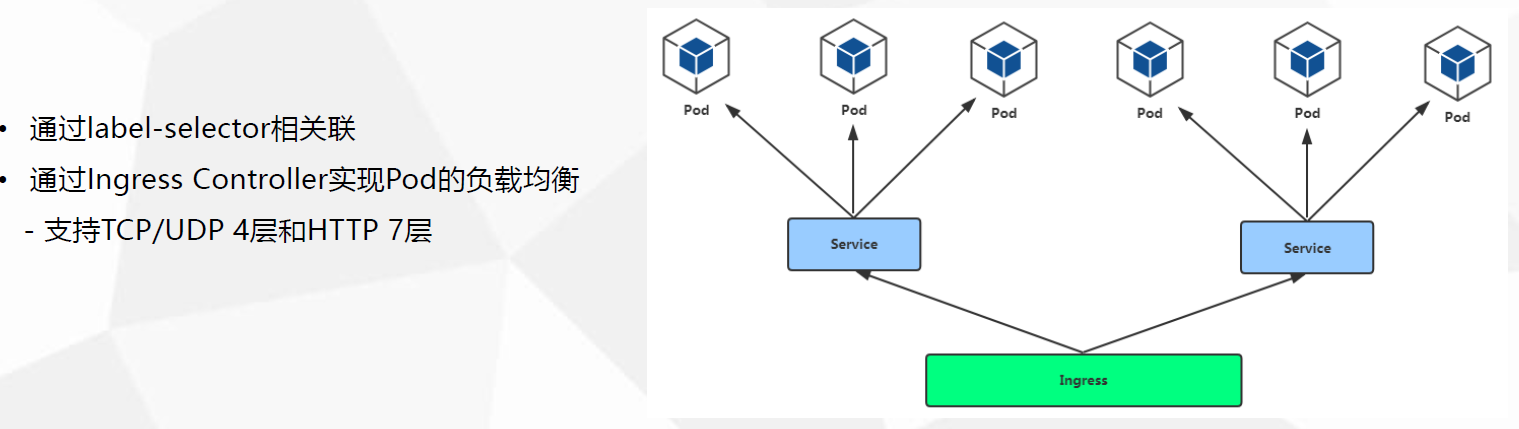

A Service uses its label selector to continuously watch its own pods; as soon as the pods change, the Service immediately reflects the change.

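The ingress controller relies on the Service to follow pod state changes, and the Service promptly reports those changes back to the ingress. (The controller only needs the Service's list of endpoints, so the Service does not have to be headless; the NodePort Service used later in this article works.)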

The Service groups the backend pods; when the ingress detects that the pods in that group have changed, it reacts promptly.

Based on the Service's grouping, the ingress obtains the IP list of the grouped pods and injects that IP list into its configuration.
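The grouping described above is plain equality-based label selection. A minimal sketch, assuming pods are represented as simple dicts (the helper names `matches` and `endpoint_ips` are hypothetical); the pod IPs and the `app: nginx-deploy` selector are the ones that appear in the `kubectl get ep` output and the Service manifest later in this article:

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every key/value of the selector must be on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoint_ips(selector: Dict[str, str], pods: List[dict], port: int) -> List[str]:
    """Return "ip:port" for every Ready pod the Service selector picks up."""
    return sorted(
        f"{pod['ip']}:{port}"
        for pod in pods
        if pod["ready"] and matches(selector, pod["labels"])
    )


pods = [
    {"ip": "172.17.43.4", "ready": True, "labels": {"app": "nginx-deploy"}},
    {"ip": "172.17.43.5", "ready": True, "labels": {"app": "nginx-deploy"}},
    {"ip": "172.17.46.4", "ready": True, "labels": {"app": "nginx-deploy"}},
    {"ip": "172.17.46.9", "ready": False, "labels": {"app": "nginx-deploy"}},  # not Ready: skipped
    {"ip": "172.17.43.7", "ready": True, "labels": {"app": "other"}},          # wrong label: skipped
]
print(endpoint_ips({"app": "nginx-deploy"}, pods, 80))
```

Only Ready pods are included, mirroring how a pod that fails its readiness probe drops out of a Service's endpoints.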

Steps needed to create an ingress:

1. Deploy the ingress controller

2. Configure the front end: the server (virtual host)

3. From the pod information collected by the Service, generate the upstream servers, reflect them in the Ingress, and register them with the ingress controller
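Steps 2 and 3 can be pictured as rendering an nginx `upstream` plus a `server` block from the endpoint list. This is a hypothetical, simplified renderer (`render_server` is an illustrative name) showing the classic static-upstream layout. The 0.20.0 controller's generated nginx.conf later in this article works differently: it uses a single `upstream_balancer` with a `balancer_by_lua_block` and only sets `$proxy_upstream_name` (for example `default-nginx-service-mxxl-80`) in each location, with the endpoints supplied to Lua at runtime.

```python
from typing import List


def render_server(host: str, path: str, upstream_name: str, endpoints: List[str]) -> str:
    """Render a simplified nginx upstream + server block for one Ingress rule."""
    lines = [f"upstream {upstream_name} {{"]
    lines += [f"    server {ep};" for ep in endpoints]    # step 3: one line per pod endpoint
    lines.append("}")
    lines += [                                              # step 2: the virtual host
        "server {",
        f"    server_name {host};",
        "    listen 80;",
        f"    location {path} {{",
        f"        proxy_pass http://{upstream_name};",
        "    }",
        "}",
    ]
    return "\n".join(lines)


print(render_server("foo.bar.com", "/", "default-nginx-service-mxxl-80",
                    ["172.17.43.4:80", "172.17.43.5:80", "172.17.46.4:80"]))
```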

II. Installation

Installation steps: https://github.com/kubernetes/ingress-nginx/blob/master/docs/deploy/index.md

Project overview: https://github.com/kubernetes/ingress-nginx

By default the controller watches all namespaces; to watch only a specific namespace, use --watch-namespace.

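If different paths are defined for the same host (for example in several Ingress resources), the ingress controller merges them into one configuration.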
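The merge can be sketched as grouping path rules by host. This is a hypothetical illustration (`merge_by_host` is an invented name): when two Ingresses claim the same host and path, this sketch simply keeps the first one in the input list, which is an assumption and not necessarily the controller's real conflict rule.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Rule = Tuple[str, str, List[Tuple[str, str]]]   # (ingress name, host, [(path, service)])


def merge_by_host(ingresses: List[Rule]) -> Dict[str, Dict[str, Tuple[str, str]]]:
    """Merge the path rules of several Ingress objects into one entry per host."""
    merged: Dict[str, Dict[str, Tuple[str, str]]] = defaultdict(dict)
    for name, host, paths in ingresses:
        for path, service in paths:
            # setdefault keeps the first (service, ingress) seen for a host+path pair
            merged[host].setdefault(path, (service, name))
    return {host: dict(sorted(paths.items())) for host, paths in merged.items()}


print(merge_by_host([
    ("ing-a", "foo.bar.com", [("/", "nginx-service-mxxl")]),
    ("ing-b", "foo.bar.com", [("/foo", "service1")]),
]))
```

Both Ingresses end up in a single `server foo.bar.com` block with two locations, which is what "merging" means here.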

1. Deploy the nginx-ingress-controller

```
[root@master1 ingress]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml
```

The manifest contains:

1) Namespace [ingress-nginx]
2) ConfigMap [nginx-configuration], ConfigMap [tcp-services], ConfigMap [udp-services]
3) RoleBinding [nginx-ingress-role-nisa-binding] = Role [nginx-ingress-role] + ServiceAccount [nginx-ingress-serviceaccount]
4) ClusterRoleBinding [nginx-ingress-clusterrole-nisa-binding] = ClusterRole [nginx-ingress-clusterrole] + ServiceAccount [nginx-ingress-serviceaccount]
5) Deployment [nginx-ingress-controller], which uses ConfigMap [nginx-configuration], ConfigMap [tcp-services] and ConfigMap [udp-services] as its configuration

```
[root@master01 ingress]# cat mandatory.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx      # create a new namespace

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
apiVersion: v1
kind: ServiceAccount       # create a service account and bind permissions to it for API server access
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses/status
    verbs:
      - update

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true    # use the host's network
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
        - name: nginx-ingress-controller
          image: 10.192.27.111/library/nginx-ingress-controller:0.20.0   # image pulled from the private registry
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1

---
[root@master01 ingress]#
```

```
[root@localhost ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml
[root@master01 ingress]# kubectl apply -f mandatory.yaml
namespace/ingress-nginx created
configmap/nginx-configuration created
configmap/tcp-services created
configmap/udp-services created
serviceaccount/nginx-ingress-serviceaccount created
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
role.rbac.authorization.k8s.io/nginx-ingress-role created
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
deployment.extensions/nginx-ingress-controller created
[root@master01 ingress]# kubectl get all -n ingress-nginx
NAME                                           READY   STATUS    RESTARTS   AGE
pod/nginx-ingress-controller-ffc9559bd-27nh7   0/1     Running   1          44s    # the pod is not READY

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-ingress-controller   0/1     1            0           44s

NAME                                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-ingress-controller-ffc9559bd   1         1         0       44s
```

Inspect the pod details to find the cause: the health check cannot connect (`Readiness probe failed: Get http://10.192.27.115:10254/healthz: dial tcp 10.192.27.115:10254: connect: connection refused`). Note that `kubectl describe` needs `-n ingress-nginx`; without it the pod is looked up in the `default` namespace and NotFound is returned. Exit code 143 (128 + SIGTERM) is the kubelet killing the container after the failed liveness probe.

```
[root@master01 ingress]# kubectl describe pod/nginx-ingress-controller-ffc9559bd-27nh7
Error from server (NotFound): pods "nginx-ingress-controller-ffc9559bd-27nh7" not found
[root@master01 ingress]# kubectl describe pod/nginx-ingress-controller-ffc9559bd-27nh7 -n ingress-nginx
Name:               nginx-ingress-controller-ffc9559bd-27nh7
Namespace:          ingress-nginx
Priority:           0
PriorityClassName:  <none>
Node:               10.192.27.115/10.192.27.115
Start Time:         Fri, 29 Nov 2019 09:12:50 +0800
Labels:             app.kubernetes.io/name=ingress-nginx
                    app.kubernetes.io/part-of=ingress-nginx
                    pod-template-hash=ffc9559bd
Annotations:        prometheus.io/port: 10254
                    prometheus.io/scrape: true
Status:             Running
IP:                 10.192.27.115
Controlled By:      ReplicaSet/nginx-ingress-controller-ffc9559bd
Containers:
  nginx-ingress-controller:
    Container ID:  docker://13a9b010ae5df5fbc4146c26a0b8132e1e6a2c5411648a104826ac70727196c4
    Image:         10.192.27.111/library/nginx-ingress-controller:0.20.0
    Image ID:      docker-pullable://10.192.27.111/library/nginx-ingress-controller@sha256:97bbe36d965aedce82f744669d2f78d2e4564c43809d43e80111cecebcb952d0
    Ports:         80/TCP, 443/TCP
    Host Ports:    80/TCP, 443/TCP
    Args:
      /nginx-ingress-controller
      --configmap=$(POD_NAMESPACE)/nginx-configuration
      --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
      --udp-services-configmap=$(POD_NAMESPACE)/udp-services
      --publish-service=$(POD_NAMESPACE)/ingress-nginx
      --annotations-prefix=nginx.ingress.kubernetes.io
    State:          Running
      Started:      Fri, 29 Nov 2019 09:13:57 +0800
    Last State:     Terminated
      Reason:       Error
      Exit Code:    143
      Started:      Fri, 29 Nov 2019 09:13:27 +0800
      Finished:     Fri, 29 Nov 2019 09:13:57 +0800
    Ready:          False
    Restart Count:  2
    Liveness:       http-get http://:10254/healthz delay=10s timeout=1s period=10s #success=1 #failure=3
    Readiness:      http-get http://:10254/healthz delay=0s timeout=1s period=10s #success=1 #failure=3
    Environment:
      POD_NAME:       nginx-ingress-controller-ffc9559bd-27nh7 (v1:metadata.name)
      POD_NAMESPACE:  ingress-nginx (v1:metadata.namespace)
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from nginx-ingress-serviceaccount-token-scfz7 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  nginx-ingress-serviceaccount-token-scfz7:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  nginx-ingress-serviceaccount-token-scfz7
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason     Age                From                    Message
  ----     ------     ----               ----                    -------
  Normal   Scheduled  94s                default-scheduler       Successfully assigned ingress-nginx/nginx-ingress-controller-ffc9559bd-27nh7 to 10.192.27.115
  Normal   Pulled     27s (x3 over 93s)  kubelet, 10.192.27.115  Container image "10.192.27.111/library/nginx-ingress-controller:0.20.0" already present on machine
  Normal   Created    27s (x3 over 93s)  kubelet, 10.192.27.115  Created container
  Normal   Started    27s (x3 over 93s)  kubelet, 10.192.27.115  Started container
  Warning  Unhealthy  27s (x6 over 77s)  kubelet, 10.192.27.115  Liveness probe failed: Get http://10.192.27.115:10254/healthz: dial tcp 10.192.27.115:10254: connect: connection refused
  Normal   Killing    27s (x2 over 57s)  kubelet, 10.192.27.115  Killing container with id docker://nginx-ingress-controller:Container failed liveness probe.. Container will be killed and recreated.
  Warning  Unhealthy  19s (x8 over 89s)  kubelet, 10.192.27.115  Readiness probe failed: Get http://10.192.27.115:10254/healthz: dial tcp 10.192.27.115:10254: connect: connection refused
[root@master01 ingress]#
```

Reference for this problem (INGRESS-NGINX deployment keeps failing the health check on port 10254): https://www.cnblogs.com/xingyunfashi/p/11493270.html
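To check the health endpoint from a node without waiting for kubelet events, a rough imitation of an `httpGet` probe can be written with the standard library. This is a hypothetical helper (`http_probe` is not a kubelet or kubectl API); it follows the kubelet's rule that a status code from 200 up to (but not including) 400 counts as success, and bypasses any HTTP proxy set in the environment because the kubelet connects directly.

```python
import urllib.error
import urllib.request

# Opener with an empty ProxyHandler so http_proxy/https_proxy variables are ignored.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def http_probe(url: str, timeout: float = 1.0) -> bool:
    """Roughly mimic a kubelet httpGet probe: success means 200 <= status < 400."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except urllib.error.HTTPError as err:          # 4xx / 5xx responses end up here
        return 200 <= err.code < 400
    except (urllib.error.URLError, OSError):       # connection refused, timeout, DNS error
        return False


if __name__ == "__main__":
    # In the failing state above this prints False: nothing listens on 10254 yet.
    print(http_probe("http://10.192.27.115:10254/healthz"))
```

A "connection refused" (as in the events above) means the probe fails at the TCP level, before any HTTP status is involved.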

```
[root@master01 ingress]# kubectl delete -f mandatory.yaml
namespace "ingress-nginx" deleted
configmap "nginx-configuration" deleted
configmap "tcp-services" deleted
configmap "udp-services" deleted
serviceaccount "nginx-ingress-serviceaccount" deleted
clusterrole.rbac.authorization.k8s.io "nginx-ingress-clusterrole" deleted
role.rbac.authorization.k8s.io "nginx-ingress-role" deleted
rolebinding.rbac.authorization.k8s.io "nginx-ingress-role-nisa-binding" deleted
clusterrolebinding.rbac.authorization.k8s.io "nginx-ingress-clusterrole-nisa-binding" deleted
deployment.extensions "nginx-ingress-controller" deleted
[root@master01 ingress]#
```

Fix: add `--masquerade-all=true` to the kube-proxy options on every node, then restart kube-proxy.

```
[root@node01 image]# vim /opt/kubernetes/cfg/kube-proxy      # add --masquerade-all=true (on every node)
[root@node01 image]# cat /opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true --v=4 --hostname-override=10.192.27.115 --cluster-cidr=10.0.0.0/24 --proxy-mode=ipvs --masquerade-all=true --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
[root@node01 image]# systemctl restart kube-proxy
[root@node01 image]# ps -ef | grep kube-proxy
root      3243     1  1 09:35 ?        00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=10.192.27.115 --cluster-cidr=10.0.0.0/24 --proxy-mode=ipvs --masquerade-all=true --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
root      3393 18416  0 09:35 pts/0    00:00:00 grep --color=auto kube-proxy

[root@node02 cfg]# vim /opt/kubernetes/cfg/kube-proxy        # add --masquerade-all=true (on every node)
[root@node02 cfg]# cat /opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true --v=4 --hostname-override=10.192.27.116 --cluster-cidr=10.0.0.0/24 --proxy-mode=ipvs --masquerade-all=true --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
[root@node02 cfg]# systemctl restart kube-proxy
[root@node02 cfg]# ps -ef | grep kube-proxy
root      2950     1  1 09:34 ?        00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=10.192.27.116 --cluster-cidr=10.0.0.0/24 --proxy-mode=ipvs --masquerade-all=true --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
root      3117 13951  0 09:34 pts/0    00:00:00 grep --color=auto kube-proxy
[root@node02 cfg]#
```

Apply the manifest again; this time the controller becomes Ready:

```
[root@master01 ingress]# kubectl apply -f mandatory.yaml
namespace/ingress-nginx created
configmap/nginx-configuration created
configmap/tcp-services created
configmap/udp-services created
serviceaccount/nginx-ingress-serviceaccount created
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
role.rbac.authorization.k8s.io/nginx-ingress-role created
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
deployment.extensions/nginx-ingress-controller created
[root@master01 ingress]# kubectl get all -n ingress-nginx      # created successfully
NAME                                           READY   STATUS    RESTARTS   AGE
pod/nginx-ingress-controller-ffc9559bd-kml76   1/1     Running   0          7s

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-ingress-controller   1/1     1            1           7s

NAME                                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-ingress-controller-ffc9559bd   1         1         1       7s
[root@master01 ingress]#
```
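Because the same edit has to be repeated on every node, it can be scripted. A minimal sketch, assuming the one-line `KUBE_PROXY_OPTS="..."` format shown above (`ensure_flag` is a hypothetical helper, not part of any Kubernetes tooling). It appends the flag only if it is not already present, so running it twice is harmless; flag order does not matter to kube-proxy, and kube-proxy still has to be restarted afterwards.

```python
import re


def ensure_flag(opts_line: str, flag: str = "--masquerade-all=true") -> str:
    """Add `flag` to a KUBE_PROXY_OPTS="..." line unless that option is already set."""
    line = opts_line.strip()
    m = re.fullmatch(r'(KUBE_PROXY_OPTS=")(.*)(")', line, flags=re.S)
    if m is None:
        raise ValueError("not a KUBE_PROXY_OPTS=\"...\" line")
    prefix, body, suffix = m.groups()
    name = flag.split("=", 1)[0]                         # e.g. "--masquerade-all"
    if re.search(rf"(^|\s){re.escape(name)}(=|\s|$)", body):
        return line                                      # already configured: leave it alone
    return f"{prefix}{body} {flag}{suffix}"


if __name__ == "__main__":
    path = "/opt/kubernetes/cfg/kube-proxy"              # path used in this article
    with open(path) as f:
        content = f.read()
    with open(path, "w") as f:
        f.write(ensure_flag(content) + "\n")
```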

The controller pod was scheduled to node02 (10.192.27.116):

```
[root@master01 ingress]# kubectl get pods -o wide -n ingress-nginx
NAME                                       READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
nginx-ingress-controller-ffc9559bd-5k6fd   1/1     Running   0          10m   10.192.27.116   10.192.27.116   <none>           <none>
[root@master01 ingress]#
```

Check on node02:

```
[root@node02 cfg]# ps -ef | grep nginx-ingress-controller
root      1547 13951  0 11:43 pts/0    00:00:00 grep --color=auto nginx-ingress-controller
33       11410 11392  0 10:08 ?        00:00:00 /usr/bin/dumb-init /bin/bash /entrypoint.sh /nginx-ingress-controller --configmap=ingress-nginx/nginx-configuration --tcp-services-configmap=ingress-nginx/tcp-services --udp-services-configmap=ingress-nginx/udp-services --publish-service=ingress-nginx/ingress-nginx --annotations-prefix=nginx.ingress.kubernetes.io
33       11515 11410  0 10:08 ?        00:00:00 /bin/bash /entrypoint.sh /nginx-ingress-controller --configmap=ingress-nginx/nginx-configuration --tcp-services-configmap=ingress-nginx/tcp-services --udp-services-configmap=ingress-nginx/udp-services --publish-service=ingress-nginx/ingress-nginx --annotations-prefix=nginx.ingress.kubernetes.io
33       11520 11515  1 10:08 ?        00:01:04 /nginx-ingress-controller --configmap=ingress-nginx/nginx-configuration --tcp-services-configmap=ingress-nginx/tcp-services --udp-services-configmap=ingress-nginx/udp-services --publish-service=ingress-nginx/ingress-nginx --annotations-prefix=nginx.ingress.kubernetes.io
[root@node02 cfg]# netstat -anput | grep :80 | grep LISTEN      # port 80 is now listening
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      11828/nginx: master
tcp6       0      0 :::80                   :::*                    LISTEN      11828/nginx: master
[root@node02 cfg]#
```

2. Create a Deployment (pods) and a Service for testing

```
[root@master01 ingress]# cat deploy-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deploy
  template:
    metadata:
      labels:
        app: nginx-deploy
    spec:
      containers:
        - name: nginx
          image: 10.192.27.111/library/nginx:1.14
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-mxxl
spec:
  type: NodePort
  ports:
    - port: 80
      nodePort: 30080
  selector:
    app: nginx-deploy
[root@master01 ingress]#
```

```
[root@master01 ingress]# kubectl apply -f deploy-nginx.yaml
deployment.apps/nginx-deployment created
service/nginx-service-mxxl created
[root@master01 ingress]# kubectl get all
NAME                                    READY   STATUS    RESTARTS   AGE
pod/nginx-deployment-5dbdf48958-29dbd   1/1     Running   0          11s
pod/nginx-deployment-5dbdf48958-mz2xh   1/1     Running   0          11s
pod/nginx-deployment-5dbdf48958-q9lx4   1/1     Running   0          11s

NAME                         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
service/kubernetes           ClusterIP   10.0.0.1     <none>        443/TCP        17d
service/nginx-service-mxxl   NodePort    10.0.0.209   <none>        80:30080/TCP   11s

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-deployment   3/3     3            3           11s

NAME                                          DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-deployment-5dbdf48958   3         3         3       11s
[root@master01 ingress]#
[root@master01 ingress]# kubectl get ep
NAME                 ENDPOINTS                                      AGE
kubernetes           10.192.27.100:6443,10.192.27.114:6443          17d
nginx-service-mxxl   172.17.43.4:80,172.17.43.5:80,172.17.46.4:80   31s
[root@master01 ingress]#
```

3. Create an HTTP (port 80) Ingress for testing

```
[root@master01 ingress]# cat ingress-test.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: simple-fanout-example    # must be in the same namespace (default) as the Service/Deployment above
  annotations:
    # the nginx.ingress.kubernetes.io prefix (matching --annotations-prefix) marks an ingress-nginx annotation;
    # rewrite-target rewrites the matched path to /
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: foo.bar.com          # site domain name
      http:
        paths:
          - path: /              # request URI
            backend:
              serviceName: nginx-service-mxxl   # Service name
              servicePort: 80                   # Service port
#          - path: /foo
#            backend:
#              serviceName: service1
#              servicePort: 4200
#          - path: /bar
#            backend:
#              serviceName: service2
#              servicePort: 8080
[root@master01 ingress]#
[root@master01 ingress]# kubectl create -f ingress-test.yaml
ingress.extensions/simple-fanout-example created
[root@master01 ingress]# kubectl get ingress
NAME                    HOSTS         ADDRESS   PORTS   AGE
simple-fanout-example   foo.bar.com             80      21s
[root@master01 ingress]#
```

Add the following to the system hosts file:

10.192.27.116 foo.bar.com

because the ingress-controller was scheduled to node02 (10.192.27.116).

Note: in production, you can label a group of nodes, run the ingress-controller as a DaemonSet on those nodes, and put an external load balancer in front of them.
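A sketch of that production layout: the Python below prints a DaemonSet variant of the Deployment from mandatory.yaml as JSON (which `kubectl apply -f -` accepts). It is an assumption-laden illustration, not an official manifest: the function name `ingress_daemonset` and the node label `ingress-controller=true` are hypothetical, and the securityContext and probes from mandatory.yaml are omitted for brevity and would need to be copied over.

```python
import json

LABELS = {
    "app.kubernetes.io/name": "ingress-nginx",
    "app.kubernetes.io/part-of": "ingress-nginx",
}


def _field_env(name: str, field_path: str) -> dict:
    # Environment variable filled from the pod's own metadata (downward API).
    return {"name": name, "valueFrom": {"fieldRef": {"fieldPath": field_path}}}


def ingress_daemonset(image: str, node_label: str = "ingress-controller") -> dict:
    """Run one controller pod on every node labelled <node_label>=true."""
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "nginx-ingress-controller", "namespace": "ingress-nginx",
                     "labels": dict(LABELS)},
        "spec": {
            "selector": {"matchLabels": dict(LABELS)},
            "template": {
                "metadata": {"labels": dict(LABELS)},
                "spec": {
                    "hostNetwork": True,                      # bind :80/:443 on each node
                    "nodeSelector": {node_label: "true"},     # only the labelled ingress nodes
                    "serviceAccountName": "nginx-ingress-serviceaccount",
                    "containers": [{
                        "name": "nginx-ingress-controller",
                        "image": image,
                        "args": [
                            "/nginx-ingress-controller",
                            "--configmap=$(POD_NAMESPACE)/nginx-configuration",
                            "--tcp-services-configmap=$(POD_NAMESPACE)/tcp-services",
                            "--udp-services-configmap=$(POD_NAMESPACE)/udp-services",
                            "--publish-service=$(POD_NAMESPACE)/ingress-nginx",
                            "--annotations-prefix=nginx.ingress.kubernetes.io",
                        ],
                        "env": [_field_env("POD_NAME", "metadata.name"),
                                _field_env("POD_NAMESPACE", "metadata.namespace")],
                        "ports": [{"name": "http", "containerPort": 80},
                                  {"name": "https", "containerPort": 443}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(ingress_daemonset("10.192.27.111/library/nginx-ingress-controller:0.20.0"), indent=2))
```

Usage would be along the lines of `kubectl label nodes 10.192.27.116 ingress-controller=true` followed by `python3 gen_ds.py | kubectl apply -f -` (the script name is hypothetical). Delete the existing Deployment first, since both would try to bind host port 80 on the same node.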

Visit the site: http://foo.bar.com
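Editing the hosts file is not strictly necessary: the controller picks the `server` block from the request's Host header, so sending that header directly to the node's IP has the same effect (the shell equivalent is `curl -H "Host: foo.bar.com" http://10.192.27.116/`). A minimal standard-library sketch; the function name `get_with_host` is hypothetical:

```python
import http.client
from typing import Tuple


def get_with_host(node_ip: str, host: str, path: str = "/",
                  port: int = 80, timeout: float = 5.0) -> Tuple[int, bytes]:
    """GET `path` from the ingress node while presenting `host` as the Host header."""
    conn = http.client.HTTPConnection(node_ip, port, timeout=timeout)
    try:
        # An explicit Host header replaces the one http.client would derive from node_ip.
        conn.request("GET", path, headers={"Host": host})
        resp = conn.getresponse()
        return resp.status, resp.read()
    finally:
        conn.close()


if __name__ == "__main__":
    status, body = get_with_host("10.192.27.116", "foo.bar.com")
    print(status, body[:80])
```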

Look inside the controller pod at the nginx.conf it generated; the `server foo.bar.com` block is the one rendered from the Ingress above:

```
[root@master01 ingress]# kubectl get pods -o wide -n ingress-nginx
NAME                                       READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
nginx-ingress-controller-ffc9559bd-5k6fd   1/1     Running   0          31m   10.192.27.116   10.192.27.116   <none>           <none>
[root@master01 ingress]# kubectl exec -it nginx-ingress-controller-ffc9559bd-5k6fd bash -n ingress-nginx
www-data@node02:/etc/nginx$ ls
fastcgi.conf          fastcgi_params.default  koi-win     mime.types.default  nginx.conf          owasp-modsecurity-crs  template              win-utf
fastcgi.conf.default  geoip                   lua         modsecurity         nginx.conf.default  scgi_params            uwsgi_params
fastcgi_params        koi-utf                 mime.types  modules             opentracing.json    scgi_params.default    uwsgi_params.default
www-data@node02:/etc/nginx$ cat nginx.conf

# Configuration checksum: 1952086611213485535

# setup custom paths that do not require root access
pid /tmp/nginx.pid;

daemon off;

worker_processes 4;

worker_rlimit_nofile 15360;

worker_shutdown_timeout 10s ;

events {
    multi_accept        on;
    worker_connections  16384;
    use                 epoll;
}

http {
    lua_package_cpath "/usr/local/lib/lua/?.so;/usr/lib/lua-platform-path/lua/5.1/?.so;;";
    lua_package_path  "/etc/nginx/lua/?.lua;/etc/nginx/lua/vendor/?.lua;/usr/local/lib/lua/?.lua;;";

    lua_shared_dict configuration_data 5M;
    lua_shared_dict certificate_data 16M;
    lua_shared_dict locks 512k;
    lua_shared_dict balancer_ewma 1M;
    lua_shared_dict balancer_ewma_last_touched_at 1M;
    lua_shared_dict sticky_sessions 1M;

    init_by_lua_block {
        require("resty.core")
        collectgarbage("collect")

        local lua_resty_waf = require("resty.waf")
        lua_resty_waf.init()

        -- init modules
        local ok, res

        ok, res = pcall(require, "configuration")
        if not ok then
            error("require failed: " .. tostring(res))
        else
            configuration = res
            configuration.nameservers = { "10.30.8.8", "10.40.8.8" }
        end

        ok, res = pcall(require, "balancer")
        if not ok then
            error("require failed: " .. tostring(res))
        else
            balancer = res
        end

        ok, res = pcall(require, "monitor")
        if not ok then
            error("require failed: " .. tostring(res))
        else
            monitor = res
        end
    }

    init_worker_by_lua_block {
        balancer.init_worker()
        monitor.init_worker()
    }

    real_ip_header      X-Forwarded-For;
    real_ip_recursive   on;
    set_real_ip_from    0.0.0.0/0;

    geoip_country       /etc/nginx/geoip/GeoIP.dat;
    geoip_city          /etc/nginx/geoip/GeoLiteCity.dat;
    geoip_org           /etc/nginx/geoip/GeoIPASNum.dat;
    geoip_proxy_recursive on;

    aio                 threads;
    aio_write           on;

    tcp_nopush          on;
    tcp_nodelay         on;

    log_subrequest      on;

    reset_timedout_connection on;

    keepalive_timeout  75s;
    keepalive_requests 100;

    client_body_temp_path           /tmp/client-body;
    fastcgi_temp_path               /tmp/fastcgi-temp;
    proxy_temp_path                 /tmp/proxy-temp;
    ajp_temp_path                   /tmp/ajp-temp;

    client_header_buffer_size       1k;
    client_header_timeout           60s;
    large_client_header_buffers     4 8k;
    client_body_buffer_size         8k;
    client_body_timeout             60s;

    http2_max_field_size            4k;
    http2_max_header_size           16k;
    http2_max_requests              1000;

    types_hash_max_size             2048;
    server_names_hash_max_size      1024;
    server_names_hash_bucket_size   32;
    map_hash_bucket_size            64;

    proxy_headers_hash_max_size     512;
    proxy_headers_hash_bucket_size  64;

    variables_hash_bucket_size      128;
    variables_hash_max_size         2048;

    underscores_in_headers          off;
    ignore_invalid_headers          on;

    limit_req_status                503;

    include /etc/nginx/mime.types;
    default_type text/html;

    gzip on;
    gzip_comp_level 5;
    gzip_http_version 1.1;
    gzip_min_length 256;
    gzip_types application/atom+xml application/javascript application/x-javascript application/json application/rss+xml application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/svg+xml image/x-icon text/css text/plain text/x-component;
    gzip_proxied any;
    gzip_vary on;

    # Custom headers for response

    server_tokens on;

    # disable warnings
    uninitialized_variable_warn off;

    # Additional available variables:
    # $namespace
    # $ingress_name
    # $service_name
    # $service_port
    log_format upstreaminfo '$the_real_ip - [$the_real_ip] - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" $request_length $request_time [$proxy_upstream_name] $upstream_addr $upstream_response_length $upstream_response_time $upstream_status $req_id';

    map $request_uri $loggable {
        default 1;
    }

    access_log /var/log/nginx/access.log upstreaminfo if=$loggable;

    error_log  /var/log/nginx/error.log notice;

    resolver 10.30.8.8 10.40.8.8 valid=30s;

    # See https://www.nginx.com/blog/websocket-nginx
    map $http_upgrade $connection_upgrade {
        default          upgrade;
        # See http://nginx.org/en/docs/http/ngx_http_upstream_module.html#keepalive
        ''               '';
    }

    # The following is a sneaky way to do "set $the_real_ip $remote_addr"
    # Needed because using set is not allowed outside server blocks.
    map '' $the_real_ip {
        default          $remote_addr;
    }

    # trust http_x_forwarded_proto headers correctly indicate ssl offloading
    map $http_x_forwarded_proto $pass_access_scheme {
        default          $http_x_forwarded_proto;
        ''               $scheme;
    }

    map $http_x_forwarded_port $pass_server_port {
        default          $http_x_forwarded_port;
        ''               $server_port;
    }

    # Obtain best http host
    map $http_host $this_host {
        default          $http_host;
        ''               $host;
    }

    map $http_x_forwarded_host $best_http_host {
        default          $http_x_forwarded_host;
        ''               $this_host;
    }

    # validate $pass_access_scheme and $scheme are http to force a redirect
    map "$scheme:$pass_access_scheme" $redirect_to_https {
        default          0;
        "http:http"      1;
        "https:http"     1;
    }

    map $pass_server_port $pass_port {
        443              443;
        default          $pass_server_port;
    }

    # Reverse proxies can detect if a client provides a X-Request-ID header, and pass it on to the backend server.
    # If no such header is provided, it can provide a random value.
    map $http_x_request_id $req_id {
        default   $http_x_request_id;
        ""        $request_id;
    }

    server_name_in_redirect off;
    port_in_redirect        off;

    ssl_protocols TLSv1.2;

    # turn on session caching to drastically improve performance
    ssl_session_cache builtin:1000 shared:SSL:10m;
    ssl_session_timeout 10m;

    # allow configuring ssl session tickets
    ssl_session_tickets on;

    # slightly reduce the time-to-first-byte
    ssl_buffer_size 4k;

    # allow configuring custom ssl ciphers
    ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256';
    ssl_prefer_server_ciphers on;

    ssl_ecdh_curve auto;

    proxy_ssl_session_reuse on;

    upstream upstream_balancer {
        server 0.0.0.1; # placeholder

        balancer_by_lua_block {
            balancer.balance()
        }

        keepalive 32;
    }

    # Global filters

    ## start server _
    server {
        server_name _ ;

        listen 80 default_server reuseport backlog=511;
        listen [::]:80 default_server reuseport backlog=511;

        set $proxy_upstream_name "-";

        listen 443  default_server reuseport backlog=511 ssl http2;
        listen [::]:443  default_server reuseport backlog=511 ssl http2;

        # PEM sha: 1280e3b27119d5e79bd411e59c59e0ca024a814c
        ssl_certificate                         /etc/ingress-controller/ssl/default-fake-certificate.pem;
        ssl_certificate_key                     /etc/ingress-controller/ssl/default-fake-certificate.pem;

        location / {
            set $namespace      "";
            set $ingress_name   "";
            set $service_name   "";
            set $service_port   "0";
            set $location_path  "/";

            rewrite_by_lua_block {
                balancer.rewrite()
            }

            log_by_lua_block {
                balancer.log()
                monitor.call()
            }

            if ($scheme = https) {
                more_set_headers                        "Strict-Transport-Security: max-age=15724800; includeSubDomains";
            }

            access_log off;

            port_in_redirect off;

            set $proxy_upstream_name "upstream-default-backend";

            client_max_body_size                    1m;

            proxy_set_header Host                   $best_http_host;

            # Pass the extracted client certificate to the backend

            # Allow websocket connections
            proxy_set_header                        Upgrade           $http_upgrade;
            proxy_set_header                        Connection        $connection_upgrade;

            proxy_set_header X-Request-ID           $req_id;
            proxy_set_header X-Real-IP              $the_real_ip;
            proxy_set_header X-Forwarded-For        $the_real_ip;
            proxy_set_header X-Forwarded-Host       $best_http_host;
            proxy_set_header X-Forwarded-Port       $pass_port;
            proxy_set_header X-Forwarded-Proto      $pass_access_scheme;
            proxy_set_header X-Original-URI         $request_uri;
            proxy_set_header X-Scheme               $pass_access_scheme;

            # Pass the original X-Forwarded-For
            proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

            # mitigate HTTPoxy Vulnerability
            # https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/
            proxy_set_header Proxy                  "";

            # Custom headers to proxied server

            proxy_connect_timeout                   5s;
            proxy_send_timeout                      60s;
            proxy_read_timeout                      60s;

            proxy_buffering                         off;
            proxy_buffer_size                       4k;
            proxy_buffers                           4 4k;
            proxy_request_buffering                 on;

            proxy_http_version                      1.1;

            proxy_cookie_domain                     off;
            proxy_cookie_path                       off;

            # In case of errors try the next upstream server before returning an error
            proxy_next_upstream                     error timeout;
            proxy_next_upstream_tries               3;

            proxy_pass http://upstream_balancer;

            proxy_redirect                          off;
        }

        # health checks in cloud providers require the use of port 80
        location /healthz {
            access_log off;
            return 200;
        }

        # this is required to avoid error if nginx is being monitored
        # with an external software (like sysdig)
        location /nginx_status {
            allow 127.0.0.1;
            allow ::1;
            deny all;

            access_log off;
            stub_status on;
        }
    }
    ## end server _

    ## start server foo.bar.com
    server {    # the nginx server block that forwards requests for foo.bar.com
        server_name foo.bar.com ;

        listen 80;
        listen [::]:80;

        set $proxy_upstream_name "-";

        location / {
            set $namespace      "default";
            set $ingress_name   "simple-fanout-example";
            set $service_name   "nginx-service-mxxl";
            set $service_port   "80";
            set $location_path  "/";

            rewrite_by_lua_block {
                balancer.rewrite()
            }

            log_by_lua_block {
                balancer.log()
                monitor.call()
            }

            port_in_redirect off;

            set $proxy_upstream_name "default-nginx-service-mxxl-80";

            client_max_body_size                    1m;

            proxy_set_header Host                   $best_http_host;

            # Pass the extracted client certificate to the backend

            # Allow websocket connections
            proxy_set_header                        Upgrade           $http_upgrade;
            proxy_set_header                        Connection        $connection_upgrade;

            proxy_set_header X-Request-ID           $req_id;
            proxy_set_header X-Real-IP              $the_real_ip;
            proxy_set_header X-Forwarded-For        $the_real_ip;
            proxy_set_header X-Forwarded-Host       $best_http_host;
            proxy_set_header X-Forwarded-Port       $pass_port;
            proxy_set_header X-Forwarded-Proto      $pass_access_scheme;
            proxy_set_header X-Original-URI         $request_uri;
            proxy_set_header X-Scheme               $pass_access_scheme;

            # Pass the original X-Forwarded-For
            proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

            # mitigate HTTPoxy Vulnerability
            # https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/
            proxy_set_header Proxy                  "";

            # Custom headers to proxied server

            proxy_connect_timeout                   5s;
            proxy_send_timeout                      60s;
            proxy_read_timeout                      60s;

            proxy_buffering                         off;
            proxy_buffer_size                       4k;
            proxy_buffers                           4 4k;
            proxy_request_buffering                 on;

            proxy_http_version                      1.1;

            proxy_cookie_domain                     off;
            proxy_cookie_path                       off;

            # In case of errors try the next upstream server before returning an error
            proxy_next_upstream                     error timeout;
            proxy_next_upstream_tries               3;

            proxy_pass http://upstream_balancer;

            proxy_redirect                          off;
        }
    }
    ## end server foo.bar.com

    # backend for when default-backend-service is not configured or it does not have endpoints
    server {
        listen 8181 default_server reuseport backlog=511;
        listen [::]:8181 default_server reuseport backlog=511;
        set $proxy_upstream_name "-";

        location / {
            return 404;
        }
    }

    # default server, used for NGINX healthcheck and access to nginx stats
    server {
        listen 18080 default_server reuseport backlog=511;
        listen [::]:18080 default_server reuseport backlog=511;
        set $proxy_upstream_name "-";

        location /healthz {
            access_log off;
            return 200;
        }

        location /is-dynamic-lb-initialized {
            access_log off;

            content_by_lua_block {
                local configuration = require("configuration")
                local backend_data = configuration.get_backends_data()
                if not backend_data then
                    ngx.exit(ngx.HTTP_INTERNAL_SERVER_ERROR)
                    return
                end

                ngx.say("OK")
                ngx.exit(ngx.HTTP_OK)
            }
        }

        location /nginx_status {
            set $proxy_upstream_name "internal";

            access_log off;
            stub_status on;
        }

        location /configuration {
            access_log off;

            allow 127.0.0.1;
            allow ::1;
            deny all;

            # this should be equals to configuration_data dict
            client_max_body_size                    10m;
            proxy_buffering                         off;

            content_by_lua_block {
                configuration.call()
            }
        }

        location / {
            set $proxy_upstream_name "upstream-default-backend";
            proxy_set_header    Host   $best_http_host;
            proxy_pass          http://upstream_balancer;
        }
    }
}

stream {
    log_format log_stream [$time_local] $protocol $status $bytes_sent $bytes_received $session_time;

    access_log /var/log/nginx/access.log log_stream;

    error_log  /var/log/nginx/error.log;

    # TCP services

    # UDP services
}
www-data@node02:/etc/nginx$
```

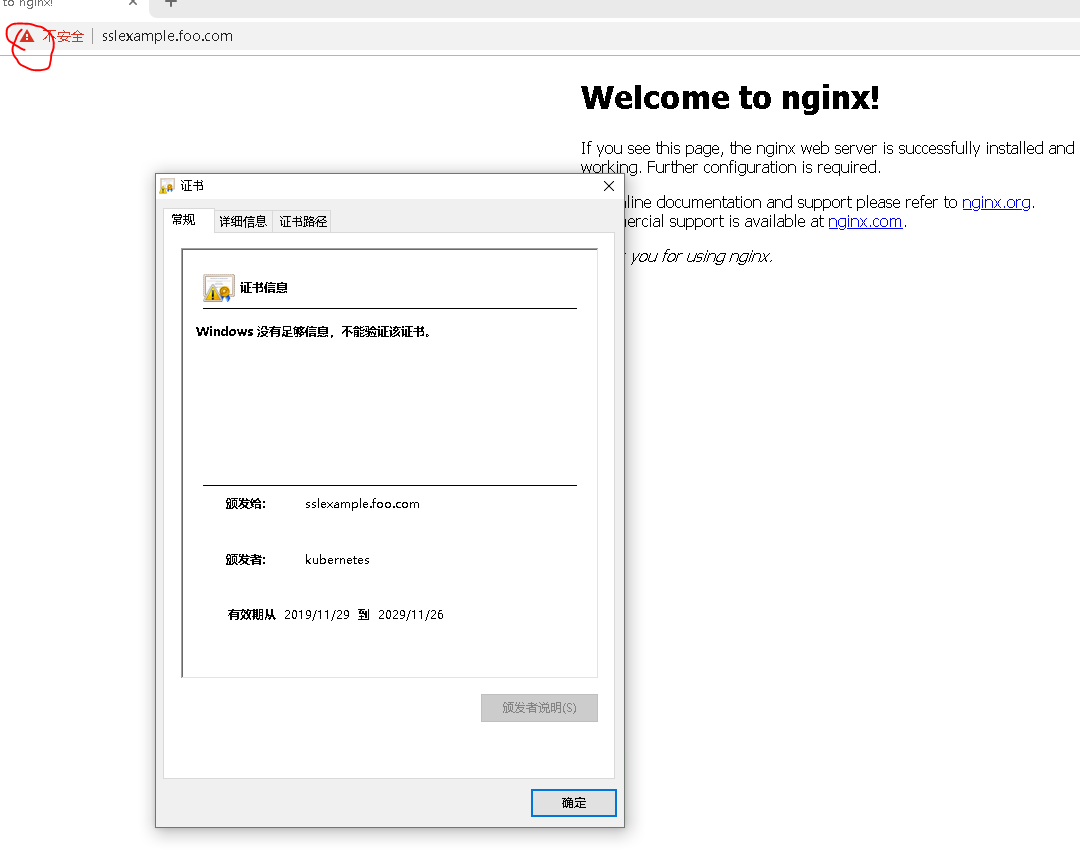

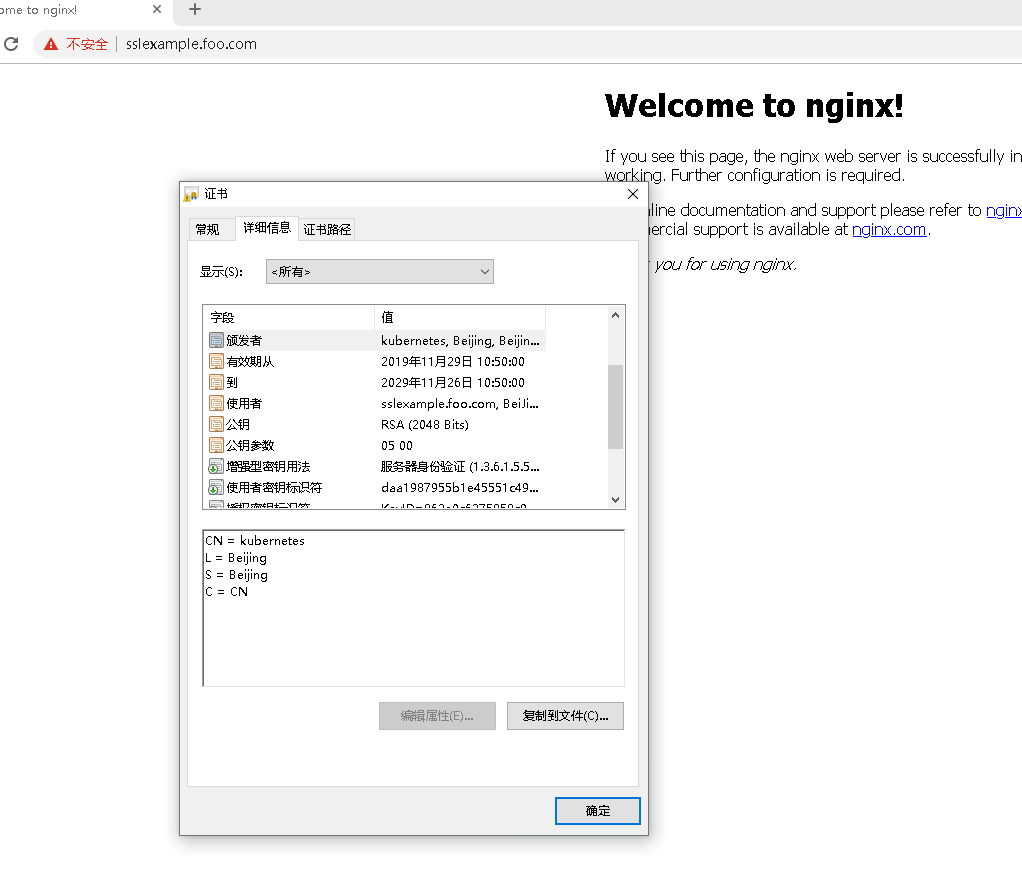

4. Test an HTTPS Ingress

```
[root@master01 ingress]# cat certs.sh
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

cat > sslexample.foo.com-csr.json <<EOF
{
  "CN": "sslexample.foo.com",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes sslexample.foo.com-csr.json | cfssljson -bare sslexample.foo.com

#kubectl create secret tls sslexample.foo.com --cert=sslexample.foo.com.pem --key=sslexample.foo.com-key.pem
[root@master01 ingress]#
[root@master01 ingress]# bash certs.sh
2019/11/29 10:55:22 [INFO] generating a new CA key and certificate from CSR
2019/11/29 10:55:22 [INFO] generate received request
2019/11/29 10:55:22 [INFO] received CSR
2019/11/29 10:55:22 [INFO] generating key: rsa-2048
2019/11/29 10:55:22 [INFO] encoded CSR
2019/11/29 10:55:22 [INFO] signed certificate with serial number 514318613303042562719971641871742446860618909631
2019/11/29 10:55:22 [INFO] generate received request
2019/11/29 10:55:22 [INFO] received CSR
2019/11/29 10:55:22 [INFO] generating key: rsa-2048
2019/11/29 10:55:23 [INFO] encoded CSR
2019/11/29 10:55:23 [INFO] signed certificate with serial number 498681032087048301383176988107267731280366273049
2019/11/29 10:55:23 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").
[root@master01 ingress]#
```

The warning appears because `hosts` is empty in sslexample.foo.com-csr.json, so the certificate carries no subject alternative name; listing `"sslexample.foo.com"` under `hosts` avoids it. The browser will still warn either way, because the CA is self-generated.

```
[root@master01 ingress]# kubectl create secret --help
Create a secret using specified subcommand.

Available Commands:
  docker-registry Create a secret for use with a Docker registry
  generic         Create a secret from a local file, directory or literal value
  tls             Create a TLS secret        # TLS is the one used here

Usage:
  kubectl create secret [flags] [options]

Use "kubectl <command> --help" for more information about a given command.
Use "kubectl options" for a list of global command-line options (applies to all commands).
[root@master01 ingress]#
[root@master01 ingress]# kubectl create secret tls sslexample.foo.com --cert=sslexample.foo.com.pem --key=sslexample.foo.com-key.pem    # create the TLS Secret
secret/sslexample.foo.com created
[root@master01 ingress]#
```
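What `kubectl create secret tls` produces is a Secret of type `kubernetes.io/tls` whose `data` holds the base64-encoded certificate and key under `tls.crt` and `tls.key`. The sketch below builds the same structure by hand, which can help when the manifest has to live in version control. The function name `tls_secret` is hypothetical, and unlike kubectl this sketch does not check that the certificate and key actually belong together.

```python
import base64
import json
from pathlib import Path


def _b64(data: bytes) -> str:
    # Secret "data" values are base64-encoded strings.
    return base64.b64encode(data).decode("ascii")


def tls_secret(name: str, cert_pem: bytes, key_pem: bytes, namespace: str = "default") -> dict:
    """Build a kubernetes.io/tls Secret similar to `kubectl create secret tls`."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "kubernetes.io/tls",
        "metadata": {"name": name, "namespace": namespace},
        "data": {"tls.crt": _b64(cert_pem), "tls.key": _b64(key_pem)},
    }


if __name__ == "__main__":
    # File names produced by certs.sh above.
    secret = tls_secret(
        "sslexample.foo.com",
        Path("sslexample.foo.com.pem").read_bytes(),
        Path("sslexample.foo.com-key.pem").read_bytes(),
    )
    print(json.dumps(secret, indent=2))
```

The output can be piped to `kubectl apply -f -`. The Secret must live in the same namespace as the Ingress that references it, which is why `default` is used here.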

```
[root@master01 ingress]# cat http-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: tls-example-ingress        # keep the namespace the same as the pods/Service above
spec:
  tls:
    - hosts:
        - sslexample.foo.com
      secretName: sslexample.foo.com   # the Secret created above; must be in the same namespace
  rules:
    - host: sslexample.foo.com
      http:
        paths:
          - path: /
            backend:
              serviceName: nginx-service-mxxl
              servicePort: 80
[root@master01 ingress]#
```

```
[root@master01 ingress]# vim http-ingress.yaml
[root@master01 ingress]# kubectl apply -f http-ingress.yaml
ingress.extensions/tls-example-ingress created
[root@master01 ingress]#
[root@node02 cfg]# netstat -anput | grep :443 | grep LISTEN
tcp        0      0 0.0.0.0:443             0.0.0.0:*               LISTEN      11828/nginx: master
tcp6       0      0 :::443                  :::*                    LISTEN      11828/nginx: master
[root@node02 cfg]#
```

Add the following to the system hosts file:

10.192.27.116 foo.bar.com sslexample.foo.com

```
[root@master01 ingress]# kubectl get ingress
NAME                    HOSTS                ADDRESS   PORTS     AGE
simple-fanout-example   foo.bar.com                    80        5h58m
tls-example-ingress     sslexample.foo.com             80, 443   5h13m
```

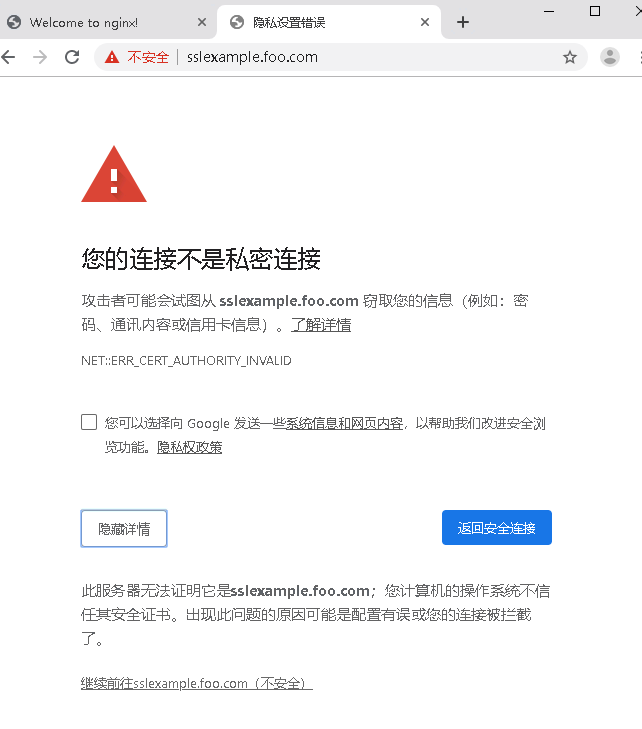

Access URL: https://sslexample.foo.com/

Summary