I. Component Overview

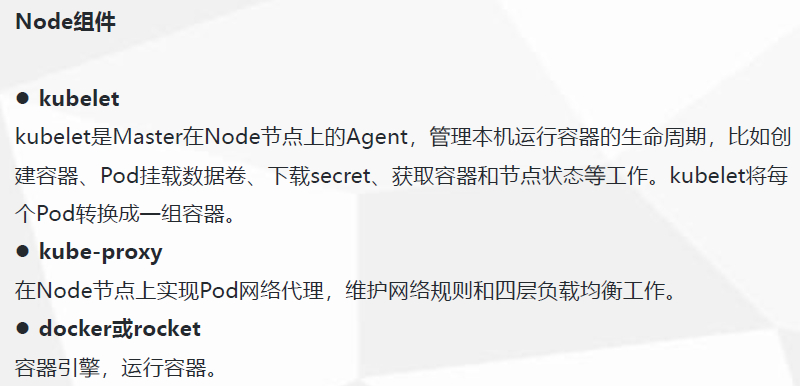

1. kubelet

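Kubernetes is a distributed cluster management system, and every node must run a worker that manages the lifecycle of its containers; that worker is kubelet. Put simply, kubelet periodically fetches the desired state of the pods/containers on its node from some source (which containers to run, how their networking and storage are configured, and so on) and calls the corresponding container runtime interfaces to bring the node to that state. In a cluster, kubelet reads this information from the master (the kube-apiserver), but it can also obtain pod definitions from other sources, such as static Pod manifests in a local directory.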

Main functions of kubelet:

- Pod management

- Container health checks

- Container monitoring

2. kube-proxy

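- kube-proxy manages the access entry points of Services, covering both Pod-to-Service access inside the cluster and access to Services from outside the cluster.

- kube-proxy watches the Endpoints of each Service. A Service exposes a virtual IP, also called the Cluster IP; inside the cluster, accessing ClusterIP:Port reaches the Pods behind that Service.

- A Service is an abstraction over a set of Pods chosen by a Selector, essentially a microservice. It provides load balancing and reverse proxying for that service, and kube-proxy's main job is to implement Services.

- Another important role of a Service: the Pods behind a service may change IP as they are created and destroyed, while the Service gives the service a fixed IP regardless of how the backend Endpoints change.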
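To make the Selector/Cluster IP relationship concrete, here is a minimal sketch of a Service manifest. The helper `render_service` and the `app` label key are hypothetical choices for illustration, not taken from this cluster; the function only prints YAML, so it can be piped into `kubectl apply -f -`.

```shell
# Hypothetical helper (illustration only): print a ClusterIP Service manifest
# whose selector matches Pods labeled app=<app_label>.
render_service() {
  local name=$1 app_label=$2 port=$3 target_port=$4
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: ClusterIP               # kube-proxy implements this virtual IP on every node
  selector:
    app: ${app_label}           # Pods with this label become the Service's Endpoints
  ports:
  - protocol: TCP
    port: ${port}               # port exposed on the Cluster IP
    targetPort: ${target_port}  # port the container actually listens on
EOF
}
```

For example, `render_service web web 80 8080 | kubectl apply -f -` followed by `kubectl get endpoints web` should list the IPs of the Pods labeled `app=web`; those are the Endpoints that kube-proxy forwards Cluster IP traffic to.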

II. Deployment (node01: 10.192.27.115, node02: 10.192.27.116)

Copy kubelet and kube-proxy from the kubernetes/server/bin directory of the extracted kubernetes-server-linux-amd64.tar.gz on the master node to the node machines.

1. On the master node, bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role

```shell
[root@master01 kubeconfig]# kubectl get clusterrolebinding    # list the existing system cluster role bindings
NAME                                                    AGE
cluster-admin                                           3h58m
system:aws-cloud-provider                               3h58m
system:basic-user                                       3h58m
system:controller:attachdetach-controller               3h58m
system:controller:certificate-controller                3h58m
system:controller:clusterrole-aggregation-controller    3h58m
system:controller:cronjob-controller                    3h58m
system:controller:daemon-set-controller                 3h58m
system:controller:deployment-controller                 3h58m
system:controller:disruption-controller                 3h58m
system:controller:endpoint-controller                   3h58m
system:controller:expand-controller                     3h58m
system:controller:generic-garbage-collector             3h58m
system:controller:horizontal-pod-autoscaler             3h58m
system:controller:job-controller                        3h58m
system:controller:namespace-controller                  3h58m
system:controller:node-controller                       3h58m
system:controller:persistent-volume-binder              3h58m
system:controller:pod-garbage-collector                 3h58m
system:controller:pv-protection-controller              3h58m
system:controller:pvc-protection-controller             3h58m
system:controller:replicaset-controller                 3h58m
system:controller:replication-controller                3h58m
system:controller:resourcequota-controller              3h58m
system:controller:route-controller                      3h58m
system:controller:service-account-controller            3h58m
system:controller:service-controller                    3h58m
system:controller:statefulset-controller                3h58m
system:controller:ttl-controller                        3h58m
system:discovery                                        3h58m
system:kube-controller-manager                          3h58m
system:kube-dns                                         3h58m
system:kube-scheduler                                   3h58m
system:node                                             3h58m
system:node-proxier                                     3h58m
system:volume-scheduler                                 3h58m

[root@master01 kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created
```
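The same binding can also be written declaratively. The sketch below prints a ClusterRoleBinding manifest that should be equivalent to the `kubectl create clusterrolebinding` command above; the helper name `render_bootstrap_binding` is hypothetical.

```shell
# Hypothetical helper: print a ClusterRoleBinding that grants the
# system:node-bootstrapper ClusterRole to the given user (default kubelet-bootstrap).
render_bootstrap_binding() {
  local user=${1:-kubelet-bootstrap}
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: ${user}
EOF
}
```

`render_bootstrap_binding | kubectl apply -f -` creates (or updates) the binding; keeping the manifest in a file makes this step repeatable.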

2. Create the kubeconfig files: on the master node (10.192.27.100), generate the kubeconfig files for kubelet and kube-proxy

```shell
[root@master01 bin]# cd ../../..
[root@master01 k8s]# mkdir kubeconfig
[root@master01 k8s]# cd kubeconfig
[root@master01 kubeconfig]# vim kubeconfig.sh    # new script for quickly generating the config files
```

```shell
[root@master01 kubeconfig]# cat kubeconfig.sh
# Create the TLS Bootstrapping token
#BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
BOOTSTRAP_TOKEN=0fb61c46f8991b718eb38d27b605b008   # already created manually when the master was deployed, so keep the same value here

# token.csv uses the kubelet-bootstrap user created above
# (comments must stay outside the heredoc, or they end up in the file)
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

#----------------------

APISERVER=$1
SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig
export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=$SSL_DIR/kube-proxy.pem \
  --client-key=$SSL_DIR/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```
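The commented-out line in the script shows how the token was originally generated. As a hedged sketch, the two hypothetical helpers below wrap that recipe and the `token.csv` line format (`token,user,uid,"group"`) so a fresh token can be produced consistently. Whatever value you use must match the token file the kube-apiserver was started with, as the script's own comment points out.

```shell
# Hypothetical helper: 16 random bytes printed as 32 lowercase hex characters
# (same recipe as the commented-out BOOTSTRAP_TOKEN line, also stripping the newline).
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Hypothetical helper: write one token.csv line for the kubelet-bootstrap user.
write_token_csv() {
  local token=$1 file=${2:-token.csv}
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$file"
}
```

Typical use: `tok=$(gen_bootstrap_token); write_token_csv "$tok"`, then put the same `$tok` into `BOOTSTRAP_TOKEN` in kubeconfig.sh.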

```shell
[root@master01 kubeconfig]# bash kubeconfig.sh 10.192.27.100 /root/k8s/k8s-cert    # uses the ca and kube-proxy certificates
[root@master01 kubeconfig]# ls
bootstrap.kubeconfig  kubeconfig.sh  kube-proxy.kubeconfig  token.csv
[root@master01 kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@10.192.27.115:/opt/kubernetes/cfg/    # copy the config files
[root@master01 kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@10.192.27.116:/opt/kubernetes/cfg/
```

3. Deploy the kubelet and kube-proxy components

```shell
[root@master01 ~]# cd /root/k8s/kubernetes/server/bin/    # copy the node component binaries
[root@master01 bin]# scp kubelet kube-proxy root@10.192.27.115:/opt/kubernetes/bin/
[root@master01 bin]# scp kubelet kube-proxy root@10.192.27.116:/opt/kubernetes/bin/
```
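With more nodes, the per-node `scp` commands in this step and the previous one can be folded into a loop. This is a sketch with a hypothetical `distribute_node_files` function; the source and destination paths follow this post, and the `DRY_RUN=1` switch (also an assumption of this sketch) prints the commands instead of running them.

```shell
# Hypothetical helper: copy the node binaries and kubeconfig files to each node.
# Usage: distribute_node_files BIN_DIR CFG_DIR NODE_IP...
distribute_node_files() {
  local bin_dir=$1 cfg_dir=$2; shift 2
  local node run=()
  # With DRY_RUN=1 every command is prefixed with echo, i.e. only printed.
  [[ ${DRY_RUN:-0} == 1 ]] && run=(echo)
  for node in "$@"; do
    "${run[@]}" scp "$bin_dir/kubelet" "$bin_dir/kube-proxy" "root@${node}:/opt/kubernetes/bin/" || return 1
    "${run[@]}" scp "$cfg_dir/bootstrap.kubeconfig" "$cfg_dir/kube-proxy.kubeconfig" "root@${node}:/opt/kubernetes/cfg/" || return 1
  done
}
```

For this cluster that would be `distribute_node_files /root/k8s/kubernetes/server/bin /root/k8s/kubeconfig 10.192.27.115 10.192.27.116`, run on the master.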

My own script that generates the kubelet configuration files and the systemd service file:

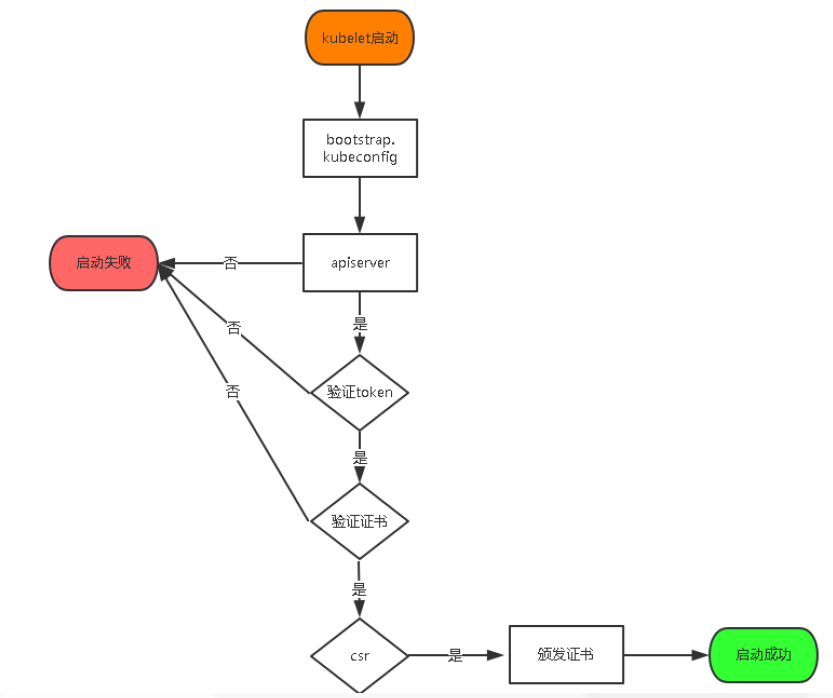

```shell
[root@node01 ~]# cat kubelet.sh    # the kubelet script
#!/bin/bash

NODE_ADDRESS=$1
DNS_SERVER_IP=${2:-"10.0.0.2"}

# Notes on the flags below (kept outside the heredoc so they are not written into the file):
#   --kubeconfig            kubelet.kubeconfig is generated automatically after the CSR is approved
#   --bootstrap-kubeconfig  config file 1: copied from the master, used to reach the master's apiserver
#   --config                config file 2: the kubelet.config generated below
cat <<EOF >/opt/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \\
--v=4 \\
--hostname-override=${NODE_ADDRESS} \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet.config \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: ${NODE_ADDRESS}
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- ${DNS_SERVER_IP}
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
```
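`kubelet.config` hard-codes `cgroupDriver: cgroupfs`, and kubelet refuses to start when this differs from the cgroup driver Docker uses. A minimal pre-flight check, assuming Docker is the container runtime and using hypothetical helper names, could look like this:

```shell
# Hypothetical helper: print the cgroupDriver value from a KubeletConfiguration file.
kubelet_cgroup_driver() {
  awk '$1 == "cgroupDriver:" {print $2}' "$1"
}

# Hypothetical helper: succeed if kubelet's driver equals the given Docker driver,
# otherwise print both values to stderr and return 1.
check_cgroup_driver() {
  local config=$1 docker_driver=$2 kubelet_driver
  kubelet_driver=$(kubelet_cgroup_driver "$config")
  if [[ $kubelet_driver != "$docker_driver" ]]; then
    echo "mismatch: kubelet=$kubelet_driver docker=$docker_driver" >&2
    return 1
  fi
}
```

On a node this would be run as `check_cgroup_driver /opt/kubernetes/cfg/kubelet.config "$(docker info --format '{{.CgroupDriver}}')"`. If it reports a mismatch, change either kubelet.config or Docker's daemon settings so both agree before restarting kubelet.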

Run the script:

```shell
[root@node01 ~]# bash kubelet.sh 10.192.27.115    # install kubelet
[root@node01 ~]# ps -ef | grep kubelet
root 19538 1 6 20:53 ? 00:00:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=10.192.27.115 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
root 19770 7553 0 20:53 pts/0 00:00:00 grep --color=auto kubelet
```

```shell
[root@node02 ~]# bash kubelet.sh 10.192.27.116
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
[root@node02 ~]# ps -ef | grep kubelet
root 18058 1 7 20:42 ? 00:00:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=10.192.27.116 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
root 18080 7493 0 20:42 pts/0 00:00:00 grep --color=auto kubelet
```

My own script that generates the kube-proxy configuration file and the systemd service file:

```shell
[root@node01 ~]# cat proxy.sh
#!/bin/bash

NODE_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \\
--v=4 \\
--hostname-override=${NODE_ADDRESS} \\
--cluster-cidr=10.0.0.0/24 \\
--proxy-mode=ipvs \\
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
```
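`--proxy-mode=ipvs` only takes effect when the IPVS kernel modules are available; otherwise kube-proxy falls back to iptables mode. Below is a hedged sketch of a check with a hypothetical function name; the module list follows the kube-proxy IPVS prerequisites for kernels older than 4.19 (newer kernels use `nf_conntrack` instead of `nf_conntrack_ipv4`).

```shell
# Hypothetical helper: print each IPVS-related module that is NOT listed in the
# modules file (defaults to /proc/modules, whose first column is the module name).
missing_ipvs_modules() {
  local modules_file=${1:-/proc/modules} m
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
    # awk exits 0 if a line's first field equals the module name, 1 otherwise
    awk -v m="$m" '$1 == m {found=1} END {exit !found}' "$modules_file" || echo "$m"
  done
}
```

Missing modules could then be loaded with `missing_ipvs_modules | xargs -r -n1 modprobe` (as root). After kube-proxy restarts, `ipvsadm -Ln` (if ipvsadm is installed) should show a virtual server for each Service port.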

Run the script:

```shell
[root@node01 ~]# bash proxy.sh 10.192.27.115    # install kube-proxy
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
[root@node01 ~]# ps -ef | grep kube
root 6886 1 0 22:44 ? 00:00:00 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://10.192.27.100:2379,https://10.192.27.115:2379,https://10.192.27.116:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem
root 7460 1 2 22:45 ? 00:00:02 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=10.192.27.115 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
root 7991 1 5 22:46 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=10.192.27.115 --cluster-cidr=10.0.0.0/24 --proxy-mode=ipvs --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
root 8163 7267 0 22:46 pts/0 00:00:00 grep --color=auto kube
```

```shell
[root@node02 ~]# bash proxy.sh 10.192.27.116
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
[root@node02 ~]# ps -ef | grep kube
root 6891 1 0 22:44 ? 00:00:00 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://10.192.27.100:2379,https://10.192.27.115:2379,https://10.192.27.116:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem
root 7462 1 2 22:45 ? 00:00:03 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=10.192.27.116 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
root 8134 1 1 22:47 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=10.192.27.116 --cluster-cidr=10.0.0.0/24 --proxy-mode=ipvs --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
root 8369 6993 0 22:47 pts/0 00:00:00 grep --color=auto kube
```

4. Approve the certificates on the master node (10.192.27.100)

```shell
[root@master01 kubeconfig]# kubectl get csr    # both nodes are waiting for their certificates
NAME                                                   AGE     REQUESTOR           CONDITION
node-csr-Aj47g-pRa5NV94S_24ilm_ndSqZSar6A4HQLZyS-w8I   3m18s   kubelet-bootstrap   Pending
node-csr-dubWwt0x1pbSajIDgqt3irzE3zke2t9G-EyCigHRfOI   6m45s   kubelet-bootstrap   Pending
[root@master01 kubeconfig]# kubectl certificate approve node-csr-Aj47g-pRa5NV94S_24ilm_ndSqZSar6A4HQLZyS-w8I    # issue the certificate
certificatesigningrequest.certificates.k8s.io/node-csr-Aj47g-pRa5NV94S_24ilm_ndSqZSar6A4HQLZyS-w8I approved
[root@master01 kubeconfig]# kubectl certificate approve node-csr-dubWwt0x1pbSajIDgqt3irzE3zke2t9G-EyCigHRfOI    # issue the certificate
certificatesigningrequest.certificates.k8s.io/node-csr-dubWwt0x1pbSajIDgqt3irzE3zke2t9G-EyCigHRfOI approved
[root@master01 kubeconfig]# kubectl get csr
NAME                                                   AGE     REQUESTOR           CONDITION
node-csr-Aj47g-pRa5NV94S_24ilm_ndSqZSar6A4HQLZyS-w8I   6m57s   kubelet-bootstrap   Approved,Issued
node-csr-dubWwt0x1pbSajIDgqt3irzE3zke2t9G-EyCigHRfOI   10m     kubelet-bootstrap   Approved,Issued
[root@master01 kubeconfig]# kubectl get node    # check the nodes
NAME            STATUS   ROLES    AGE   VERSION
10.192.27.115   Ready    <none>   19s   v1.13.0
10.192.27.116   Ready    <none>   64m   v1.13.0
```
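Approving CSRs one by one gets tedious as nodes are added. Here is a sketch of a filter (hypothetical name `pending_csrs`) that reads `kubectl get csr` output in the column layout shown above and prints only the requests whose CONDITION is `Pending`:

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin, skip the header
# line, and print the NAME of every request whose last column is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" {print $1}'
}
```

Combined as `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`, this approves every pending request at once, so only use it when you know all pending CSRs come from your own nodes.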

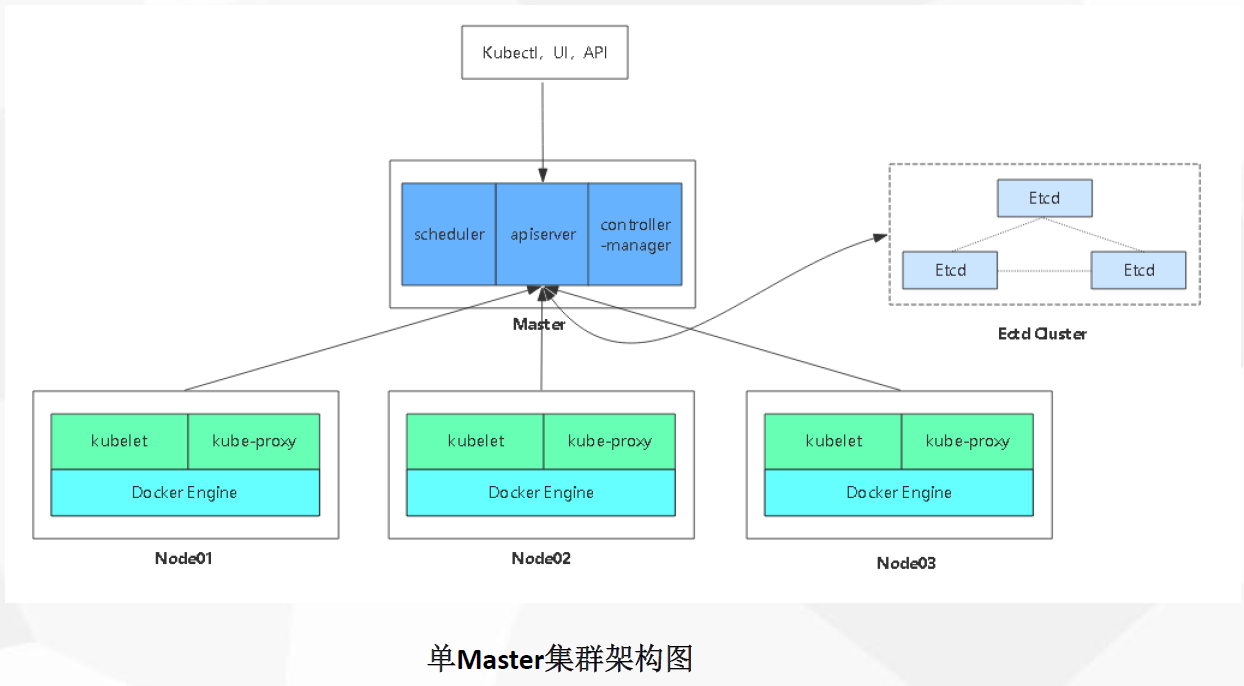

This completes the single-master cluster architecture built over the previous few posts.

####################### Reference notes below ####################

Deleting a Node in k8s and rejoining it to the cluster

Deleting a node in k8s uses the following commands.

Before deleting a node, first evict the pods running on it:

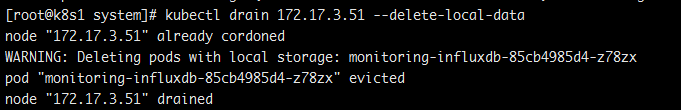

```shell
kubectl drain 172.17.3.51 --delete-local-data
```

Then delete the node:

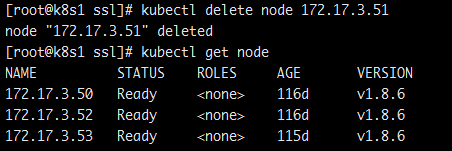

```shell
kubectl delete node nodename
```
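The two steps can be wrapped in one function. This is a sketch: `remove_node` and the `KUBECTL` override are hypothetical, and `--ignore-daemonsets` is added on the assumption that the node may run DaemonSet Pods, which `kubectl drain` otherwise refuses to evict. Newer kubectl releases rename `--delete-local-data` to `--delete-emptydir-data`.

```shell
# Hypothetical wrapper: evict Pods from a node, then delete the Node object.
# KUBECTL can be overridden (e.g. KUBECTL=echo) to preview the commands.
remove_node() {
  local node=$1 kubectl=${KUBECTL:-kubectl}
  # --ignore-daemonsets: skip DaemonSet-managed Pods instead of aborting
  # --delete-local-data: also evict Pods using emptyDir volumes (their data is lost)
  $kubectl drain "$node" --ignore-daemonsets --delete-local-data &&
    $kubectl delete node "$node"
}
```

For the example above: `remove_node 172.17.3.51`. The delete only runs if the drain succeeded.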

As shown above, the node has been deleted.

In fact, the command above is generic and can delete any kind of resource:

```shell
kubectl delete type typename
```

type is the resource type, such as node, pod, rs, rc, deployment, service, and so on; typename is the name of the resource.

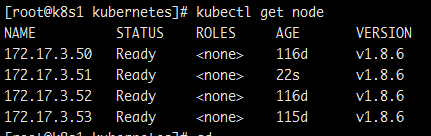

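Back to the situation above: after a node is deleted, if the kubelet service on that node is restarted, the node shows up on the master again, because kubelet still holds the credentials it was issued earlier and simply re-registers.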

How do you remove the node completely, so that when it rejoins the cluster it goes through a new CSR?

Log in to that node:

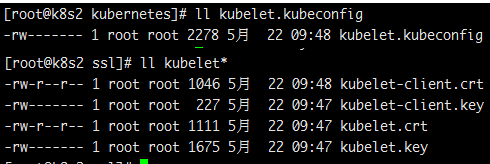

Delete the following files:

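The files above were generated after the node's kubelet started, sent a CSR to the master, and that CSR was approved. Once they are deleted, restarting kubelet makes it send a new CSR; approving that CSR on the master adds the node back to the cluster, and these files are regenerated automatically.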
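The text does not list the files by name. As a hedged sketch, assuming the paths used by `kubelet.sh` earlier in this post (`--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig` and `--cert-dir=/opt/kubernetes/ssl`), the bootstrap-issued credentials could be removed like this. `reset_kubelet_client_certs` and the `KUBE_DIR` override are hypothetical, and `bootstrap.kubeconfig` is deliberately kept because kubelet needs it to send the new CSR.

```shell
# Hypothetical helper: remove the kubeconfig and client certificates that kubelet
# obtained through TLS bootstrapping, keeping bootstrap.kubeconfig in place.
# KUBE_DIR defaults to /opt/kubernetes, the layout assumed from kubelet.sh.
reset_kubelet_client_certs() {
  local dir=${KUBE_DIR:-/opt/kubernetes}
  rm -f "$dir/cfg/kubelet.kubeconfig" "$dir"/ssl/kubelet-client-*
}
```

On the node: `systemctl stop kubelet`, then `reset_kubelet_client_certs`, then `systemctl start kubelet`; the new request should then appear in `kubectl get csr` on the master.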

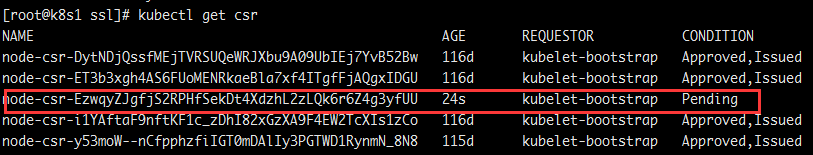

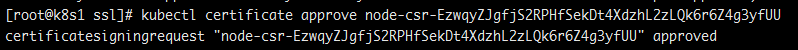

Approve the request again:

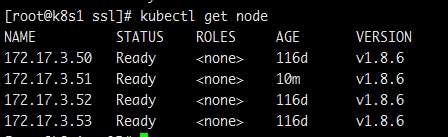

Check the cluster nodes again:

The node is back in the cluster.

For reference, see the following: