Reposted from: https://blog.51cto.com/hmtk520/2428519

I. Pod overview

II. Labels

III. Pod controllers: Deployment, ReplicationController, StatefulSet, DaemonSet, Job, CronJob, etc.

IV. Service

V. Ingress

VI. ServiceAccount/UserAccount

VII. Secret & ConfigMap

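I. Pod overview

Common Kubernetes resources:

Workloads (the resources that actually serve traffic): Pod, ReplicaSet, Deployment, StatefulSet, Job, CronJob, ...

Service discovery and load balancing: Service, Ingress

Configuration and storage: Volume, CSI (Container Storage Interface)

ConfigMap, Secret, DownwardAPI

Cluster-level resources: Namespace, Node, Role, ClusterRole, RoleBinding, ClusterRoleBinding (note that Role and RoleBinding themselves are namespace-scoped)

Metadata resources: HPA, PodTemplate, LimitRange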

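A Pod (as in a pod of whales, or a pea pod) is a group of containers that share one context. Within that context each application may still have its own cgroup isolation. A Pod is a "logical host" in a container environment: it holds one or more tightly coupled applications, the kind that would otherwise run together on the same physical host or virtual machine.

A Pod's context can be understood as a union of several Linux namespaces:

PID namespace (applications in the same Pod can see each other's processes; on current Kubernetes versions this requires `shareProcessNamespace: true`)

Network namespace (applications in the same Pod share the same IP address and port space)

IPC namespace (applications in the same Pod can communicate through System V IPC or POSIX message queues)

UTS namespace (applications in the same Pod share one hostname)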

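1. Pod types:

Standalone Pods. They are not managed by a Pod controller and are not recreated after they are deleted.

Controller-managed Pods. They are recreated automatically after deletion; to change the number of replicas, use `kubectl scale`.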

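2. Resource manifest format:

    # Show the YAML definition of an existing Pod
    [root@registry metrics-server]# kubectl get pods $pod_name -o yaml
    # Show the documentation of the Pod fields
    [root@registry metrics-server]# kubectl explain pod

A manifest has five top-level fields: apiVersion (group/version), metadata, kind, spec, status (mnemonic: "amkss").

    apiVersion:  API version to use
    kind:        resource type, for example Pod
    metadata:    metadata
      name:        name
      annotations <map[string]string>: annotations
      labels <map[string]string>:      labels, a string-to-string map
      namespace <string>:              namespace name
    status:      current state of the Pod, generated dynamically
    spec:
      containers:         container definitions for the Pod
      restartPolicy:      restart policy: OnFailure, Never; defaults to Always
      nodeSelector:       schedule the Pod by node labels
      nodeName:           pin the Pod to a specific node
      hostname:           hostname of the Pod
      hostPID:            use the host's PID namespace
      hostNetwork:        use the host's network namespace
      affinity:           affinity scheduling (nodeAffinity, podAffinity, podAntiAffinity)
      serviceAccountName: the ServiceAccount the Pod uses (covered in the ServiceAccount section)
      volumes:            volumes to create (covered in the PV section)
      tolerations:        tolerations; taints and tolerations work together to keep Pods off unsuitable nodes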

3. containers

    [root@registry metrics-server]# kubectl explain pods.spec.containers
    args <[]string>            # Arguments to the entrypoint
    command <[]string>         # Entrypoint array; the docker image's ENTRYPOINT is used if this is not provided
    env <[]Object>             # Environment variables
    envFrom <[]Object>
    image <string>
    imagePullPolicy <string>   # Image pull policy: Always, Never, IfNotPresent
    lifecycle <Object>
    livenessProbe <Object>     # Liveness check
    name <string> -required-
    ports <[]Object>           # See kubectl explain pods.spec.containers.ports
      containerPort
      hostIP                   # The node a container lands on is not known in advance; if you must bind a host IP, 0.0.0.0 is recommended
      hostPort                 # Port on the host
      protocol                 # Must be UDP, TCP, or SCTP. Defaults to "TCP"
    readinessProbe <Object>    # Readiness check
    resources <Object>         # Resource limits
      limits                   # Maximum resource usage
      requests                 # Minimum (requested) resource amount
    securityContext <Object>   # Security context

About imagePullPolicy: with Never the image is never pulled, so it must already exist locally (pulled manually by the user). If the image tag is latest, the default policy is Always, because latest may later point to a newer image; for any other tag the default is IfNotPresent. The policy cannot be changed on an existing Pod.

In a Dockerfile, if only CMD is defined, CMD is run; if both CMD and ENTRYPOINT are defined, the contents of CMD are passed as arguments to ENTRYPOINT. See https://kubernetes.io/docs/tasks/inject-data-application/define-command-argument-container/

| Description | Docker field name | Kubernetes field name |
| --- | --- | --- |
| The command run by the container | Entrypoint | command |
| The arguments passed to the command | Cmd | args |

If you do not supply command or args for a Container, the defaults defined in the Docker image are used.

If you supply a command but no args for a Container, only the supplied command is used. The default EntryPoint and the default Cmd defined in the Docker image are ignored.

If you supply only args for a Container, the default Entrypoint defined in the Docker image is run with the args that you supplied.

If you supply a command and args, the default Entrypoint and the default Cmd defined in the Docker image are ignored. Your command is run with your args.

| Image Entrypoint | Image Cmd | Container command | Container args | Command run |
| --- | --- | --- | --- | --- |
| `[/ep-1]` | `[foo bar]` | `<not set>` | `<not set>` | `[ep-1 foo bar]` |
| `[/ep-1]` | `[foo bar]` | `[/ep-2]` | `<not set>` | `[ep-2]` |
| `[/ep-1]` | `[foo bar]` | `<not set>` | `[zoo boo]` | `[ep-1 zoo boo]` |
| `[/ep-1]` | `[foo bar]` | `[/ep-2]` | `[zoo boo]` | `[ep-2 zoo boo]` |
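The four command/args rules can be sketched as one small function. This is only an illustrative model of the rules quoted above, not kubelet code; the function name `effective_command` and its parameters are made up for this example.

```python
from typing import List, Optional


def effective_command(image_entrypoint: List[str],
                      image_cmd: List[str],
                      command: Optional[List[str]] = None,
                      args: Optional[List[str]] = None) -> List[str]:
    """Return the argv a container would run (hypothetical helper)."""
    if command is not None:
        # A supplied command replaces the image ENTRYPOINT and the image CMD
        # is ignored; only the supplied args (if any) are appended.
        return command + (args or [])
    # No command supplied: keep the image ENTRYPOINT. Supplied args replace
    # the image CMD, otherwise the image CMD is used.
    return image_entrypoint + (args if args is not None else image_cmd)


if __name__ == "__main__":
    print(effective_command(["/ep-1"], ["foo", "bar"]))
    print(effective_command(["/ep-1"], ["foo", "bar"], command=["/ep-2"]))
    print(effective_command(["/ep-1"], ["foo", "bar"], args=["zoo", "boo"]))
    print(effective_command(["/ep-1"], ["foo", "bar"], ["/ep-2"], ["zoo", "boo"]))
```

Each call corresponds to one row of the table, in the same order.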

Example:

    [root@registry work]# cat broker.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: appv1
      namespace: default
      labels:
        name: broker
        version: latest
    spec:
      containers:
      - name: broker
        env:
        - name: verbase
          value: testv1
        - name: MY_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # Each argv element is its own list item
        command:
        - "ping"
        - "-i"
        - "2"
        - "127.0.0.1"
        image: "192.168.192.234:888/broker:latest"
        imagePullPolicy: Always
      - name: nginx
        image: 192.168.192.234:888/nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          hostPort: 8888
          protocol: TCP
      dnsPolicy: Default
      restartPolicy: Always

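4. lifecycle

Container lifecycle hooks listen for specific events in a container's lifecycle and run the registered callback when the event occurs.

Two hooks are supported:

postStart: runs after the container starts. It runs asynchronously, so its ordering relative to the container's ENTRYPOINT is not guaranteed. If it fails, the container is killed and the RestartPolicy decides whether it is restarted.

preStop: runs before the container stops and is commonly used to clean up resources. If it fails, the container is killed as well.

A callback can be implemented in two ways:

exec: run a command inside the container

httpGet: send a GET request to a given URL

Example:

    [root@registry work]# cat v2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: lifecycle-demo
    spec:
      containers:
      - name: lifecycle-demo-container
        image: nginx
        lifecycle:
          postStart:
            exec:
              command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]
          preStop:
            exec:
              command: ["/usr/sbin/nginx","-s","quit"]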

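5. Container health probes

Kubernetes supports two kinds of health probes for containers in a Pod:

1) liveness probe: checks whether the container is alive

2) readiness probe: checks whether the service is ready

Pod phases: Pending, Running, Succeeded, Failed, Unknown

There are three probe handler types: ExecAction, TCPSocketAction, HTTPGetAction

    [root@registry work]# kubectl explain pods.spec.containers.readinessProbe
    [root@registry work]# kubectl explain pods.spec.containers.livenessProbe
    exec <Object>
    failureThreshold <integer>      # After a success, minimum consecutive failures for the probe to be considered failed; default 3
    httpGet <Object>
    initialDelaySeconds <integer>   # Seconds to wait after the container starts before the first probe
    periodSeconds <integer>         # How often to probe; default 10 seconds
    successThreshold <integer>      # After a failure, minimum consecutive successes for the probe to be considered successful; default 1
    tcpSocket <Object>
    timeoutSeconds <integer>        # Probe timeout; default 1 second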
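To make the interaction between failureThreshold and successThreshold concrete, here is a simplified model of how a stream of probe results could be turned into a healthy/unhealthy state. It is a sketch under assumptions: the function `probe_states` is hypothetical, the container is assumed to start out healthy, and timing fields such as initialDelaySeconds and periodSeconds are left out.

```python
from typing import Iterable, List


def probe_states(results: Iterable[bool],
                 failure_threshold: int = 3,
                 success_threshold: int = 1,
                 initially_healthy: bool = True) -> List[bool]:
    """Return the health state after each probe result (simplified model)."""
    healthy = initially_healthy
    streak = 0  # consecutive results that contradict the current state
    states = []
    for ok in results:
        if ok == healthy:
            streak = 0  # result agrees with the current state: reset the streak
        else:
            streak += 1
            threshold = success_threshold if ok else failure_threshold
            if streak >= threshold:
                healthy = ok  # enough consecutive contradicting results: flip
                streak = 0
        states.append(healthy)
    return states


if __name__ == "__main__":
    # Three failures in a row flip the state; a single success flips it back.
    print(probe_states([True, False, False, False, True]))
```

With the defaults, one or two isolated failures do not change the state, which is why a short hiccup does not immediately restart a container.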

    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-exec-pod
      namespace: default
    spec:
      containers:
      - name: live-ness-container
        image: 192.168.192.234:888/nginx
        imagePullPolicy: IfNotPresent
        command: ["/bin/sh","-c","touch /tmp/healthy ;sleep 60;rm -rf /tmp/healthy; sleep 30"]
        livenessProbe:
          exec:
            command: ["test","-e","/tmp/healthy"]
          initialDelaySeconds: 1
          periodSeconds: 3

    [root@master1 yaml]# kubectl create -f livenessl.yaml

    [root@master1 yaml]# cat httpget.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-httpget-pod
      namespace: default
    spec:
      containers:
      - name: live-ness-container
        image: 192.168.192.234:888/nginx
        imagePullPolicy: IfNotPresent
        livenessProbe:
          httpGet:
            port: 80
            path: /index.html
          initialDelaySeconds: 1
          periodSeconds: 3

    [root@master1 ~]# kubectl exec -it liveness-httpget-pod -- /bin/bash
    root@liveness-httpget-pod:/usr/share/nginx/html# mv index.html index.html.bak

    [root@master1 yaml]# kubectl describe pods liveness-httpget-pod
    Events:
      Type     Reason     Age                  From               Message
      ----     ------     ----                 ----               -------
      Normal   Scheduled  5m56s                default-scheduler  Successfully assigned default/liveness-httpget-pod to master2
      Normal   Pulled     13s (x2 over 5m56s)  kubelet, master2   Container image "192.168.192.234:888/nginx" already present on machine
      Normal   Created    13s (x2 over 5m56s)  kubelet, master2   Created container live-ness-container
      Warning  Unhealthy  13s (x3 over 19s)    kubelet, master2   Liveness probe failed: HTTP probe failed with statuscode: 404
      Normal   Killing    13s                  kubelet, master2   Container live-ness-container failed liveness probe, will be restarted
      Normal   Started    12s (x2 over 5m56s)  kubelet, master2   Started container live-ness-container

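Why use readinessProbe and livenessProbe:

A Service selects containers by label. A newly created container may need, say, 10 seconds before its service is up, yet it is associated with the Service as soon as it is created.

Requests that arrive during that window fail, so it is better to start serving traffic only after the service is ready.

    [root@master1 ~]# kubectl explain pods.spec.containers.readinessProbe
    [root@master1 yaml]# cat readness.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: readiness-exec-pod
      namespace: default
    spec:
      containers:
      - name: readiness-container
        image: 192.168.192.234:888/nginx
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        # readinessProbe (the original listing used livenessProbe here)
        readinessProbe:
          httpGet:
            port: http
            path: /index.html
          initialDelaySeconds: 1
          periodSeconds: 3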

6. Resource limits

1) resources

    [root@registry work]# kubectl explain pods.spec.containers.resources
    spec.containers[].resources.limits.cpu
    spec.containers[].resources.limits.memory
    spec.containers[].resources.requests.cpu
    spec.containers[].resources.requests.memory

    [root@registry work]# cat v3.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        resources:
          requests:
            cpu: "300m"
            memory: "56Mi"
          limits:
            cpu: "500m"
            memory: "128Mi"

Supported memory units include Ki | Mi | Gi | Ti | Pi.
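The quantity strings used in the manifest above can be converted to plain numbers as follows. This is a minimal sketch, not the Kubernetes quantity parser: the helper names `cpu_millicores` and `memory_bytes` are invented here, and only the `m` CPU suffix and the binary memory suffixes listed above are handled.

```python
# Binary suffixes mentioned in the text, as powers of two.
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50}


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "300m" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    # A bare number is a count of whole CPUs; 1 CPU = 1000 millicores.
    return int(round(float(quantity) * 1000))


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "56Mi" to bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[:-len(suffix)]) * factor
    return int(quantity)  # no suffix: already a byte count


if __name__ == "__main__":
    print(cpu_millicores("300m"), memory_bytes("56Mi"))
    print(cpu_millicores("500m"), memory_bytes("128Mi"))
```

So the example Pod requests 0.3 of a CPU core and 56 MiB of memory, and is capped at half a core and 128 MiB.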

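2) Limiting network bandwidth

A Pod's network bandwidth can be limited by adding the two annotations kubernetes.io/ingress-bandwidth and kubernetes.io/egress-bandwidth to the Pod.

At the time the original article was written, only the kubenet network plugin supported bandwidth limiting; other CNI network plugins did not yet support it.

    apiVersion: v1
    kind: Pod
    metadata:
      name: qos
      annotations:
        kubernetes.io/ingress-bandwidth: 3M
        kubernetes.io/egress-bandwidth: 4M
    spec:
      containers:
      - name: iperf3
        image: networkstatic/iperf3
        command:
        - iperf3
        - -s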

7. Init Container

Init containers run to completion before any of the regular containers start, and are commonly used to initialize configuration.

    [root@registry ~]# cat v3.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: init-demo
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: workdir
          mountPath: /usr/share/nginx/html
      initContainers:
      - name: install
        image: busybox
        command:
        - wget
        - "-O"
        - "/work-dir/index.html"
        - http://kubernetes.io
        volumeMounts:
        - name: workdir
          mountPath: "/work-dir"
      dnsPolicy: Default
      volumes:
      - name: workdir
        emptyDir: {}

II. Labels

1. Labels

A resource can carry multiple labels, and one label can be applied to multiple resources.

Every label can be matched by a label selector.

Labels can be defined when a resource is created (in the YAML or on the command line), or added afterwards with a command.

key=value

key: letters, digits, _, -, .

value: may be empty; otherwise it must begin and end with a letter or digit, and may contain letters, digits, _, - and . in between

    [root@master1 ~]# kubectl get pods --show-labels
    NAME                           READY   STATUS    RESTARTS   AGE     LABELS
    client                         1/1     Running   0          5d23h   run=client
    nginx-deloy-67f8c9dc5c-bgx9k   1/1     Running   0          5d22h   pod-template-hash=67f8c9dc5c,run=nginx-deloy

    # Add two extra output columns, one for label "run" and one for label "nginx"
    [root@master1 ~]# kubectl get pods -L run,nginx
    NAME                           READY   STATUS    RESTARTS   AGE     RUN           NGINX
    client                         1/1     Running   0          5d23h   client
    nginx-deloy-67f8c9dc5c-bgx9k   1/1     Running   0          5d22h   nginx-deloy

    # Pods that have the label pod-template-hash
    [root@master1 ~]# kubectl get pods -l pod-template-hash
    # Pods whose label run equals client
    [root@master1 ~]# kubectl get pods -l run=client
    NAME     READY   STATUS    RESTARTS   AGE
    client   1/1     Running   0          5d23h

    # Add a label
    [root@master1 ~]# kubectl label pods client name=label1
    # Force-change an existing label
    [root@master1 ~]# kubectl label pods client name=label2 --overwrite
    # Remove a label: append "-" to the key
    [root@master1 ~]# kubectl label pods client name-

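2. Label selectors:

Equality-based: =, ==, !=

Set-based:

KEY in (value1,value2,...) # kubectl get pods --show-labels -l "name in (label1,label2)"

KEY notin (value1,value2,...) # kubectl get pods --show-labels -l "name notin (label1,label2)"

KEY: the key exists

!KEY: the key does not exist # kubectl get pods --show-labels -l "! name"

Many resources embed a field that defines the label selector they use:

    [root@registry ~]# kubectl explain deployment.spec.selector
    matchLabels:       key/value pairs given directly
    matchExpressions:  {key: "KEY", operator: "OPERATOR", values: [VAL1,VAL2,VAL3...]}
      Operators:
        In, NotIn:             values must be a non-empty list
        Exists, DoesNotExist:  values must be an empty list

Labels can be attached to Pods, Nodes and other objects.

annotations: unlike labels, annotations cannot be used to select resource objects; they only provide "metadata" for an object. They can be defined in the YAML file and inspected with:

    [root@registry ~]# kubectl describe pods $pod_name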
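The matching rules for matchLabels and matchExpressions can be sketched in a few lines. This is an illustration of the selector semantics only, written under the assumption that all requirements are ANDed together; `selector_matches` is a hypothetical helper, not part of any Kubernetes client library.

```python
from typing import Dict, List


def selector_matches(labels: Dict[str, str],
                     match_labels: Dict[str, str],
                     match_expressions: List[dict]) -> bool:
    """Return True if `labels` satisfies every requirement (all are ANDed)."""
    for key, value in match_labels.items():
        if labels.get(key) != value:
            return False
    for expr in match_expressions:
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            # An object without the key also satisfies NotIn.
            ok = key not in labels or labels[key] not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True


if __name__ == "__main__":
    pod = {"app": "myapp", "release": "beta", "environment": "dev"}
    exprs = [{"key": "release", "operator": "In", "values": ["beta", "canary"]},
             {"key": "tier", "operator": "DoesNotExist"}]
    print(selector_matches(pod, {"app": "myapp"}, exprs))
```

The label values in the usage example are taken from the ReplicaSet listing later in the article; the `tier` key is made up.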

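III. Pod controllers

Pod controllers:

ReplicaSet: keeps the number of replicas at the state the user expects. It is the successor to the ReplicationController (RC), supports dynamic scaling, and is meant for stateless Pods. Core concepts: label selector, desired replica count, Pod template.

Deployment: works on top of ReplicaSet and adds features such as rolling updates. A Deployment is a higher-level concept that manages ReplicaSets and provides declarative updates to Pods along with many other features, so using Deployments is generally recommended.

DaemonSet: runs one Pod replica on every node in the cluster, or on every node that carries a given label.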

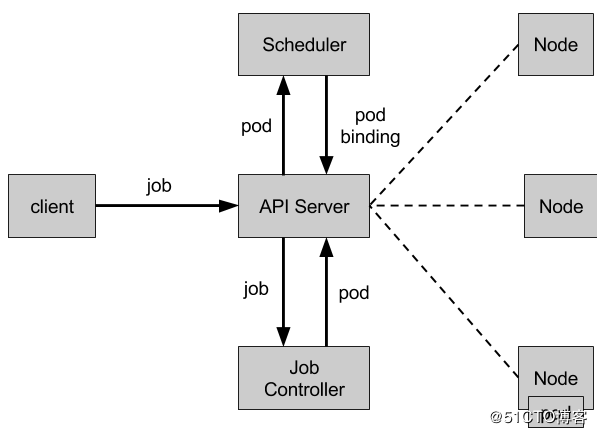

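Job: Pods that run only once. A Job makes sure the task runs to successful completion rather than ending abnormally.

CronJob: a periodic Job.

StatefulSet: for stateful Pods; the operational logic has to be customized per application.

TPR: ThirdPartyResource, available from 1.2, deprecated in 1.7

CRD: CustomResourceDefinition, 1.8+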

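1. Pod controller: ReplicaSet

ReplicaSet is the next-generation replica controller that replaces the Replication Controller. The only difference between the two is selector support: ReplicaSet also accepts set-based selectors.

A Replication Controller ensures that a specified number of Pod replicas is running at all times. If there are too many, it kills some; if there are too few, it creates new ones.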


    [root@master1 ~]# kubectl explain ReplicaSet    # or: kubectl explain rs
    [root@master1 yaml]# kubectl create -f rs.yaml
    replicaset.apps/myrs created
    [root@master1 yaml]# cat rs.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: myrs
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp
          release: beta
      template:
        metadata:
          name: myapp-pod
          labels:
            app: myapp
            release: beta
            environment: dev
        spec:
          containers:
          - name: myrs-container
            image: 192.168.192.234:888/nginx:latest
            ports:
            - name: http
              containerPort: 80

    [root@master1 yaml]# kubectl get pods -l app
    NAME         READY   STATUS    RESTARTS   AGE
    myrs-fnhwd   1/1     Running   0          61s
    myrs-x5zgh   1/1     Running   0          61s

If the labels app: myapp and release: beta are added to an existing Pod, the controller sees one Pod too many and kills one of the matching Pods:

    [root@master1 yaml]# kubectl get pods --show-labels
    NAME                           READY   STATUS    RESTARTS   AGE     LABELS
    client                         1/1     Running   0          10d     run=client
    liveness-httpget-pod           1/1     Running   1          4d      <none>
    myrs-fnhwd                     1/1     Running   0          5m5s    app=myapp,environment=dev,release=beta
    myrs-x5zgh                     1/1     Running   0          5m5s    app=myapp,environment=dev,release=beta
    nginx-deloy-67f8c9dc5c-bgx9k   1/1     Running   0          10d     pod-template-hash=67f8c9dc5c,run=nginx-deloy
    readiness-exec-pod             1/1     Running   0          3d23h   <none>

    [root@master1 yaml]# kubectl label pods liveness-httpget-pod app=myapp release=beta --overwrite
    pod/liveness-httpget-pod labeled

    # One of the myrs Pods has been killed
    [root@master1 ~]# kubectl get pods --show-labels -l app,release
    NAME                   READY   STATUS    RESTARTS   AGE     LABELS
    liveness-httpget-pod   1/1     Running   1          4d      app=myapp,release=beta
    myrs-x5zgh             1/1     Running   0          7m28s   app=myapp,environment=dev,release=beta
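The behaviour seen in this session (a relabelled Pod pushes the count to three, so one Pod is removed) follows from a simple count comparison. The sketch below is a deliberately reduced model with a hypothetical `reconcile` function; the real controller also takes owner references and Pod state into account, which is ignored here.

```python
from typing import Dict, List, Tuple


def reconcile(desired: int, pods: List[dict], selector: Dict[str, str]) -> Tuple[str, int]:
    """Compare desired replicas with matching Pods and return the action to take."""
    matching = [
        p for p in pods
        if all(p["labels"].get(k) == v for k, v in selector.items())
    ]
    diff = desired - len(matching)
    if diff > 0:
        return ("create", diff)   # too few Pods: create the missing ones
    if diff < 0:
        return ("delete", -diff)  # too many Pods: delete the surplus
    return ("none", 0)


if __name__ == "__main__":
    selector = {"app": "myapp", "release": "beta"}
    pods = [
        {"name": "myrs-fnhwd", "labels": {"app": "myapp", "release": "beta", "environment": "dev"}},
        {"name": "myrs-x5zgh", "labels": {"app": "myapp", "release": "beta", "environment": "dev"}},
        {"name": "liveness-httpget-pod", "labels": {"app": "myapp", "release": "beta"}},
    ]
    print(reconcile(2, pods, selector))
```

Which of the surplus Pods gets deleted is up to the controller; in the session above it happened to be myrs-fnhwd.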

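Ways to change the replica count:

1) the kubectl scale command

2) edit the YAML file, then run kubectl apply -f on it

3) kubectl edit ReplicaSet $rs_name

Upgrading: the container image version can also be changed with kubectl edit rs $rs_name, but that only changes the version recorded in the controller (the ReplicaSet). The new image is used only after a Pod is deleted and recreated.

A Deployment is built on top of ReplicaSets. It supports rolling upgrades and lets you control the update logic and strategy (the maximum and minimum number of Pods during an update).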

    [root@registry ~]# cat v3.yaml
    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: frontend-scaler
    spec:
      scaleTargetRef:
        kind: ReplicaSet
        name: frontend
      minReplicas: 3
      maxReplicas: 10
      targetCPUUtilizationPercentage: 50

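2. Pod controller: Deployment

1) Updating a Deployment's image

    [root@registry ~]# kubectl set image deployment/nginx-deployment nginx=nginx:1.9.1
    [root@registry ~]# kubectl edit deployment/nginx-deployment
    # Check the rollout status
    [root@registry ~]# kubectl rollout status deployment/nginx-deployment

A Deployment guarantees that only a limited number of Pods are down during an upgrade. By default, in the version used here, it ensures that at least one fewer than the desired number of Pods is up (at most one unavailable).

A Deployment also guarantees that only a limited number of Pods above the desired count are created. By default, in the version used here, it ensures that at most one more than the desired number of Pods is up (at most one surge). In apps/v1 both settings default to 25%.

    # Show the update strategy
    [root@registry work]# kubectl describe deployment $deployment_name
    RollingUpdateStrategy:  1 max unavailable, 25% max surge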

2) Update strategy

    [root@registry work]# kubectl explain deployment.spec.strategy    # image update strategy
    rollingUpdate        # only takes effect when type is RollingUpdate
      maxSurge           # maximum number of Pods above the desired count, as a percentage or an absolute number
      maxUnavailable     # maximum number of unavailable Pods
    type                 # "Recreate" or "RollingUpdate"; defaults to RollingUpdate

    [root@master1 yaml]# cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: dpv1
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          name: mydp
          version: dpv1
      template:
        metadata:
          labels:
            name: mydp
            version: dpv1
        spec:
          containers:
          - name: mydp
            image: 192.168.192.234:888/nginx:latest
            ports:
            - name: dpport
              containerPort: 80
      strategy:
        rollingUpdate:
          maxSurge: 1

    # apply can be run repeatedly; changes are applied automatically
    [root@master1 yaml]# kubectl apply -f deployment.yaml

Labels: Pods, ReplicaSets and Deployments can all carry labels.

    # "deployment" can be abbreviated to "deploy" (kubectl command line only)
    [root@master1 yaml]# kubectl explain deployment.metadata.labels
    [root@master1 yaml]# kubectl explain replicaSet.metadata.labels
    [root@master1 yaml]# kubectl explain pods.metadata.labels

    [root@master1 yaml]# kubectl get deployment --show-labels
    NAME   READY   UP-TO-DATE   AVAILABLE   AGE    LABELS
    dpv1   2/2     2            2           6m2s   <none>

    [root@master1 yaml]# kubectl get replicaSet --show-labels
    NAME              DESIRED   CURRENT   READY   AGE    LABELS
    dpv1-7b7df4f86c   2         2         2       6m5s   name=mydp,pod-template-hash=7b7df4f86c,version=dpv1

    [root@master1 yaml]# kubectl get pods --show-labels -l version=dpv1
    NAME                    READY   STATUS    RESTARTS   AGE     LABELS
    dpv1-7b7df4f86c-9sdk8   1/1     Running   0          6m11s   name=mydp,pod-template-hash=7b7df4f86c,version=dpv1
    dpv1-7b7df4f86c-nv6xv   1/1     Running   0          6m11s   name=mydp,pod-template-hash=7b7df4f86c,version=dpv1

The pod-template-hash label: when a Deployment creates or adopts a ReplicaSet, the Deployment controller automatically adds a pod-template-hash label to the Pods. Its purpose is to keep the Deployment's child ReplicaSets, and the names of their Pods, from clashing. The PodTemplate of the ReplicaSet is hashed, and the resulting hash is used as the label value. The label is added to the ReplicaSet selector, to the Pod template labels, and to every Pod the ReplicaSet manages.
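As a rough illustration of what maxSurge and maxUnavailable mean in numbers, the sketch below computes the Pod-count bounds during a rolling update. It assumes the documented rounding rules (a percentage for maxSurge is rounded up, a percentage for maxUnavailable is rounded down); the function names `resolve` and `rollout_bounds` are hypothetical.

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]


def resolve(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a "NN%" string into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        if round_up:
            return -(-replicas * percent // 100)  # integer ceiling
        return replicas * percent // 100          # integer floor
    return int(value)


def rollout_bounds(replicas: int,
                   max_surge: IntOrPercent = "25%",
                   max_unavailable: IntOrPercent = "25%") -> Tuple[int, int]:
    """Return (maximum total Pods, minimum available Pods) during a rollout."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    # 3 desired replicas with maxSurge 1 and maxUnavailable 1
    print(rollout_bounds(3, max_surge=1, max_unavailable=1))
    print(rollout_bounds(10))
```

With three desired replicas and a surge of one, up to four Pods may exist at the same time during the update.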

3) Modifying Pods

Change the image version in the YAML and you can watch the whole update process:

    [root@master1 ~]# kubectl get pods --show-labels -l version -w
    [root@master1 ~]# kubectl apply -f deployment.yaml
    deployment.apps/dpv1 configured

Check that the image update has taken effect:

    [root@master1 ~]# kubectl describe pods $pods
    [root@master1 ~]# kubectl get rs -o wide
    NAME              DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES                             SELECTOR
    dpv1-5778f9d958   2         2         2       11m   mydp         192.168.192.234:888/nginx:v2       name=mydp,pod-template-hash=5778f9d958,version=dpv1
    dpv1-7b7df4f86c   0         0         0       26m   mydp         192.168.192.234:888/nginx:latest   name=mydp,pod-template-hash=7b7df4f86c,version=dpv1

Two templates (ReplicaSets) are kept: one scaled to 0 and one scaled to 2.

    # Show the revision history
    [root@master1 yaml]# kubectl rollout history deployment dpv1
    deployment.extensions/dpv1
    REVISION  CHANGE-CAUSE
    1         <none>
    2         <none>

Changing the replica count:

Method 1: kubectl scale

Method 2: kubectl edit

Method 3: kubectl apply -f *.yaml

Method 4: kubectl patch, which applies the change as a patch

    [root@master1 yaml]# kubectl patch deployment dpv1 -p '{"spec":{"replicas":5}}'
    deployment.extensions/dpv1 patched

If only the image changes, you can also use:

    kubectl set image deployment dpv1 $container_name=$image_name

4) pause

Pausing a rollout lets you batch several modifications; once the rollout is resumed, all pending changes are applied together.

    [root@master1 yaml]# kubectl rollout pause $resource_name
    [root@master1 yaml]# kubectl set image deployment dpv1 mydp=192.168.192.234:888/nginx:v3 && kubectl rollout pause deployment dpv1

    # The rollout stops after one new Pod has been created
    [root@master1 ~]# kubectl get pods -o wide -l version -w
    NAME                    READY   STATUS              RESTARTS   AGE   IP             NODE      NOMINATED NODE   READINESS GATES
    dpv1-5778f9d958-2jn9b   1/1     Running             0          44m   172.30.200.5   master3   <none>           <none>
    dpv1-5778f9d958-bsctb   1/1     Running             0          22m   172.30.200.3   master3   <none>           <none>
    dpv1-5778f9d958-tq64f   1/1     Running             0          44m   172.30.72.5    master1   <none>           <none>
    dpv1-6fb8f5799f-286bg   0/1     Pending             0          0s    <none>         <none>    <none>           <none>
    dpv1-6fb8f5799f-286bg   0/1     Pending             0          0s    <none>         master2   <none>           <none>
    dpv1-6fb8f5799f-286bg   0/1     ContainerCreating   0          0s    <none>         master2   <none>           <none>
    dpv1-6fb8f5799f-286bg   1/1     Running             0          1s    172.30.56.4    master2   <none>           <none>

In a new terminal you will see 4 Pods although the desired count is 3:

    [root@master1 yaml]# kubectl get pods -l version
    NAME                    READY   STATUS    RESTARTS   AGE
    dpv1-5778f9d958-2jn9b   1/1     Running   0          46m
    dpv1-5778f9d958-bsctb   1/1     Running   0          24m
    dpv1-5778f9d958-tq64f   1/1     Running   0          46m
    dpv1-6fb8f5799f-286bg   1/1     Running   0          78s

    # Show the current rollout status
    [root@master1 yaml]# kubectl rollout status deployment dpv1
    Waiting for deployment "dpv1" rollout to finish: 1 out of 3 new replicas have been updated...

Resume the Deployment:

    [root@master1 ~]# kubectl rollout resume deployment dpv1
    deployment.extensions/dpv1 resumed

Rollback: undo. Without --to-revision it rolls back to the previous revision.

    # Roll back to revision 1
    [root@master1 ~]# kubectl rollout undo deployment dpv1 --to-revision=1
    # Maximum number of revisions a Deployment keeps in its history
    [root@registry work]# kubectl explain deploy.spec.revisionHistoryLimit

3. Pod controller: DaemonSet

A DaemonSet ensures that one replica of a Pod runs on every node. It is commonly used to deploy cluster-wide logging, monitoring or other system management applications. Typical uses include:

Log collection, for example fluentd, logstash

System monitoring, for example Prometheus Node Exporter, collectd, New Relic agent, Ganglia gmond

System programs, for example kube-proxy, kube-dns, glusterd, ceph

    [root@registry prometheus]# cat node-exporter-ds.yml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: kube-system
      labels:
        k8s-app: node-exporter
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v0.15.2
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
          version: v0.15.2
      updateStrategy:
        type: OnDelete
      template:
        metadata:
          labels:
            k8s-app: node-exporter
            version: v0.15.2
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-node-critical
          containers:
          - name: prometheus-node-exporter
            image: "prom/node-exporter:v0.15.2"
            imagePullPolicy: "IfNotPresent"
            args:
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            ports:
            - name: metrics
              containerPort: 9100
              hostPort: 9100
            volumeMounts:
            - name: proc
              mountPath: /host/proc
              readOnly: true
            - name: sys
              mountPath: /host/sys
              readOnly: true
            resources:
              limits:
                cpu: 10m
                memory: 50Mi
              requests:
                cpu: 10m
                memory: 50Mi
          hostNetwork: true
          hostPID: true
          volumes:
          - name: proc
            hostPath:
              path: /proc
          - name: sys
            hostPath:
              path: /sys

    [root@registry prometheus]# kubectl explain daemonset.spec
    updateStrategy          # Pod update strategy
    template                # Pod template
    selector                # label selector for the Pods it manages (matchExpressions, matchLabels)
    revisionHistoryLimit    # number of old revisions to keep
    minReadySeconds         # minimum seconds a new Pod must be ready before it counts as available

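4. Pod controllers: Job and CronJob

A Job handles short-lived one-off batch tasks, that is, tasks that run only once. It guarantees that one or more Pods of the batch task finish successfully.

Kubernetes supports the following kinds of Job:

1) Non-parallel Job: usually one Pod per Job. The Pod is restarted only if it fails; once it finishes successfully, the Job is done.

2) Job with a fixed completion count: starts multiple Pods, with .spec.parallelism controlling the degree of parallelism, until .spec.completions Pods have finished successfully.

3) Parallel Job with a work queue: set .spec.parallelism but not .spec.completions. The Job is considered successful when all Pods have finished and at least one has succeeded.

The Job controller creates Pods from the Job spec and keeps monitoring them until they finish successfully. If a Pod fails, the restartPolicy (only OnFailure and Never are supported, not Always) decides whether a new Pod is created to retry the task.

1) Job YAML definition

    [root@registry work]# cat job.yaml
    apiVersion: batch/v1
    kind: Job
    metadata:
      name: pi
    spec:
      completions: 2
      parallelism: 3
      template:
        metadata:
          name: nginx
        spec:
          containers:
          - name: nginx
            image: "192.168.192.234:888/nginx:latest"
            command: ["sh","-c","echo test for nginx && sleep 5"]
          restartPolicy: Never

2) CronJob

    [root@registry work]# cat cronjob.yaml
    # batch/v2alpha1 matches the cluster version used in this article; recent clusters serve CronJob as batch/v1
    apiVersion: batch/v2alpha1
    kind: CronJob
    metadata:
      name: hello
    spec:
      schedule: "*/1 * * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - name: hello
                image: 192.168.192.234:888/nginx:latest
                args:
                - /bin/sh
                - -c
                - date; echo Hello from the Kubernetes cluster && sleep 5
              restartPolicy: OnFailure

Schedule format: minute hour day-of-month month day-of-week. Supported characters include "*" (any value for that field) and "/" (every N units).

spec.schedule: the schedule on which the task runs, in Cron format

spec.jobTemplate: the task to run, in the same format as a Job

spec.startingDeadlineSeconds: the deadline for starting the task

spec.concurrencyPolicy: the concurrency policy; Allow, Forbid and Replace are supported

spec.suspend: when set to true, all subsequent executions are suspended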
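To show how a schedule such as "*/1 * * * *" is read, here is a small matcher for the five-field format. It is a sketch that covers only the two characters mentioned above ("*" and "*/n") plus plain numbers; ranges, lists and names are not handled, and the function names are invented for this example.

```python
# Lowest legal value of each field: minute, hour, day of month, month, day of week.
_FIELD_MINIMUMS = (0, 0, 1, 1, 0)


def field_matches(field: str, value: int, low: int) -> bool:
    """Match one cron field: "*", "*/n" or a literal number."""
    if field == "*":
        return True
    if field.startswith("*/"):
        step = int(field[2:])
        return (value - low) % step == 0  # steps are counted from the field minimum
    return int(field) == value


def schedule_matches(schedule: str, minute: int, hour: int,
                     day: int, month: int, weekday: int) -> bool:
    """Return True if the given time matches every field of the schedule."""
    fields = schedule.split()
    if len(fields) != 5:
        raise ValueError("a schedule needs exactly five fields")
    values = (minute, hour, day, month, weekday)
    return all(
        field_matches(f, v, low)
        for f, v, low in zip(fields, values, _FIELD_MINIMUMS)
    )


if __name__ == "__main__":
    # "*/1 * * * *" fires every minute
    print(schedule_matches("*/1 * * * *", 7, 3, 15, 6, 2))
    # "*/5 * * * *" fires only when the minute is a multiple of 5
    print(schedule_matches("*/5 * * * *", 7, 3, 15, 6, 2))
```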

IV. Service

A Service gives clients a fixed access address.

Kubernetes has three kinds of IP: node IP, Pod IP and cluster IP. DNS resolution of Services depends heavily on CoreDNS.

kube-proxy continuously watches the API server for any change to Service-related resources and adds the corresponding rules locally: api server ---[watch]--- kube-proxy

Service has three implementation models:

1. userspace: Client Pod [user space] --> service (iptables, kernel space) --> kube-proxy --> kube-proxy on the node that hosts the service --> the serving Pod. Scheduling is done by kube-proxy.

2. iptables: Client Pod --> service (iptables) --> server. Scheduling no longer goes through the kube-proxy process.

3. ipvs (version 1.11+): Client Pod --> service (ipvs) --> server. Required ipvs kernel modules: ip_vs_rr, ip_vs_wrr, ip_vs_sh, nf_conntrack_ipv4 (connection tracking). A dedicated option must be added: KUBE_PROXY_MODE=ipvs

Service types:

ClusterIP

NodePort: Client --> node_ip:node_port --> cluster_ip:cluster_port --> pod_ip:container_port

LoadBalancer: a cloud provider's load balancer

ExternalName: a domain name outside the cluster. FQDN: CNAME --> FQDN, so that an external domain name resolves through a name used inside the cluster

Note: ClusterIP, NodePort and LoadBalancer Services all need a cluster IP.

Headless service: has no cluster IP. ServiceName --> PodIP

1. YAML example of a Service

    [root@registry work]# cat v3.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
    spec:
      selector:
        matchLabels:
          name: nginx
          department: dev
      replicas: 2
      template:
        metadata:
          name: nginx
          labels:
            name: nginx
            department: dev
        spec:
          containers:
          - name: nginx
            image: 192.168.192.234:888/nginx:latest
            imagePullPolicy: IfNotPresent
          restartPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: test-nginx
    spec:
      ports:
      - name: http
        port: 8080
        targetPort: 80
        protocol: TCP
      - name: ssh
        port: 2222
        targetPort: 22
        protocol: TCP
      selector:
        name: nginx
        department: dev
      type: ClusterIP

    [root@registry work]# kubectl explain svc.spec
    clusterIP              # service IP, valid only inside the cluster
    externalIPs            # bring in external IPs
    healthCheckNodePort    # health check port
    ports                  # nodePort: port on the node; port: service port; targetPort: Pod port
    selector               # label selector
    type                   # service type: ExternalName, ClusterIP, NodePort, and LoadBalancer

ExternalName: makes a service outside the cluster usable from inside the cluster

ClusterIP: used inside the cluster

NodePort: reachable on the physical hosts' network

2. Command-line approach

    # With the kubectl version used here, "kubectl run" creates a Deployment
    [root@master1 ~]# kubectl run nginx-deloy --image=192.168.192.234:888/nginx:latest --port=80 --replicas=1
    # The Pod shows up under both the ReplicaSet and the Deployment; "describe" shows it is controlled by the ReplicaSet
    [root@master1 ~]# kubectl get pods -o wide
    NAME                           READY   STATUS    RESTARTS   AGE    IP            NODE      NOMINATED NODE   READINESS GATES
    nginx-deloy-67f8c9dc5c-4nk68   1/1     Running   0          3m5s   172.30.72.3   master1   <none>           <none>

The Pod runs on master1, and curl $pod_ip:80 works directly.

Problem 1: after kubectl delete pods $podname, the newly created Pod has a different name (and IP).

Solution: kubectl expose. Traffic to service_ip:service_port is DNATed to pod_ip:pod_port.

    Usage: kubectl expose (-f FILENAME | TYPE NAME) [--port=port] [--protocol=TCP|UDP|SCTP] [--target-port=number-or-name] [--name=name] [--external-ip=external-ip-of-service] [--type=type] [options]
    --type='': Type for this service: ClusterIP, NodePort, LoadBalancer, or ExternalName. Default is 'ClusterIP'.

In (-f FILENAME | TYPE NAME), TYPE is the controller type and NAME is the controller name. After expose, the Service provides a fixed IP, but it is usable only by client Pods inside the cluster.

To find a Pod's controller: kubectl describe pods $pod_name

    [root@master1 ~]# kubectl expose deployment nginx-deloy --name nginx1 --port=80 --target-port=80 --protocol=TCP
    service/nginx1 exposed
    [root@master1 ~]# kubectl get services
    NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    dnsutils-ds   NodePort    10.254.98.197   <none>        80:31707/TCP   2d5h
    kubernetes    ClusterIP   10.254.0.1      <none>        443/TCP        2d9h
    my-nginx      ClusterIP   10.254.1.216    <none>        80/TCP         2d5h
    nginx1        ClusterIP   10.254.63.79    <none>        80/TCP         11s

From within the Pod network, curl $service_ip:80 also works. Nodes outside the cluster cannot reach it.

3. DNS resolution

    [root@master1 ~]# kubectl get svc -n kube-system

The name resolution provided by kube-dns can resolve Services directly. By default, /etc/resolv.conf inside a container has a nameserver entry with the kube-dns IP and a search list, so incomplete names are completed automatically.

    [root@client /home/admin] #cat /etc/resolv.conf
    nameserver 10.244.0.2
    search default.svc.cluster.local svc.cluster.local cluster.local
    options ndots:5

On the client machine, ping nginx1 is automatically completed to nginx1.default.svc.cluster.local (10.254.63.79); the resolved address is the cluster IP.

    # This works
    [root@master1 ~]# dig -t A nginx1.default.svc.cluster.local @10.254.0.2

    kubectl describe service nginx     # shows the actual state
    kubectl get pods --show-labels
    kubectl edit service $service_name # edit the Service directly

After a Pod is deleted its IP changes, but the Service is still reachable through the cluster IP. The Service and its Pods are associated through the label selector.

    [root@master1 ~]# kubectl describe svc nginx1
    Name:              nginx1
    Namespace:         default
    Labels:            run=nginx-deloy
    Annotations:       <none>
    Selector:          run=nginx-deloy     # matches Pods labelled run=nginx-deloy
    Type:              ClusterIP
    IP:                10.254.63.79
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         172.30.200.3:80
    Session Affinity:  None
    Events:            <none>

    [root@master1 ~]# kubectl get pods --show-labels
    NAME                           READY   STATUS    RESTARTS   AGE   LABELS
    client                         1/1     Running   0          24m   run=client
    nginx-deloy-67f8c9dc5c-zpv4q   1/1     Running   0          38m   pod-template-hash=67f8c9dc5c,run=nginx-deloy

service --> endpoints --> pod. Kubernetes has an Endpoints resource, and curl $cluster_ip:80 reaches the Pods through it.

Resource record: SVC_NAME.NS_NAME.DOMAIN.LTD. For example: mysvc.default.svc.cluster.local.

4. Headless service

A headless Service has no clusterIP. Through the Service, the client gets the list of Pods selected by the label selector and decides for itself how to handle that list.

Defining a headless Service. A first attempt with an empty clusterIP does not produce a headless Service:

    [root@master1 yaml]# kubectl apply -f heaness.yaml
    service/headness created
    [root@master1 yaml]# cat heaness.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: headness
      namespace: default
    spec:
      clusterIP: ""
      ports:
      - name: mysrvport
        port: 80
        targetPort: 80
      selector:
        name: mydp
        version: dpv1

The value must be "None":

    [root@master1 yaml]# cat heaness.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: headness
      namespace: default
    spec:
      selector:
        name: mydp
        version: dpv1
      clusterIP: "None"
      ports:
      - name: mysrvport
        port: 80
        targetPort: 80

    [root@master1 yaml]# kubectl get svc
    NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    dnsutils-ds   NodePort    10.254.98.197   <none>        80:31707/TCP   12d
    headness      ClusterIP   None            <none>        80/TCP         9s     # must be "None"
    kubernetes    ClusterIP   10.254.0.1      <none>        443/TCP        13d
    mysvc         ClusterIP   10.254.0.88     <none>        80/TCP         38m

    # Returns three addresses
    [root@master1 yaml]# dig -t A headness.default.svc.cluster.local @172.30.200.2
    headness.default.svc.cluster.local. 5 IN A 172.30.72.6
    headness.default.svc.cluster.local. 5 IN A 172.30.56.4
    headness.default.svc.cluster.local. 5 IN A 172.30.200.3

    # A Service with a cluster IP resolves only to that cluster IP
    [root@master1 yaml]# dig -t A mysvc.default.svc.cluster.local @172.30.200.2
    mysvc.default.svc.cluster.local. 5 IN A 10.254.0.88
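The automatic completion of short names such as nginx1 comes from the search list and the ndots option in resolv.conf. The sketch below models the order in which a resolver would try names, assuming the usual rule that a name with fewer dots than ndots is tried against the search domains first. The function `candidate_names` is hypothetical and does no actual DNS lookups.

```python
from typing import List


def candidate_names(name: str, search: List[str], ndots: int = 5) -> List[str]:
    """Return the fully qualified names a resolver would try, in order."""
    if name.endswith("."):
        return [name]  # already absolute: the search list is not used
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = name + "."
    if name.count(".") >= ndots:
        # Enough dots: try the name as given first, then the search list.
        return [absolute] + expanded
    # Too few dots: walk the search list first, then try the name as given.
    return expanded + [absolute]


if __name__ == "__main__":
    search = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
    for fqdn in candidate_names("nginx1", search):
        print(fqdn)
```

With the resolv.conf shown above, the first candidate for nginx1 is nginx1.default.svc.cluster.local., which is the Service's record in the default namespace.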

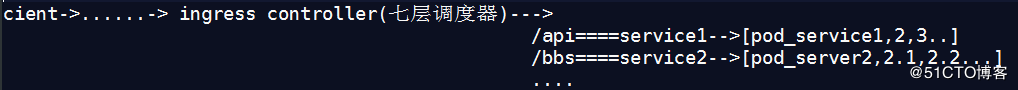

五、Ingress

1、ingress K8s暴露服务的方式:LoadBlancer Service、ExternalName、NodePort Service、Ingress Ingress Controller通过与Kubernetes API交互,动态的去感知集群中Ingress 规则变化,然后读取他,按照他自己模板生成一段 Nginx 配置,再写到 Nginx Pod 里,最后 reload 一下 四层 调度器 不负责建立会话(看工作模型nat,dr,fullnat,tunn) client需要与后端建立会话 七层的调度器: client 只需要和调度器建立连接调度器管理会话 Ingress的资源类型有以下几种: 1、单Service资源型Ingress #只设置spec.backend,不设置其他的规则 2、基于URL路径进行流量转发 #根据spec.rules.http.paths 区分对同一个站点的不同的url的请求,并转发到不同主机 3、基于主机名称的虚拟主机 #spec.rules.host 设置不同的host来区分不同的站点 4、TLS类型的Ingress资源 #通过Secret获取TLS私钥和证书 (名为 tls.crt 和 tls.key) Ingress controller #HAproxy/nginx/Traefik/Envoy (服务网格) 要调度的肯定不止一个服务,url 区分不同的虚拟主机,server,一个server定向不同的一组podKubernetes理论介绍系列(二) service使用label selector始终关心 watch自己的pod,一旦pod发生变化,自己也理解作出相应的改变 ingress controller 借助于service(headless)关注pod的状态变化,service会把状态变化及时反馈给ingress service对后端pod进行分类(headless),ingress在发现service分类的pod资源发生改变的时候,及时作出反应 ingress基于service对pod的分类,获取分类的pod ip列表,并注入ip列表信息到ingress中 创建ingress需要的步骤: 1、ingress controller 2、配置前端,server虚拟主机 3、根据service收集到的pod 信息,生成upstream server,反映在ingress并注册到ingress controller中 2、安装 安装步骤 #https://github.com/kubernetes/ingress-nginx/blob/master/docs/deploy/index.md 介绍:https://github.com/kubernetes/ingress-nginx: ingress-nginx 默认会监听所有的namespace,如果想要特定的监听--watch-namespace 如果单个host定义了不同路径,ingress会 合并配置 [root@master1 yaml]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml 内容包含: 1)创建Namespace[ingress-nginx] 2)创建ConfigMap[nginx-configuration]、ConfigMap[tcp-services]、ConfigMap[udp-services] 3)创建RoleBinding[nginx-ingress-role-nisa-binding]=Role[nginx-ingress-role]+ServiceAccount[nginx-ingress-serviceaccount] 4)创建ClusterRoleBinding[nginx-ingress-clusterrole-nisa-binding]=ClusterRole[nginx-ingress-clusterrole]+ServiceAccount[nginx-ingress-serviceaccount] 5)Deployment[nginx-ingress-controller]应用ConfigMap[nginx-configuration]、ConfigMap[tcp-services]、ConfigMap[udp-services]作为配置, [root@master1 yaml]# wget 
https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/baremetal/service-nodeport.yaml [root@master1 yaml]# cat service-nodeport.yaml #修改后 apiVersion: v1 kind: Service metadata: name: ingress-nginx namespace: ingress-nginx spec: type: NodePort ports: - name: http port: 80 targetPort: 80 protocol: TCP nodePort: 30080 - name: https port: 443 targetPort: 443 protocol: TCP nodePort: 30443 selector: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx [root@master1 yaml]# kubectl get svc -n ingress-nginx NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE ingress-nginx NodePort 10.254.90.250 <none> 80:30080/TCP,443:30443/TCP 10m 验证: [root@master1 ingress]# kubectl describe svc ingress-nginx -n ingress-nginx Name: ingress-nginx Namespace: ingress-nginx Labels: app.kubernetes.io/name=ingress-nginx app.kubernetes.io/part-of=ingress-nginx Annotations: kubectl.kubernetes.io/last-applied-configuration: {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/par... 
Selector:                 app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx
Type:                     NodePort
IP:                       10.254.90.250
Port:                     http  80/TCP
TargetPort:               80/TCP
NodePort:                 http  30080/TCP
Endpoints:                172.30.72.7:80
Port:                     https  443/TCP
TargetPort:               443/TCP
NodePort:                 https  30443/TCP
Endpoints:                172.30.72.7:443    //Endpoints must not be empty
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>

[root@master1 ingress]# curl $ingress_nginx_srv_Ip:80 #a 404 response means the controller is reachable and answering

To run the controller on all nodes, or on a subset of them, change the Deployment in that YAML to a DaemonSet and let it share the host's network namespace:
[root@master1 yaml]# kubectl explain DaemonSet.spec.template.spec.hostNetwork
[root@master1 yaml]# kubectl get pods -n ingress-nginx -o wide
NAME                                        READY   STATUS    RESTARTS   AGE     IP            NODE      NOMINATED NODE   READINESS GATES
nginx-ingress-controller-6c777cd89c-65xr4   1/1     Running   0          6m53s   172.30.72.7   master1   <none>           <none>
[root@master1 yaml]# curl -I 172.30.72.7/healthz #returns 200

3. Verify the installation
[root@registry ~]# kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx --watch
[root@registry ~]# POD_NAMESPACE=ingress-nginx
[root@registry ~]# POD_NAME=$(kubectl get pods -n $POD_NAMESPACE -l app.kubernetes.io/name=ingress-nginx -o jsonpath='{.items[0].metadata.name}')
[root@registry ~]# kubectl exec -it $POD_NAME -n $POD_NAMESPACE -- /nginx-ingress-controller --version

4. Create an Ingress

1) Create the Service and Deployment
[root@master1 ingress]# kubectl apply -f ingress.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysrv-v2
  namespace: default
spec:
  selector:
    name: mydpv2
    version: dpv2
  ports:
  - name: http
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dpv2
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      name: mydpv2
      version: dpv2
  template:
    metadata:
      labels:
        name: mydpv2
        version: dpv2
    spec:
      containers:
      - name: mydpv2
        image: 192.168.192.234:888/nginx:v2
        ports:
        - name: dpport
          containerPort: 80
  strategy:
    rollingUpdate:
      maxSurge: 1

[root@master1 ingress]# kubectl get pods -l name=mydpv2 --show-labels
NAME                    READY   STATUS    RESTARTS   AGE   LABELS
dpv2-697556c88f-pbjm9   1/1     Running   0          66s   name=mydpv2,pod-template-hash=697556c88f,version=dpv2
dpv2-697556c88f-sxr84   1/1     Running   0          66s   name=mydpv2,pod-template-hash=697556c88f,version=dpv2

2) Publish the Service through an Ingress
[root@master1 ingress]# kubectl apply -f ingress-myapp.yaml
[root@master1 ingress]# cat ingress-myapp.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-myapp
  namespace: default #must be the same namespace as the Deployment being published
  annotations:
    kubernetes.io/ingress.class: "nginx" #tells which ingress controller should handle this Ingress
spec:
  rules:
  - host: www.mt.com
    http:
      paths:
      - path:
        backend:
          serviceName: mysrv-v2
          servicePort: 80

Inspect the Ingress that the nginx controller picked up:
[root@master1 ingress]# kubectl describe ingress
Name:             ingress-myapp
Namespace:        default
Address:
Default backend:  default-http-backend:80 (<none>)
Rules:
  Host        Path  Backends
  ----        ----  --------
  www.mt.com
                    mysrv-v2:80 (172.30.56.6:80,172.30.72.8:80)
Annotations:
  kubectl.kubernetes.io/last-applied-configuration:  {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{"kubernetes.io/ingress.class":"nginx"},"name":"ingress-myapp","namespace":"default"},"spec":{"rules":[{"host":"www.mt.com","http":{"paths":[{"backend":{"serviceName":"mysrv-v2","servicePort":80},"path":null}]}}]}}
  kubernetes.io/ingress.class:  nginx
Events:
  Type    Reason  Age   From                      Message
  ----    ------  ----  ----                      -------
  Normal  CREATE  21s   nginx-ingress-controller  Ingress default/ingress-myapp
[root@master1 ingress]# curl www.mt.com:30080 //returns the page normally

3) HTTPS
[root@master1 ingress]# kubectl apply -f tomcat.yaml
service/tomcat created
deployment.apps/tomcat-deploy created
[root@master1 ingress]# cat tomcat.yaml
apiVersion: v1
kind: Service
metadata:
  name: tomcat
  namespace: default
spec:
  selector:
    app: tomcat
    release: canary
  ports:
  - name: http
    targetPort: 8080
    port: 8080
  - name: ajp
    targetPort: 8009
    port: 8009
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat
      release: canary
  template:
    metadata:
      labels:
        app: tomcat
        release: canary
    spec:
      containers:
      - name: myapp
        image: 192.168.192.234:888/tomcat:latest
        ports:
        - name: http
          containerPort: 8080
        - name: ajp
          containerPort: 8009

[root@master1 ingress]# kubectl describe svc tomcat
[root@master1 ingress]# cat ingress-tomcat.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: www.mt.com
    http:
      paths:
      - path:
        backend:
          serviceName: tomcat
          servicePort: 8080
[root@master1 ingress]# curl www.mt.com:30080 #verify; 30080 is the NodePort exposed by the nginx ingress Service

HTTPS (the certificate is injected into the pod through a Secret):
[root@master1 ssl]# openssl genrsa -out tls.key 2048
[root@master1 ssl]# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=HangZhou/L=HangZhou/O=DevOps/CN=www.mt.com
[root@master1 ssl]# kubectl create secret tls tomcat-ingress-secret --cert=tls.crt --key=tls.key
[root@master1 ingress]# kubectl describe secret tomcat-ingress-secret

Switch the Ingress to HTTPS:
[root@master1 ingress]# kubectl apply -f ingress-tomcat-tls.yaml
[root@master1 ingress]# cat ingress-tomcat-tls.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  tls:
  - hosts:
    - www.mt.com
    secretName: tomcat-ingress-secret
  rules:
  - host: www.mt.com
    http:
      paths:
      - path:
        backend:
          serviceName: tomcat
          servicePort: 8080

Log in to the ingress controller pod and check that the SSL configuration was generated correctly.
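
The Ingress manifests in this section use apiVersion extensions/v1beta1, which was removed in Kubernetes 1.22. On newer clusters the TLS rule for tomcat would look roughly like the sketch below. It reuses the host, Service and Secret names from this section; the file name ingress-tomcat-tls-v1.yaml is hypothetical, and ingressClassName: nginx assumes an IngressClass called nginx exists in the cluster.

```shell
# Sketch only: write a networking.k8s.io/v1 version of ingress-tomcat-tls.yaml.
# The output file name is a hypothetical choice.
cat > ingress-tomcat-tls-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
spec:
  ingressClassName: nginx          # assumes an IngressClass named "nginx"
  tls:
  - hosts:
    - www.mt.com
    secretName: tomcat-ingress-secret
  rules:
  - host: www.mt.com
    http:
      paths:
      - path: /
        pathType: Prefix           # required in v1
        backend:
          service:
            name: tomcat
            port:
              number: 8080
EOF
# On a live cluster: kubectl apply -f ingress-tomcat-tls-v1.yaml
```

The main differences from the v1beta1 form are the mandatory pathType and the nested backend.service.name / backend.service.port.number fields, which replace serviceName and servicePort.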

VI. ServiceAccount/UserAccount

Kubernetes has two independent account systems. User Accounts are for people; Service Accounts are for processes running inside Pods, so the two serve different audiences.
User Accounts are cluster-wide, while Service Accounts are scoped to a namespace.
Every namespace automatically gets a default service account.
The token controller watches for newly created service accounts and creates a Secret for each of them.

How a Pod client reaches the API server's secure HTTPS port:
1) The controller-manager uses the API server's private key to sign a token for the Pod.
2) When the Pod calls the API server, it passes the token in an HTTP header.
3) The API server checks the token's signature against the matching key to decide whether the token is valid (signature verification only needs the public half of the key pair).

[root@master1 merged]# kubectl exec -it nginx-587764dd97-29n2g -- ls -l /run/secrets/kubernetes.io/serviceaccount
total 0
lrwxrwxrwx 1 root root 13 Aug 9 10:02 ca.crt -> ..data/ca.crt
lrwxrwxrwx 1 root root 16 Aug 9 10:02 namespace -> ..data/namespace
lrwxrwxrwx 1 root root 12 Aug 9 10:02 token -> ..data/token

1. Create a ServiceAccount
[root@master1 merged]# kubectl create serviceaccount nginx
serviceaccount/nginx created
[root@master1 merged]# kubectl get serviceaccount nginx -o yaml #a Secret is created automatically
apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: "2019-08-10T13:37:38Z"
  name: nginx
  namespace: default
  resourceVersion: "1066770"
  selfLink: /api/v1/namespaces/default/serviceaccounts/nginx
  uid: 04af83c1-bb74-11e9-9c2a-00163e000999
secrets:
- name: nginx-token-pthgt

[root@master1 merged]# kubectl get secret nginx-token-pthgt -o yaml
apiVersion: v1
data:
  ca.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR4akNDQXE2Z0F3SUJBZ0lVSXJ4Q2diY2lGZXNUVGxuVU1heDlla3JDSmxjd0RRWUpLb1pJaHZjTkFRRUwKQlFBd2FURUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVJFd0R3WURWUVFIRXdoSQpZVzVuV21odmRURU1NQW9HQTFVRUNoTURhemh6TVJFd0R3WURWUVFMRXdoR2FYSnpkRTl1WlRFVE1CRUdBMVVFCkF4TUthM1ZpWlhKdVpYUmxjekFlRncweE9UQTRNREl4TVRNNU1EQmFGdzB5TWpBNE1ERXhNVE01TURCYU1Ha3gKQ3pBSkJnTlZCQVlUQWtOT01SRXdEd1lEVlFRSUV3aElZVzVuV21odmRURVJNQThHQTFVRUJ4TUlTR0Z1WjFwbwpiM1V4RERBS0JnTlZCQW9UQTJzNGN6RVJNQThHQTFVRUN4TUlSbWx5YzNSUGJtVXhFekFSQmdOVkJBTVRDbXQxClltVnlibVYwWlhNd2dnRWlNQTBHQ1NxR1NJYjNEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURKN1hueEVjWFAKS1hqZFpVY3VETVhQdFE5NzkxQjlvNDFFV05FWGNJbFZqM0NueVVuVmIxaHk1WittQzNGMGRtcGxGbTE3eTI2UQp6bThSV2RNZlh4MnlJZS9DSHJlV2o5Y3hMc0JLSm9xM0JvRmNLdmZqc1Z2dzIwaUZ1ZEEwektHREZEc01US3JYClQ3UUdGTEtveWM1N3BTS08yUGt5aG9OeDc2cHdDYllCMndpam4vRnRmMEYvTXpiTXBlR3E0WXkzK3VEUm9ubTIKeUI5TG1uOFRRT2NLb1ZWZHBPWDRIb1E5MGpkdy9EcUlzMUk4OXg3ZjNIZTBISDBGWkpzN3JJQ01TbG50QlR5RApWZkpaZ3N6YldYeHdzb25IeitzaVh3cnBTUDN6RGlmaWJLWHlmdUM1KzBreEJxWDNOQUZUVVFwVDErbTFmNEJwCnRCNTI3ZUpDdXdPakFnTUJBQUdqWmpCa01BNEdBMVVkRHdFQi93UUVBd0lCQmpBU0JnTlZIUk1CQWY4RUNEQUcKQVFIL0FnRUNNQjBHQTFVZERnUVdCQlQ3dmtPOUZOUGl2T0hhMjl2ODAzZFlrZGcvMERBZkJnTlZIU01FR0RBVwpnQlQ3dmtPOUZOUGl2T0hhMjl2ODAzZFlrZGcvMERBTkJna3Foa2lHOXcwQkFRc0ZBQU9DQVFFQVJFdTlSZmZMCmdDYS9kS3kvTU1sNmd5dHZMMzJPMlRnNko4RGw5L0ZJanJtaXpVeC9xV256b29wTGNzY0hRcC9rcEQ1QURLTDUKcmJkTXZCcFNicUVncTlaT2hYeHg5SCtVYnZiY3IwV3h3N2xEbTdKR0lUb2FhckVrckk2ZExXcVpqczl4bTBPdAowb3RETjcwaFkxaG0rWTNqQXQzanV3WVByUFcxb2RJM013L3ArWG9DWTR3bkcycDMyc1grNzdCVUl0eHhkeGY1CkJJWHoyWURMdzNLbXNScCtXdW1DaTNwSUV3bXFHbnJDNFhQUzhXbDdleGFZZkxDRWgwRjVQU2NYb1MxdjZGYjYKYlNrM0h2ZVRLNzduNDgwUXZ0blJFOVZVSGFMRkdBUm5SRkh0NDVRbS9teUc1dXdKbW9zVnlRajVZdVRSVjdTbwpSZE1DVEVXOHFESGp1UT09Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
  namespace: ZGVmYXVsdA==
  token: ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklpSjkuZXlKcGMzTWlPaUpyZFdKbGNtNWxkR1Z6TDNObGNuWnBZMlZoWTJOdmRXNTBJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5dVlXMWxjM0JoWTJVaU9pSmtaV1poZFd4MElpd2lhM1ZpWlhKdVpYUmxjeTVwYnk5elpYSjJhV05sWVdOamIzVnVkQzl6WldOeVpYUXVibUZ0WlNJNkltNW5hVzU0TFhSdmEyVnVMWEIwYUdkMElpd2lhM1ZpWlhKdVpYUmxjeTVwYnk5elpYSjJhV05sWVdOamIzVnVkQzl6WlhKMmFXTmxMV0ZqWTI5MWJuUXVibUZ0WlNJNkltNW5hVzU0SWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXpaWEoyYVdObExXRmpZMjkxYm5RdWRXbGtJam9pTURSaFpqZ3pZekV0WW1JM05DMHhNV1U1TFRsak1tRXRNREF4TmpObE1EQXdPVGs1SWl3aWMzVmlJam9pYzNsemRHVnRPbk5sY25acFkyVmhZMk52ZFc1ME9tUmxabUYxYkhRNmJtZHBibmdpZlEuVUpzV3pHTEloVHBkVWc4LTVweXlya0pkWnBJWEhpbFB6Nzk3Xy1qLW5nQVNxNFRjYkVrSFFkXzR1d1J6X2lkaGZvc0NUY2NxYW1oR1pzcU9PWldWWXBzeGdZbHY4aTRuQjJWNTJtaXN2OTZHTVFBb3FUVlE5aXBMNWN6VFY0cXlicGVyZ2w4WHpXU3B1SDhVTUVfQk5YRFZuZ0d4UGU2RUNTcU1qaEpWUDZjczVWTVFVZlp2dEl1dHRuRHhvWHlwdFN4RXNyaWJEUVNxNDQyeVYwU3hHRGpOMDZYZThtblNxamlpc0R2MmQ0c1NYNmpaczU0R3hlMlBQdUtWZjFoLUtRRzNKdDVwRTZic3FBcnJkcm83TDlJZHJRbkl6dUQ2QlJ5ZnRqTWdGcXVXVk9CeWNJcVkzaGY3Q0pmeThmNmJ0QXZrVi1XNUZFdE5TY3pKZXQ4SWZ3
kind: Secret
metadata:
  annotations:
    kubernetes.io/service-account.name: nginx
    kubernetes.io/service-account.uid: 04af83c1-bb74-11e9-9c2a-00163e000999
  creationTimestamp: "2019-08-10T13:37:38Z"
  name: nginx-token-pthgt
  namespace: default
  resourceVersion: "1066769"
  selfLink: /api/v1/namespaces/default/secrets/nginx-token-pthgt
  uid: 04b16e50-bb74-11e9-a28c-00163e000318
type: kubernetes.io/service-account-token

[root@master1 merged]# kubectl describe secret nginx-token-pthgt
Name:         nginx-token-pthgt
Namespace:    default
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: nginx
              kubernetes.io/service-account.uid: 04af83c1-bb74-11e9-9c2a-00163e000999
Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1371 bytes
namespace:  7 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6Im5naW54LXRva2VuLXB0aGd0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6Im5naW54Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMDRhZjgzYzEtYmI3NC0xMWU5LTljMmEtMDAxNjNlMDAwOTk5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6bmdpbngifQ.UJsWzGLIhTpdUg8-5pyyrkJdZpIXHilPz797_-j-ngASq4TcbEkHQd_4uwRz_idhfosCTccqamhGZsqOOZWVYpsxgYlv8i4nB2V52misv96GMQAoqTVQ9ipL5czTV4qybpergl8XzWSpuH8UME_BNXDVngGxPe6ECSqMjhJVP6cs5VMQUfZvtIuttnDxoXyptSxEsribDQSq442yV0SxGDjN06Xe8mnSqjiisDv2d4sSX6jZs54Gxe2PPuKVf1h-KQG3Jt5pE6bsqArrdro7L9IdrQnIzuD6BRyftjMgFquWVOBycIqY3hf7CJfy8f6btAvkV-W5FEtNSczJet8Ifw

Service Accounts give workloads a convenient authentication mechanism, but they do not deal with authorization. Combine them with RBAC to authorize a Service Account.
A Secret belongs to a Service Account object and is part of it; one Service Account can reference several different Secrets.

Kinds of Secret:
1) The token Secret, used to access the API server; also called the Service Account Secret.
2) imagePullSecrets, used to authenticate when pulling container images.
3) Other user-defined Secrets, used by the user's own processes.

2. Create a User Account
The rough steps are:
1) Generate a personal private key and a certificate signing request (CSR).
2) Have the cluster CA sign the CSR.
3) Write the signed certificate into a kubeconfig for convenient use.
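
The three user-account steps above can be sketched with openssl as follows. This is an illustration under assumptions, not the exact procedure from the referenced post: the function name sign_user_cert, the user name mt, the group dev and the cluster name kubernetes are all hypothetical, and the CA certificate and key paths depend on how the cluster was installed. With client-certificate authentication, the certificate's CN is used as the user name and O as the group.

```shell
# Sketch: issue a client certificate for a user account.
# Arguments: user name, group, path to the cluster CA cert, path to the CA key.
sign_user_cert() {
  user=$1 group=$2 ca_crt=$3 ca_key=$4
  # 1) private key and certificate signing request (CN = user, O = group)
  openssl genrsa -out "$user.key" 2048 || return 1
  openssl req -new -key "$user.key" -out "$user.csr" \
    -subj "/CN=$user/O=$group" || return 1
  # 2) the cluster CA signs the CSR
  openssl x509 -req -in "$user.csr" -CA "$ca_crt" -CAkey "$ca_key" \
    -CAcreateserial -out "$user.crt" -days 365
}

# 3) on a machine with kubectl, store the result in a kubeconfig
#    (the cluster name "kubernetes" is an assumption):
# kubectl config set-credentials mt --client-certificate=mt.crt --client-key=mt.key --embed-certs=true
# kubectl config set-context mt@kubernetes --cluster=kubernetes --user=mt
```

A call such as sign_user_cert mt dev ca.crt ca.key leaves mt.key, mt.csr and mt.crt in the current directory. The new user can authenticate afterwards but has no permissions until RBAC bindings are created for it.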

Reference: https://blog.51cto.com/hmtk520/2423253
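
Coming back to the service account token printed by kubectl describe secret in this section: it is a JWT, so its claims can be read without contacting the cluster. The helper below is a minimal sketch; the function name jwt_payload is hypothetical, it assumes a base64 command that accepts -d (GNU coreutils), and it only decodes the payload without verifying the signature.

```shell
# Print the payload (second dot-separated field) of a JWT.
# Decoding only: the signature is NOT checked.
jwt_payload() {
  # base64url -> base64: map the URL-safe characters back
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # restore the '=' padding that JWTs strip
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Tiny self-contained example: the middle field encodes {"a":1}
jwt_payload 'header.eyJhIjoxfQ.signature'
```

Against a live cluster the token can be fed in with jwt_payload "$(kubectl get secret nginx-token-pthgt -o jsonpath='{.data.token}' | base64 -d)". For the token shown in this section the payload should contain claims such as iss and sub, the latter identifying the account as system:serviceaccount:default:nginx.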

VII. Secret & ConfigMap

A ConfigMap stores configuration data as key/value pairs. It can hold individual properties or entire configuration files. A ConfigMap is very similar to a Secret, but it is the more convenient choice for strings that contain no sensitive information.

Ways to configure a containerized application:
1. Custom command-line arguments (command, args)
2. Baking the configuration file into the image
3. Environment variables
  1) Cloud Native applications can usually load their configuration directly from environment variables
  2) An entrypoint script can preprocess the configuration file from them
4. Volumes (configmap, secret)

[root@master1 yaml]# kubectl explain pods.spec.containers
[root@master1 yaml]# kubectl explain pods.spec.containers.envFrom #the source can be a ConfigMap
  configMapRef
  prefix
  secretRef
[root@master1 yaml]# kubectl explain pods.spec.containers.env.valueFrom
  configMapKeyRef    #take the value from a ConfigMap key
  fieldRef           #a field of the Pod itself, for example metadata.name, metadata.namespace ...
  resourceFieldRef
  secretKeyRef       #take the value from a Secret key

1. Create a ConfigMap
[root@master1 yaml]# kubectl create configmap nginx-configmap --from-literal=nginx_port=80 --from-literal=server_name=www.mt.com
[root@master1 configmap]# kubectl create configmap configmap-1 --from-file=www=./www.conf
configmap/configmap-1 created
[root@master1 configmap]# cat www.conf
server {
    server_name www.mt.com;
    listen 80;
    root /data/web/html;
}
[root@master1 configmap]# kubectl create configmap special-config --from-file=config/ #create from a directory
[root@master1 configmap]# kubectl describe configmap configmap-1 #inspect

2. Use a ConfigMap
A ConfigMap can be used to set environment variables, to set container command-line arguments, to create configuration files in a volume, and so on. The ConfigMap must exist before the Pod is created.
[root@registry work]# cat configmap.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-nginx
spec:
  containers:
  - name: test-container
    image: 192.168.192.234:888/nginx:latest
    env:
    - name: pod-port #name of the environment variable inside the pod; its value comes from nginx_port in nginx-configmap
      valueFrom:
        configMapKeyRef:
          name: nginx-configmap #the ConfigMap that holds the nginx_port key
          key: nginx_port
          optional: true #whether the reference is optional
    - name: pod_name
      valueFrom:
        configMapKeyRef:
          name: nginx-configmap
          key: server_name
    envFrom: #ConfigMaps to import; several can be listed, and every key of nginx-configmap is imported
    - configMapRef:
        name: nginx-configmap
  restartPolicy: Never

Modify the ConfigMap:
[root@master1 configmap]# kubectl edit configmap nginx-configmap
[root@master1 configmap]# kubectl describe configmap nginx-configmap #shows the modified value
The values inside the pod, however, stay unchanged: environment variables are only read when the container starts. Mounting the ConfigMap as a volume does pick up changes.
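
The nginx-configmap created with --from-literal in step 1 can also be kept as a manifest, which is easier to review and version. The following is a sketch of that declarative form; the file name nginx-configmap.yaml is a hypothetical choice.

```shell
# Sketch: declarative equivalent of
#   kubectl create configmap nginx-configmap \
#     --from-literal=nginx_port=80 --from-literal=server_name=www.mt.com
cat > nginx-configmap.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configmap
  namespace: default
data:
  nginx_port: "80"            # values must be strings, so the number is quoted
  server_name: www.mt.com
EOF
# On a live cluster: kubectl apply -f nginx-configmap.yaml
```

Quoting "80" matters: ConfigMap data values are strings, and an unquoted 80 would be parsed as an integer and rejected by the API server.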
3. Use a ConfigMap as a volume
[root@master1 configmap]# kubectl apply -f conf2.yaml
[root@master1 configmap]# cat conf2.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-cm1
  namespace: default
  labels:
    app: cm1
    release: centos
  annotations:
    www.mt.com/created-by: "mt"
spec:
  containers:
  - name: pod-cm1
    image: 192.168.192.225:80/csb-broker:latest
    ports:
    - name:
      containerPort: 80
    volumeMounts:
    - name: nginxconf
      mountPath: /etc/nginx/config.d/
      readOnly: true
  volumes:
  - name: nginxconf
    configMap:
      name: configmap-1

[root@pod-cm2 /home/admin] #cat /etc/nginx/config.d/nginx_port
8080
[root@pod-cm2 /home/admin] #cat /etc/nginx/config.d/server_name
www.mt.com

After kubectl edit configmap, the files inside the container change as well.
To mount only some of the key/value pairs (a ConfigMap may hold several), see:
[root@master1 configmap]# kubectl explain pods.spec.volumes.configMap

4. Secret
A Secret is similar to a ConfigMap and is used to store sensitive configuration.
Three common Secret types:
Opaque: a base64-encoded Secret for passwords, keys and similar data. The original value can be recovered with base64 --decode, so the protection is very weak.
kubernetes.io/dockerconfigjson: stores the credentials for a private docker registry.
kubernetes.io/service-account-token: referenced by a ServiceAccount. When a ServiceAccount is created, Kubernetes creates the matching Secret by default (true for the 2019 cluster shown here; Kubernetes 1.24 and later no longer create this Secret automatically). If a Pod uses a ServiceAccount, the corresponding Secret is mounted automatically into the Pod at /run/secrets/kubernetes.io/serviceaccount.

[root@master1 configmap]# kubectl create secret
Create a secret using specified subcommand.
Available Commands:
  docker-registry Create a secret for use with a Docker registry //credentials for connecting to a private registry
  generic         Create a secret from a local file, directory or literal value
  tls             Create a TLS secret //certificate and key

[root@master1 configmap]# kubectl create secret generic mysql-root-password --from-literal=password=password123
secret/mysql-root-password created
[root@master1 configmap]# kubectl get secret mysql-root-password
NAME                  TYPE     DATA   AGE
mysql-root-password   Opaque   1      8s
[root@master1 configmap]# kubectl describe secret mysql-root-password
[root@master1 configmap]# kubectl get secret mysql-root-password -o yaml
apiVersion: v1
data:
  password: cGFzc3dvcmQxMjM= //base64-encoded; decode with: echo cGFzc3dvcmQxMjM= | base64 -d
kind: Secret
metadata:
  creationTimestamp: "2019-07-05T08:55:48Z"
  name: mysql-root-password
  namespace: default
  resourceVersion: "2794776"
  selfLink: /api/v1/namespaces/default/secrets/mysql-root-password
  uid: af3180b4-9f02-11e9-8691-00163e000bdd
type: Opaque

Consuming a Secret: as a volume or as environment variables, in the same way as a ConfigMap.

Docker registry:
[root@registry ~]# kubectl create secret docker-registry myregistrykey --docker-server=DOCKER_REGISTRY_SERVER --docker-username=DOCKER_USER --docker-password=DOCKER_PASSWORD
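
To make the point about Opaque Secrets concrete, the round trip below reproduces the encoded password from the output above using nothing but base64. It is a small sketch that assumes a base64 command accepting -d (GNU coreutils); the variable names are only illustrative.

```shell
# What the API stores for --from-literal=password=password123
encoded=$(printf '%s' 'password123' | base64)
echo "$encoded"                                  # cGFzc3dvcmQxMjM=

# Anyone who can read the Secret can reverse it
decoded=$(printf '%s' "$encoded" | base64 -d)
echo "$decoded"                                  # password123

# Pitfall: echo appends a newline, which becomes part of the encoded value
with_newline=$(echo 'password123' | base64)
echo "$with_newline"                             # cGFzc3dvcmQxMjMK
```

The last case is a frequent source of login failures when Secret manifests are written by hand: the trailing newline is stored as part of the password, so printf '%s' (or echo -n where supported) is the safer way to produce the value.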

Reference blogs:

https://www.kubernetes.org.cn/kubernetes-pod

http://docs.kubernetes.org.cn/317.html#Pod-template-hash_label

https://kubernetes.io/docs/concepts/configuration/secret/

https://kubernetes.io/docs/concepts/services-networking/ingress-controllers/

https://kubernetes.io/docs/concept