I. Deploying a k8s cluster from binaries

1) Reference article

Blog: https://blog.qikqiak.com  Article: https://www.qikqiak.com/post/manual-install-high-available-kubernetes-cluster/

2) Environment architecture

master: 192.168.10.12, 192.168.10.22
etcd: acts as the cluster's database, so make it highly available where possible: 192.168.10.12 (etcd01), 192.168.10.22 (etcd02)

II. Creating the certificates

1) Modify the hosts file

[root@master01 ~]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.10.12 k8s-api.virtual.local

k8s-api.virtual.local is the address planned for highly available access later on. For now it temporarily points to master01 (192.168.10.12).

2) Define the environment variables

[root@master01 ~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '
0b340751863956f119cbc624465db92b
[root@master01 ~]# cat env.sh
BOOTSTRAP_TOKEN="0b340751863956f119cbc624465db92b"
SERVICE_CIDR="10.254.0.0/16"
CLUSTER_CIDR="172.30.0.0/16"
NODE_PORT_RANGE="30000-32766"
ETCD_ENDPOINTS="https://192.168.10.12:2379,https://192.168.10.22:2379"
FLANNEL_ETCD_PREFIX="/kubernetes/network"
CLUSTER_KUBERNETES_SVC_IP="10.254.0.1"
CLUSTER_DNS_SVC_IP="10.254.0.2"
CLUSTER_DNS_DOMAIN="cluster.local."
MASTER_URL="k8s-api.virtual.local"
[root@master01 ~]# mkdir -p /usr/k8s/bin
[root@master01 ~]# mv env.sh /usr/k8s/bin/

head -c 16 /dev/urandom | od -An -t x | tr -d ' ' generates the token value. The result is different on every run.
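As a small safeguard, the token step can be wrapped in two helper functions. One regenerates a token with the same pipeline. The other checks that a value has the shape that pipeline produces: 32 lowercase hex characters. The function names are hypothetical. The 32-hex check only reflects this generator, because kube-apiserver's static token file does not itself require that format.

```bash
#!/usr/bin/env bash
# Hypothetical helpers around the token pipeline shown above.

# Print a new random token: 16 bytes from /dev/urandom as 32 hex characters.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Succeed only if $1 is exactly 32 lowercase hex characters.
is_valid_token() {
  [[ $1 =~ ^[0-9a-f]{32}$ ]]
}

token=$(gen_bootstrap_token)
if is_valid_token "$token"; then
  echo "BOOTSTRAP_TOKEN=\"${token}\""
fi
```

After sourcing env.sh, is_valid_token "$BOOTSTRAP_TOKEN" catches a token that was truncated while being copied.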

3) Create the CA certificate and key

3.1) Download the certificate tools

[root@master01 ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
[root@master01 ~]# chmod +x cfssl_linux-amd64
[root@master01 ~]# mv cfssl_linux-amd64 /usr/k8s/bin/cfssl
[root@master01 ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
[root@master01 ~]# chmod +x cfssljson_linux-amd64
[root@master01 ~]# mv cfssljson_linux-amd64 /usr/k8s/bin/cfssljson
[root@master01 ~]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
[root@master01 ~]# chmod +x cfssl-certinfo_linux-amd64
[root@master01 ~]# mv cfssl-certinfo_linux-amd64 /usr/k8s/bin/cfssl-certinfo

[root@master01 ~]# chmod +x /usr/k8s/bin/cfssl*

3.2) Add the directory to PATH so the commands are easy to run

[root@master01 bin]# ls /usr/k8s/bin/
cfssl  cfssl-certinfo  cfssljson  env.sh
[root@master01 ~]# export PATH=/usr/k8s/bin/:$PATH
[root@master01 ~]# echo $PATH
/usr/k8s/bin/:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

Write the settings into the profile so they persist across logins:

[root@master01 ~]# cat .bash_profile
# .bash_profile

# Get the aliases and functions
if [ -f ~/.bashrc ]; then
        . ~/.bashrc
fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin
export PATH=/usr/k8s/bin/:$PATH   # added
source /usr/k8s/bin/env.sh        # added, needed later
export PATH
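Each time ~/.bash_profile is sourced again in the same shell, /usr/k8s/bin/ is prepended to PATH once more. Below is a minimal bash sketch of an idempotent alternative. path_prepend is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Prepend a directory to PATH unless it is already there
# (with or without a trailing slash), so re-sourcing is harmless.
path_prepend() {
  local dir=${1%/}    # /usr/k8s/bin/ -> /usr/k8s/bin
  case ":${PATH}:" in
    *":${dir}:"* | *":${dir}/:"*) ;;   # already present: do nothing
    *) PATH="${dir}:${PATH}" ;;
  esac
  export PATH
}
```

To use it in .bash_profile, define the function there and replace the export PATH=/usr/k8s/bin/:$PATH line with path_prepend /usr/k8s/bin.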

3.3) Create the default certificate files

[root@master01 ~]# mkdir ssl
[root@master01 ~]# cd ssl/
[root@master01 ssl]# cfssl print-defaults config > config.json
[root@master01 ssl]# cfssl print-defaults csr > csr.json
[root@master01 ssl]# ls
config.json  csr.json

3.5) Turn them into the files we need: ca-config.json and ca-csr.json

[root@master01 ssl]# cp config.json config.json.bak
[root@master01 ssl]# mv config.json ca-config.json
[root@master01 ssl]# cat ca-config.json    # edited to the following
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
[root@master01 ssl]# cp csr.json ca-csr.json
[root@master01 ssl]# mv csr.json csr.json.bak
[root@master01 ssl]# cat ca-csr.json    # edited to the following
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
[root@master01 ssl]# ls
ca-config.json  ca-csr.json  config.json.bak  csr.json.bak

3.6) Generate the CA certificate and key pair from the CSR

[root@master01 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca
2019/05/22 14:42:24 [INFO] generating a new CA key and certificate from CSR
2019/05/22 14:42:24 [INFO] generate received request
2019/05/22 14:42:24 [INFO] received CSR
2019/05/22 14:42:24 [INFO] generating key: rsa-2048
2019/05/22 14:42:24 [INFO] encoded CSR
2019/05/22 14:42:24 [INFO] signed certificate with serial number 205407174390394697284979809186701593413605316370
[root@master01 ssl]# ls ca*
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem
Note: ca.pem is the CA certificate, which carries the public key. ca-key.pem is the CA private key.
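Because the two files are easy to mix up, the PEM header is a quick way to tell them apart:

- certificates start with -----BEGIN CERTIFICATE-----
- CSRs start with -----BEGIN CERTIFICATE REQUEST-----
- keys start with a line ending in PRIVATE KEY-----

Below is a minimal bash sketch; pem_kind is a hypothetical helper. For full details, the cfssl-certinfo -cert ca.pem tool installed above shows the certificate contents. If openssl is installed, openssl x509 -in ca.pem -noout -subject -dates does the same.

```bash
#!/usr/bin/env bash
# Print what a PEM file holds, judged by its first "-----BEGIN ..." line.
pem_kind() {
  local header
  header=$(grep -m1 -e '-----BEGIN ' "$1") || { echo "not-pem"; return 1; }
  case $header in
    *"CERTIFICATE REQUEST"*) echo "csr" ;;          # check before CERTIFICATE
    *CERTIFICATE*)           echo "certificate" ;;
    *"PRIVATE KEY"*)         echo "private-key" ;;
    *)                       echo "unknown" ;;
  esac
}
```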

3.7) Copy the certificates to the k8s directory on every node

[root@master01 ssl]# mkdir -p /etc/kubernetes/ssl
[root@master01 ssl]# cp ca* /etc/kubernetes/ssl
[root@master01 ssl]# ls /etc/kubernetes/ssl
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem

Important: these files must be present on all k8s nodes.

III. Creating the etcd cluster

1) Set the environment variables

export NODE_NAME=etcd01
export NODE_IP=192.168.10.12
export NODE_IPS="192.168.10.12 192.168.10.22"
export ETCD_NODES=etcd01=https://192.168.10.12:2380,etcd02=https://192.168.10.22:2380

Execution:

[root@master01 ssl]# source /usr/k8s/bin/env.sh
[root@master01 ssl]# echo $ETCD_ENDPOINTS    # check the variable
https://192.168.10.12:2379,https://192.168.10.22:2379
[root@master01 ssl]# export NODE_NAME=etcd01
[root@master01 ssl]# export NODE_IP=192.168.10.12
[root@master01 ssl]# export NODE_IPS="192.168.10.12 192.168.10.22"
[root@master01 ssl]# export ETCD_NODES=etcd01=https://192.168.10.12:2380,etcd02=https://192.168.10.22:2380
[root@master01 ssl]# echo $NODE_NAME
etcd01
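ETCD_NODES and the ETCD_ENDPOINTS value in env.sh both come from the same list of IPs, so they can be generated from NODE_IPS instead of typed by hand. The sketch below assumes the etcd01, etcd02, ... naming used in this article and the default ports 2380 (peer) and 2379 (client). The function names are hypothetical.

```bash
#!/usr/bin/env bash
# "ip1 ip2" -> etcd01=https://ip1:2380,etcd02=https://ip2:2380 (for ETCD_NODES)
build_etcd_nodes() {
  local i=1 ip out=""
  for ip in $1; do
    out+="${out:+,}$(printf 'etcd%02d' "$i")=https://${ip}:2380"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}

# "ip1 ip2" -> https://ip1:2379,https://ip2:2379 (for ETCD_ENDPOINTS)
build_etcd_endpoints() {
  local ip out=""
  for ip in $1; do
    out+="${out:+,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}
```

Usage: export ETCD_NODES=$(build_etcd_nodes "$NODE_IPS")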

2) Install the etcd binaries, downloaded from GitHub

Project page: https://github.com/coreos/etcd. Look for the release packages at https://github.com/etcd-io/etcd/releases
[root@master01 ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.13/etcd-v3.3.13-linux-amd64.tar.gz
[root@master01 ~]# tar xf etcd-v3.3.13-linux-amd64.tar.gz
[root@master01 ~]# ls etcd-v3.3.13-linux-amd64
Documentation  etcd  etcdctl  README-etcdctl.md  README.md  READMEv2-etcdctl.md
[root@master01 ~]# cp etcd-v3.3.13-linux-amd64/etcd* /usr/k8s/bin/

3) Create the CSR JSON file for etcd

[root@master01 ~]# cd /root/ssl/
[root@master01 ssl]# cat > etcd-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "${NODE_IP}"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
Note: the shell replaces "${NODE_IP}" with the current node's IP from the environment variable, as the file contents show:
[root@master01 ssl]# cat etcd-csr.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.10.12"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
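The substitution happens because the heredoc delimiter EOF is unquoted, so the shell expands ${NODE_IP} while writing the file. Quoting the delimiter ('EOF') writes the text literally instead. If NODE_IP is not set, the unquoted form silently writes an empty string, so the sketch below guards it with ${NODE_IP:?...}. The temporary directory and file names are only for illustration.

```bash
#!/usr/bin/env bash
NODE_IP=192.168.10.12
# Abort with a message instead of writing "" if NODE_IP is unset or empty.
: "${NODE_IP:?NODE_IP must be set before writing the CSR}"
dir=$(mktemp -d)

# Unquoted delimiter: ${NODE_IP} is expanded while the file is written.
cat > "$dir/expanded.json" <<EOF
{ "CN": "etcd", "hosts": [ "127.0.0.1", "${NODE_IP}" ] }
EOF

# Quoted delimiter: the text is copied literally, ${NODE_IP} stays as-is.
cat > "$dir/literal.json" <<'EOF'
{ "CN": "etcd", "hosts": [ "127.0.0.1", "${NODE_IP}" ] }
EOF

cat "$dir/expanded.json" "$dir/literal.json"
```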

4) Create the etcd certificate and private key

Condensed steps:

$ cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
$ ls etcd*
etcd.csr  etcd-csr.json  etcd-key.pem  etcd.pem
$ sudo mkdir -p /etc/etcd/ssl
$ sudo mv etcd*.pem /etc/etcd/ssl/

Execution:

[root@master01 ssl]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
> -ca-key=/etc/kubernetes/ssl/ca-key.pem \
> -config=/etc/kubernetes/ssl/ca-config.json \
> -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
2019/05/22 17:52:42 [INFO] generate received request
2019/05/22 17:52:42 [INFO] received CSR
2019/05/22 17:52:42 [INFO] generating key: rsa-2048
2019/05/22 17:52:42 [INFO] encoded CSR
2019/05/22 17:52:42 [INFO] signed certificate with serial number 142898298242549096204648651997326366332634729441
2019/05/22 17:52:42 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
[root@master01 ssl]# ls etcd*
etcd.csr  etcd-csr.json  etcd-key.pem  etcd.pem
Note: etcd-key.pem is the private key and etcd.pem is the certificate.
[root@master01 ssl]# mkdir -p /etc/etcd/ssl
[root@master01 ssl]# mv etcd*.pem /etc/etcd/ssl/
[root@master01 ssl]# ll /etc/etcd/ssl/
total 8
-rw------- 1 root root 1679 May 22 17:52 etcd-key.pem
-rw-r--r-- 1 root root 1419 May 22 17:52 etcd.pem
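The ll output shows the private key readable only by root (-rw-------). Below is a small check for that, assuming GNU coreutils stat on Linux. check_key_perms is a hypothetical helper, and accepting both 600 and 400 is an assumption of this sketch.

```bash
#!/usr/bin/env bash
# Fail (and report the file) if a private key is readable by group/others.
check_key_perms() {
  local f mode rc=0
  for f in "$@"; do
    mode=$(stat -c '%a' "$f") || { rc=1; continue; }   # GNU stat: octal mode
    if [[ $mode != 600 && $mode != 400 ]]; then
      echo "insecure mode ${mode} on ${f}" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: check_key_perms /etc/etcd/ssl/etcd-key.pem /etc/kubernetes/ssl/ca-key.pem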

5) Create the systemd unit file for etcd

$ sudo mkdir -p /var/lib/etcd  # the working directory must be created first
$ cat > etcd.service <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/k8s/bin/etcd \
  --name=${NODE_NAME} \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --initial-advertise-peer-urls=https://${NODE_IP}:2380 \
  --listen-peer-urls=https://${NODE_IP}:2380 \
  --listen-client-urls=https://${NODE_IP}:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://${NODE_IP}:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=${ETCD_NODES} \
  --initial-cluster-state=new \
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
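Because the heredoc is unquoted, an unset variable (for example NODE_NAME in a fresh shell) becomes an empty string rather than an error. The unit then contains flags such as --name= or URLs such as https://:2380. Below is a hedged sanity check to run before moving etcd.service into place. The function name and the regular expression are assumptions of this sketch.

```bash
#!/usr/bin/env bash
# Fail if the unit file contains "--flag=" with an empty value
# (followed by a space or end of line) or a URL with an empty host ("://:").
check_unit_expanded() {
  if grep -nE -e '--[a-z-]+=( |$)|://:' "$1" >&2; then
    echo "unit file $1 has empty values; export the variables and regenerate it" >&2
    return 1
  fi
}
```

Usage: check_unit_expanded etcd.service && sudo mv etcd.service /etc/systemd/system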

6.1) Move etcd.service into place, then enable and start it

$ sudo mv etcd.service /etc/systemd/system
$ sudo systemctl daemon-reload
$ sudo systemctl enable etcd
$ sudo systemctl start etcd
$ sudo systemctl status etcd

6.2) Handling the startup error

[root@master01 ssl]# systemctl start etcd
Job for etcd.service failed because a timeout was exceeded. See "systemctl status etcd.service" and "journalctl -xe" for details.

Troubleshooting:

[root@master01 ssl]# systemctl status etcd.service -l
........
May 22 18:08:33 master01 etcd[9567]: health check for peer 21e3841b92f796be could not connect: dial tcp 192.168.10.22:2380: connect: connection refused (prober "ROUND_TRIPPER_RAFT_MESSAGE")
May 22 18:08:34 master01 etcd[9567]: 1af68d968c7e3f22 is starting a new election at term 199
May 22 18:08:34 master01 etcd[9567]: 1af68d968c7e3f22 became candidate at term 200
May 22 18:08:34 master01 etcd[9567]: 1af68d968c7e3f22 received MsgVoteResp from 1af68d968c7e3f22 at term 200
May 22 18:08:34 master01 etcd[9567]: 1af68d968c7e3f22 [logterm: 1, index: 2] sent MsgVote request to 21e3841b92f796be at term 200
May 22 18:08:36 master01 etcd[9567]: 1af68d968c7e3f22 is starting a new election at term 200
May 22 18:08:36 master01 etcd[9567]: 1af68d968c7e3f22 became candidate at term 201
May 22 18:08:36 master01 etcd[9567]: 1af68d968c7e3f22 received MsgVoteResp from 1af68d968c7e3f22 at term 201
May 22 18:08:36 master01 etcd[9567]: 1af68d968c7e3f22 [logterm: 1, index: 2] sent MsgVote request to 21e3841b92f796be at term 201
May 22 18:08:36 master01 etcd[9567]: publish error: etcdserver: request timed out

Cause: etcd also has to be installed and started on the other node. Once it is running there, this error goes away.

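The etcd process that starts first blocks for a while, waiting for the other nodes to start and join the cluster. Repeat the steps above on every etcd node until the etcd service is running on all machines.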
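Instead of re-running the check by hand while the other nodes come up, you can poll it with a small retry helper. This is a minimal bash sketch. retry is a hypothetical function, and the attempt count and delay in the usage line are arbitrary.

```bash
#!/usr/bin/env bash
# retry <attempts> <delay-seconds> <command...>
# Run the command until it succeeds or the attempts are used up.
retry() {
  local attempts=$1 delay=$2 n
  shift 2
  for ((n = 1; n <= attempts; n++)); do
    if "$@"; then
      return 0
    fi
    echo "attempt ${n}/${attempts} failed: $*" >&2
    (( n < attempts )) && sleep "$delay"
  done
  return 1
}
```

Usage: retry 30 2 env ETCDCTL_API=3 /usr/k8s/bin/etcdctl --endpoints=https://192.168.10.22:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem endpoint health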

7) Install etcd on the other node

7.1) Copy the files to the other node

[root@master01 usr]# zip -r k8s.zip k8s/
[root@master01 usr]# scp k8s.zip root@master02:/usr/
[root@master01 etc]# zip -r kubernetes.zip kubernetes/
[root@master01 etc]# scp kubernetes.zip root@master02:/etc/
Unzip both archives on master02, then check the result:
[root@master02 etc]# tree /usr/k8s/
/usr/k8s/
└── bin
    ├── cfssl
    ├── cfssl-certinfo
    ├── cfssljson
    ├── env.sh
    ├── etcd
    └── etcdctl
[root@master02 etc]# tree /etc/kubernetes/
/etc/kubernetes/
└── ssl
    ├── ca-config.json
    ├── ca.csr
    ├── ca-csr.json
    ├── ca-key.pem
    └── ca.pem
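You can also do the same copy without zip, preserving file modes, by copying the directories over ssh. The sketch below defaults to a dry run that only prints the commands. distribute and run are hypothetical helpers. Root ssh access to the other nodes is assumed, as in the scp commands above.

```bash
#!/usr/bin/env bash
# Execute a command, or only print it when DRY_RUN=1 (the default here).
run() {
  if [[ ${DRY_RUN:-1} == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# distribute <this-node-ip> <"ip1 ip2 ...">: copy binaries and CA files
# to every node except the one we are on; scp -p keeps file modes.
distribute() {
  local self=$1 ip
  for ip in $2; do
    [[ $ip == "$self" ]] && continue
    run ssh "root@${ip}" mkdir -p /usr/k8s /etc/kubernetes
    run scp -rp /usr/k8s/bin "root@${ip}:/usr/k8s/"
    run scp -rp /etc/kubernetes/ssl "root@${ip}:/etc/kubernetes/"
  done
}
```

Usage: review the output of distribute 192.168.10.12 "$NODE_IPS", then run DRY_RUN=0 distribute 192.168.10.12 "$NODE_IPS" to copy for real.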

7.2) Set the node variables on the other server

export NODE_NAME=etcd02
export NODE_IP=192.168.10.22
export NODE_IPS="192.168.10.12 192.168.10.22"
export ETCD_NODES=etcd01=https://192.168.10.12:2380,etcd02=https://192.168.10.22:2380
source /usr/k8s/bin/env.sh

export PATH=/usr/k8s/bin/:$PATH
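Only NODE_NAME and NODE_IP differ between nodes. With the same etcd01, etcd02, ... numbering as ETCD_NODES, the name can be derived from the node's position in NODE_IPS. node_name_for_ip is a hypothetical helper.

```bash
#!/usr/bin/env bash
# node_name_for_ip <ip> <"ip1 ip2 ...">: print etcdNN for the IP's position.
node_name_for_ip() {
  local target=$1 i=1 ip
  for ip in $2; do
    if [[ $ip == "$target" ]]; then
      printf 'etcd%02d\n' "$i"
      return 0
    fi
    i=$((i + 1))
  done
  echo "IP ${target} is not in the list" >&2
  return 1
}
```

Usage: export NODE_NAME=$(node_name_for_ip "$NODE_IP" "$NODE_IPS")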

7.3) Create the TLS certificate signing request for the other node

[root@master02 ~]# mkdir ssl
[root@master02 ~]# cd ssl/
[root@master02 ssl]# cat > etcd-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "${NODE_IP}"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

Generate the etcd certificate and private key:

$ cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
$ ls etcd*
etcd.csr  etcd-csr.json  etcd-key.pem  etcd.pem
$ sudo mkdir -p /etc/etcd/ssl
$ sudo mv etcd*.pem /etc/etcd/ssl/

[root@master02 ssl]# ls /etc/etcd/ssl/

etcd-key.pem etcd.pem

7.4) Create the systemd unit file for etcd

$ sudo mkdir -p /var/lib/etcd  # the working directory must be created first
$ cat > etcd.service <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/k8s/bin/etcd \
  --name=${NODE_NAME} \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --initial-advertise-peer-urls=https://${NODE_IP}:2380 \
  --listen-peer-urls=https://${NODE_IP}:2380 \
  --listen-client-urls=https://${NODE_IP}:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://${NODE_IP}:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=${ETCD_NODES} \
  --initial-cluster-state=new \
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

7.5) Start the etcd service

[root@master02 ssl]# mv etcd.service /etc/systemd/system
[root@master02 ssl]# systemctl daemon-reload
[root@master02 ssl]# systemctl enable etcd
[root@master02 ssl]# systemctl start etcd
[root@master02 ssl]# systemctl status etcd

Now go back to master01 and run systemctl status etcd to check its status.

8) Verify the etcd cluster by running the following command on any cluster node

for ip in ${NODE_IPS}; do
  ETCDCTL_API=3 /usr/k8s/bin/etcdctl \
    --endpoints=https://${ip}:2379 \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/etcd/ssl/etcd.pem \
    --key=/etc/etcd/ssl/etcd-key.pem \
    endpoint health
done

Output:

https://192.168.10.12:2379 is healthy: successfully committed proposal: took = 1.298547ms
https://192.168.10.22:2379 is healthy: successfully committed proposal: took = 2.740962ms
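To use this check in a script, reduce the output to pass/fail by counting the "is healthy" lines. Below is a minimal sketch; the function name is hypothetical. etcdctl may report unhealthy endpoints on stderr, so merge the loop's stderr into the pipe.

```bash
#!/usr/bin/env bash
# all_endpoints_healthy <expected-count>: read etcdctl output on stdin and
# succeed only if exactly that many endpoints report "is healthy".
all_endpoints_healthy() {
  local expected=$1 healthy
  healthy=$(grep -c ' is healthy' || true)   # grep -c prints 0 on no match
  [[ $healthy -eq $expected ]]
}
```

Usage: append 2>&1 | all_endpoints_healthy 2 && echo "etcd cluster OK" after the done of the loop above.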

IV. Setting up the master cluster

1) Download the files

https://github.com/kubernetes/kubernetes/
https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.9.md
wget https://dl.k8s.io/v1.9.10/kubernetes-server-linux-amd64.tar.gz
The package is large and cannot be downloaded directly from within mainland China.

2) Copy the kubernetes binaries into the k8s bin directory

[root@master01 ~]# tar xf kubernetes-server-linux-amd64.tar.gz
[root@master01 ~]# cd kubernetes
[root@master01 kubernetes]# cp -r server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler} /usr/k8s/bin/
[root@master01 kubernetes]# ls /usr/k8s/bin/kube-*
/usr/k8s/bin/kube-apiserver  /usr/k8s/bin/kube-controller-manager  /usr/k8s/bin/kube-scheduler

3) Create the kubernetes certificate

cat > kubernetes-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "${NODE_IP}",
    "${MASTER_URL}",
    "${CLUSTER_KUBERNETES_SVC_IP}",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

[root@master01 kubernetes]# cat kubernetes-csr.json
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.10.12",
    "k8s-api.virtual.local",
    "10.254.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

mv kubernetes-csr.json ../ssl/
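Two kinds of entries in the hosts list follow from env.sh:

- CLUSTER_KUBERNETES_SVC_IP is the first address of SERVICE_CIDR, because kube-apiserver assigns that address to the kubernetes service in the default namespace.
- The kubernetes.default.svc.* names are built from CLUSTER_DNS_DOMAIN without its trailing dot.

Below is a minimal bash sketch that derives both. All function names are hypothetical, and only IPv4 is handled.

```bash
#!/usr/bin/env bash
# Dotted IPv4 address <-> 32-bit integer
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# First usable address of a CIDR: network address + 1 (10.254.0.0/16 -> 10.254.0.1)
first_service_ip() {
  local net=${1%/*} bits=${1#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  int_to_ip $(( ($(ip_to_int "$net") & mask) + 1 ))
}

# In-cluster DNS names of the kubernetes service for a domain like "cluster.local."
kubernetes_dns_names() {
  local domain=${1%.}    # drop the trailing dot
  printf '%s\n' kubernetes kubernetes.default kubernetes.default.svc \
    "kubernetes.default.svc.${domain%%.*}" "kubernetes.default.svc.${domain}"
}
```

Usage: [ "$(first_service_ip "$SERVICE_CIDR")" = "$CLUSTER_KUBERNETES_SVC_IP" ] || echo "CLUSTER_KUBERNETES_SVC_IP does not match SERVICE_CIDR"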

4) Generate the kubernetes certificate and private key

$ cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
$ ls kubernetes*
kubernetes.csr  kubernetes-csr.json  kubernetes-key.pem  kubernetes.pem
$ sudo mkdir -p /etc/kubernetes/ssl/
$ sudo mv kubernetes*.pem /etc/kubernetes/ssl/

4.1) Execution

[root@master01 ssl]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
> -ca-key=/etc/kubernetes/ssl/ca-key.pem \
> -config=/etc/kubernetes/ssl/ca-config.json \
> -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
2019/05/27 19:57:35 [INFO] generate received request
2019/05/27 19:57:35 [INFO] received CSR
2019/05/27 19:57:35 [INFO] generating key: rsa-2048
2019/05/27 19:57:36 [INFO] encoded CSR
2019/05/27 19:57:36 [INFO] signed certificate with serial number 700523371489172612405920435814032644060474436709
2019/05/27 19:57:36 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
[root@master01 ssl]# ls kubernetes*
kubernetes.csr  kubernetes-csr.json  kubernetes-key.pem  kubernetes.pem
[root@master01 ssl]# ls /etc/kubernetes/ssl/
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem
[root@master01 ssl]# mv kubernetes*.pem /etc/kubernetes/ssl/
[root@master01 ssl]# ls /etc/kubernetes/ssl/
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem  kubernetes-key.pem  kubernetes.pem

5) Configure and start kube-apiserver

5.1) Create the client token file used by kube-apiserver

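When kubelet starts for the first time, it sends a TLS Bootstrapping request to kube-apiserver. kube-apiserver checks whether the token in the request matches the one configured in token.csv. If it does, the kubelet is authenticated as kubelet-bootstrap and can then obtain its certificate and key automatically.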

$ # The sourced env.sh file defines the BOOTSTRAP_TOKEN variable

$ cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
$ sudo mv token.csv /etc/kubernetes/

Execution:

[root@master01 ssl]# cat > token.csv <<EOF
> ${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
> EOF
[root@master01 ssl]# cat token.csv
0b340751863956f119cbc624465db92b,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
[root@master01 ssl]# mv token.csv /etc/kubernetes/
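Each line of the static token file has the form token,user,uid, followed by an optional quoted, comma-separated list of groups. The small sketch below builds the line used here and reads the token back so it can be compared with BOOTSTRAP_TOKEN. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Build the token.csv line used in this article: token,user,uid,"groups"
make_token_csv() {
  if [[ -z $1 ]]; then
    echo "usage: make_token_csv <token>" >&2
    return 1
  fi
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Print the token (first field) of the first line of a token file.
token_from_csv() {
  head -n 1 "$1" | cut -d, -f1
}
```

Usage: [ "$(token_from_csv /etc/kubernetes/token.csv)" = "$BOOTSTRAP_TOKEN" ] || echo "token.csv does not match BOOTSTRAP_TOKEN"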

5.2) The audit log policy file is as follows (/etc/kubernetes/audit-policy.yaml):

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]

  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]

  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]

  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"

  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]

  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]

  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.

  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

5.3) Test-run it from the command line

/usr/k8s/bin/kube-apiserver \
  --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --advertise-address=${NODE_IP} \
  --bind-address=0.0.0.0 \
  --insecure-bind-address=${NODE_IP} \
  --authorization-mode=Node,RBAC \
  --runtime-config=rbac.authorization.k8s.io/v1alpha1 \
  --kubelet-https=true \
  --enable-bootstrap-token-auth \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-cluster-ip-range=${SERVICE_CIDR} \
  --service-node-port-range=${NODE_PORT_RANGE} \
  --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --etcd-cafile=/etc/kubernetes/ssl/ca.pem \
  --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \
  --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \
  --etcd-servers=${ETCD_ENDPOINTS} \
  --enable-swagger-ui=true \
  --allow-privileged=true \
  --apiserver-count=2 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/lib/audit.log \
  --audit-policy-file=/etc/kubernetes/audit-policy.yaml \
  --event-ttl=1h \
  --logtostderr=true \
  --v=6
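When this test run fails, the cause is often an unset variable from env.sh or a missing certificate file. Below is a hedged preflight sketch to run first. check_vars and check_files are hypothetical helpers, and the file list in the usage lines simply mirrors the flags above.

```bash
#!/usr/bin/env bash
# Fail (and list the names) if any of the given variables is empty or unset.
check_vars() {
  local v rc=0
  for v in "$@"; do
    if [[ -z ${!v:-} ]]; then      # indirect expansion: value of the named variable
      echo "variable not set: ${v}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Fail (and list the paths) if any of the given files is missing or unreadable.
check_files() {
  local f rc=0
  for f in "$@"; do
    if [[ ! -r $f ]]; then
      echo "missing or unreadable: ${f}" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage:
check_vars NODE_IP SERVICE_CIDR NODE_PORT_RANGE ETCD_ENDPOINTS
check_files /etc/kubernetes/token.csv /etc/kubernetes/audit-policy.yaml /etc/kubernetes/ssl/{ca.pem,ca-key.pem,kubernetes.pem,kubernetes-key.pem}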

5.4) Create the systemd unit file for kube-apiserver, moving the command line that ran successfully above into it

cat > kube-apiserver.service <<EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
ExecStart=/usr/k8s/bin/kube-apiserver \
  --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --advertise-address=${NODE_IP} \
  --bind-address=0.0.0.0 \
  --insecure-bind-address=${NODE_IP} \
  --authorization-mode=Node,RBAC \
  --runtime-config=rbac.authorization.k8s.io/v1alpha1 \
  --kubelet-https=true \
  --enable-bootstrap-token-auth \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-cluster-ip-range=${SERVICE_CIDR} \
  --service-node-port-range=${NODE_PORT_RANGE} \
  --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --etcd-cafile=/etc/kubernetes/ssl/ca.pem \
  --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \
  --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \
  --etcd-servers=${ETCD_ENDPOINTS} \
  --enable-swagger-ui=true \
  --allow-privileged=true \
  --apiserver-count=2 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/lib/audit.log \
  --audit-policy-file=/etc/kubernetes/audit-policy.yaml \
  --event-ttl=1h \
  --logtostderr=true \
  --v=6
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

View the file:

[root@master01 ssl]# cat /root/ssl/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
ExecStart=/usr/k8s/bin/kube-apiserver --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota --advertise-address=192.168.10.12 --bind-address=0.0.0.0 --insecure-bind-address=192.168.10.12 --authorization-mode=Node,RBAC --runtime-config=rbac.authorization.k8s.io/v1alpha1 --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/etc/kubernetes/token.csv --service-cluster-ip-range=10.254.0.0/16 --service-node-port-range=30000-32766 --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem --client-ca-file=/etc/kubernetes/ssl/ca.pem --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem --etcd-cafile=/etc/kubernetes/ssl/ca.pem --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem --etcd-servers=https://192.168.10.12:2379,https://192.168.10.22:2379 --enable-swagger-ui=true --allow-privileged=true --apiserver-count=2 --audit-log-maxage=30 --audit-log-maxbackup=3 --audit-log-maxsize=100 --audit-log-path=/var/lib/audit.log --audit-policy-file=/etc/kubernetes/audit-policy.yaml --event-ttl=1h --logtostderr=true --v=6
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

5.5) Enable kube-apiserver at boot and start the service

[root@master01 ssl]# mv kube-apiserver.service /etc/systemd/system/
[root@master01 ssl]# systemctl daemon-reload
[root@master01 ssl]# systemctl enable kube-apiserver
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /etc/systemd/system/kube-apiserver.service.
[root@master01 ssl]# systemctl start kube-apiserver
[root@master01 ssl]# systemctl status kube-apiserver
● kube-apiserver.service - Kubernetes API Server
   Loaded: loaded (/etc/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)
   Active: active (running) since Mon 2019-05-27 20:52:20 CST; 15s ago
     Docs: https://github.com/GoogleCloudPlatform/kubernetes
 Main PID: 37700 (kube-apiserver)
   CGroup: /system.slice/kube-apiserver.service
           └─37700 /usr/k8s/bin/kube-apiserver --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota --advertise-address=192.168.10.12 --bind-address=0....

May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.558316 37700 handler.go:160] kube-aggregator: GET "/api/v1/namespaces/default/services/kubernetes" satisfied by nonGoRestful
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.558345 37700 pathrecorder.go:247] kube-aggregator: "/api/v1/namespaces/default/services/kubernetes" satisfied by prefix /api/
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.558363 37700 handler.go:150] kube-apiserver: GET "/api/v1/namespaces/default/services/kubernetes" satisfied by gorestfu...rvice /api/v1
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.563809 37700 wrap.go:42] GET /api/v1/namespaces/default/services/kubernetes: (5.592002ms) 200 [[kube-apiserver/v1.9.10 ....0.0.1:42872]
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.564282 37700 round_trippers.go:436] GET https://127.0.0.1:6443/api/v1/namespaces/default/services/kubernetes 200 OK in 6 milliseconds
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.565420 37700 handler.go:160] kube-aggregator: GET "/api/v1/namespaces/default/endpoints/kubernetes" satisfied by nonGoRestful
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.565545 37700 pathrecorder.go:247] kube-aggregator: "/api/v1/namespaces/default/endpoints/kubernetes" satisfied by prefix /api/
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.565631 37700 handler.go:150] kube-apiserver: GET "/api/v1/namespaces/default/endpoints/kubernetes" satisfied by gorestf...rvice /api/v1
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.572355 37700 wrap.go:42] GET /api/v1/namespaces/default/endpoints/kubernetes: (7.170178ms) 200 [[kube-apiserver/v1.9.10....0.0.1:42872]
May 27 20:52:30 master01 kube-apiserver[37700]: I0527 20:52:30.572846 37700 round_trippers.go:436] GET https://127.0.0.1:6443/api/v1/namespaces/default/endpoints/kubernetes 200 OK in 8 milliseconds
[root@master01 ssl]# systemctl status kube-apiserver -l    # -l shows the full, non-ellipsized lines

6) Configure and start kube-controller-manager

6.1) First test-start it from the command line

/usr/k8s/bin/kube-controller-manager \
  --address=127.0.0.1 \
  --master=http://${MASTER_URL}:8080 \
  --allocate-node-cidrs=true \
  --service-cluster-ip-range=${SERVICE_CIDR} \
  --cluster-cidr=${CLUSTER_CIDR} \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \
  --leader-elect=true \
  --v=2

It runs without errors, so create the unit file:

$ cat > kube-controller-manager.service <<EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/k8s/bin/kube-controller-manager \
  --address=127.0.0.1 \
  --master=http://${MASTER_URL}:8080 \
  --allocate-node-cidrs=true \
  --service-cluster-ip-range=${SERVICE_CIDR} \
  --cluster-cidr=${CLUSTER_CIDR} \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \
  --leader-elect=true \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

View the file:

[root@master01 ssl]# cat kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/k8s/bin/kube-controller-manager --address=127.0.0.1 --master=http://k8s-api.virtual.local:8080 --allocate-node-cidrs=true --service-cluster-ip-range=10.254.0.0/16 --cluster-cidr=172.30.0.0/16 --cluster-name=kubernetes --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem --root-ca-file=/etc/kubernetes/ssl/ca.pem --leader-elect=true --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
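kube-controller-manager receives both the service range (--service-cluster-ip-range) and the pod range (--cluster-cidr), and the two must not overlap. Below is a quick IPv4 check for the values in env.sh. cidrs_overlap is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Dotted IPv4 address -> 32-bit integer
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if two IPv4 CIDRs share any address: compare both network
# addresses under the shorter (wider) of the two prefixes.
cidrs_overlap() {
  local a_net=${1%/*} a_bits=${1#*/} b_net=${2%/*} b_bits=${2#*/}
  local bits=$(( a_bits < b_bits ? a_bits : b_bits ))
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$a_net") & mask) == ($(ip_to_int "$b_net") & mask) ))
}
```

Usage: cidrs_overlap "$SERVICE_CIDR" "$CLUSTER_CIDR" && echo "SERVICE_CIDR and CLUSTER_CIDR overlap"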

6.2) Enable kube-controller-manager.service at boot

[root@master01 ssl]# mv kube-controller-manager.service /etc/systemd/system
[root@master01 ssl]# systemctl daemon-reload
[root@master01 ssl]# systemctl enable kube-controller-manager.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /etc/systemd/system/kube-controller-manager.service.
[root@master01 ssl]# systemctl start kube-controller-manager.service
[root@master01 ssl]# systemctl status kube-controller-manager.service
● kube-controller-manager.service - Kubernetes Controller Manager
   Loaded: loaded (/etc/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)
   Active: active (running) since Mon 2019-05-27 21:04:35 CST; 13s ago
     Docs: https://github.com/GoogleCloudPlatform/kubernetes
 Main PID: 37783 (kube-controller)
   CGroup: /system.slice/kube-controller-manager.service
           └─37783 /usr/k8s/bin/kube-controller-manager --address=127.0.0.1 --master=http://k8s-api.virtual.local:8080 --allocate-node-cidrs=true --service-cluster-ip-range=10.254.0.0/16 --cluster-c...

May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.333573 37783 controller_utils.go:1026] Caches are synced for cidrallocator controller
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.353933 37783 controller_utils.go:1026] Caches are synced for certificate controller
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.407102 37783 controller_utils.go:1026] Caches are synced for certificate controller
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.424111 37783 controller_utils.go:1026] Caches are synced for job controller
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.490109 37783 controller_utils.go:1026] Caches are synced for resource quota controller
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.506266 37783 controller_utils.go:1026] Caches are synced for garbage collector controller
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.506311 37783 garbagecollector.go:144] Garbage collector: all resource monitors have synced. Proceeding to collect garbage
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.524898 37783 controller_utils.go:1026] Caches are synced for resource quota controller
May 27 21:04:47 master01 kube-controller-manager[37783]: I0527 21:04:47.525473 37783 controller_utils.go:1026] Caches are synced for persistent volume controller
May 27 21:04:48 master01 kube-controller-manager[37783]: I0527 21:04:48.444031 37783 garbagecollector.go:190] syncing garbage collector with updated resources from discovery: map[{ v1...:{} {rbac.au

7) Configure and start kube-scheduler

7.1) Test from the command line that the command works

/usr/k8s/bin/kube-scheduler --address=127.0.0.1 --master=http://${MASTER_URL}:8080 --leader-elect=true --v=2

If it works, create the kube-scheduler.service file:

$ cat > kube-scheduler.service <<EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/k8s/bin/kube-scheduler \
  --address=127.0.0.1 \
  --master=http://${MASTER_URL}:8080 \
  --leader-elect=true \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

View the file:

[root@master01 ssl]# cat kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/k8s/bin/kube-scheduler --address=127.0.0.1 --master=http://k8s-api.virtual.local:8080 --leader-elect=true --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
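The controller-manager and scheduler units differ only in description, binary and flags, so one function could generate both. Below is a sketch under that assumption. write_unit is hypothetical. It writes the flags on a single ExecStart line, matching the cat output above. It does not add the After=, Type=notify or LimitNOFILE= settings that the kube-apiserver unit uses.

```bash
#!/usr/bin/env bash
# write_unit <output-file> <description> <binary> [flags...]
write_unit() {
  local out=$1 desc=$2 bin=$3
  shift 3
  {
    printf '[Unit]\nDescription=%s\n' "$desc"
    printf 'Documentation=https://github.com/GoogleCloudPlatform/kubernetes\n\n'
    printf '[Service]\nExecStart=%s' "$bin"
    (( $# > 0 )) && printf ' %s' "$@"        # flags, space-separated
    printf '\nRestart=on-failure\nRestartSec=5\n\n'
    printf '[Install]\nWantedBy=multi-user.target\n'
  } > "$out"
}
```

Usage: write_unit kube-scheduler.service "Kubernetes Scheduler" /usr/k8s/bin/kube-scheduler --address=127.0.0.1 --master=http://${MASTER_URL}:8080 --leader-elect=true --v=2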

7.2) Enable kube-scheduler at boot and start it

[root@master01 ssl]# mv kube-scheduler.service /etc/systemd/system
[root@master01 ssl]# systemctl daemon-reload
[root@master01 ssl]# systemctl enable kube-scheduler
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /etc/systemd/system/kube-scheduler.service.
[root@master01 ssl]# systemctl start kube-scheduler
[root@master01 ssl]# systemctl status kube-scheduler
● kube-scheduler.service - Kubernetes Scheduler
   Loaded: loaded (/etc/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)
   Active: active (running) since Mon 2019-05-27 21:13:42 CST; 17s ago
     Docs: https://github.com/GoogleCloudPlatform/kubernetes
 Main PID: 37850 (kube-scheduler)
   CGroup: /system.slice/kube-scheduler.service
           └─37850 /usr/k8s/bin/kube-scheduler --address=127.0.0.1 --master=http://k8s-api.virtual.local:8080 --leader-elect=true --v=2
May 27 21:13:42 master01 systemd[1]: Starting Kubernetes Scheduler...
May 27 21:13:42 master01 kube-scheduler[37850]: W0527 21:13:42.436689 37850 server.go:162] WARNING: all flags than --config are deprecated. Please begin using a config file ASAP.
May 27 21:13:42 master01 kube-scheduler[37850]: I0527 21:13:42.438090 37850 server.go:554] Version: v1.9.10
May 27 21:13:42 master01 kube-scheduler[37850]: I0527 21:13:42.438222 37850 factory.go:837] Creating scheduler from algorithm provider 'DefaultProvider'
May 27 21:13:42 master01 kube-scheduler[37850]: I0527 21:13:42.438231 37850 factory.go:898] Creating scheduler with fit predicates 'map[MaxEBSVolumeCount:{} MaxGCEPDVolumeCount:{} Ge... MatchInterPo
May 27 21:13:42 master01 kube-scheduler[37850]: I0527 21:13:42.438431 37850 server.go:573] starting healthz server on 127.0.0.1:10251
May 27 21:13:43 master01 kube-scheduler[37850]: I0527 21:13:43.243364 37850 controller_utils.go:1019] Waiting for caches to sync for scheduler controller
May 27 21:13:43 master01 kube-scheduler[37850]: I0527 21:13:43.345039 37850 controller_utils.go:1026] Caches are synced for scheduler controller
May 27 21:13:43 master01 kube-scheduler[37850]: I0527 21:13:43.345105 37850 leaderelection.go:174] attempting to acquire leader lease...
May 27 21:13:43 master01 kube-scheduler[37850]: I0527 21:13:43.359717 37850 leaderelection.go:184] successfully acquired lease kube-system/kube-scheduler
Hint: Some lines were ellipsized, use -l to show in full.

8) Configure the kubectl command-line tool

8.1) Set the environment variable

$ export KUBE_APISERVER="https://${MASTER_URL}:6443"

Persist the environment variables in the shell profile:

[root@master01 ssl]# cat ~/.bash_profile
# .bash_profile
# Get the aliases and functions
if [ -f ~/.bashrc ]; then
        . ~/.bashrc
fi
# User specific environment and startup programs
PATH=$PATH:$HOME/bin
export PATH=/usr/k8s/bin/:$PATH
export NODE_IP=192.168.10.12
source /usr/k8s/bin/env.sh
export KUBE_APISERVER="https://${MASTER_URL}:6443"
export PATH
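Every later step depends on these variables being loaded. The Python sketch below is illustrative only (parse_env and kube_apiserver are made-up helper names): it reads the simple KEY="value" lines of env.sh and derives KUBE_APISERVER the same way the profile does. It assumes env.sh contains only literal assignments, with no variable references or command substitutions.

```python
import re

# Matches simple KEY="value" (or KEY=value) assignments, optionally prefixed by export.
ASSIGN = re.compile(r'^\s*(?:export\s+)?([A-Za-z_][A-Za-z0-9_]*)="?([^"]*)"?\s*$')

# A subset of the env.sh created at the start of this walkthrough.
SAMPLE_ENV = """\
SERVICE_CIDR="10.254.0.0/16"
CLUSTER_CIDR="172.30.0.0/16"
ETCD_ENDPOINTS="https://192.168.10.12:2379,https://192.168.10.22:2379"
MASTER_URL="k8s-api.virtual.local"
"""


def parse_env(text: str) -> dict:
    """Collect KEY -> value pairs, ignoring blank lines and comments."""
    env = {}
    for line in text.splitlines():
        if not line.strip() or line.lstrip().startswith("#"):
            continue
        match = ASSIGN.match(line)
        if match:
            env[match.group(1)] = match.group(2)
    return env


def kube_apiserver(env: dict) -> str:
    # Same expansion as: export KUBE_APISERVER="https://${MASTER_URL}:6443"
    return f"https://{env['MASTER_URL']}:6443"


if __name__ == "__main__":
    print(kube_apiserver(parse_env(SAMPLE_ENV)))
```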

8.2) Install the kubectl binary

[root@master01 ~]# ls kubernetes/server/bin/kubectl
kubernetes/server/bin/kubectl
[root@master01 ~]# cp kubernetes/server/bin/kubectl /usr/k8s/bin/
[root@master01 ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.10", GitCommit:"098570796b32895c38a9a1c9286425fb1ececa18", GitTreeState:"clean", BuildDate:"2018-08-02T17:19:54Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}
The connection to the server localhost:8080 was refused - did you specify the right host or port?

The connection error is expected at this point: no kubeconfig exists yet, so kubectl falls back to localhost:8080.

8.3) Create the admin certificate signing request

$ cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

Notes:

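kube-apiserver uses RBAC to authorize requests from clients such as kubelet, kube-proxy and Pods. It predefines a number of RBAC bindings; for example, the ClusterRoleBinding cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API.

The O field sets the certificate's Group to system:masters. When kubectl accesses kube-apiserver with this certificate, authentication succeeds because the certificate is signed by the CA, and because the certificate's group is the pre-authorized system:masters, the client is granted access to all APIs.

The hosts field is an empty list.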
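The identity lives in two CSR fields: with client-certificate authentication, kube-apiserver takes the certificate's CN as the user name and each O as a group. The Python sketch below (make_csr and identity are hypothetical helpers, not part of cfssl) builds a request in the same shape as admin-csr.json and reads that identity back; the same check applies to the flanneld and kube-proxy requests later on.

```python
import json


def make_csr(cn: str, org: str) -> dict:
    """Build a cfssl CSR request in the same shape as admin-csr.json."""
    return {
        "CN": cn,
        "hosts": [],  # empty: a client certificate, not bound to any host name
        "key": {"algo": "rsa", "size": 2048},
        "names": [
            {"C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": org, "OU": "System"}
        ],
    }


def identity(csr: dict) -> tuple:
    """(user, groups) as kube-apiserver derives them: CN -> user, O -> groups."""
    groups = [name["O"] for name in csr["names"] if "O" in name]
    return csr["CN"], groups


if __name__ == "__main__":
    csr = make_csr("admin", "system:masters")
    print(json.dumps(csr, indent=2))
    print(identity(csr))  # ('admin', ['system:masters'])
```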

8.4) Generate the admin certificate and private key

$ cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes admin-csr.json | cfssljson -bare admin
$ ls admin*
admin.csr  admin-csr.json  admin-key.pem  admin.pem
$ sudo mv admin*.pem /etc/kubernetes/ssl/

8.5) Create the kubectl kubeconfig file

# Set the cluster parameters
$ kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER}
# Set the client authentication parameters
$ kubectl config set-credentials admin \
  --client-certificate=/etc/kubernetes/ssl/admin.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/ssl/admin-key.pem \
  --token=${BOOTSTRAP_TOKEN}
# Set the context parameters
$ kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin
# Set the default context
$ kubectl config use-context kubernetes

Actual run:

[root@master01 ssl]# echo $KUBE_APISERVER
https://k8s-api.virtual.local:6443
[root@master01 ssl]# kubectl config set-cluster kubernetes \
> --certificate-authority=/etc/kubernetes/ssl/ca.pem \
> --embed-certs=true \
> --server=${KUBE_APISERVER}
Cluster "kubernetes" set.
[root@master01 ssl]# echo $BOOTSTRAP_TOKEN
0b340751863956f119cbc624465db92b
[root@master01 ssl]# kubectl config set-credentials admin \
> --client-certificate=/etc/kubernetes/ssl/admin.pem \
> --embed-certs=true \
> --client-key=/etc/kubernetes/ssl/admin-key.pem \
> --token=${BOOTSTRAP_TOKEN}
User "admin" set.
[root@master01 ssl]# kubectl config set-context kubernetes \
> --cluster=kubernetes \
> --user=admin
Context "kubernetes" created.
[root@master01 ssl]# kubectl config use-context kubernetes
Switched to context "kubernetes".
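With --embed-certs=true, kubectl copies the file contents into the kubeconfig as base64 *-data fields instead of storing file paths. The Python sketch below approximates the structure the four commands above write to ~/.kube/config; it is a simplified model (real files also carry fields such as preferences), and admin_kubeconfig is a hypothetical helper name.

```python
import base64
import json
from typing import Optional


def b64(data: bytes) -> str:
    return base64.b64encode(data).decode("ascii")


def admin_kubeconfig(server: str, ca_pem: bytes, cert_pem: bytes,
                     key_pem: bytes, token: Optional[str] = None) -> dict:
    """Approximate result of set-cluster / set-credentials / set-context /
    use-context with --embed-certs=true."""
    user = {"client-certificate-data": b64(cert_pem),
            "client-key-data": b64(key_pem)}
    if token:
        user["token"] = token  # corresponds to --token=${BOOTSTRAP_TOKEN}
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": "kubernetes",
                      "cluster": {"server": server,
                                  "certificate-authority-data": b64(ca_pem)}}],
        "users": [{"name": "admin", "user": user}],
        "contexts": [{"name": "kubernetes",
                      "context": {"cluster": "kubernetes", "user": "admin"}}],
        "current-context": "kubernetes",
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for the real PEM files.
    cfg = admin_kubeconfig("https://k8s-api.virtual.local:6443",
                           b"<ca.pem>", b"<admin.pem>", b"<admin-key.pem>")
    print(json.dumps(cfg, indent=2))
```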

8.6) Verify that kubectl now works

[root@master01 ssl]# ls ~/.kube/
config
[root@master01 ssl]# ls ~/.kube/config
/root/.kube/config
[root@master01 ssl]# kubectl version
Client Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.10", GitCommit:"098570796b32895c38a9a1c9286425fb1ececa18", GitTreeState:"clean", BuildDate:"2018-08-02T17:19:54Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.10", GitCommit:"098570796b32895c38a9a1c9286425fb1ececa18", GitTreeState:"clean", BuildDate:"2018-08-02T17:11:51Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}
[root@master01 ssl]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
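When this check is scripted, the table printed by `kubectl get cs` can be parsed directly. The Python sketch below assumes the default four-column layout shown above (NAME STATUS MESSAGE ERROR); unhealthy_components is a hypothetical helper and the sample is copied from the output above.

```python
SAMPLE_CS = """\
NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
"""


def unhealthy_components(output: str) -> list:
    """Return the NAMEs whose STATUS column is not 'Healthy'."""
    bad = []
    for line in output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 2 and fields[1] != "Healthy":
            bad.append(fields[0])
    return bad


if __name__ == "__main__":
    print(unhealthy_components(SAMPLE_CS) or "all components healthy")
```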

IV. Deploy the flannel network (required on every node)

1) Set up the environment variables on the node

Copy /usr/k8s/bin/env.sh over from master01, then:
[root@k8s01-node01 ~]# export NODE_IP=192.168.10.23
[root@k8s01-node01 ~]# cat ~/.bash_profile
# .bash_profile
# Get the aliases and functions
if [ -f ~/.bashrc ]; then
        . ~/.bashrc
fi
# User specific environment and startup programs
PATH=$PATH:$HOME/bin
PATH=/usr/k8s/bin:$PATH
source /usr/k8s/bin/env.sh
export NODE_IP=192.168.10.23
export PATH

2) Copy the CA certificates from master01

[root@k8s01-node01 ~]# mkdir -p /etc/kubernetes/ssl
[root@master01 ssl]# ll ca*      # copy the CA files from master01 to node01
-rw-r--r-- 1 root root  387 May 22 14:45 ca-config.json
-rw-r--r-- 1 root root 1001 May 22 14:45 ca.csr
-rw-r--r-- 1 root root  264 May 22 14:45 ca-csr.json
-rw------- 1 root root 1679 May 22 14:45 ca-key.pem
-rw-r--r-- 1 root root 1359 May 22 14:45 ca.pem
[root@master01 ssl]# scp ca* root@n1:/etc/kubernetes/ssl/
ca-config.json     100%  387   135.6KB/s   00:00
ca.csr             100% 1001   822.8KB/s   00:00
ca-csr.json        100%  264    81.2KB/s   00:00
ca-key.pem         100% 1679    36.6KB/s   00:00
ca.pem
[root@k8s01-node01 ~]# ll /etc/kubernetes/ssl      # check the CA files on the node
total 20
-rw-r--r-- 1 root root  387 May 28 10:42 ca-config.json
-rw-r--r-- 1 root root 1001 May 28 10:42 ca.csr
-rw-r--r-- 1 root root  264 May 28 10:42 ca-csr.json
-rw------- 1 root root 1679 May 28 10:42 ca-key.pem
-rw-r--r-- 1 root root 1359 May 28 10:42 ca.pem

3) Create the flanneld certificate signing request

cat > flanneld-csr.json <<EOF
{
  "CN": "flanneld",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

3.1) Generate the flanneld certificate and private key

$ cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld
$ ls flanneld*
flanneld.csr  flanneld-csr.json  flanneld-key.pem  flanneld.pem
$ sudo mkdir -p /etc/flanneld/ssl
$ sudo mv flanneld*.pem /etc/flanneld/ssl

3.2) Actual run (the certificates are generated on the master and distributed to the nodes)

On node01:
[root@k8s01-node01 ~]# mkdir -p /etc/flanneld/ssl
On master01, create the flanneld certificate signing request:
$ cat > flanneld-csr.json <<EOF
{
  "CN": "flanneld",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
Generate the flanneld certificate and private key:
[root@master01 ssl]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
> -ca-key=/etc/kubernetes/ssl/ca-key.pem \
> -config=/etc/kubernetes/ssl/ca-config.json \
> -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld
2019/05/28 10:48:11 [INFO] generate received request
2019/05/28 10:48:11 [INFO] received CSR
2019/05/28 10:48:11 [INFO] generating key: rsa-2048
2019/05/28 10:48:11 [INFO] encoded CSR
2019/05/28 10:48:11 [INFO] signed certificate with serial number 110687193783617600207260591820939534389315422637
2019/05/28 10:48:11 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
[root@master01 ssl]# ls flanneld*
flanneld.csr  flanneld-csr.json  flanneld-key.pem  flanneld.pem
[root@master01 ssl]# scp flanneld* root@n1:/etc/flanneld/ssl/
flanneld.csr          100%  997   370.5KB/s   00:00
flanneld-csr.json     100%  221   223.1KB/s   00:00
flanneld-key.pem      100% 1679     2.4MB/s   00:00
flanneld.pem          100% 1391     2.4MB/s   00:00
Check on node01:
[root@k8s01-node01 ~]# ll /etc/flanneld/ssl
total 16
-rw-r--r-- 1 root root  997 May 28 10:49 flanneld.csr
-rw-r--r-- 1 root root  221 May 28 10:49 flanneld-csr.json
-rw------- 1 root root 1679 May 28 10:49 flanneld-key.pem
-rw-r--r-- 1 root root 1391 May 28 10:49 flanneld.pem

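4) Write the cluster Pod network range into etcd. This is only needed the first time the Flannel network is deployed; when flanneld is later deployed on other nodes, this information does not have to be written again.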

[root@master01 ssl]# mkdir -p /etc/flanneld/ssl

[root@master01 ssl]# mv flanneld*.pem /etc/flanneld/ssl/

$ etcdctl \
  --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/flanneld/ssl/flanneld.pem \
  --key-file=/etc/flanneld/ssl/flanneld-key.pem \
  set ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}'
# Expected output:
{"Network":"172.30.0.0/16", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}

The Pod network range written here (${CLUSTER_CIDR}, i.e. 172.30.0.0/16) must match the --cluster-cidr option of kube-controller-manager.

4.1) Actual run

[root@master01 ssl]# mkdir -p /etc/flanneld/ssl
[root@master01 ssl]# mv flanneld*.pem /etc/flanneld/ssl/
[root@master01 ssl]# ll /etc/flanneld/ssl/
total 8
-rw------- 1 root root 1679 May 28 10:48 flanneld-key.pem
-rw-r--r-- 1 root root 1391 May 28 10:48 flanneld.pem
[root@master01 ssl]# echo $FLANNEL_ETCD_PREFIX
/kubernetes/network
[root@master01 ssl]# echo $CLUSTER_CIDR
172.30.0.0/16
[root@master01 ssl]# echo $ETCD_ENDPOINTS
https://192.168.10.12:2379,https://192.168.10.22:2379
[root@master01 ssl]# etcdctl \
> --endpoints=${ETCD_ENDPOINTS} \
> --ca-file=/etc/kubernetes/ssl/ca.pem \
> --cert-file=/etc/flanneld/ssl/flanneld.pem \
> --key-file=/etc/flanneld/ssl/flanneld-key.pem \
> set ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}'
{"Network":"172.30.0.0/16", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}
[root@master01 ssl]# cat /etc/systemd/system/kube-controller-manager.service |grep "cluster-cidr"
--cluster-cidr=172.30.0.0/16
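The consistency rule above (flannel's Network must equal kube-controller-manager's --cluster-cidr) is easy to check mechanically. The Python sketch below builds the same JSON value that is written to etcd and compares it with the controller flag using the standard ipaddress module; the helper names are hypothetical. The returned count is only the number of /SubnetLen blocks the range divides into, an upper bound on per-node subnets, since flanneld may not hand out every block.

```python
import ipaddress
import json


def flannel_config(cluster_cidr: str, subnet_len: int = 24) -> str:
    """The JSON value written to ${FLANNEL_ETCD_PREFIX}/config in step 4."""
    return json.dumps({"Network": cluster_cidr, "SubnetLen": subnet_len,
                       "Backend": {"Type": "vxlan"}})


def check_against_controller(flannel_json: str, cluster_cidr_flag: str) -> int:
    """Raise ValueError if flannel's Network differs from --cluster-cidr;
    otherwise return how many /SubnetLen blocks the range splits into."""
    cfg = json.loads(flannel_json)
    network = ipaddress.ip_network(cfg["Network"])
    if network != ipaddress.ip_network(cluster_cidr_flag):
        raise ValueError(f"flannel Network {network} != --cluster-cidr {cluster_cidr_flag}")
    return 2 ** (cfg["SubnetLen"] - network.prefixlen)


if __name__ == "__main__":
    value = flannel_config("172.30.0.0/16")
    print(value)
    print(check_against_controller(value, "172.30.0.0/16"))  # 256
```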

5) Download the latest flanneld release binary

Release page: https://github.com/coreos/flannel/releases
wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
[root@k8s01-node01 ~]# ls flannel-v0.11.0-linux-amd64.tar.gz
flannel-v0.11.0-linux-amd64.tar.gz
[root@k8s01-node01 ~]# tar xf flannel-v0.11.0-linux-amd64.tar.gz
[root@k8s01-node01 ~]# cp flanneld /usr/k8s/bin/
[root@k8s01-node01 ~]# cp mk-docker-opts.sh /usr/k8s/bin/
[root@k8s01-node01 ~]# ls /usr/k8s/bin/
env.sh  flanneld  mk-docker-opts.sh

6) Create the flanneld systemd unit file

6.1) Check that the command runs correctly (on node01)

/usr/k8s/bin/flanneld -etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/flanneld/ssl/flanneld.pem -etcd-keyfile=/etc/flanneld/ssl/flanneld-key.pem -etcd-endpoints=${ETCD_ENDPOINTS} -etcd-prefix=${FLANNEL_ETCD_PREFIX}

6.2) Create the flanneld.service file on node01

$ cat > flanneld.service << EOF
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service
[Service]
Type=notify
ExecStart=/usr/k8s/bin/flanneld \
  -etcd-cafile=/etc/kubernetes/ssl/ca.pem \
  -etcd-certfile=/etc/flanneld/ssl/flanneld.pem \
  -etcd-keyfile=/etc/flanneld/ssl/flanneld-key.pem \
  -etcd-endpoints=${ETCD_ENDPOINTS} \
  -etcd-prefix=${FLANNEL_ETCD_PREFIX}
ExecStartPost=/usr/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure
[Install]
WantedBy=multi-user.target
RequiredBy=docker.service
EOF

6.3) Enable flanneld at boot and start it

[root@k8s01-node01 ~]# mv flanneld.service /etc/systemd/system
[root@k8s01-node01 ~]# systemctl daemon-reload
[root@k8s01-node01 ~]# systemctl enable flanneld
Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /etc/systemd/system/flanneld.service.
Created symlink from /etc/systemd/system/docker.service.requires/flanneld.service to /etc/systemd/system/flanneld.service.
[root@k8s01-node01 ~]# systemctl start flanneld
[root@k8s01-node01 ~]# systemctl status flanneld
● flanneld.service - Flanneld overlay address etcd agent
   Loaded: loaded (/etc/systemd/system/flanneld.service; enabled; vendor preset: disabled)
   Active: active (running) since Tue 2019-05-28 13:37:18 CST; 21s ago
  Process: 28063 ExecStartPost=/usr/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker (code=exited, status=0/SUCCESS)
 Main PID: 28051 (flanneld)
   Memory: 10.3M
   CGroup: /system.slice/flanneld.service
           └─28051 /usr/k8s/bin/flanneld -etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/flanneld/ssl/flanneld.pem -etcd-keyfile=/etc/flanneld/ssl/flanneld-key.pem -etcd-endpoints=https:...
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.747119 28051 main.go:244] Created subnet manager: Etcd Local Manager with Previous Subnet: 172.30.23.0/24
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.747123 28051 main.go:247] Installing signal handlers
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.819160 28051 main.go:386] Found network config - Backend type: vxlan
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.819293 28051 vxlan.go:120] VXLAN config: VNI=1 Port=0 GBP=false DirectRouting=false
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.830870 28051 local_manager.go:147] Found lease (172.30.23.0/24) for current IP (192.168.10.23), reusing
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.844660 28051 main.go:317] Wrote subnet file to /run/flannel/subnet.env
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.844681 28051 main.go:321] Running backend.
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.844739 28051 vxlan_network.go:60] watching for new subnet leases
May 28 13:37:18 k8s01-node01 flanneld[28051]: I0528 13:37:18.851989 28051 main.go:429] Waiting for 22h59m59.998588039s to renew lease
May 28 13:37:18 k8s01-node01 systemd[1]: Started Flanneld overlay address etcd agent.

Check the flannel network interface:

[root@k8s01-node01 ~]# ifconfig flannel.1
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.30.23.0  netmask 255.255.255.255  broadcast 0.0.0.0
        inet6 fe80::b4e5:d4ff:fe53:16da  prefixlen 64  scopeid 0x20<link>
        ether b6:e5:d4:53:16:da  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 8 overruns 0  carrier 0  collisions 0
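flanneld records its lease in /run/flannel/subnet.env (see the "Wrote subnet file" log line), and the ExecStartPost hook turns it into DOCKER_NETWORK_OPTIONS in /run/flannel/docker, which docker.service loads later. The Python sketch below approximates that translation. The sample subnet.env content is an assumption modelled on the 172.30.23.0/24 lease and MTU 1450 seen above, and the logic is a simplification for illustration, not a port of mk-docker-opts.sh.

```python
# Assumed subnet.env content for node01 (lease 172.30.23.0/24, VXLAN MTU 1450).
SAMPLE_SUBNET_ENV = """\
FLANNEL_NETWORK=172.30.0.0/16
FLANNEL_SUBNET=172.30.23.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
"""


def parse_subnet_env(text: str) -> dict:
    """Read KEY=value lines into a dict."""
    env = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            env[key.strip()] = value.strip()
    return env


def docker_network_options(env: dict) -> str:
    """Simplified approximation of the DOCKER_NETWORK_OPTIONS value."""
    # If flanneld already masquerades (FLANNEL_IPMASQ=true), docker should not.
    ip_masq = "false" if env.get("FLANNEL_IPMASQ") == "true" else "true"
    return " ".join([
        f"--bip={env['FLANNEL_SUBNET']}",  # docker0 takes this node's flannel subnet
        f"--ip-masq={ip_masq}",
        f"--mtu={env['FLANNEL_MTU']}",     # match the flannel.1 interface MTU
    ])


if __name__ == "__main__":
    print(docker_network_options(parse_subnet_env(SAMPLE_SUBNET_ENV)))
```

The key point is that docker0 must sit inside the node's flannel subnet with the overlay MTU, otherwise containers on different nodes cannot reach each other through flannel.1.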

6.4) Install flanneld on the other nodes in the same way

7) On the master, list the subnets allocated to the nodes

$ etcdctl \
  --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/flanneld/ssl/flanneld.pem \
  --key-file=/etc/flanneld/ssl/flanneld-key.pem \
  ls ${FLANNEL_ETCD_PREFIX}/subnets
/kubernetes/network/subnets/172.30.23.0-24    # node01's subnet; verify on node01 with ifconfig flannel.1
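Each lease key encodes a CIDR with "-" in place of "/". The Python sketch below (hypothetical helper names) converts the keys listed above back into networks and checks that every lease lies inside CLUSTER_CIDR, using ipaddress.IPv4Network.subnet_of (Python 3.7+).

```python
import ipaddress

SAMPLE_KEYS = ["/kubernetes/network/subnets/172.30.23.0-24"]


def key_to_subnet(key: str) -> ipaddress.IPv4Network:
    """'/kubernetes/network/subnets/172.30.23.0-24' -> IPv4Network('172.30.23.0/24')."""
    leaf = key.rstrip("/").rsplit("/", 1)[-1]  # "172.30.23.0-24"
    address, _, prefix = leaf.rpartition("-")
    return ipaddress.IPv4Network(f"{address}/{prefix}")


def check_leases(keys, cluster_cidr: str) -> list:
    """Return the leased subnets, raising ValueError if one is outside CLUSTER_CIDR."""
    cluster = ipaddress.IPv4Network(cluster_cidr)
    subnets = [key_to_subnet(key) for key in keys]
    for subnet in subnets:
        if not subnet.subnet_of(cluster):
            raise ValueError(f"{subnet} is outside {cluster}")
    return subnets


if __name__ == "__main__":
    for subnet in check_leases(SAMPLE_KEYS, "172.30.0.0/16"):
        print(subnet)
```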

V. Deploy the worker nodes

1) Configure the environment variables and the hosts file

[root@k8s01-node01 ~]# export KUBE_APISERVER="https://${MASTER_URL}:6443"
[root@k8s01-node01 ~]# echo $KUBE_APISERVER
https://k8s-api.virtual.local:6443
[root@k8s01-node01 ~]# cat .bash_profile
# .bash_profile
# Get the aliases and functions
if [ -f ~/.bashrc ]; then
        . ~/.bashrc
fi
# User specific environment and startup programs
PATH=$PATH:$HOME/bin
PATH=/usr/k8s/bin:$PATH
source /usr/k8s/bin/env.sh
export NODE_IP=192.168.10.23
export KUBE_APISERVER="https://${MASTER_URL}:6443"
export PATH
[root@k8s01-node01 ~]# cat /etc/hosts|grep k8s-api.virtual.local
192.168.10.12 k8s-api.virtual.local

2) Enable IP forwarding

Add net.ipv4.ip_forward=1 to /etc/sysctl.conf and apply it:

[root@k8s01-node01 ~]# cat /etc/sysctl.conf
# sysctl settings are defined through files in
# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.
#
# Vendors settings live in /usr/lib/sysctl.d/.
# To override a whole file, create a new file with the same in
# /etc/sysctl.d/ and put new settings there. To override
# only specific settings, add a file with a lexically later
# name in /etc/sysctl.d/ and put new settings there.
#
# For more information, see sysctl.conf(5) and sysctl.d(5).
net.ipv4.ip_forward=1
[root@k8s01-node01 ~]# sysctl -p
net.ipv4.ip_forward = 1

3) Install Docker following the official documentation

Official installation guide: https://docs.docker.com/install/linux/docker-ce/centos
Install the prerequisite packages:
yum install -y yum-utils device-mapper-persistent-data lvm2
Add the Docker repository:
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
Install Docker:
yum install docker-ce docker-ce-cli containerd.io -y
[root@k8s01-node01 ~]# docker version
Client:
 Version:           18.09.6
 API version:       1.39
 Go version:        go1.10.8
 Git commit:        481bc77156
 Built:             Sat May  4 02:34:58 2019
 OS/Arch:           linux/amd64
 Experimental:      false
Server: Docker Engine - Community
 Engine:
  Version:          18.09.6
  API version:      1.39 (minimum version 1.12)
  Go version:       go1.10.8
  Git commit:       481bc77
  Built:            Sat May  4 02:02:43 2019
  OS/Arch:          linux/amd64
  Experimental:     false

4) Modify the Docker systemd unit file

vim /usr/lib/systemd/system/docker.service
.....
[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
# added: load the DOCKER_NETWORK_OPTIONS written by mk-docker-opts.sh
EnvironmentFile=-/run/flannel/docker
# added: --log-level=info $DOCKER_NETWORK_OPTIONS
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --log-level=info $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
.....

systemd only treats # as a comment at the start of a line, so keep these annotations on their own lines rather than after a setting.

The resulting file:

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
BindsTo=containerd.service
After=network-online.target firewalld.service containerd.service
Wants=network-online.target
Requires=docker.socket
[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
EnvironmentFile=-/run/flannel/docker
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --log-level=info $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.
# Both the old, and new location are accepted by systemd 229 and up, so using the old location
# to make them work for either version of systemd.
StartLimitBurst=3
# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.
# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make
# this option work for either version of systemd.
StartLimitInterval=60s
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Comment TasksMax if your systemd version does not supports it.
# Only systemd 226 and above support this option.
TasksMax=infinity
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
[Install]
WantedBy=multi-user.target

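5) To speed up image pulls, you can use a registry mirror located in China and raise the number of concurrent downloads. (If dockerd is already running, restart it for the change to take effect.)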

[root@k8s01-node01 ~]# cat /etc/docker/daemon.json
{
  "max-concurrent-downloads": 10
}
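The file above only raises the download concurrency; a mirror is configured through dockerd's registry-mirrors key in the same file. The Python sketch below generates either variant; daemon_json is a hypothetical helper and https://mirror.example.com is a placeholder, not a real mirror.

```python
import json
from typing import List, Optional


def daemon_json(max_downloads: int = 10, mirrors: Optional[List[str]] = None) -> str:
    """Content for /etc/docker/daemon.json."""
    config = {"max-concurrent-downloads": max_downloads}
    if mirrors:
        # registry-mirrors is a standard dockerd option; the URL used below is a placeholder.
        config["registry-mirrors"] = mirrors
    return json.dumps(config, indent=2) + "\n"


if __name__ == "__main__":
    print(daemon_json(), end="")  # the configuration used in this walkthrough
    print(daemon_json(mirrors=["https://mirror.example.com"]), end="")
```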

6) Start Docker

[root@k8s01-node01 ~]# systemctl daemon-reload
[root@k8s01-node01 ~]# systemctl enable docker
[root@k8s01-node01 ~]# systemctl start docker
[root@k8s01-node01 ~]# systemctl status docker

Kernel parameter tuning

[root@k8s01-node01 ~]# cat /etc/sysctl.conf
# sysctl settings are defined through files in
# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.
#
# Vendors settings live in /usr/lib/sysctl.d/.
# To override a whole file, create a new file with the same in
# /etc/sysctl.d/ and put new settings there. To override
# only specific settings, add a file with a lexically later
# name in /etc/sysctl.d/ and put new settings there.
#
# For more information, see sysctl.conf(5) and sysctl.d(5).
net.ipv4.ip_forward=1
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1

sysctl -p

[root@k8s01-node01 ~]# sysctl -p
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1

[root@k8s01-node01 ~]# systemctl restart docker
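Because each node needs the same three kernel settings, a small check against sysctl.conf helps catch a forgotten line. The Python sketch below (hypothetical helper names) parses key=value lines, skips # and ; comments, and lists required keys that are missing or set to another value; proc_path shows where each key appears at runtime under /proc/sys.

```python
REQUIRED = {
    "net.ipv4.ip_forward": "1",
    "net.bridge.bridge-nf-call-iptables": "1",
    "net.bridge.bridge-nf-call-ip6tables": "1",
}

SAMPLE_CONF = """\
# For more information, see sysctl.conf(5) and sysctl.d(5).
net.ipv4.ip_forward=1
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
"""


def parse_sysctl_conf(text: str) -> dict:
    """key = value pairs, skipping blank lines and lines starting with # or ;."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


def missing_settings(text: str) -> list:
    """Required keys that are absent or not set to the expected value."""
    current = parse_sysctl_conf(text)
    return sorted(k for k, v in REQUIRED.items() if current.get(k) != v)


def proc_path(key: str) -> str:
    """Runtime location of a sysctl key (dots become path separators)."""
    return "/proc/sys/" + key.replace(".", "/")


if __name__ == "__main__":
    print(missing_settings(SAMPLE_CONF) or "all required settings present")
```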

7) Firewall

$ sudo systemctl daemon-reload
$ sudo systemctl stop firewalld
$ sudo systemctl disable firewalld
$ sudo iptables -F && sudo iptables -X && sudo iptables -F -t nat && sudo iptables -X -t nat    # flush the existing firewall rules
$ sudo systemctl enable docker
$ sudo systemctl start docker

Check the firewall rules:

[root@k8s01-node01 ~]# iptables -L -n
Chain INPUT (policy ACCEPT)
target     prot opt source               destination
Chain FORWARD (policy ACCEPT)
target     prot opt source               destination
DOCKER-USER  all  --  0.0.0.0/0            0.0.0.0/0
DOCKER-ISOLATION-STAGE-1  all  --  0.0.0.0/0            0.0.0.0/0
ACCEPT     all  --  0.0.0.0/0            0.0.0.0/0            ctstate RELATED,ESTABLISHED
DOCKER     all  --  0.0.0.0/0            0.0.0.0/0
ACCEPT     all  --  0.0.0.0/0            0.0.0.0/0
ACCEPT     all  --  0.0.0.0/0            0.0.0.0/0
ACCEPT     all  --  172.30.0.0/16        0.0.0.0/0
ACCEPT     all  --  0.0.0.0/0            172.30.0.0/16
Chain OUTPUT (policy ACCEPT)
target     prot opt source               destination
Chain DOCKER (1 references)
target     prot opt source               destination
Chain DOCKER-ISOLATION-STAGE-1 (1 references)
target     prot opt source               destination
DOCKER-ISOLATION-STAGE-2  all  --  0.0.0.0/0            0.0.0.0/0
RETURN     all  --  0.0.0.0/0            0.0.0.0/0
Chain DOCKER-ISOLATION-STAGE-2 (1 references)
target     prot opt source               destination
DROP       all  --  0.0.0.0/0            0.0.0.0/0
RETURN     all  --  0.0.0.0/0            0.0.0.0/0
Chain DOCKER-USER (1 references)
target     prot opt source               destination
RETURN     all  --  0.0.0.0/0            0.0.0.0/0

VI. Install and configure kubelet

1) Install kubectl on node01

On node01:
[root@k8s01-node01 ~]# ls /usr/k8s/bin/      # check whether kubectl is present
env.sh  flanneld  mk-docker-opts.sh
[root@k8s01-node01 ~]# mkdir .kube
Copy the files from master01:
[root@master01 ~]# scp /usr/k8s/bin/kubectl root@n1:/usr/k8s/bin/
[root@master01 ~]# scp .kube/config root@n1:/root/.kube/
Check on node01:
[root@k8s01-node01 ~]# ls /usr/k8s/bin/
env.sh  flanneld  kubectl  mk-docker-opts.sh
[root@k8s01-node01 ~]# ls .kube/
config
[root@k8s01-node01 ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.2", GitCommit:"66049e3b21efe110454d67df4fa62b08ea79a19b", GitTreeState:"clean", BuildDate:"2019-05-16T16:23:09Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.10", GitCommit:"098570796b32895c38a9a1c9286425fb1ececa18", GitTreeState:"clean", BuildDate:"2018-08-02T17:11:51Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}

2) Grant RBAC permissions to the bootstrap user and the node group

When kubelet starts, it sends a TLS bootstrapping request to kube-apiserver. The kubelet-bootstrap user from the bootstrap token file must first be bound to the system:node-bootstrapper role; only then is kubelet allowed to create certificate signing requests (certificatesigningrequests):
[root@k8s01-node01 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created
In addition, version 1.8 also requires an RBAC authorization rule for Node requests:
[root@k8s01-node01 ~]# kubectl create clusterrolebinding kubelet-nodes --clusterrole=system:node --group=system:nodes
clusterrolebinding.rbac.authorization.k8s.io/kubelet-nodes created
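The two `kubectl create clusterrolebinding` commands can also be kept as declarative manifests. The Python sketch below builds the equivalent ClusterRoleBinding objects (rbac.authorization.k8s.io/v1, available on the v1.9 cluster used here) and prints them as a v1 List, which `kubectl apply -f` accepts as JSON; cluster_role_binding is a hypothetical helper.

```python
import json


def cluster_role_binding(name: str, cluster_role: str, kind: str, subject: str) -> dict:
    """Manifest matching `kubectl create clusterrolebinding NAME --clusterrole=ROLE`
    with --user (kind="User") or --group (kind="Group")."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "ClusterRole", "name": cluster_role},
        "subjects": [{"apiGroup": "rbac.authorization.k8s.io",
                      "kind": kind, "name": subject}],
    }


BINDINGS = [
    cluster_role_binding("kubelet-bootstrap", "system:node-bootstrapper",
                         "User", "kubelet-bootstrap"),
    cluster_role_binding("kubelet-nodes", "system:node", "Group", "system:nodes"),
]

if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": BINDINGS}, indent=2))
```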

3) Download the latest kubelet and kube-proxy binaries (they are also included in the kubernetes directory downloaded earlier)

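$ wget https://dl.k8s.io/v1.8.2/kubernetes-server-linux-amd64.tar.gz
$ tar -xzvf kubernetes-server-linux-amd64.tar.gz
$ cd kubernetes
$ tar -xzvf kubernetes-src.tar.gz
$ sudo cp -r ./server/bin/{kube-proxy,kubelet} /usr/k8s/bin/

This URL fetches v1.8.2, while the cluster in this walkthrough runs v1.9.10; download the release that matches your kube-apiserver.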

Method 2 (what was actually done):

[root@master01 ~]# ls kubernetes/server/bin/kubelet
kubernetes/server/bin/kubelet
[root@master01 ~]# ls kubernetes/server/bin/kube-proxy
kubernetes/server/bin/kube-proxy
[root@master01 ~]# scp kubernetes/server/bin/kube-proxy root@n1:/usr/k8s/bin/
[root@master01 ~]# scp kubernetes/server/bin/kubelet root@n1:/usr/k8s/bin/

4) Create the kubelet bootstrapping kubeconfig file

$ # Set the cluster parameters
$ kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig
$ # Set the client authentication parameters
$ kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig
$ # Set the context parameters
$ kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig
$ # Set the default context
$ kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
$ mv bootstrap.kubeconfig /etc/kubernetes/

Actual run:

[root@k8s01-node01 ~]# echo $KUBE_APISERVER
https://k8s-api.virtual.local:6443
[root@k8s01-node01 ~]# kubectl config set-cluster kubernetes \
> --certificate-authority=/etc/kubernetes/ssl/ca.pem \
> --embed-certs=true \
> --server=${KUBE_APISERVER} \
> --kubeconfig=bootstrap.kubeconfig
Cluster "kubernetes" set.
[root@k8s01-node01 ~]# echo $BOOTSTRAP_TOKEN
0b340751863956f119cbc624465db92b
[root@k8s01-node01 ~]# kubectl config set-credentials kubelet-bootstrap \
> --token=${BOOTSTRAP_TOKEN} \
> --kubeconfig=bootstrap.kubeconfig
User "kubelet-bootstrap" set.
[root@k8s01-node01 ~]# kubectl config set-context default \
> --cluster=kubernetes \
> --user=kubelet-bootstrap \
> --kubeconfig=bootstrap.kubeconfig
Context "default" created.
[root@k8s01-node01 ~]# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
Switched to context "default".
[root@k8s01-node01 ~]# ls bootstrap.kubeconfig
bootstrap.kubeconfig
[root@k8s01-node01 ~]# mv bootstrap.kubeconfig /etc/kubernetes/
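Unlike the admin kubeconfig, bootstrap.kubeconfig authenticates with a bearer token only, and that token must be the same BOOTSTRAP_TOKEN defined in env.sh. The Python sketch below generates a token of the same shape as the `head -c 16 /dev/urandom | od -An -t x | tr -d ' '` value (32 hex characters) and approximates the kubeconfig structure; all helper names are hypothetical, and a real file written by kubectl carries a few extra fields.

```python
import re
import secrets

TOKEN_RE = re.compile(r"[0-9a-f]{32}")


def new_bootstrap_token() -> str:
    """32 lowercase hex characters from 16 random bytes."""
    return secrets.token_hex(16)


def is_valid_token(token: str) -> bool:
    return TOKEN_RE.fullmatch(token) is not None


def bootstrap_kubeconfig(server: str, ca_data_b64: str, token: str) -> dict:
    """Approximate structure of bootstrap.kubeconfig from step 4."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": "kubernetes",
                      "cluster": {"server": server,
                                  "certificate-authority-data": ca_data_b64}}],
        "users": [{"name": "kubelet-bootstrap", "user": {"token": token}}],
        "contexts": [{"name": "default",
                      "context": {"cluster": "kubernetes", "user": "kubelet-bootstrap"}}],
        "current-context": "default",
    }


if __name__ == "__main__":
    token = new_bootstrap_token()
    print(token, is_valid_token(token))
```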

5) Create the kubelet systemd unit file

5.1) Check from the command line that kubelet starts

/usr/k8s/bin/kubelet --fail-swap-on=false --cgroup-driver=cgroupfs --address=${NODE_IP} --hostname-override=${NODE_IP} --experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --require-kubeconfig --cert-dir=/etc/kubernetes/ssl --cluster-dns=${CLUSTER_DNS_SVC_IP} --cluster-domain=${CLUSTER_DNS_DOMAIN} --hairpin-mode promiscuous-bridge --allow-privileged=true --serialize-image-pulls=false --logtostderr=true --v=2

5.2) If it works, create the unit file

$ sudo mkdir /var/lib/kubelet    # the working directory must be created first
$ cat > kubelet.service <<EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service
[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/k8s/bin/kubelet \
  --fail-swap-on=false \
  --cgroup-driver=cgroupfs \
  --address=${NODE_IP} \
  --hostname-override=${NODE_IP} \
  --experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --require-kubeconfig \
  --cert-dir=/etc/kubernetes/ssl \
  --cluster-dns=${CLUSTER_DNS_SVC_IP} \
  --cluster-domain=${CLUSTER_DNS_DOMAIN} \
  --hairpin-mode promiscuous-bridge \
  --allow-privileged=true \
  --serialize-image-pulls=false \
  --logtostderr=true \
  --v=2
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target
EOF
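An unquoted heredoc expands an unset variable to an empty string without any warning, so a unit written in a fresh shell can end up with `--address=` and `--cluster-dns=` left blank. The Python sketch below lists the ${NAME} references in a template that are unset or empty; unset_placeholders and KUBELET_EXCERPT are hypothetical names, and the excerpt is copied from the unit above.

```python
import os
import re

PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")

# Excerpt of the kubelet.service heredoc from 5.2.
KUBELET_EXCERPT = """\
  --address=${NODE_IP} \\
  --hostname-override=${NODE_IP} \\
  --cluster-dns=${CLUSTER_DNS_SVC_IP} \\
  --cluster-domain=${CLUSTER_DNS_DOMAIN} \\
"""


def unset_placeholders(template: str, env) -> list:
    """${NAME} references that are unset or empty in env, in first-seen order."""
    names = dict.fromkeys(PLACEHOLDER.findall(template))  # ordered and de-duplicated
    return [name for name in names if not env.get(name)]


if __name__ == "__main__":
    missing = unset_placeholders(KUBELET_EXCERPT, os.environ)
    print("missing:", missing if missing else "none")
```

Running this kind of check (or simply `echo $NODE_IP` as done elsewhere in this walkthrough) before writing the heredoc avoids a kubelet that binds to the wrong address.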

5.3) Enable at boot and start

[root@k8s01-node01 ~]# mv kubelet.service /etc/systemd/system
[root@k8s01-node01 ~]# systemctl daemon-reload
[root@k8s01-node01 ~]# systemctl restart kubelet
[root@k8s01-node01 ~]# systemctl status kubelet
[root@k8s01-node01 ~]# systemctl status kubelet -l    # -l shows the full, non-ellipsized lines
● kubelet.service - Kubernetes Kubelet
   Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)
   Active: active (running) since Tue 2019-05-28 19:48:40 CST; 14s ago
     Docs: https://github.com/GoogleCloudPlatform/kubernetes
[root@k8s01-node01 ~]# systemctl enable kubelet    # problem
Failed to execute operation: File exists

Note that the status output above already reports the unit as enabled.

6) Approve the kubelet TLS certificate request

6.1) Status before approval

[root@k8s01-node01 ~]# kubectl get csr
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-mTYmEsL6Eh5rKSJOgfO8trHBq8LHI1SX7QDr2OqJ2Zg   29m   kubelet-bootstrap   Pending
[root@k8s01-node01 ~]# kubectl get nodes
No resources found.

6.2) Approve the CSR

[root@k8s01-node01 ~]# kubectl get csr
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-mTYmEsL6Eh5rKSJOgfO8trHBq8LHI1SX7QDr2OqJ2Zg   31m   kubelet-bootstrap   Pending
[root@k8s01-node01 ~]# kubectl certificate approve node-csr-mTYmEsL6Eh5rKSJOgfO8trHBq8LHI1SX7QDr2OqJ2Zg    # approve the request
certificatesigningrequest.certificates.k8s.io/node-csr-mTYmEsL6Eh5rKSJOgfO8trHBq8LHI1SX7QDr2OqJ2Zg approved
[root@k8s01-node01 ~]# kubectl get csr
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-mTYmEsL6Eh5rKSJOgfO8trHBq8LHI1SX7QDr2OqJ2Zg   32m   kubelet-bootstrap   Approved,Issued
[root@k8s01-node01 ~]# kubectl get nodes
NAME            STATUS   ROLES    AGE     VERSION
192.168.10.23   Ready    <none>   3m15s   v1.9.10
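With several nodes joining, approving CSRs one name at a time gets tedious. The Python sketch below picks out Pending requests from the expected requestor and builds the matching approve commands; it assumes the four-column `kubectl get csr` layout shown above (newer kubectl versions add columns), and pending_csrs and approve_commands are hypothetical helpers. Only approve requests you expect: every approved CSR becomes a valid node certificate.

```python
SAMPLE_CSR = """\
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-mTYmEsL6Eh5rKSJOgfO8trHBq8LHI1SX7QDr2OqJ2Zg   31m   kubelet-bootstrap   Pending
"""


def pending_csrs(output: str, requestor: str = "kubelet-bootstrap") -> list:
    """Names of Pending CSRs submitted by `requestor`
    (columns: NAME AGE REQUESTOR CONDITION)."""
    names = []
    for line in output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) == 4 and fields[2] == requestor and fields[3] == "Pending":
            names.append(fields[0])
    return names


def approve_commands(names) -> list:
    return [f"kubectl certificate approve {name}" for name in names]


if __name__ == "__main__":
    for command in approve_commands(pending_csrs(SAMPLE_CSR)):
        print(command)
```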

6.3) The kubelet certificate and key files are now generated

[root@k8s01-node01 ~]# ll /etc/kubernetes/ssl/kubelet*
-rw-r--r-- 1 root root 1046 May 28 19:57 /etc/kubernetes/ssl/kubelet-client.crt
-rw------- 1 root root  227 May 28 19:25 /etc/kubernetes/ssl/kubelet-client.key
-rw-r--r-- 1 root root 1115 May 28 19:25 /etc/kubernetes/ssl/kubelet.crt
-rw------- 1 root root 1679 May 28 19:25 /etc/kubernetes/ssl/kubelet.key

VII. Configure kube-proxy

1) Create the kube-proxy certificate signing request (on the master)

$ cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

2) Generate the kube-proxy client certificate and private key (on the master)

$ cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
$ ls kube-proxy*
kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem
$ sudo mv kube-proxy*.pem /etc/kubernetes/ssl/

2.1) Actual run; the generated certificate and key were then copied to node01

[root@master01 ssl]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
> -ca-key=/etc/kubernetes/ssl/ca-key.pem \
> -config=/etc/kubernetes/ssl/ca-config.json \
> -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
2019/05/28 20:14:58 [INFO] generate received request
2019/05/28 20:14:58 [INFO] received CSR
2019/05/28 20:14:58 [INFO] generating key: rsa-2048
2019/05/28 20:14:58 [INFO] encoded CSR
2019/05/28 20:14:58 [INFO] signed certificate with serial number 680983213307794519299992995799571930531865790014
2019/05/28 20:14:58 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
[root@master01 ssl]# ls kube-proxy*
kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem
[root@master01 ssl]# ls kube-proxy*.pem
kube-proxy-key.pem  kube-proxy.pem
[root@master01 ssl]# scp kube-proxy*.pem root@n1:/etc/kubernetes/ssl/
kube-proxy-key.pem    100% 1675   931.9KB/s   00:00
kube-proxy.pem

Check on node01:

    [root@k8s01-node01 ~]# ll /etc/kubernetes/ssl/kube-proxy*.pem
    -rw------- 1 root root 1675 5月 28 20:27 /etc/kubernetes/ssl/kube-proxy-key.pem
    -rw-r--r-- 1 root root 1403 5月 28 20:27 /etc/kubernetes/ssl/kube-proxy.pem
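The listing shows the private key with mode 600 (-rw-------) and the certificate as world-readable. A loop over *-key.pem can catch a key that was copied with looser permissions. check_key_perms is a hypothetical helper, and the sketch assumes GNU stat (stat -c '%a'), as found on CentOS.

```shell
#!/usr/bin/env bash
# check_key_perms DIR
# Prints every *-key.pem in DIR whose mode is not 600.
# Returns 1 if any such file was found, otherwise 0.
check_key_perms() {
  local dir="$1" f mode bad=0
  for f in "$dir"/*-key.pem; do
    [ -e "$f" ] || continue            # glob matched nothing
    mode=$(stat -c '%a' "$f")          # GNU stat: octal permission bits
    if [ "$mode" != "600" ]; then
      echo "$f has mode $mode (expected 600)"
      bad=1
    fi
  done
  return "$bad"
}
```

Usage on node01: `check_key_perms /etc/kubernetes/ssl || chmod 600 /etc/kubernetes/ssl/*-key.pem`.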

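3) Create the kube-proxy kubeconfig file (on node01)

KUBE_APISERVER must be set in the current shell before you run these commands. Otherwise the server address written into the kubeconfig is empty.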

    $ # Set the cluster parameters
    $ kubectl config set-cluster kubernetes \
      --certificate-authority=/etc/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=kube-proxy.kubeconfig
    $ # Set the client authentication parameters
    $ kubectl config set-credentials kube-proxy \
      --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
      --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=kube-proxy.kubeconfig
    $ # Set the context parameters
    $ kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=kube-proxy.kubeconfig
    $ # Make it the default context
    $ kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
    $ mv kube-proxy.kubeconfig /etc/kubernetes/

What was actually run:

    [root@k8s01-node01 ~]# kubectl config set-cluster kubernetes \
    > --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    > --embed-certs=true \
    > --server=${KUBE_APISERVER} \
    > --kubeconfig=kube-proxy.kubeconfig
    Cluster "kubernetes" set.
    [root@k8s01-node01 ~]# kubectl config set-credentials kube-proxy \
    > --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
    > --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
    > --embed-certs=true \
    > --kubeconfig=kube-proxy.kubeconfig
    User "kube-proxy" set.
    [root@k8s01-node01 ~]# kubectl config set-context default \
    > --cluster=kubernetes \
    > --user=kube-proxy \
    > --kubeconfig=kube-proxy.kubeconfig
    Context "default" created.
    [root@k8s01-node01 ~]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
    Switched to context "default".
    [root@k8s01-node01 ~]# ls kube-proxy.kubeconfig
    kube-proxy.kubeconfig
    [root@k8s01-node01 ~]# mv kube-proxy.kubeconfig /etc/kubernetes/
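The same four kubectl config steps recur for every component that needs a kubeconfig. Below is a minimal sketch of a wrapper. make_kubeconfig is a hypothetical name, and the CA path is the one used in this guide. The function refuses to run when KUBE_APISERVER is empty, which avoids writing a kubeconfig with a blank server address. A value such as https://k8s-api.virtual.local:6443 is an assumption based on MASTER_URL and the API server's default secure port.

```shell
#!/usr/bin/env bash
# make_kubeconfig USER CERT KEY OUTFILE
# Hypothetical wrapper around the four "kubectl config" steps shown above.
make_kubeconfig() {
  local user="$1" cert="$2" key="$3" out="$4"
  if [ -z "${KUBE_APISERVER:-}" ]; then
    echo "KUBE_APISERVER is not set" >&2
    return 1
  fi
  kubectl config set-cluster kubernetes \
    --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    --embed-certs=true \
    --server="${KUBE_APISERVER}" \
    --kubeconfig="$out" &&
  kubectl config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$out" &&
  kubectl config set-context default \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" &&
  kubectl config use-context default --kubeconfig="$out"
}
```

Usage: `make_kubeconfig kube-proxy /etc/kubernetes/ssl/kube-proxy.pem /etc/kubernetes/ssl/kube-proxy-key.pem kube-proxy.kubeconfig`.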

4) Create the systemd unit file for kube-proxy

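4.1) First run kube-proxy in the foreground to confirm that it starts correctly

Beforehand, source /usr/k8s/bin/env.sh and set NODE_IP to this node's address (192.168.10.23 on node01). Stop the process with Ctrl+C once it runs without errors. Note that --cluster-cidr takes the Pod network range (CLUSTER_CIDR, 172.30.0.0/16 in env.sh), not the Service range (SERVICE_CIDR, 10.254.0.0/16). The session recorded in step 6 below was started with --cluster-cidr=${SERVICE_CIDR}, which is why its KUBE-FORWARD "pod" rules show 10.254.0.0/16.

    /usr/k8s/bin/kube-proxy --bind-address=${NODE_IP} --hostname-override=${NODE_IP} --cluster-cidr=${CLUSTER_CIDR} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig --logtostderr=true --v=2

4.2) Create the unit file

    $ sudo mkdir -p /var/lib/kube-proxy   # the working directory must be created first
    $ cat > kube-proxy.service <<EOF
    [Unit]
    Description=Kubernetes Kube-Proxy Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target

    [Service]
    WorkingDirectory=/var/lib/kube-proxy
    ExecStart=/usr/k8s/bin/kube-proxy \
      --bind-address=${NODE_IP} \
      --hostname-override=${NODE_IP} \
      --cluster-cidr=${CLUSTER_CIDR} \
      --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \
      --logtostderr=true \
      --v=2
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF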
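kube-proxy's documentation describes --cluster-cidr as the CIDR range of pods in the cluster. A quick way to see which range a given address belongs to is an integer CIDR-membership check. The bash functions below are a hypothetical sketch, and they assume well-formed dotted-quad IPv4 input. Run against addresses that appear later in this guide, the check confirms that the pod IP 172.30.23.2 lies in 172.30.0.0/16 (CLUSTER_CIDR) and not in 10.254.0.0/16 (SERVICE_CIDR). That is the range kube-proxy should be told about.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D : prints the IPv4 address as a 32-bit integer
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"              # split on dots into 4 octets
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# ip_in_cidr IP NET/PREFIX : exit status 0 if IP lies inside the CIDR block
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Keep the top "prefix" bits; bash arithmetic is 64-bit, so mask to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}
```

Usage: `source /usr/k8s/bin/env.sh; ip_in_cidr 172.30.23.2 "$CLUSTER_CIDR" && echo "pod IP is inside CLUSTER_CIDR"`.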

5) Enable kube-proxy at boot and start it

    [root@k8s01-node01 ~]# mv kube-proxy.service /etc/systemd/system
    [root@k8s01-node01 ~]# systemctl daemon-reload
    [root@k8s01-node01 ~]# systemctl enable kube-proxy
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /etc/systemd/system/kube-proxy.service.
    [root@k8s01-node01 ~]# systemctl start kube-proxy
    [root@k8s01-node01 ~]# systemctl status kube-proxy
    ● kube-proxy.service - Kubernetes Kube-Proxy Server
       Loaded: loaded (/etc/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)
       Active: active (running) since 二 2019-05-28 20:42:37 CST; 12s ago
         Docs: https://github.com/GoogleCloudPlatform/kubernetes
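A unit can report active right after systemctl start and then fail a few seconds later (Restart=on-failure keeps retrying it). When scripting the start, polling for a short while is more reliable than a single status check. wait_active is a hypothetical helper built on systemctl is-active --quiet.

```shell
#!/usr/bin/env bash
# wait_active UNIT [TIMEOUT_SECONDS]
# Polls "systemctl is-active" once per second until UNIT is active
# or the timeout (default 30s) expires.
wait_active() {
  local unit="$1" timeout="${2:-30}" i
  for (( i = 0; i < timeout; i++ )); do
    if systemctl is-active --quiet "$unit"; then
      return 0
    fi
    sleep 1
  done
  echo "$unit not active after ${timeout}s; see: journalctl -u $unit -n 50" >&2
  return 1
}
```

Usage: `systemctl start kube-proxy && wait_active kube-proxy 20`.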

6) kube-proxy has now added its own rules to iptables

    [root@k8s01-node01 ~]# iptables -L -n
    Chain INPUT (policy ACCEPT)
    target     prot opt source               destination
    KUBE-SERVICES  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes service portals */
    KUBE-FIREWALL  all  --  0.0.0.0/0            0.0.0.0/0

    Chain FORWARD (policy ACCEPT)
    target     prot opt source               destination
    KUBE-FORWARD  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes forward rules */
    DOCKER-USER  all  --  0.0.0.0/0            0.0.0.0/0
    DOCKER-ISOLATION-STAGE-1  all  --  0.0.0.0/0            0.0.0.0/0
    ACCEPT     all  --  0.0.0.0/0            0.0.0.0/0            ctstate RELATED,ESTABLISHED
    DOCKER     all  --  0.0.0.0/0            0.0.0.0/0
    ACCEPT     all  --  0.0.0.0/0            0.0.0.0/0
    ACCEPT     all  --  0.0.0.0/0            0.0.0.0/0
    ACCEPT     all  --  172.30.0.0/16        0.0.0.0/0
    ACCEPT     all  --  0.0.0.0/0            172.30.0.0/16

    Chain OUTPUT (policy ACCEPT)
    target     prot opt source               destination
    KUBE-SERVICES  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes service portals */
    KUBE-FIREWALL  all  --  0.0.0.0/0            0.0.0.0/0

    Chain DOCKER (1 references)
    target     prot opt source               destination

    Chain DOCKER-ISOLATION-STAGE-1 (1 references)
    target     prot opt source               destination
    DOCKER-ISOLATION-STAGE-2  all  --  0.0.0.0/0            0.0.0.0/0
    RETURN     all  --  0.0.0.0/0            0.0.0.0/0

    Chain DOCKER-ISOLATION-STAGE-2 (1 references)
    target     prot opt source               destination
    DROP       all  --  0.0.0.0/0            0.0.0.0/0
    RETURN     all  --  0.0.0.0/0            0.0.0.0/0

    Chain DOCKER-USER (1 references)
    target     prot opt source               destination
    RETURN     all  --  0.0.0.0/0            0.0.0.0/0

    Chain KUBE-FIREWALL (2 references)
    target     prot opt source               destination
    DROP       all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes firewall for dropping marked packets */ mark match 0x8000/0x8000

    Chain KUBE-FORWARD (1 references)
    target     prot opt source               destination
    ACCEPT     all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes forwarding rules */ mark match 0x4000/0x4000
    ACCEPT     all  --  10.254.0.0/16        0.0.0.0/0            /* kubernetes forwarding conntrack pod source rule */ ctstate RELATED,ESTABLISHED
    ACCEPT     all  --  0.0.0.0/0            10.254.0.0/16        /* kubernetes forwarding conntrack pod destination rule */ ctstate RELATED,ESTABLISHED

    Chain KUBE-SERVICES (2 references)
    target     prot opt source               destination
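The "pod source rule" in KUBE-FORWARD is built from kube-proxy's --cluster-cidr. Reading that rule back therefore shows which range kube-proxy actually received. In this session it is 10.254.0.0/16, the Service range, because the process was started with ${SERVICE_CIDR}. The Pod range in env.sh is 172.30.0.0/16. The awk one-liner below is a sketch that assumes the iptables -L -n column layout shown above. The comment text matches this v1.9 output and may differ in other kube-proxy versions.

```shell
#!/usr/bin/env bash
# pod_cidr_from_iptables: reads "iptables -L KUBE-FORWARD -n" output on stdin
# and prints the source column (4th field) of the "pod source rule" line.
pod_cidr_from_iptables() {
  awk '/pod source rule/ { print $4 }'
}
```

Usage on the node: `iptables -L KUBE-FORWARD -n | pod_cidr_from_iptables`.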

7) Showing the hostname instead of the IP as the node name

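    [root@k8s01-node01 ~]# kubectl get nodes
    NAME            STATUS   ROLES    AGE   VERSION
    192.168.10.23   Ready    <none>   50m   v1.9.10

The node is registered under its IP because kubelet and kube-proxy are both started with --hostname-override. To have the node name shown as the hostname instead, remove that parameter from both unit files:

    [root@k8s01-node01 ~]# cat /etc/systemd/system/kubelet.service | grep hostname
    --hostname-override=192.168.10.23
    [root@k8s01-node01 ~]# cat /etc/systemd/system/kube-proxy.service | grep hostname
    --hostname-override=192.168.10.23

Then run systemctl daemon-reload and restart kubelet and kube-proxy.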
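Removing the flag can be scripted with sed. This is a sketch under one assumption: the flag sits on the single ExecStart line. That holds for units generated as in this guide, because an unquoted heredoc joins backslash-continued lines into one. drop_hostname_override is a hypothetical name. The -i.bak form (GNU sed) keeps a backup copy of each file.

```shell
#!/usr/bin/env bash
# drop_hostname_override UNIT_FILE
# Removes every "--hostname-override=VALUE" flag from the unit file and keeps
# the original as UNIT_FILE.bak.
drop_hostname_override() {
  sed -E -i.bak 's/[[:space:]]+--hostname-override=[^[:space:]]+//g' "$1"
}
```

Usage: `for u in kubelet kube-proxy; do drop_hostname_override /etc/systemd/system/$u.service; done; systemctl daemon-reload; systemctl restart kubelet kube-proxy`. The kubelet then registers a new Node object under the hostname. The old 192.168.10.23 entry is likely to stay behind as NotReady and may need kubectl delete node 192.168.10.23.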

VIII. Verify that the node works

1) Define the YAML file (save the following as nginx-ds.yaml):

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-ds
      labels:
        app: nginx-ds
    spec:
      type: NodePort
      selector:
        app: nginx-ds
      ports:
      - name: http
        port: 80
        targetPort: 80
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: nginx-ds
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      template:
        metadata:
          labels:
            app: nginx-ds
        spec:
          containers:
          - name: my-nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80

2) Create the Service and the DaemonSet

    [root@k8s01-node01 ~]# kubectl create -f nginx-ds.yaml
    service/nginx-ds created
    daemonset.extensions/nginx-ds created
    [root@k8s01-node01 ~]# kubectl get pods
    NAME             READY   STATUS              RESTARTS   AGE
    nginx-ds-txxnb   0/1     ContainerCreating   0          2m35s

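kind: DaemonSet runs one copy of the pod on every node. Here the pod stays in ContainerCreating, so check its events and then the kubelet log for details: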

    [root@k8s01-node01 ~]# kubectl get pods
    NAME             READY   STATUS              RESTARTS   AGE
    nginx-ds-txxnb   0/1     ContainerCreating   0          2m35s
    [root@k8s01-node01 ~]# kubectl describe pod nginx-ds-txxnb
    .......
    Events:
      Type     Reason                  Age                   From                     Message
      ----     ------                  ----                  ----                     -------
      Normal   SuccessfulMountVolume   7m25s                 kubelet, 192.168.10.23   MountVolume.SetUp succeeded for volume "default-token-7l55q"
      Warning  FailedCreatePodSandBox  15s (x16 over 7m10s)  kubelet, 192.168.10.23   Failed create pod sandbox.
    [root@k8s01-node01 ~]# journalctl -u kubelet -f     # show the error in more detail
    5月 28 21:05:29 k8s01-node01 kubelet[39148]: E0528 21:05:29.924991 39148 kuberuntime_manager.go:647] createPodSandbox for pod "nginx-ds-txxnb_default(004fdd27-8148-11e9-8809-000c29a2d5b5)" failed: rpc error: code = Unknown desc = failed pulling image "gcr.io/google_containers/pause-amd64:3.0": Error response from daemon: Get https://gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
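When the kubelet log is long, it helps to list just the images that failed to pull. The filter below is a sketch that assumes the 'failed pulling image "..."' wording seen in the log line above. Other kubelet or Docker versions may phrase the error differently. failed_images is a hypothetical name.

```shell
#!/usr/bin/env bash
# failed_images: reads kubelet log lines on stdin and prints each distinct
# image name that appears in a 'failed pulling image "..."' message.
failed_images() {
  grep -o 'failed pulling image "[^"]*"' \
    | sed -E 's/^failed pulling image "(.*)"$/\1/' \
    | sort -u
}
```

Usage: `journalctl -u kubelet --no-pager | failed_images`.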

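3) Cause: the node cannot pull the pause image gcr.io/google_containers/pause-amd64:3.0 because gcr.io is unreachable from this network. Point kubelet at a mirror of the image instead.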

Edit the kubelet unit file and add --pod-infra-container-image with a mirrored pause image. The --pod-infra-container-image=cnych/pause-amd64:3.0 flag at the end of ExecStart is the new part. systemd treats only lines that start with # as comments, so do not append a trailing "# ..." note to the ExecStart line itself.

    [root@k8s01-node01 ~]# vim /etc/systemd/system/kubelet.service
    ......
    [Service]
    WorkingDirectory=/var/lib/kubelet
    ExecStart=/usr/k8s/bin/kubelet --fail-swap-on=false --cgroup-driver=cgroupfs --address=192.168.10.23 --hostname-override=192.168.10.23 --pod-infra-container-image=cnych/pause-amd64:3.0
    [root@k8s01-node01 ~]# systemctl daemon-reload
    [root@k8s01-node01 ~]# systemctl restart kubelet
    [root@k8s01-node01 ~]# docker images
    REPOSITORY          TAG     IMAGE ID       CREATED        SIZE
    cnych/pause-amd64   3.0     99e59f495ffa   3 years ago    747kB
    nginx               1.7.9   84581e99d807   4 years ago    91.7MB
    [root@k8s01-node01 ~]# kubectl get pods
    NAME             READY   STATUS    RESTARTS   AGE
    nginx-ds-txxnb   1/1     Running   0          14h
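The same edit can be made without opening an editor. This sketch inserts the flag directly after the kubelet binary on the ExecStart line. That position works whether the remaining flags are on one line or continued with backslashes. The function does nothing if the flag is already present. set_pause_image is a hypothetical name, and -i.bak assumes GNU sed.

```shell
#!/usr/bin/env bash
# set_pause_image UNIT_FILE IMAGE
# Inserts --pod-infra-container-image=IMAGE right after the binary path on the
# ExecStart line, unless the flag is already present. Keeps UNIT_FILE.bak.
set_pause_image() {
  local unit="$1" image="$2"
  if grep -q -- '--pod-infra-container-image=' "$unit"; then
    echo "flag already present in $unit" >&2
    return 0
  fi
  # "#" is the sed delimiter because the image name contains "/".
  sed -E -i.bak "s#^(ExecStart=[^[:space:]]+)#\\1 --pod-infra-container-image=${image}#" "$unit"
}
```

Usage: `set_pause_image /etc/systemd/system/kubelet.service cnych/pause-amd64:3.0 && systemctl daemon-reload && systemctl restart kubelet`.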

4) Access the service

    [root@k8s01-node01 ~]# kubectl get pods -o wide
    NAME             READY   STATUS    RESTARTS   AGE   IP            NODE            NOMINATED NODE   READINESS GATES
    nginx-ds-txxnb   1/1     Running   0          14h   172.30.23.2   192.168.10.23   <none>           <none>
    [root@k8s01-node01 ~]# curl 172.30.23.2
    [root@k8s01-node01 ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.254.0.1     <none>        443/TCP        38h
    nginx-ds     NodePort    10.254.66.68   <none>        80:31717/TCP   14h
    [root@k8s01-node01 ~]# netstat -lntup|grep 31717
    tcp6       0      0 :::31717                :::*                    LISTEN      41250/kube-proxy
    [root@k8s01-node01 ~]# curl 192.168.10.23:31717
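The NodePort (31717 here) is assigned at random from NODE_PORT_RANGE, so a script that tests the service has to look it up first. The sturdier way is kubectl's JSONPath output, `kubectl get svc nginx-ds -o jsonpath='{.spec.ports[0].nodePort}'`. If you only have the default table output, the awk sketch below takes the first port:nodePort/proto entry from the PORT(S) column. It assumes the column order shown above, and nodeport_from_svc_table is a hypothetical name.

```shell
#!/usr/bin/env bash
# nodeport_from_svc_table: reads "kubectl get svc NAME" table output on stdin
# (header + one row) and prints the node port from the PORT(S) column ($5),
# e.g. "80:31717/TCP" -> 31717.
nodeport_from_svc_table() {
  awk 'NR > 1 { split($5, p, "[:/]"); print p[2]; exit }'
}
```

Usage: `curl "192.168.10.23:$(kubectl get svc nginx-ds | nodeport_from_svc_table)"`.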

IX. Deploy the kube-dns add-on

1) Download the manifest and edit it

    wget https://raw.githubusercontent.com/kubernetes/kubernetes/v1.9.3/cluster/addons/dns/kube-dns.yaml.base
    cp kube-dns.yaml.base kube-dns.yaml

The edited file:

    # Copyright 2016 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # Should keep target in cluster/addons/dns-horizontal-autoscaler/dns-horizontal-autoscaler.yaml
    # in sync with this file.

    # __MACHINE_GENERATED_WARNING__

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "KubeDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.254.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        rollingUpdate:
          maxSurge: 10%
          maxUnavailable: 0
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          volumes:
          - name: kube-dns-config
            configMap:
              name: kube-dns
              optional: true
          containers:
          - name: kubedns
            image: cnych/k8s-dns-kube-dns-amd64:1.14.8
            resources:
              # TODO: Set memory limits when we've profiled the container for large
              # clusters, then set request = limit to keep this container in
              # guaranteed class. Currently, this container falls into the
              # "burstable" category so the kubelet doesn't backoff from restarting it.
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            livenessProbe:
              httpGet:
                path: /healthcheck/kubedns
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /readiness
                port: 8081
                scheme: HTTP
              # we poll on pod startup for the Kubernetes master service and
              # only setup the /readiness HTTP server once that's available.
              initialDelaySeconds: 3
              timeoutSeconds: 5
            args:
            - --domain=cluster.local.
            - --dns-port=10053
            - --config-dir=/kube-dns-config
            - --v=2
            env:
            - name: PROMETHEUS_PORT
              value: "10055"
            ports:
            - containerPort: 10053
              name: dns-local
              protocol: UDP
            - containerPort: 10053
              name: dns-tcp-local
              protocol: TCP
            - containerPort: 10055
              name: metrics
              protocol: TCP
            volumeMounts:
            - name: kube-dns-config
              mountPath: /kube-dns-config
          - name: dnsmasq
            image: cnych/k8s-dns-dnsmasq-nanny-amd64:1.14.8
            livenessProbe:
              httpGet:
                path: /healthcheck/dnsmasq
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            args:
            - -v=2
            - -logtostderr
            - -configDir=/etc/k8s/dns/dnsmasq-nanny
            - -restartDnsmasq=true
            - --
            - -k
            - --cache-size=1000
            - --no-negcache
            - --log-facility=-
            - --server=/cluster.local/127.0.0.1#10053
            - --server=/in-addr.arpa/127.0.0.1#10053
            - --server=/ip6.arpa/127.0.0.1#10053
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
            resources:
              requests:
                cpu: 150m
                memory: 20Mi
            volumeMounts:
            - name: kube-dns-config
              mountPath: /etc/k8s/dns/dnsmasq-nanny
          - name: sidecar
            image: cnych/k8s-dns-sidecar-amd64:1.14.8
            livenessProbe:
              httpGet:
                path: /metrics
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            args:
            - --v=2
            - --logtostderr
            - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local.,5,SRV
            - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local.,5,SRV
            ports:
            - containerPort: 10054
              name: metrics
              protocol: TCP
            resources:
              requests:
                memory: 20Mi
                cpu: 10m
          dnsPolicy: Default  # Don't use cluster DNS.
          serviceAccountName: kube-dns

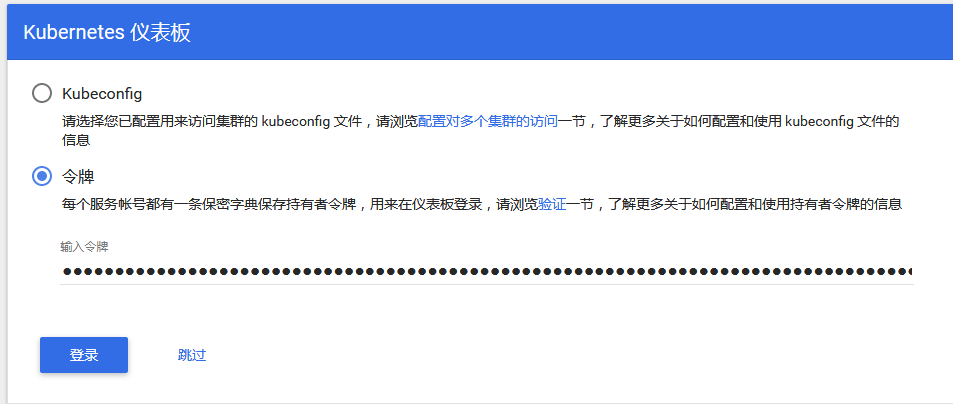

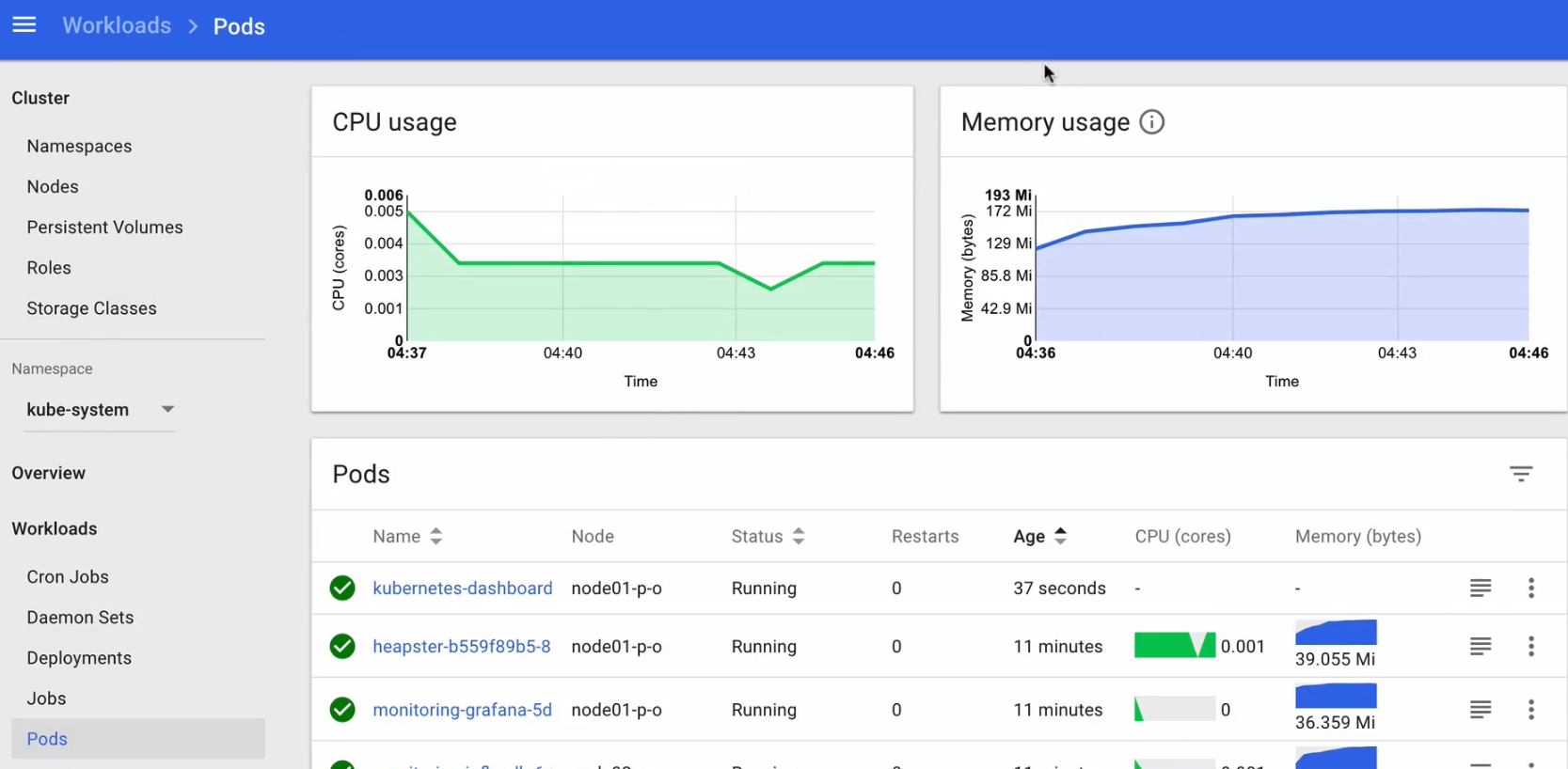

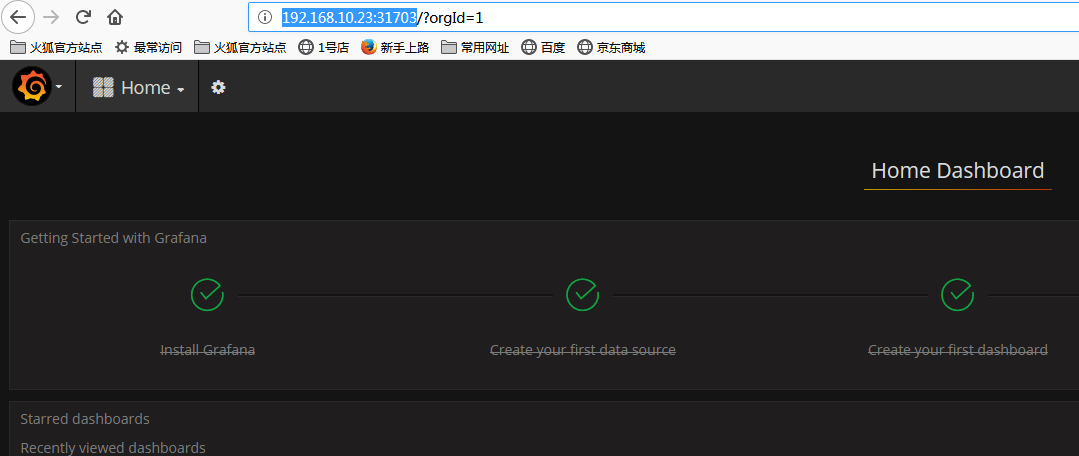

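Comparison with the original kube-dns.yaml.base (screenshots above). The edited file sets the Service clusterIP to 10.254.0.2 (CLUSTER_DNS_SVC_IP in env.sh) and the cluster domain to cluster.local. It also pulls the three container images from the cnych/ mirror.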
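The same edits can be applied with sed. This is a sketch under stated assumptions. First, the .base file marks the values with the placeholders __PILLAR__DNS__SERVER__ and __PILLAR__DNS__DOMAIN__; check with grep -n __PILLAR__ kube-dns.yaml.base before relying on that. Second, the images live under gcr.io/google_containers/. The trailing dot is stripped from the domain because the file already appends one in --domain=__PILLAR__DNS__DOMAIN__., and env.sh defines CLUSTER_DNS_DOMAIN="cluster.local.". render_kube_dns is a hypothetical name. Diff the result against the hand-edited file above, and check that the image tags exist in the mirror before applying it.

```shell
#!/usr/bin/env bash
# render_kube_dns BASE OUT DNS_IP DOMAIN [REGISTRY]
# Sketch: fills the (assumed) __PILLAR__ placeholders in kube-dns.yaml.base and
# replaces the gcr.io/google_containers/ image prefix with REGISTRY/ (default: cnych).
render_kube_dns() {
  local base="$1" out="$2" dns_ip="$3" domain="${4%.}" registry="${5:-cnych}"
  sed -e "s/__PILLAR__DNS__SERVER__/${dns_ip}/g" \
      -e "s/__PILLAR__DNS__DOMAIN__/${domain}/g" \
      -e "s#gcr.io/google_containers/#${registry}/#g" \
      "$base" > "$out"
}
```

Usage: `source /usr/k8s/bin/env.sh; render_kube_dns kube-dns.yaml.base kube-dns.yaml "$CLUSTER_DNS_SVC_IP" "$CLUSTER_DNS_DOMAIN"; diff kube-dns.yaml.base kube-dns.yaml`.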