http://www.cnblogs.com/cocowool/p/kubeadm_install_kubernetes.html

https://www.kubernetes.org.cn/doc-16

Example of deploying a Kubernetes 1.13.3 HA (high-availability) cluster with kubeadm: https://github.com/yanghongfei/Kubernetes

Installing from binaries: https://note.youdao.com/ynoteshare1/index.html?id=b96debbbfdee1e4eb8755515aac61ca4&type=notebook

I. Environment preparation

1) Server information

    [root@k8s6 ~]# cat /etc/redhat-release
    CentOS Linux release 7.4.1708 (Core)
    [root@k8s6 ~]# uname -r
    3.10.0-693.el7.x86_64

2) Prepare the machines and add them to the hosts file

    192.168.10.22 k8s06 k06 k6
    192.168.10.23 node01 n01 n1
    192.168.10.24 node02 n02 n2
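A minimal sketch for writing those entries on each machine, assuming bash and root access; add_hosts is a hypothetical helper name, not part of any tool. It appends only the lines that are not already present, so running it twice does not duplicate entries:

```bash
# add_hosts [FILE]: append the cluster's host entries to FILE (default /etc/hosts),
# skipping any line that is already there, so the function is safe to re-run.
add_hosts() {
  local file="${1:-/etc/hosts}" line
  while IFS= read -r line; do
    # -x: whole-line match, -F: fixed string; a missing file simply counts as "not found"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done <<'EOF'
192.168.10.22 k8s06 k06 k6
192.168.10.23 node01 n01 n1
192.168.10.24 node02 n02 n2
EOF
}
```

Run add_hosts on the master and on every node, or add_hosts /tmp/hosts.test to try it on a scratch file first.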

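3) Stop the firewall and disable it at boot (not needed on cloud platforms, which provide their own firewall)

The default firewall on CentOS 7 is firewalld.

    systemctl stop firewalld.service      # stop the firewall
    systemctl disable firewalld.service   # do not start it at boot
    firewall-cmd --state                  # check its state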

4) Time synchronization

    yum -y install ntp
    systemctl start ntpd.service
    netstat -lntup|grep ntpd
    [root@pvz01 ~]# ntpdate -u 192.168.1.6

192.168.1.6 is the NTP server on the author's network; use your own.

II. Configure the yum repositories for Kubernetes and Docker, then install them

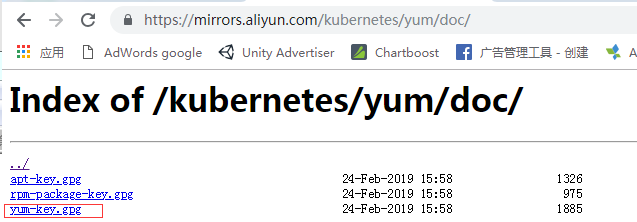

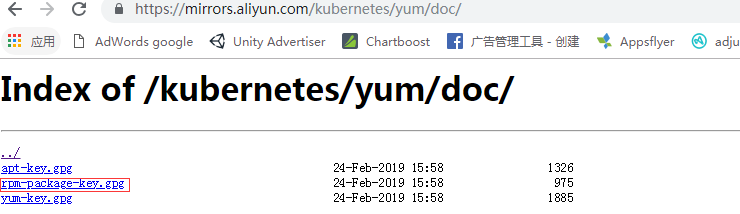

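1.1) Find the Kubernetes repo on the Aliyun mirror site

Copy the link address of the repo.

Copy the link address of the GPG key as well.

The two copied addresses are:

    https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg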

1.2) Download and import the signing keys

    wget https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    rpm --import rpm-package-key.gpg
    wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
    rpm --import yum-key.gpg

1.3) Create the Kubernetes yum repo file

    [root@k8s6 yum.repos.d]# cat /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes Repo
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
    enabled=1

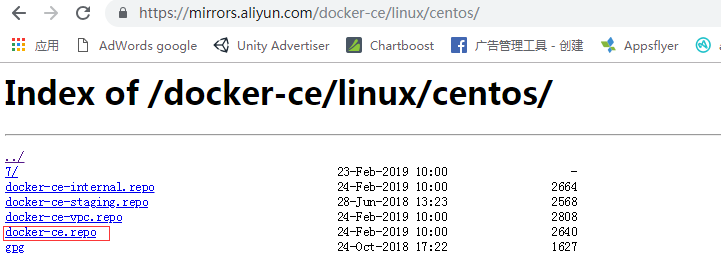

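2.1) Find the Docker CE yum repo. The docker package in CentOS's default repos is version 1.13, which is too old and not recommended.

Copy the link address, then download the repo file:

    [root@k8s6 yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo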

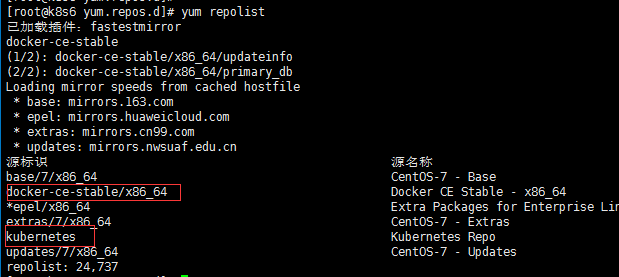

3) Check that the enabled yum repos now include kubernetes and docker-ce (for example with yum repolist)
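A small sketch of that check, assuming bash; repos_ready is a hypothetical helper that reads yum repolist output on stdin. The repo ids it looks for come from the [kubernetes] section above and from the downloaded docker-ce.repo:

```bash
# repos_ready: read `yum repolist` output on stdin and succeed only if both the
# kubernetes and the docker-ce repos are listed. yum may prefix a repo id with
# '!' (expired metadata) or '*' (mirrorlist), so those prefixes are allowed.
repos_ready() {
  local out
  out=$(cat)
  grep -Eq '^[!*]?kubernetes([/[:space:]]|$)' <<<"$out" &&
    grep -Eq '^[!*]?docker-ce' <<<"$out"
}
```

Usage: yum repolist | repos_ready && echo "repos ok"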

4) Install with yum

    yum install docker-ce kubelet kubeadm kubectl

Note: check that the signing keys from 1.2 have been imported.

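4.1) Install specific versions with yum

Without a version, yum installs the latest release, which may not match the version later passed to kubeadm init:

    yum install docker-ce kubelet kubeadm kubectl -y

Pin the version instead:

    yum install docker-ce kubelet-1.14.0 kubeadm-1.14.0 kubectl-1.14.0 -y

Check the client version:

    [root@master ~]# kubectl version
    Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.0", GitCommit:"641856db18352033a0d96dbc99153fa3b27298e5", GitTreeState:"clean", BuildDate:"2019-03-25T15:53:57Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

The connection error is expected at this point: there is no cluster and no kubeconfig yet, so kubectl falls back to localhost:8080.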
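To keep kubelet, kubeadm and kubectl on one version, and to reuse that same version later for kubeadm init --kubernetes-version, the package specs can be generated from a single value. A minimal sketch; k8s_pkgs is a hypothetical helper name:

```bash
# k8s_pkgs VERSION [PKG...]: print yum package specs pinned to one Kubernetes
# version. Defaults to kubelet, kubeadm and kubectl when no packages are given.
k8s_pkgs() {
  local ver="$1"; shift
  # yum package versions have no leading "v" (1.13.3, not v1.13.3)
  [[ $ver =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]] || { echo "bad version: $ver" >&2; return 1; }
  local -a pkgs=("$@") out=()
  (( ${#pkgs[@]} )) || pkgs=(kubelet kubeadm kubectl)
  local p
  for p in "${pkgs[@]}"; do
    out+=("$p-$ver")
  done
  echo "${out[*]}"
}
```

yum list kubeadm --showduplicates shows which versions the repo offers. Then, for example, yum install -y docker-ce $(k8s_pkgs 1.13.3) on the master and yum install -y docker-ce $(k8s_pkgs 1.13.3 kubelet kubeadm) on the nodes.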

III. Configure Kubernetes

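1) Edit /usr/lib/systemd/system/docker.service. This is only needed if images are pulled through a proxy; without a proxy, skip this step.

    [Service]
    Type=notify
    # the default is not to use systemd for cgroups because the delegate issues still
    # exists and systemd currently does not support the cgroup feature set required
    # for containers run by docker
    # added:
    Environment="HTTPS_PROXY=http.ik8s.io:10080"
    Environment="NO_PROXY=127.0.0.0/8,127.20.0.0/16"

systemd does not accept a comment after a setting on the same line, so the "added" note goes on its own line. HTTPS_PROXY should be the full URL of your proxy, including the http:// scheme. In NO_PROXY, 127.20.0.0/16 already lies inside 127.0.0.0/8; 172.20.0.0/16 was probably intended. After editing, run systemctl daemon-reload and restart docker.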
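Editing the packaged unit file works, but a docker-ce upgrade replaces files under /usr/lib/systemd/system. Docker's documentation instead suggests a systemd drop-in under /etc/systemd/system/docker.service.d/. A minimal sketch that prints such a drop-in; docker_proxy_dropin is a hypothetical helper, and the proxy URL in the usage line is a placeholder:

```bash
# docker_proxy_dropin PROXY_URL NO_PROXY_LIST: print a systemd drop-in that sets
# the proxy variables for docker.service without touching the packaged unit file.
docker_proxy_dropin() {
  [ $# -eq 2 ] || { echo "usage: docker_proxy_dropin PROXY_URL NO_PROXY_LIST" >&2; return 1; }
  # one setting per line; systemd does not allow trailing comments
  printf '[Service]\nEnvironment="HTTPS_PROXY=%s"\nEnvironment="NO_PROXY=%s"\n' "$1" "$2"
}
```

Usage: mkdir -p /etc/systemd/system/docker.service.d, then docker_proxy_dropin http://proxy.example.com:10080 127.0.0.0/8 > /etc/systemd/system/docker.service.d/http-proxy.conf, then systemctl daemon-reload && systemctl restart docker. systemctl show --property=Environment docker confirms what docker sees.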

2) Make sure both bridge-nf-call sysctls are 1

    [root@k8s6 ~]# cat /proc/sys/net/bridge/bridge-nf-call-ip6tables
    1
    [root@k8s6 ~]# cat /proc/sys/net/bridge/bridge-nf-call-iptables
    1
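The same check as a function, assuming bash; bridge_nf_ok is a hypothetical name, and its optional ROOT argument only exists so it can be tried against a scratch directory instead of the real /proc:

```bash
# bridge_nf_ok [ROOT]: succeed only if both bridge-nf-call sysctls read 1.
# ROOT defaults to "" (i.e. the real /proc); pass a directory to test on a fake tree.
bridge_nf_ok() {
  local root="${1:-}" f
  for f in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    # a missing file (br_netfilter not loaded) reads as "" and fails the check
    [ "$(cat "$root/proc/sys/net/bridge/$f" 2>/dev/null)" = 1 ] || return 1
  done
}
```

If the check fails or the files are missing, load the module with modprobe br_netfilter, put net.bridge.bridge-nf-call-iptables = 1 and net.bridge.bridge-nf-call-ip6tables = 1 into a file such as /etc/sysctl.d/k8s.conf (the file name is only a convention), and apply it with sysctl --system.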

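3) Handle swap

By default kubelet refuses to start while swap is on. Either tell kubelet to tolerate swap:

    [root@k8s6 ~]# cat /etc/sysconfig/kubelet
    KUBELET_EXTRA_ARGS="--fail-swap-on=false"

or turn swap off with swapoff -a. The two are alternatives, not equivalents: the first leaves swap on, so kubeadm init additionally needs --ignore-preflight-errors=Swap; swapoff -a disables swap, but only until the next reboot unless the swap entry in /etc/fstab is commented out as well.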
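For the swapoff route, two small helpers make the state checkable and the change persistent. A sketch assuming bash and GNU sed; swap_active and comment_out_swap are hypothetical names, and the optional path arguments exist only for trying them on copies:

```bash
# swap_active [FILE]: succeed if any swap area is in use.
# /proc/swaps has one header line followed by one line per active swap area.
swap_active() {
  local f="${1:-/proc/swaps}"
  [ "$(tail -n +2 "$f" | grep -c .)" -gt 0 ]
}

# comment_out_swap [FSTAB]: prefix uncommented entries whose 3rd field (fs type)
# is "swap" with '#', so swap stays off after a reboot. Keeps a .bak copy.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i.bak -E \
    '/^[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]]/s/^/#/' \
    "$fstab"
}
```

Usage: swapoff -a && comment_out_swap && ! swap_active && echo "swap is off". The original file is kept as /etc/fstab.bak.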

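4) Start the docker service

Enable docker and kubelet at boot, reload systemd (needed after editing docker.service), then start docker. kubelet is only enabled here; kubeadm init starts it.

    [root@k8s6 ~]# systemctl enable docker
    [root@k8s6 ~]# systemctl enable kubelet
    [root@k8s6 ~]# systemctl daemon-reload
    [root@k8s6 ~]# systemctl start docker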

4.1) Check which files the kubelet package installed

    [root@k8s6 ~]# rpm -ql kubelet
    /etc/kubernetes/manifests
    /etc/sysconfig/kubelet
    /etc/systemd/system/kubelet.service
    /usr/bin/kubelet

4.2) Pull the images before initializing

The images kubeadm needs are hosted abroad on k8s.gcr.io and cannot be pulled directly:

    k8s.gcr.io/kube-apiserver:v1.13.3
    k8s.gcr.io/kube-controller-manager:v1.13.3
    k8s.gcr.io/kube-scheduler:v1.13.3
    k8s.gcr.io/kube-proxy:v1.13.3
    k8s.gcr.io/pause:3.1
    k8s.gcr.io/etcd:3.2.24
    k8s.gcr.io/coredns:1.2.6

Pull them from the Aliyun mirror instead:

    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.13.3
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.13.3
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.13.3
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.13.3
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.2.6
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.2.24

Then tag the pulled images with their k8s.gcr.io names, so that kubeadm treats them as if they had been pulled from k8s.gcr.io:

    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.13.3 k8s.gcr.io/kube-apiserver:v1.13.3
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.13.3 k8s.gcr.io/kube-controller-manager:v1.13.3
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.13.3 k8s.gcr.io/kube-scheduler:v1.13.3
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.13.3 k8s.gcr.io/kube-proxy:v1.13.3
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
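Rather than typing the pull and tag commands per image, they can be generated from the list that kubeadm itself prints with kubeadm config images list --kubernetes-version v1.13.3, which also yields the matching etcd, coredns and pause tags for other versions. A minimal sketch; mirror_cmds is a hypothetical helper, and it only prints the commands so they can be reviewed before running:

```bash
# mirror_cmds [MIRROR]: read image names such as k8s.gcr.io/kube-proxy:v1.13.3 on
# stdin and print the docker pull (from MIRROR) and docker tag (back to the
# original name) commands for each. Nothing is executed.
mirror_cmds() {
  local mirror="${1:-registry.cn-hangzhou.aliyuncs.com/google_containers}"
  local img name
  while IFS= read -r img; do
    [[ -z $img ]] && continue
    name=${img##*/}   # strip the registry: k8s.gcr.io/kube-proxy:v1.13.3 -> kube-proxy:v1.13.3
    printf 'docker pull %s/%s\n' "$mirror" "$name"
    printf 'docker tag %s/%s %s\n' "$mirror" "$name" "$img"
  done
}
```

Usage: kubeadm config images list --kubernetes-version v1.13.3 | mirror_cmds | bash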

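4.3) Initialize the control plane. --pod-network-cidr must match the "Network" value in net-conf.json inside the kube-flannel.yml used in step 6 (10.244.0.0/16 in the upstream file); change one of the two so they agree.

    kubeadm init --kubernetes-version=v1.13.3 --pod-network-cidr=10.200.0.0/16 --apiserver-advertise-address=192.168.10.22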

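Other versions. If swap is left on (--fail-swap-on=false above), the swap preflight check is skipped with --ignore-preflight-errors=Swap. --image-repository makes kubeadm pull the control-plane images straight from the Aliyun mirror, an alternative to the manual pull and tag in 4.2. Note that 200.200.0.0/16 is public address space, not a private range; a private range such as 10.244.0.0/16 is a safer choice for the pod network.

    kubeadm init --kubernetes-version=v1.14.1 --pod-network-cidr=10.200.0.0/16 --apiserver-advertise-address=192.168.10.22 --ignore-preflight-errors=Swap
    kubeadm init --kubernetes-version=v1.14.0 --pod-network-cidr=200.200.0.0/16 --service-cidr=172.16.0.0/16 --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --apiserver-advertise-address=10.10.12.143
    kubeadm init --kubernetes-version=v1.14.1 --pod-network-cidr=200.200.0.0/16 --service-cidr=172.16.0.0/16 --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --apiserver-advertise-address=192.168.10.22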
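The pod CIDR should also not overlap the service CIDR (kubeadm's default --service-cidr is 10.96.0.0/12) or the hosts' own network (for example 192.168.10.0/24, assuming a /24 for the hosts above). A sketch of an overlap check in bash arithmetic; cidr_overlap and ip_to_int are hypothetical helpers. Two IPv4 blocks overlap exactly when their network addresses agree on the shorter of the two prefixes:

```bash
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<<"$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# cidr_overlap NET1/LEN1 NET2/LEN2: succeed if the two IPv4 blocks share any address.
cidr_overlap() {
  local a_len=${1#*/} b_len=${2#*/}
  local len=$(( a_len < b_len ? a_len : b_len ))            # compare on the shorter prefix
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF )) # len=0 gives mask 0
  local a b
  a=$(ip_to_int "${1%/*}")
  b=$(ip_to_int "${2%/*}")
  (( (a & mask) == (b & mask) ))
}
```

With the values used above, cidr_overlap 10.200.0.0/16 10.96.0.0/12 && echo "pod CIDR overlaps the service CIDR" prints nothing.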

Output of kubeadm init:

    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes master has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of machines by running the following on each node as root:

      kubeadm join 192.168.10.22:6443 --token 9422jr.9eqpi4lvozb4auw6 --discovery-token-ca-cert-hash sha256:1e624e95c2b5efe6bebd7a649492327b5d89366ca8fd1e65bb508522a71ff3a8

Run the commands suggested in the output so kubectl can reach the cluster:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

5) Check the health of the control-plane components

    [root@k8s6 ~]# kubectl get cs
    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health": "true"}
    [root@k8s6 ~]# kubectl get componentstatus
    NAME                 STATUS    MESSAGE              ERROR
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-0               Healthy   {"health": "true"}

Check whether the node is ready. At this point it is NotReady:

    [root@k8s6 ~]# kubectl get nodes
    NAME   STATUS     ROLES    AGE   VERSION
    k8s6   NotReady   master   25m   v1.13.3

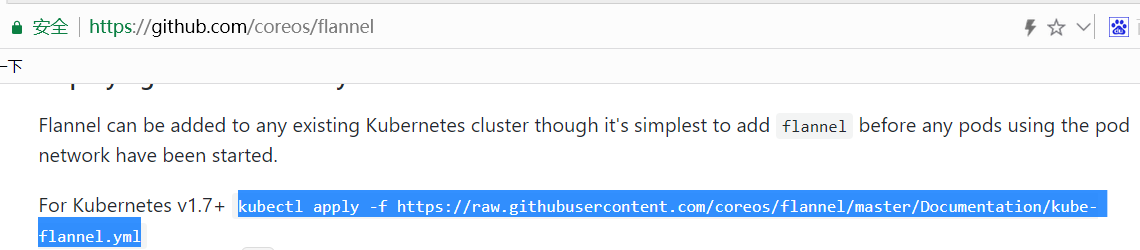

6) Fix the NotReady status. The node is NotReady because the cluster has no pod network (CNI plugin) yet; flannel is used here.

The command below installs flannel from the upstream manifest. Do not run it as-is: download kube-flannel.yml, tune it first (6.1, 6.2), then apply the local copy.

    [root@k8s6 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    [root@k8s6 ~]# docker images
    REPOSITORY   TAG   IMAGE ID   CREATED   SIZE
    registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy   v1.13.3   98db19758ad4   3 weeks ago   80.3MB
    k8s.gcr.io/kube-proxy   v1.13.3   98db19758ad4   3 weeks ago   80.3MB
    k8s.gcr.io/kube-apiserver   v1.13.3   fe242e556a99   3 weeks ago   181MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver   v1.13.3   fe242e556a99   3 weeks ago   181MB
    k8s.gcr.io/kube-controller-manager   v1.13.3   0482f6400933   3 weeks ago   146MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager   v1.13.3   0482f6400933   3 weeks ago   146MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler   v1.13.3   3a6f709e97a0   3 weeks ago   79.6MB
    k8s.gcr.io/kube-scheduler   v1.13.3   3a6f709e97a0   3 weeks ago   79.6MB
    quay.io/coreos/flannel   v0.11.0-amd64   ff281650a721   3 weeks ago   52.6MB   # the flannel network component must be present
    k8s.gcr.io/coredns   1.2.6   f59dcacceff4   3 months ago   40MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/coredns   1.2.6   f59dcacceff4   3 months ago   40MB
    k8s.gcr.io/etcd   3.2.24   3cab8e1b9802   5 months ago   220MB
    registry.cn-hangzhou.aliyuncs.com/google_containers/etcd   3.2.24   3cab8e1b9802   5 months ago   220MB
    k8s.gcr.io/pause   3.1   da86e6ba6ca1   14 months ago   742kB
    registry.cn-hangzhou.aliyuncs.com/google_containers/pause   3.1   da86e6ba6ca1   14 months ago   742kB

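6.1) Diff of the tuned kube-flannel.yml against the original (kube-flannel.yml.bak)

The only change enables DirectRouting for the vxlan backend: hosts on the same L2 subnet then route pod traffic directly, and VXLAN encapsulation is used only between hosts on different subnets. The key is spelled DirectRouting, and since net-conf.json is JSON, the last key must not be followed by a comma.

    [root@K8smaster ~]# diff kube-flannel.yml kube-flannel.yml.bak
    129,130c129
    <         "Type": "vxlan",
    <         "DirectRouting": true
    ---
    >         "Type": "vxlan"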
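The same edit can be scripted. A minimal sketch, assuming GNU sed (as on CentOS 7) and the upstream v0.11.0 layout where "Type": "vxlan" is the last key of Backend; patch_flannel is a hypothetical helper, not part of flannel. Besides adding DirectRouting, it also sets Network to the --pod-network-cidr passed to kubeadm init, which the diff above does not do:

```bash
# patch_flannel FILE CIDR: in kube-flannel.yml, set net-conf.json's "Network" to
# CIDR and add "DirectRouting": true after the vxlan backend type. Re-running is
# harmless: once "Type" ends with a comma the second expression no longer matches.
patch_flannel() {
  local file="$1" cidr="$2"
  [[ $cidr =~ ^[0-9.]+/[0-9]+$ ]] || { echo "bad CIDR: $cidr" >&2; return 1; }
  sed -i -E \
    -e "s#\"Network\": \"[0-9./]+\"#\"Network\": \"${cidr}\"#" \
    -e 's/^([[:space:]]*)"Type": "vxlan"$/\1"Type": "vxlan",\n\1"DirectRouting": true/' \
    "$file"
}
```

Usage: cp kube-flannel.yml kube-flannel.yml.bak && patch_flannel kube-flannel.yml 10.200.0.0/16 && kubectl apply -f kube-flannel.yml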

6.2) The modified kube-flannel.yml. Its Network is still the upstream 10.244.0.0/16, while kubeadm init in 4.3 used --pod-network-cidr=10.200.0.0/16; as noted there, the two should be made the same.

    ---
    apiVersion: extensions/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: psp.flannel.unprivileged
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
        seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
        apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
        apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
    spec:
      privileged: false
      volumes:
        - configMap
        - secret
        - emptyDir
        - hostPath
      allowedHostPaths:
        - pathPrefix: "/etc/cni/net.d"
        - pathPrefix: "/etc/kube-flannel"
        - pathPrefix: "/run/flannel"
      readOnlyRootFilesystem: false
      # Users and groups
      runAsUser:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      # Privilege Escalation
      allowPrivilegeEscalation: false
      defaultAllowPrivilegeEscalation: false
      # Capabilities
      allowedCapabilities: ['NET_ADMIN']
      defaultAddCapabilities: []
      requiredDropCapabilities: []
      # Host namespaces
      hostPID: false
      hostIPC: false
      hostNetwork: true
      hostPorts:
      - min: 0
        max: 65535
      # SELinux
      seLinux:
        # SELinux is unused in CaaSP
        rule: 'RunAsAny'
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    rules:
      - apiGroups: ['extensions']
        resources: ['podsecuritypolicies']
        verbs: ['use']
        resourceNames: ['psp.flannel.unprivileged']
      - apiGroups:
          - ""
        resources:
          - pods
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes/status
        verbs:
          - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan",
            "DirectRouting": true
          }
        }
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-amd64
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: amd64
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-amd64
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-amd64
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-arm64
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: arm64
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-arm64
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-arm64
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-arm
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: arm
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-arm
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-arm
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-ppc64le
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: ppc64le
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-ppc64le
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-ppc64le
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-s390x
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: s390x
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-s390x
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-s390x
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg

Check the node status again:

    [root@k8s6 ~]# kubectl get nodes
    NAME   STATUS   ROLES    AGE   VERSION
    k8s6   Ready    master   41m   v1.13.3

The Kubernetes master node is now up.

IV. Worker node setup

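1) On each worker node

    yum install docker-ce kubelet kubeadm -y

Or pin the version. It should match the master's version (v1.13.3 in this cluster, even though 1.14.0 is shown here):

    yum install docker-ce kubelet-1.14.0 kubeadm-1.14.0 -y
    systemctl enable docker
    systemctl enable kubelet
    systemctl daemon-reload
    systemctl start docker
    swapoff -a

On the master, save the images the nodes need and copy them over:

    docker save -o mynode.gz k8s.gcr.io/kube-proxy:v1.13.3 quay.io/coreos/flannel:v0.11.0-amd64 k8s.gcr.io/pause:3.1
    scp mynode.gz root@n1:/root/

On the node, load the images and join the cluster with the command printed by kubeadm init:

    docker load -i mynode.gz
    kubeadm join 192.168.10.22:6443 --token 9422jr.9eqpi4lvozb4auw6 --discovery-token-ca-cert-hash sha256:1e624e95c2b5efe6bebd7a649492327b5d89366ca8fd1e65bb508522a71ff3a8

The token expires after 24 hours by default; kubeadm token create --print-join-command on the master prints a fresh join command.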
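A sketch that prints the save, copy and load steps for several nodes at once, assuming root SSH access from the master to each node; node_image_cmds is a hypothetical helper. docker save writes a plain tar archive whatever the file is called, so the sketch pipes it through gzip; docker load accepts gzip-compressed archives:

```bash
# Images every worker node needs (taken from the list above).
NODE_IMAGES=(k8s.gcr.io/kube-proxy:v1.13.3 quay.io/coreos/flannel:v0.11.0-amd64 k8s.gcr.io/pause:3.1)

# node_image_cmds NODE...: print (not run) the commands that save the images once
# on the master, copy the archive to each NODE and load it there over ssh.
node_image_cmds() {
  local node
  echo "docker save ${NODE_IMAGES[*]} | gzip > mynode.tar.gz"
  for node in "$@"; do
    echo "scp mynode.tar.gz root@$node:/root/"
    echo "ssh root@$node docker load -i /root/mynode.tar.gz"
  done
}
```

Usage on the master, with the aliases from /etc/hosts: node_image_cmds n1 n2 | bash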

2) Images each worker node needs

    [root@node02 ~]# docker image ls
    REPOSITORY               TAG             IMAGE ID       CREATED         SIZE
    k8s.gcr.io/kube-proxy    v1.13.3         98db19758ad4   3 weeks ago     80.3MB
    quay.io/coreos/flannel   v0.11.0-amd64   ff281650a721   3 weeks ago     52.6MB
    k8s.gcr.io/pause         3.1             da86e6ba6ca1   14 months ago   742kB

3.1) On the master, list the nodes

    [root@k8s6 ~]# kubectl get nodes
    NAME     STATUS   ROLES    AGE   VERSION
    k8s6     Ready    master   82m   v1.13.3
    node01   Ready    <none>   23m   v1.13.3
    node02   Ready    <none>   24m   v1.13.3

3.2) List the running system pods

    [root@k8s6 ~]# kubectl get pods -n kube-system
    NAME                           READY   STATUS    RESTARTS   AGE
    coredns-86c58d9df4-g65pw       1/1     Running   0          86m
    coredns-86c58d9df4-rx4cd       1/1     Running   0          86m
    etcd-k8s6                      1/1     Running   0          85m
    kube-apiserver-k8s6            1/1     Running   0          85m
    kube-controller-manager-k8s6   1/1     Running   0          85m
    kube-flannel-ds-amd64-7swcd    1/1     Running   0          29m
    kube-flannel-ds-amd64-hj2z2    1/1     Running   1          27m
    kube-flannel-ds-amd64-sj8vp    1/1     Running   0          73m
    kube-proxy-dl57g               1/1     Running   0          27m
    kube-proxy-f8wd8               1/1     Running   0          29m
    kube-proxy-jgzpw               1/1     Running   0          86m
    kube-scheduler-k8s6            1/1     Running   0          86m

3.3) Pod details (-o wide)

    [root@k8s6 ~]# kubectl get pods -n kube-system -o wide
    NAME                           READY   STATUS    RESTARTS   AGE   IP              NODE     NOMINATED NODE   READINESS GATES
    coredns-86c58d9df4-g65pw       1/1     Running   0          90m   10.200.0.2      k8s6     <none>           <none>
    coredns-86c58d9df4-rx4cd       1/1     Running   0          90m   10.200.0.3      k8s6     <none>           <none>
    etcd-k8s6                      1/1     Running   0          89m   192.168.10.22   k8s6     <none>           <none>
    kube-apiserver-k8s6            1/1     Running   0          89m   192.168.10.22   k8s6     <none>           <none>
    kube-controller-manager-k8s6   1/1     Running   0          89m   192.168.10.22   k8s6     <none>           <none>
    kube-flannel-ds-amd64-7swcd    1/1     Running   0          33m   192.168.10.24   node02   <none>           <none>
    kube-flannel-ds-amd64-hj2z2    1/1     Running   1          31m   192.168.10.23   node01   <none>           <none>
    kube-flannel-ds-amd64-sj8vp    1/1     Running   0          77m   192.168.10.22   k8s6     <none>           <none>
    kube-proxy-dl57g               1/1     Running   0          31m   192.168.10.23   node01   <none>           <none>
    kube-proxy-f8wd8               1/1     Running   0          33m   192.168.10.24   node02   <none>           <none>
    kube-proxy-jgzpw               1/1     Running   0          90m   192.168.10.22   k8s6     <none>           <none>
    kube-scheduler-k8s6            1/1     Running   0          89m   192.168.10.22   k8s6     <none>           <none>

3.4) List the namespaces

    [root@k8s6 ~]# kubectl get ns
    NAME          STATUS   AGE
    default       Active   88m
    kube-public   Active   88m
    kube-system   Active   88m

V. Labels and label selectors

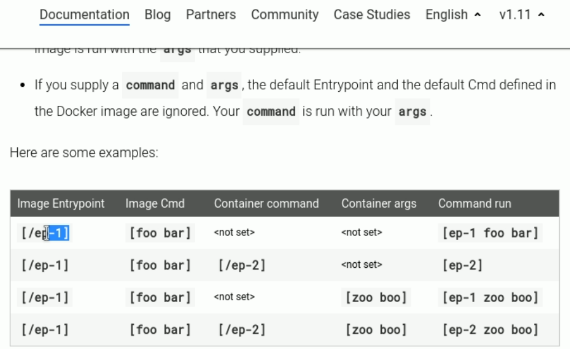

Overriding an image's default command and arguments: https://kubernetes.io/docs/tasks/inject-data-application/define-command-argument-container/

1) Create a pod from a manifest. The manifest defines the labels app and tier:

    [root@k8s6 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-demo
      namespace: default
      labels:
        app: myapp
        tier: frontend
    spec:
      containers:
      - name: myapp
        image: nginx:1.14-alpine
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443
      - name: busybox
        image: busybox:latest
        imagePullPolicy: IfNotPresent
        command:
        - "/bin/sh"
        - "-c"
        - "sleep 3600"
    [root@k8s6 manifests]# kubectl create -f pod-demo.yaml
    pod/pod-demo created
    [root@k8s6 manifests]# kubectl get pods --show-labels
    NAME                           READY   STATUS             RESTARTS   AGE    LABELS
    nginx-deploy-79b598b88-pt9xq   0/1     ImagePullBackOff   0          112m   pod-template-hash=79b598b88,run=nginx-deploy
    nginx-test-67d85d447c-brwb2    1/1     Running            0          112m   pod-template-hash=67d85d447c,run=nginx-test
    nginx-test-67d85d447c-l7xvs    1/1     Running            0          112m   pod-template-hash=67d85d447c,run=nginx-test
    nginx-test-67d85d447c-qmrrw    1/1     Running            0          112m   pod-template-hash=67d85d447c,run=nginx-test
    nginx-test-67d85d447c-rsmdt    1/1     Running            0          112m   pod-template-hash=67d85d447c,run=nginx-test
    nginx-test-67d85d447c-twk77    1/1     Running            0          112m   pod-template-hash=67d85d447c,run=nginx-test
    pod-demo                       2/2     Running            0          51s    app=myapp,tier=frontend

2) View pods by the app label: -L adds the label as an extra column, -l filters by it

    [root@k8s6 manifests]# kubectl get pods -L app
    NAME                           READY   STATUS             RESTARTS   AGE    APP
    nginx-deploy-79b598b88-pt9xq   0/1     ImagePullBackOff   0          112m
    nginx-test-67d85d447c-brwb2    1/1     Running            0          112m
    nginx-test-67d85d447c-l7xvs    1/1     Running            0          112m
    nginx-test-67d85d447c-qmrrw    1/1     Running            0          112m
    nginx-test-67d85d447c-rsmdt    1/1     Running            0          112m
    nginx-test-67d85d447c-twk77    1/1     Running            0          112m
    pod-demo                       2/2     Running            0          34s    myapp
    [root@k8s6 manifests]# kubectl get pods -L app,run
    NAME                           READY   STATUS             RESTARTS   AGE     APP     RUN
    nginx-deploy-79b598b88-pt9xq   0/1     ImagePullBackOff   0          114m            nginx-deploy
    nginx-test-67d85d447c-brwb2    1/1     Running            0          114m            nginx-test
    nginx-test-67d85d447c-l7xvs    1/1     Running            0          114m            nginx-test
    nginx-test-67d85d447c-qmrrw    1/1     Running            0          114m            nginx-test
    nginx-test-67d85d447c-rsmdt    1/1     Running            0          114m            nginx-test
    nginx-test-67d85d447c-twk77    1/1     Running            0          114m            nginx-test
    pod-demo                       2/2     Running            0          2m53s   myapp
    [root@k8s6 manifests]# kubectl get pods -l app
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          6s

3) Label resources manually

3.1) See kubectl label --help for usage.

3.2) kubectl label pods POD_NAME k=v adds the label k=v to the pod. Changing the value of a label that already exists additionally requires --overwrite.

    [root@k8s6 manifests]# kubectl get pods -l app --show-labels
    NAME       READY   STATUS    RESTARTS   AGE   LABELS
    pod-demo   2/2     Running   0          10m   app=myapp,tier=frontend
    [root@k8s6 manifests]# kubectl label pods pod-demo release=stable
    pod/pod-demo labeled
    [root@k8s6 manifests]# kubectl get pods -l app --show-labels
    NAME       READY   STATUS    RESTARTS   AGE   LABELS
    pod-demo   2/2     Running   0          11m   app=myapp,release=stable,tier=frontend

3.3) Label selectors

Equality-based: =, ==, !=

Set-based: KEY in (VALUE1,VALUE2), KEY notin (VALUE1,VALUE2), KEY (the key exists), !KEY (the key does not exist)

Filter pods with a selector; comma-separated requirements must all hold:

    [root@k8s6 manifests]# kubectl get pods -l release
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          17m
    [root@k8s6 manifests]# kubectl get pods -l release,app
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          17m
    [root@k8s6 manifests]# kubectl get pods -l release=stable
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          17m
    [root@k8s6 manifests]# kubectl get pods -l release=stable --show-labels
    NAME       READY   STATUS    RESTARTS   AGE   LABELS
    pod-demo   2/2     Running   0          17m   app=myapp,release=stable,tier=frontend

More selector examples. Note that release!=canary and release notin (...) also match pods that have no release label at all, which is why the unlabeled nginx pods appear in those results:

    [root@k8s6 manifests]# kubectl get pods
    NAME                           READY   STATUS             RESTARTS   AGE
    nginx-deploy-79b598b88-pt9xq   0/1     ImagePullBackOff   0          132m
    nginx-test-67d85d447c-brwb2    1/1     Running            0          132m
    nginx-test-67d85d447c-l7xvs    1/1     Running            0          132m
    nginx-test-67d85d447c-qmrrw    1/1     Running            0          132m
    nginx-test-67d85d447c-rsmdt    1/1     Running            0          132m
    nginx-test-67d85d447c-twk77    1/1     Running            0          132m
    pod-demo                       2/2     Running            0          20m
    [root@k8s6 manifests]# kubectl label pods nginx-test-67d85d447c-twk77 release=canary
    pod/nginx-test-67d85d447c-twk77 labeled
    [root@k8s6 manifests]# kubectl get pods -l release
    NAME                          READY   STATUS    RESTARTS   AGE
    nginx-test-67d85d447c-twk77   1/1     Running   0          133m
    pod-demo                      2/2     Running   0          21m
    [root@k8s6 manifests]# kubectl get pods -l release=canary
    NAME                          READY   STATUS    RESTARTS   AGE
    nginx-test-67d85d447c-twk77   1/1     Running   0          133m
    [root@k8s6 manifests]# kubectl get pods -l release,app
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          22m
    [root@k8s6 manifests]# kubectl get pods -l release=stable,app=myapp
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          22m
    [root@k8s6 manifests]# kubectl get pods -l release!=canary
    NAME                           READY   STATUS             RESTARTS   AGE
    nginx-deploy-79b598b88-pt9xq   0/1     ImagePullBackOff   0          135m
    nginx-test-67d85d447c-brwb2    1/1     Running            0          135m
    nginx-test-67d85d447c-l7xvs    1/1     Running            0          134m
    nginx-test-67d85d447c-qmrrw    1/1     Running            0          135m
    nginx-test-67d85d447c-rsmdt    1/1     Running            0          134m
    pod-demo                       2/2     Running            0          22m
    [root@k8s6 manifests]# kubectl get pods -l "release in (canary,beta,alpha)"
    NAME                          READY   STATUS    RESTARTS   AGE
    nginx-test-67d85d447c-twk77   1/1     Running   0          135m
    [root@k8s6 manifests]# kubectl get pods -l "release notin (canary,beta,alpha)"
    NAME                           READY   STATUS         RESTARTS   AGE
    nginx-deploy-79b598b88-pt9xq   0/1     ErrImagePull   0          136m
    nginx-test-67d85d447c-brwb2    1/1     Running        0          136m
    nginx-test-67d85d447c-l7xvs    1/1     Running        0          135m
    nginx-test-67d85d447c-qmrrw    1/1     Running        0          136m
    nginx-test-67d85d447c-rsmdt    1/1     Running        0          135m
    pod-demo                       2/2     Running        0          24m
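The selector rules can be modeled in a few lines of bash to see exactly which label sets a requirement accepts. This is a hypothetical teaching helper, not how kubectl or the API server implements selectors, and it takes one requirement per argument instead of parsing commas:

```bash
# selector_match LABELS REQ...: succeed if the label set satisfies ALL requirements.
# LABELS is "k1=v1,k2=v2" as printed by --show-labels. Supported forms:
# k=v, k==v, k!=v, "k in (a,b)", "k notin (a,b)", k, !k. Returns 2 on bad input.
selector_match() {
  local labels="$1"; shift
  local -A have
  local -a pairs=()
  local pair req key op val vals hit
  local re_set='^([^[:space:]!=]+)[[:space:]]+(in|notin)[[:space:]]*\((.*)\)$'
  local re_ne='^([^!=]+)!=(.*)$'
  local re_eq='^([^!=]+)==?(.*)$'
  local re_absent='^!([^[:space:]!=]+)$'
  local re_exists='^([^[:space:]!=]+)$'

  IFS=',' read -ra pairs <<<"$labels"
  for pair in "${pairs[@]}"; do
    [[ -n $pair ]] || continue
    have["${pair%%=*}"]="${pair#*=}"
  done

  for req in "$@"; do
    if [[ $req =~ $re_set ]]; then
      key=${BASH_REMATCH[1]} op=${BASH_REMATCH[2]} vals=",${BASH_REMATCH[3]// /},"
      hit=no
      [[ ${have[$key]+x} && $vals == *",${have[$key]},"* ]] && hit=yes
      [[ $op == in && $hit == no ]] && return 1
      # notin also matches objects that do not carry the key at all
      [[ $op == notin && $hit == yes ]] && return 1
    elif [[ $req =~ $re_ne ]]; then
      key=${BASH_REMATCH[1]} val=${BASH_REMATCH[2]}
      # != likewise matches objects without the key
      [[ ${have[$key]+x} && ${have[$key]} == "$val" ]] && return 1
    elif [[ $req =~ $re_eq ]]; then
      key=${BASH_REMATCH[1]} val=${BASH_REMATCH[2]}
      [[ ${have[$key]+x} && ${have[$key]} == "$val" ]] || return 1
    elif [[ $req =~ $re_absent ]]; then
      [[ ${have[${BASH_REMATCH[1]}]+x} ]] && return 1
    elif [[ $req =~ $re_exists ]]; then
      [[ ${have[${BASH_REMATCH[1]}]+x} ]] || return 1
    else
      echo "unsupported requirement: $req" >&2
      return 2
    fi
  done
  return 0
}
```

For example, selector_match "pod-template-hash=67d85d447c,run=nginx-test" "release!=canary" succeeds, mirroring why the unlabeled nginx pods show up in the release!=canary output above.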

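4) Label selectors in manifest fields (for example spec.selector of a Deployment or ReplicaSet)

matchLabels: the key/value pairs are given directly.

matchExpressions: the selector is built from expressions of the form {key: "KEY", operator: "OPERATOR", values: [VAL1, VAL2, ...]}.

Operators: for In and NotIn, the values field must be a non-empty list; for Exists and DoesNotExist, the values field must be empty.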
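A sketch of a Deployment selector that combines both forms, wrapped in a bash function that prints the manifest; the function name and the Deployment name are hypothetical. The template labels must satisfy every selector term, otherwise the API server rejects the Deployment:

```bash
# selector_demo: print a Deployment whose selector uses matchLabels together with
# matchExpressions. All terms are ANDed; the template labels satisfy each of them.
selector_demo() {
  cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-selector-demo          # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp                     # exact key/value match
    matchExpressions:
    - {key: release, operator: In, values: [canary, stable]}   # non-empty values
    - {key: tier, operator: Exists}                            # no values for Exists
  template:
    metadata:
      labels:
        app: myapp
        release: canary
        tier: frontend
    spec:
      containers:
      - name: myapp
        image: nginx:1.14-alpine
EOF
}
```

Usage: selector_demo | kubectl apply -f -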

Example: add a label to a node

    [root@k8s6 manifests]# kubectl get nodes --show-labels
    NAME     STATUS   ROLES    AGE     VERSION   LABELS
    k8s6     Ready    master   3d16h   v1.13.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s6,node-role.kubernetes.io/master=
    node01   Ready    <none>   3d15h   v1.13.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=node01
    node02   Ready    <none>   3d15h   v1.13.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=node02
    [root@k8s6 manifests]# kubectl label nodes node01 disktype=ssd
    node/node01 labeled
    [root@k8s6 manifests]# kubectl get nodes --show-labels
    NAME     STATUS   ROLES    AGE     VERSION   LABELS
    k8s6     Ready    master   3d16h   v1.13.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s6,node-role.kubernetes.io/master=
    node01   Ready    <none>   3d15h   v1.13.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/hostname=node01
    node02   Ready    <none>   3d15h   v1.13.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=node02

5) Scheduling with nodeSelector <map[string]string>

    [root@k8s6 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-demo
      namespace: default
      labels:
        app: myapp
        tier: frontend
    spec:
      containers:
      - name: myapp
        image: nginx:1.14-alpine
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443
      - name: busybox
        image: busybox:latest
        imagePullPolicy: IfNotPresent
        command:
        - "/bin/sh"
        - "-c"
        - "sleep 3600"
      nodeSelector:
        disktype: ssd

The pod now runs only on nodes labeled disktype=ssd (node01 here).
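To confirm where the scheduler placed the pod, compare its spec.nodeName with the nodes that carry the label. A sketch assuming kubectl is configured as above; pod_on_labeled_node is a hypothetical helper name:

```bash
# pod_on_labeled_node POD SELECTOR: succeed if POD is scheduled on a node matching
# the label SELECTOR. Returns 1 if it is unscheduled or elsewhere, 2 if kubectl fails.
pod_on_labeled_node() {
  local pod="$1" selector="$2" node
  node=$(kubectl get pod "$pod" -o jsonpath='{.spec.nodeName}') || return 2
  [[ -n $node ]] || return 1   # still Pending: no node matched the nodeSelector yet
  # -o name prints one "node/NAME" line per matching node
  kubectl get nodes -l "$selector" -o name | grep -qx "node/$node"
}
```

Usage: pod_on_labeled_node pod-demo disktype=ssd && echo "pod-demo is on an ssd node". If no node carries the label, the pod stays Pending and the helper returns 1.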

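6) The annotations field

nodeSelector <map[string]string>: node label selector; the pod can only be scheduled onto nodes that carry all of these labels.

nodeName <string>: assigns the pod directly to the named node.

annotations: unlike labels, annotations cannot be used to select resource objects; they only attach "metadata" to an object.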

The manifest:

    [root@k8s6 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-demo
      namespace: default
      labels:
        app: myapp
        tier: frontend
      annotations:
        blog.com/created-by: "cluster admin"
    spec:
      containers:
      - name: myapp
        image: nginx:1.14-alpine
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443
      - name: busybox
        image: busybox:latest
        imagePullPolicy: IfNotPresent
        command:
        - "/bin/sh"
        - "-c"
        - "sleep 3600"
      nodeSelector:
        disktype: ssd

Create the pod (if pod-demo from the earlier steps still exists, remove it first with kubectl delete -f pod-demo.yaml, because create fails for an existing object):

    kubectl create -f pod-demo.yaml

    [root@k8s6 manifests]# kubectl describe pods pod-demo
    .............
    Annotations:        blog.com/created-by: cluster admin
    Status:             Running
    .............

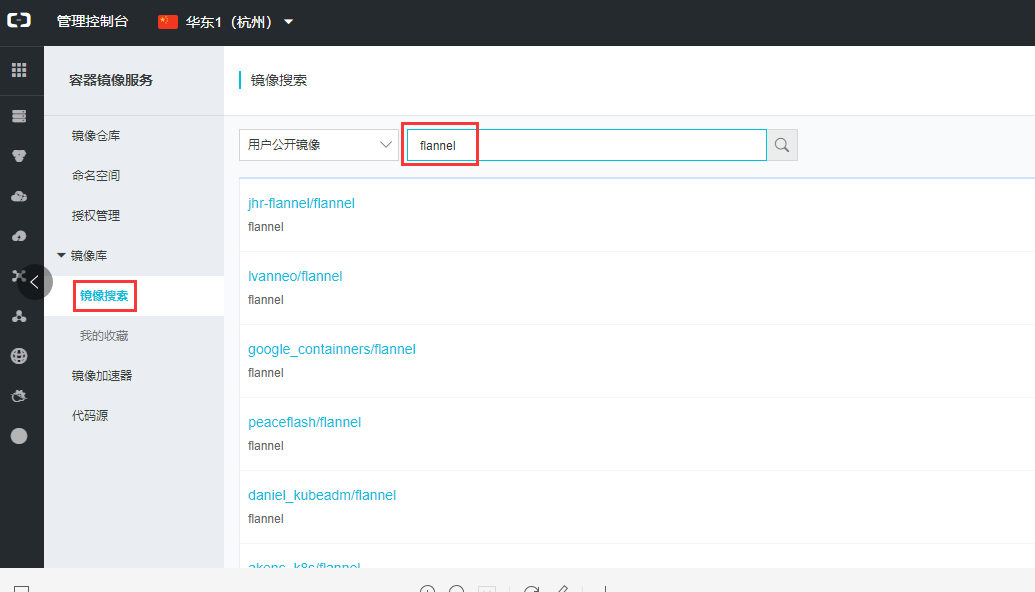

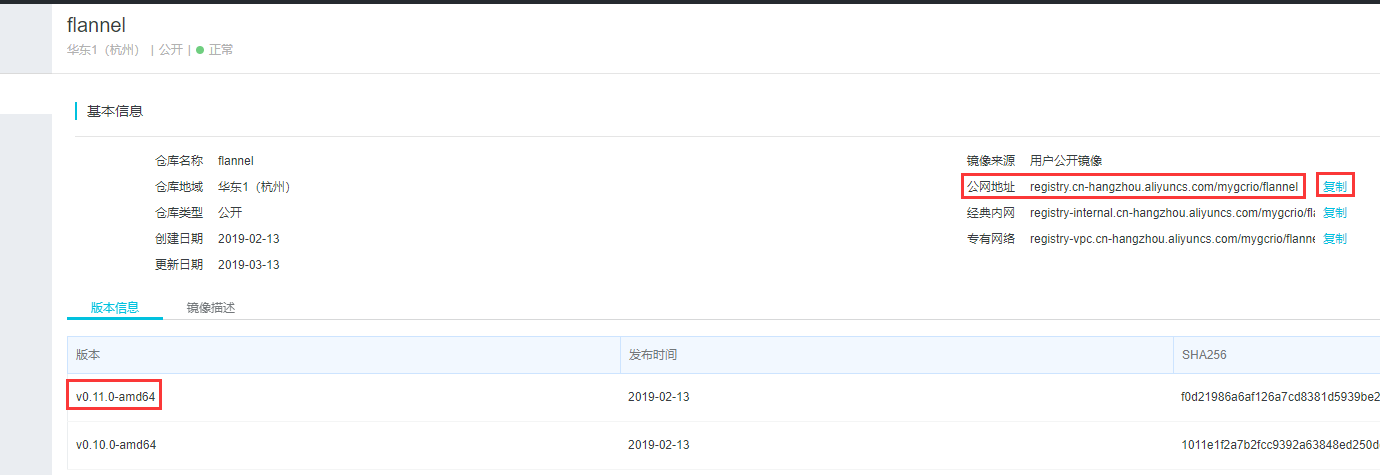

Aliyun Container Registry console: https://cr.console.aliyun.com/cn-hangzhou/images

flannel image mirrored to the Aliyun registry:

    registry.cn-hangzhou.aliyuncs.com/mygcrio/flannel:v0.11.0-amd64