1. HDFS Client Environment Setup

1.1 Set up Hadoop 2.8.5 on Windows

2. Create a Maven Project

Add the following dependencies and build configuration to `pom.xml`:

```xml
<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>RELEASE</version>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-core</artifactId>
        <version>2.8.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.8.5</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.8.5</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.8.5</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <!-- Compile for Java 1.8 -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
                <encoding>UTF-8</encoding>
            </configuration>
        </plugin>
    </plugins>
</build>
```

2.1 Configure src/main/resources/log4j.properties

```properties
## Output to the console
log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

## Output to a file (to enable it, uncomment the lines below and change the first line to: log4j.rootLogger=INFO, stdout, logfile)
#log4j.appender.logfile=org.apache.log4j.FileAppender
#log4j.appender.logfile.File=target/spring.log
#log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
#log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
```
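To make the `ConversionPattern` concrete, the sketch below imitates the line layout of `%d %p [%c] - %m%n` using only JDK classes. `%d` is the timestamp; with no format specifier, log4j 1.x uses its ISO8601 form, for example `2019-05-26 10:15:30,123`. `%p` is the level, `%c` is the logger name, `%m` is the message, and `%n` is the line separator. This is not log4j itself. The class `LogPatternSketch` and its `format` method are hypothetical names used only for illustration.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper that imitates the layout of "%d %p [%c] - %m%n".
// It does NOT use log4j; it only illustrates what each conversion character produces.
public class LogPatternSketch {

    // %d without a format specifier uses log4j's ISO8601 form, e.g. 2019-05-26 10:15:30,123
    private static final DateTimeFormatter ISO8601 =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss,SSS");

    // %d = timestamp, %p = level, %c = logger name, %m = message, %n = line separator
    public static String format(LocalDateTime time, String level, String logger, String message) {
        return ISO8601.format(time) + " " + level + " [" + logger + "] - " + message
                + System.lineSeparator();
    }

    public static void main(String[] args) {
        LocalDateTime time = LocalDateTime.of(2019, 5, 26, 10, 15, 30, 123_000_000);
        System.out.print(format(time, "INFO", "HDFSClient", "Operation finished=========="));
    }
}
```

Running `main` prints `2019-05-26 10:15:30,123 INFO [HDFSClient] - Operation finished==========`.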

2.2 Create the HDFSClient class

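```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSClient {

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        // NameNode address: replace <server-ip> with the IP address of your server
        conf.set("fs.defaultFS", "hdfs://<server-ip>:9000");

        // 1. Get the HDFS client object; try-with-resources closes it even if an error occurs
        try (FileSystem fs = FileSystem.get(conf)) {
            // 2. Create the directory on HDFS (missing parent directories are created too)
            fs.mkdirs(new Path("/0526/noodles"));

            // 3. Program finished
            System.out.println("Operation finished==========");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```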

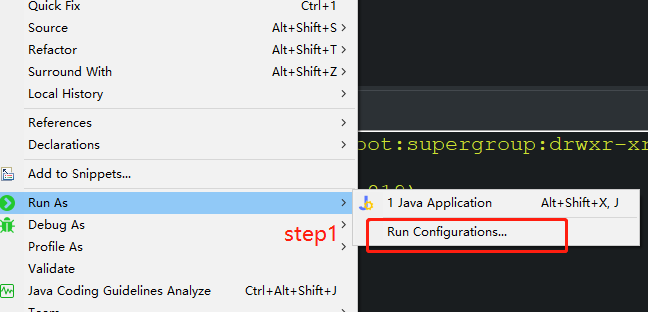

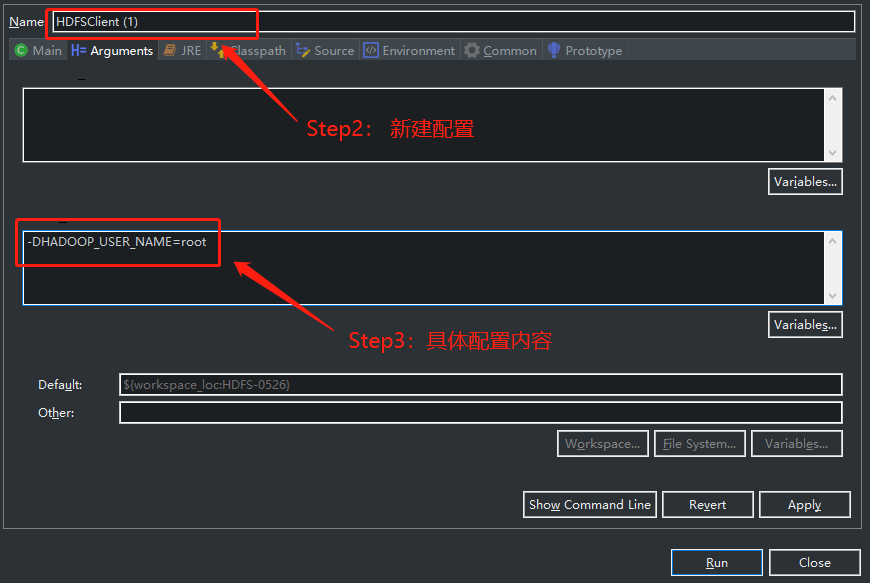
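The `fs.defaultFS` value set in the class above is an ordinary URI of the form `hdfs://host:port`. The helper below is a minimal JDK-only sketch that checks such a value with `java.net.URI` before the value is passed to the Hadoop client. `DefaultFsCheck` is a hypothetical name, and `192.168.1.100` is a made-up address. Hadoop itself falls back to a default NameNode port when none is given. The sketch insists on an explicit port only because this tutorial's setup uses 9000.

```java
import java.net.URI;

// Hypothetical JDK-only helper: sanity-check an fs.defaultFS value such as
// "hdfs://192.168.1.100:9000" (a made-up address) before giving it to Hadoop.
public class DefaultFsCheck {

    // Returns the parsed URI, or throws IllegalArgumentException if it is not hdfs://host:port
    public static URI parseNameNodeUri(String defaultFs) {
        URI uri = URI.create(defaultFs);          // throws IllegalArgumentException on bad syntax
        if (!"hdfs".equals(uri.getScheme())) {
            throw new IllegalArgumentException("scheme must be hdfs: " + defaultFs);
        }
        // Hadoop would fall back to a default port; this sketch requires one (9000 in this tutorial)
        if (uri.getHost() == null || uri.getPort() == -1) {
            throw new IllegalArgumentException("expected hdfs://host:port: " + defaultFs);
        }
        return uri;
    }

    public static void main(String[] args) {
        URI nameNode = parseNameNodeUri("hdfs://192.168.1.100:9000");
        System.out.println("NameNode host = " + nameNode.getHost() + ", RPC port = " + nameNode.getPort());
    }
}
```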

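2.2.1 Troubleshooting

- “Permission denied”: when the class above runs on Windows, the HDFS client identifies itself with the local Windows user name. That user has no write permission on the target location in HDFS, for example a directory owned by `root` with permissions `drwxr-xr-x`. As a result, `mkdirs` fails with `org.apache.hadoop.security.AccessControlException: Permission denied`. One fix is to tell the client which HDFS user to act as, which is what the improved version in 2.3 does.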
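The check behind this error follows the POSIX-style model described in the HDFS Permissions Guide. If the user is the owner, the owner bits apply. Otherwise, if the user belongs to the file's group, the group bits apply. In all other cases, the "other" bits apply. The superuser (the user that started the NameNode) always passes. Roughly speaking, creating a directory requires write permission on the existing parent directory. The JDK-only sketch below models just that write check. It is not Hadoop code, and the class, users and groups are hypothetical.

```java
import java.util.Collections;
import java.util.Set;

// Simplified, hypothetical model of HDFS's POSIX-style permission check (write access only).
// Not Hadoop code: it only illustrates why a non-owner gets "Permission denied".
public class HdfsPermissionSketch {

    // mode is octal, e.g. 0755 = rwxr-xr-x; within each 3-bit class, value 2 is the "w" bit
    public static boolean canWrite(String user, Set<String> userGroups,
                                   String owner, String group, int mode, String superuser) {
        if (user.equals(superuser)) {
            return true;                       // the superuser is never denied
        }
        int bits;
        if (user.equals(owner)) {
            bits = (mode >> 6) & 7;            // owner class
        } else if (userGroups.contains(group)) {
            bits = (mode >> 3) & 7;            // group class
        } else {
            bits = mode & 7;                   // "other" class
        }
        return (bits & 2) != 0;                // is the write bit set?
    }

    public static void main(String[] args) {
        // Hypothetical directory owned by root:supergroup with mode 0755 (drwxr-xr-x)
        System.out.println(canWrite("Administrator", Collections.singleton("Administrators"),
                "root", "supergroup", 0755, "root"));   // false -> "Permission denied"
        System.out.println(canWrite("root", Collections.<String>emptySet(),
                "root", "supergroup", 0755, "root"));   // true
    }
}
```

A Windows user such as `Administrator` falls into the "other" class. For mode `0755`, those bits are `r-x`, so the write check fails. The same call made as `root` succeeds.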

2.3 HDFSClient class (improved version)

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSClient {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();

        // 1. Get the HDFS client object: NameNode URI (replace <server-ip>),
        //    configuration, and the HDFS user to act as ("root")
        FileSystem fs = FileSystem.get(new URI("hdfs://<server-ip>:9000"), conf, "root");

        // 2. Create the directory on HDFS
        fs.mkdirs(new Path("/0526/test002"));

        // 3. Close resources
        fs.close();

        // 4. Program finished
        System.out.println("Operation finished==========");
    }
}
```
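The improved version avoids the permission error by passing the user name `root` as the third argument of `FileSystem.get`. The JDK-only sketch below is a simplified model of how a Hadoop 2.x client chooses its user name under simple (non-Kerberos) authentication. An explicitly supplied user wins for that `FileSystem` instance. Otherwise the client checks the `HADOOP_USER_NAME` environment variable, then a `HADOOP_USER_NAME` Java system property, and finally falls back to the local login name. This is an approximation of the behaviour of `UserGroupInformation`, not its code. `HdfsUserResolutionSketch` and `resolveUser` are hypothetical names.

```java
import java.util.Map;
import java.util.Properties;

// Hypothetical, simplified model of Hadoop 2.x user selection under simple authentication.
// Not Hadoop code: it only illustrates the order in which the sources are consulted.
public class HdfsUserResolutionSketch {

    public static String resolveUser(String explicitUser, Map<String, String> env,
                                     Properties sysProps, String osUser) {
        if (explicitUser != null) {
            return explicitUser;                    // e.g. FileSystem.get(uri, conf, "root")
        }
        String fromEnv = env.get("HADOOP_USER_NAME");
        if (fromEnv != null) {
            return fromEnv;                         // environment variable
        }
        String fromProp = sysProps.getProperty("HADOOP_USER_NAME");
        if (fromProp != null) {
            return fromProp;                        // e.g. java -DHADOOP_USER_NAME=root ...
        }
        return osUser;                              // local login name, e.g. the Windows user
    }

    public static void main(String[] args) {
        System.out.println(resolveUser(null, System.getenv(), System.getProperties(),
                System.getProperty("user.name")));
    }
}
```

Under this model, you could set the environment variable `HADOOP_USER_NAME=root`, or start the JVM with `-DHADOOP_USER_NAME=root`. Either change would let the first version of `HDFSClient` act as `root` without modifying its code.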
