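The most useful property of a CPU (central processing unit) is its computing performance. From the earlier material we know that the Linux kernel expresses a CPU's computing power in a concrete form: CPU capacity.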

1. The initial CPU capacity is established while the CPU topology and the sched_domain/sched_group hierarchy are built.

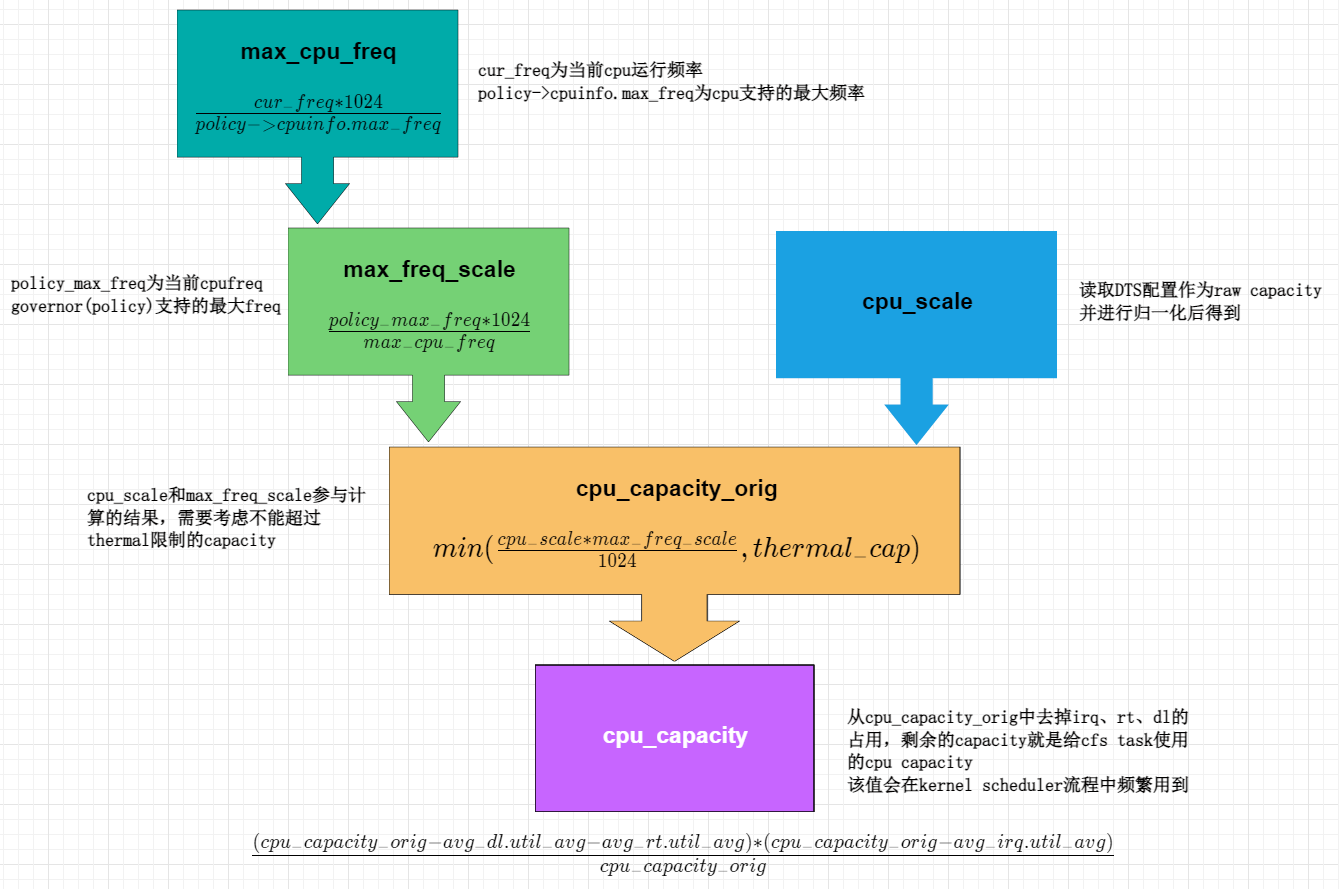

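2. A CPU's capacity is closely tied to the frequency it runs at, so CPU frequency scaling also changes the CPU capacity.

3. A CPU's capacity determines how much task load it can process in time, so CFS task placement ultimately consults the CPU's remaining capacity (what is left after the share used by irq, dl, and rt tasks is removed).

The rest of this post walks through the code behind each of these steps (based on caf-kernel msm-5.4).

During boot, when the CPU topology is built, the kernel parses the parameters the CPU vendor configured in the DTS and uses them as the CPU's capacity: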

1. First, the DTS value is read as the raw capacity:

```dts
cpu0: cpu@000 {
	device_type = "cpu";
	...
	capacity-dmips-mhz = <1024>;
	...
};

cpu7: cpu@103 {
	device_type = "cpu";
	...
	capacity-dmips-mhz = <801>;
	...
};
```

```c
bool __init topology_parse_cpu_capacity(struct device_node *cpu_node, int cpu)
{
	...
	/* Parse the core's capacity; this property is used on kernel 4.19 and later */
	ret = of_property_read_u32(cpu_node, "capacity-dmips-mhz",
				   &cpu_capacity);
	...
	/*
	 * Track the largest raw capacity as the scale. No CPU can exceed it,
	 * so after normalization the big core is 1024 and little cores are < 1024.
	 */
	capacity_scale = max(cpu_capacity, capacity_scale);
	/*
	 * The raw capacity is the dmips value from the DTS. Once normalized it
	 * becomes cpu_scale, the basis for the scheduler's cpu_capacity_orig.
	 */
	raw_capacity[cpu] = cpu_capacity;
	pr_debug("cpu_capacity: %pOF cpu_capacity=%u (raw)\n",
		 cpu_node, raw_capacity[cpu]);
	...
	return !ret;
}
```

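2. Next, the raw capacity is normalized: the CPU with the largest raw capacity maps to 1024, and every other CPU is scaled proportionally to a fraction of 1024 (big core 1024, little cores below 1024). The normalized value is then stored in the per-CPU variable `cpu_scale`.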

```c
void topology_normalize_cpu_scale(void)
{
	u64 capacity;
	int cpu;

	if (!raw_capacity)
		return;

	pr_debug("cpu_capacity: capacity_scale=%u\n", capacity_scale);
	for_each_possible_cpu(cpu) {
		pr_debug("cpu_capacity: cpu=%d raw_capacity=%u\n",
			 cpu, raw_capacity[cpu]);
		/* Normalize so that the largest raw capacity equals 100% = 1024 */
		capacity = (raw_capacity[cpu] << SCHED_CAPACITY_SHIFT)
			/ capacity_scale;
		/* Store the normalized value in the per-CPU variable cpu_scale */
		topology_set_cpu_scale(cpu, capacity);
		pr_debug("cpu_capacity: CPU%d cpu_capacity=%lu\n",
			 cpu, topology_get_cpu_scale(cpu));
	}
}
```
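The normalization arithmetic can be tried outside the kernel. The following is a minimal user-space sketch, not the kernel implementation: `max_raw_capacity()`, `normalize_capacity()`, and `CAPACITY_SHIFT` are hypothetical names chosen for illustration, and starting the scale at 1 is an assumption made here only to avoid dividing by zero.

```c
#include <stdint.h>

/* Assumed to mirror SCHED_CAPACITY_SHIFT: 1 << 10 == 1024. */
#define CAPACITY_SHIFT 10

/* Largest raw value among all CPUs; plays the role of capacity_scale. */
uint32_t max_raw_capacity(const uint32_t *raw, int ncpus)
{
	uint32_t scale = 1;	/* assumption: never return 0, so it is safe as a divisor */

	for (int i = 0; i < ncpus; i++)
		if (raw[i] > scale)
			scale = raw[i];
	return scale;
}

/* Scale one raw value so that the largest raw value maps to 1024. */
uint64_t normalize_capacity(uint32_t raw, uint32_t scale)
{
	return ((uint64_t)raw << CAPACITY_SHIFT) / scale;
}
```

With the DTS values shown earlier (1024 and 801), the scale is 1024 and `normalize_capacity(801, 1024)` stays at 801. A hypothetical platform whose raw values were 400 and 800 would normalize to 512 and 1024.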

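3. `update_cpu_capacity()` is the main function that refreshes a CPU's remaining capacity. Walking through each part of it shows how the individual capacity values are derived.

- `arch_scale_cpu_capacity()` returns `cpu_scale`.

- `arch_scale_max_freq_capacity()` expands to `topology_get_max_freq_scale()`, which simply returns the per-CPU variable `max_freq_scale`. How that value is computed can be seen in `arch_set_max_freq_scale()`: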

```c
/* Replace task scheduler's default max-frequency-invariant accounting */
#define arch_scale_max_freq_capacity topology_get_max_freq_scale

static inline
unsigned long topology_get_max_freq_scale(struct sched_domain *sd, int cpu)
{
	return per_cpu(max_freq_scale, cpu);
}

void arch_set_max_freq_scale(struct cpumask *cpus,
			     unsigned long policy_max_freq)
{
	unsigned long scale, max_freq;
	int cpu = cpumask_first(cpus);

	if (cpu > nr_cpu_ids)
		return;

	max_freq = per_cpu(max_cpu_freq, cpu);
	if (!max_freq)
		return;

	scale = (policy_max_freq << SCHED_CAPACITY_SHIFT) / max_freq;

	trace_android_vh_arch_set_freq_scale(cpus, policy_max_freq, max_freq, &scale);

	for_each_cpu(cpu, cpus)
		per_cpu(max_freq_scale, cpu) = scale;
}
```

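- As `arch_set_max_freq_scale()` shows, the function first fetches `max_cpu_freq` and then computes:

$$\mathrm{max\_freq\_scale} = \frac{\mathrm{policy\_max\_freq} \times 1024}{\mathrm{max\_cpu\_freq}} \tag{1}$$

where `policy_max_freq` is the maximum frequency currently allowed by the cpufreq policy (the value obtained after PM QoS aggregates all userspace requests).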
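As a worked example with hypothetical frequencies (not taken from any real platform): if the hardware maximum of a cluster were 2,400,000 kHz and the policy limit were lowered to 1,800,000 kHz, the formula would give

$$\mathrm{max\_freq\_scale} = \frac{1800000 \times 1024}{2400000} = 768$$

that is, 75% of the full scale of 1024.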


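Where does `max_cpu_freq` come from? It is recorded whenever the CPU frequency is adjusted: `arch_set_freq_scale()` stores the `max_freq` argument (`policy->cpuinfo.max_freq`) in it while updating `freq_scale`: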

```c
{
	...
	arch_set_freq_scale(policy->related_cpus, new_freq,
			    policy->cpuinfo.max_freq);
	...
}

void arch_set_freq_scale(struct cpumask *cpus, unsigned long cur_freq,
			 unsigned long max_freq)
{
	unsigned long scale;
	int i;

	scale = (cur_freq << SCHED_CAPACITY_SHIFT) / max_freq;

	trace_android_vh_arch_set_freq_scale(cpus, cur_freq, max_freq, &scale);

	for_each_cpu(i, cpus){
		per_cpu(freq_scale, i) = scale;
		per_cpu(max_cpu_freq, i) = max_freq;
	}
}
```

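The value computed here is `freq_scale`; `max_cpu_freq` itself is simply set to `policy->cpuinfo.max_freq`:

$$\mathrm{freq\_scale} = \frac{\mathrm{cur\_freq} \times 1024}{\mathrm{cpuinfo.max\_freq}} \tag{2}$$

where `policy->cpuinfo.max_freq` is the maximum frequency the CPU supports and `cur_freq` is the frequency the CPU is currently running at.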
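The interplay between the two setters can be modeled for a single CPU. This is a minimal user-space sketch under stated assumptions: `struct cpu_freq_state`, `set_freq_scale()`, and `set_max_freq_scale()` are hypothetical stand-ins for the per-CPU variables and the two kernel functions quoted above, and the tracepoint hook is left out.

```c
/* Assumed to mirror SCHED_CAPACITY_SHIFT: 1 << 10 == 1024. */
#define CAPACITY_SHIFT 10

/* Hypothetical stand-in for the three per-CPU variables. */
struct cpu_freq_state {
	unsigned long freq_scale;	/* current freq relative to hardware max */
	unsigned long max_cpu_freq;	/* hardware max, recorded on a freq change */
	unsigned long max_freq_scale;	/* policy max relative to hardware max */
};

/* Models arch_set_freq_scale() for one CPU. */
void set_freq_scale(struct cpu_freq_state *s, unsigned long cur_freq,
		    unsigned long max_freq)
{
	s->freq_scale = (cur_freq << CAPACITY_SHIFT) / max_freq;
	s->max_cpu_freq = max_freq;
}

/* Models arch_set_max_freq_scale() for one CPU. */
void set_max_freq_scale(struct cpu_freq_state *s,
			unsigned long policy_max_freq)
{
	if (!s->max_cpu_freq)
		return;	/* no frequency change recorded yet: nothing to divide by */

	s->max_freq_scale =
		(policy_max_freq << CAPACITY_SHIFT) / s->max_cpu_freq;
}
```

The sketch makes one ordering detail visible: until `set_freq_scale()` has run at least once, `max_cpu_freq` is zero and `set_max_freq_scale()` returns without touching `max_freq_scale`, matching the early return in the quoted kernel code.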


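- The fetched `max_freq_scale` is then applied to `cpu_scale`, taking the thermal limit into account: the result may not exceed the maximum capacity allowed by the thermal limit. The result is stored as `cpu_capacity_orig`:

$$\mathrm{cpu\_capacity\_orig} = \min\left(\frac{\mathrm{cpu\_scale} \times \mathrm{max\_freq\_scale}}{1024},\ \mathrm{thermal\_cap}\right)$$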

```c
static void update_cpu_capacity(struct sched_domain *sd, int cpu)
{
	/* Read the per-CPU variable cpu_scale */
	unsigned long capacity = arch_scale_cpu_capacity(cpu);
	struct sched_group *sdg = sd->groups;

	/* Apply the per-CPU max_freq_scale: cpu_scale * max_freq_scale / 1024 */
	capacity *= arch_scale_max_freq_capacity(sd, cpu);
	capacity >>= SCHED_CAPACITY_SHIFT;

	/* The result may not exceed the capacity allowed by the thermal limit */
	capacity = min(capacity, thermal_cap(cpu));
	/* Store the result as this CPU rq's cpu_capacity_orig */
	cpu_rq(cpu)->cpu_capacity_orig = capacity;

	/* Compute the capacity left over for CFS */
	capacity = scale_rt_capacity(cpu, capacity);

	/* If nothing is left for CFS, force the value to 1 */
	if (!capacity)
		capacity = 1;

	/* Update the rq's cpu_capacity and the sgc capacity, min and max */
	cpu_rq(cpu)->cpu_capacity = capacity;
	sdg->sgc->capacity = capacity;
	sdg->sgc->min_capacity = capacity;
	sdg->sgc->max_capacity = capacity;
}
```
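The first half of this function is plain integer arithmetic, which the following user-space sketch reproduces. It is an illustration only: `capacity_orig()` and `CAPACITY_SHIFT` are hypothetical names, and the thermal cap is passed in as a plain number rather than read from `thermal_cap(cpu)`.

```c
/* Assumed to mirror SCHED_CAPACITY_SHIFT: 1 << 10 == 1024. */
#define CAPACITY_SHIFT 10

/* cpu_capacity_orig = min(cpu_scale * max_freq_scale / 1024, thermal_cap) */
unsigned long capacity_orig(unsigned long cpu_scale,
			    unsigned long max_freq_scale,
			    unsigned long thermal_cap)
{
	unsigned long capacity = (cpu_scale * max_freq_scale) >> CAPACITY_SHIFT;

	return capacity < thermal_cap ? capacity : thermal_cap;
}
```

For example, a little core with `cpu_scale` 801 whose policy limit gives `max_freq_scale` 768 ends up at 600 when the thermal cap is not binding, while a big core at full scale with a hypothetical thermal cap of 900 is clamped to 900.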

- Next, `scale_rt_capacity()` removes from `cpu_capacity_orig` the `util_avg` consumed by irq, the dl class, and the rt class. What remains is the CPU capacity left for CFS tasks:

$$\mathrm{cpu\_capacity} = \frac{(\mathrm{cpu\_capacity\_orig} - \mathrm{avg\_rt.util\_avg} - \mathrm{avg\_dl.util\_avg}) \times (\mathrm{cpu\_capacity\_orig} - \mathrm{avg\_irq.util\_avg})}{\mathrm{cpu\_capacity\_orig}}$$

```c
static unsigned long scale_rt_capacity(int cpu, unsigned long max)
{
	struct rq *rq = cpu_rq(cpu);
	unsigned long used, free;
	unsigned long irq;

	/* util_avg consumed by irq on this CPU's rq */
	irq = cpu_util_irq(rq);

	/* irq alone already uses up the whole capacity: report the minimum */
	if (unlikely(irq >= max))
		return 1;

	/* util_avg of the rt rq, plus util_avg of the dl rq */
	used = READ_ONCE(rq->avg_rt.util_avg);
	used += READ_ONCE(rq->avg_dl.util_avg);

	/* rt and dl together already use up the whole capacity */
	if (unlikely(used >= max))
		return 1;

	/* free = max capacity - rt util_avg - dl util_avg */
	free = max - used;

	/* (max - rt util_avg - dl util_avg) * (max - irq) / max */
	return scale_irq_capacity(free, irq, max);
}
```

- The final (remaining) result is stored as `cpu_capacity` and as `sgc->capacity`.
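The remaining-capacity computation can be checked with concrete numbers. The sketch below is a user-space model, not the kernel code: `cfs_capacity()` is a hypothetical name, the utilization values are passed in directly instead of being read from the runqueue, and the final multiply/divide is inlined on the assumption that `scale_irq_capacity()` computes `free * (max - irq) / max`, as the formula above states.

```c
/*
 * Capacity left for CFS once irq, rt and dl utilization are removed.
 * max is cpu_capacity_orig; irq, rt and dl are util_avg values.
 */
unsigned long cfs_capacity(unsigned long max, unsigned long irq,
			   unsigned long rt, unsigned long dl)
{
	unsigned long used, free;

	if (irq >= max)		/* irq alone saturates the CPU */
		return 1;

	used = rt + dl;
	if (used >= max)	/* rt and dl together saturate the CPU */
		return 1;

	free = max - used;

	/* Shrink what is left by the fraction of time not spent in irq. */
	return free * (max - irq) / max;
}
```

With hypothetical inputs `max = 1024`, `irq = 64`, `rt = 100`, and `dl = 28`, the free part is 896 and the result is 896 × 960 / 1024 = 840. For very small `free` and large `irq` the integer division can yield 0, which is why `update_cpu_capacity()` forces a minimum of 1 afterwards.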

Summary of the overall dependency chain:

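- `freq_scale`: computed and normalized from the CPU's current frequency and the maximum frequency the CPU supports. Because the maximum supported frequency is a fixed value, `freq_scale` follows changes in the current frequency. `max_cpu_freq` is that hardware maximum itself (`policy->cpuinfo.max_freq`), recorded each time the frequency is changed.

- `max_freq_scale`: computed and normalized from `max_cpu_freq` and the maximum frequency allowed by the cpufreq policy. Since `max_cpu_freq` is fixed, this value changes only when the policy's maximum frequency limit changes.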

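- `cpu_scale`: determined by the CPU capacity value configured in the DTS. It reflects the CPU's inherent computing power and is normally chosen and configured by the CPU vendor, so it is generally fixed.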

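- `cpu_capacity_orig`: computed from `max_freq_scale` and `cpu_scale`, then capped by the thermal limit. It represents the capacity the CPU can deliver under the current policy frequency limit and thermal constraints.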

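- `cpu_capacity`: what remains of `cpu_capacity_orig` after the share used by irq, rt, and dl is removed. Handling irqs consumes CPU time (and if irqs fire too often, system performance suffers), and tasks in the rt and dl classes have higher priority than CFS tasks. Removing the irq, rt, and dl share from the CPU's capacity in its current state therefore leaves the capacity available to CFS tasks.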