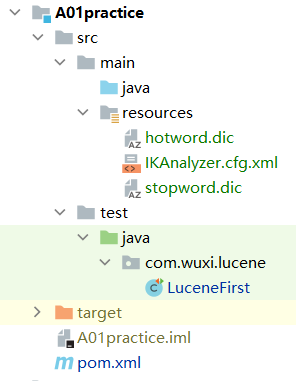

一、项目结构

二、pom.xml

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> <modelVersion>4.0.0</modelVersion> <groupId>org.example</groupId> <artifactId>A01practice</artifactId> <version>1.0-SNAPSHOT</version> <build> <plugins> <plugin> <groupId>org.apache.maven.plugins</groupId> <artifactId>maven-compiler-plugin</artifactId> <configuration> <source>1.9</source> <target>1.9</target> </configuration> </plugin> </plugins> </build> <dependencies> <!-- https://mvnrepository.com/artifact/org.apache.lucene/lucene-core --> <dependency> <groupId>org.apache.lucene</groupId> <artifactId>lucene-core</artifactId> <version>8.1.0</version> </dependency> <!-- https://mvnrepository.com/artifact/org.apache.lucene/lucene-analyzers-common --> <dependency> <groupId>org.apache.lucene</groupId> <artifactId>lucene-analyzers-common</artifactId> <version>8.1.0</version> </dependency> <!-- https://mvnrepository.com/artifact/org.apache.lucene/lucene-queryparser --> <dependency> <groupId>org.apache.lucene</groupId> <artifactId>lucene-queryparser</artifactId> <version>8.1.0</version> </dependency> <!-- https://mvnrepository.com/artifact/commons-io/commons-io --> <dependency> <groupId>commons-io</groupId> <artifactId>commons-io</artifactId> <version>2.8.0</version> </dependency> <!--此包是第三方jar包,安装在本地仓库--> <dependency> <groupId>org.wltea.ik-analyzer</groupId> <artifactId>ik-analyzer</artifactId> <version>1.0-SNAPSHOT</version> </dependency> <dependency> <groupId>junit</groupId> <artifactId>junit</artifactId> <version>4.12</version> <scope>test</scope> </dependency> </dependencies> </project>

三、代码

package com.wuxi.lucene; import org.apache.commons.io.FileUtils; import org.apache.lucene.analysis.TokenStream; import org.apache.lucene.analysis.tokenattributes.CharTermAttribute; import org.apache.lucene.document.*; import org.apache.lucene.index.DirectoryReader; import org.apache.lucene.index.IndexWriter; import org.apache.lucene.index.IndexWriterConfig; import org.apache.lucene.index.Term; import org.apache.lucene.queryparser.classic.QueryParser; import org.apache.lucene.search.*; import org.apache.lucene.store.Directory; import org.apache.lucene.store.FSDirectory; import org.junit.Test; import org.wltea.analyzer.lucene.IKAnalyzer; import java.io.File; public class LuceneFirst { /** * 创建索引 * * @throws Exception */ @Test public void createIndex() throws Exception { //1、创建一个Directory对象,指定索引库保存的位置。 //把索引库保存在内存中 //Directory directory = new RAMDirectory(); //把索引库保存在磁盘 Directory directory = FSDirectory.open(new File("F:\java\lucene\resource\index").toPath()); //2、基于Directory对象创建一个IndexWriter对象 IndexWriterConfig config = new IndexWriterConfig(new IKAnalyzer()); IndexWriter indexWriter = new IndexWriter(directory, config); //3、读取磁盘上的文件,对应每个文件创建一个文档对象。 File dir = new File("F:\java\lucene\resource\file"); File[] files = dir.listFiles(); for (File f : files) { //取文件名 String fileName = f.getName(); //文件的路径 String filePath = f.getPath(); //文件的内容 String fileContent = FileUtils.readFileToString(f, "utf-8"); //文件的大小 long fileSize = FileUtils.sizeOf(f); //创建Field //参数1:域的名称,参数2:域的内容,参数3:是否存储 Field fieldName = new TextField("name", fileName, Field.Store.YES);//存储且分词 Field fieldPath = new StoredField("path", filePath); Field fieldContent = new TextField("content", fileContent, Field.Store.NO);//分词但不存储 Field fieldSizeValue = new LongPoint("size", fileSize);//可做范围查询 Field fieldSizeStore = new StoredField("size", fileSize);//存储但不分词 //创建文档对象 Document document = new Document(); //向文档对象中添加域 document.add(fieldName); document.add(fieldPath); document.add(fieldContent); document.add(fieldSizeValue); document.add(fieldSizeStore); //5、把文档对象写入索引库 indexWriter.addDocument(document); } //6、关闭IndexWriter对象 indexWriter.close(); } /** * 查询 * * @throws Exception */ @Test public void searchIndex() throws Exception { //1、创建一个Directory对象,指定索引库位置 FSDirectory directory = FSDirectory.open(new File("F:\java\lucene\resource\index").toPath()); //2、创建一个IndexReader对象 DirectoryReader indexReader = DirectoryReader.open(directory); //3、创建一个IndexSearcher对象,构造方法中的参数indexReader对象。 IndexSearcher indexSearcher = new IndexSearcher(indexReader); //4、创建一个query对象,TermQuery Query query = new TermQuery(new Term("content", "中文")); //5、执行查询,得到一个TopDocs对象 //参数1:查询对象 参数2:查询结果返回的最大记录数 TopDocs topDocs = indexSearcher.search(query, 10); //6、取查询结果的总记录数 System.out.println("查询总记录数:" + topDocs.totalHits); //7、取文档列表 ScoreDoc[] scoreDocs = topDocs.scoreDocs; //8、打印文档中的内容 for (ScoreDoc doc : scoreDocs) { //取文档id int docId = doc.doc; //根据id取文档对象 Document document = indexSearcher.doc(docId); System.out.println(document.get("name")); System.out.println(document.get("path")); System.out.println(document.get("size")); //System.out.println(document.get("content")); System.out.println("----------------寂寞的分割线"); } //9、关闭IndexReader对象 indexReader.close(); } /** * 分词 * * @throws Exception */ @Test public void testTokenStream() throws Exception { //1、创建一个Analyzer对象,StandardAnalyzer对象 //Analyzer analyzer = new StandardAnalyzer(); IKAnalyzer analyzer = new IKAnalyzer(); //2、使用分析器对象的tokenStream方法获得一个TokenStream对象 TokenStream tokenStream = analyzer.tokenStream("", "Lucene是apache软件基金会4 jakarta项目组的一个子项目,

是一个开放源代码的全文检索引擎工具包,但它不是一个完整的全文检索引擎,而是一个全文检索引擎的架构,

提供了完整的查询引擎和索引引擎,部分文本分析引擎(英文与德文两种西方语言)。"); //3、向TokenStream对象中设置一个引用,相当于数一个指针 CharTermAttribute charTermAttribute = tokenStream.addAttribute(CharTermAttribute.class); //4、调用TokenStream对象的rest方法。如果不调用抛异常 tokenStream.reset(); //5、使用while循环遍历TokenStream对象 while (tokenStream.incrementToken()) { System.out.println(charTermAttribute.toString()); } //6、关闭TokenStream对象 tokenStream.close(); } /** * 删除所有 * * @throws Exception */ @Test public void deleteAllDocument() throws Exception { Directory directory = FSDirectory.open(new File("F:\java\lucene\resource\index").toPath()); IndexWriterConfig config = new IndexWriterConfig(new IKAnalyzer()); IndexWriter indexWriter = new IndexWriter(directory, config); indexWriter.deleteAll(); indexWriter.close(); } /** * 删除指定 * * @throws Exception */ @Test public void deleteDocumentByQuery() throws Exception { Directory directory = FSDirectory.open(new File("F:\java\lucene\resource\index").toPath()); IndexWriterConfig config = new IndexWriterConfig(new IKAnalyzer()); IndexWriter indexWriter = new IndexWriter(directory, config); indexWriter.deleteDocuments(new Term("name", "aaa")); indexWriter.close(); } /** * 更新 * * @throws Exception */ @Test public void updateDocument() throws Exception { Directory directory = FSDirectory.open(new File("F:\java\lucene\resource\index").toPath()); IndexWriterConfig config = new IndexWriterConfig(new IKAnalyzer()); IndexWriter indexWriter = new IndexWriter(directory, config); Document document = new Document(); document.add(new TextField("name", "你好世界", Field.Store.YES)); document.add(new TextField("name1", "你好世界1", Field.Store.YES)); document.add(new TextField("name2", "你好世界2", Field.Store.YES)); indexWriter.updateDocument(new Term("name", "aaa"), document); indexWriter.close(); } /** * 范围查询 * * @throws Exception */ @Test public void testRangeQuery() throws Exception { FSDirectory directory = FSDirectory.open(new File("F:\java\lucene\resource\index").toPath()); DirectoryReader indexReader = DirectoryReader.open(directory); IndexSearcher indexSearcher = new IndexSearcher(indexReader); Query query = LongPoint.newRangeQuery("size", 0l, 100l); TopDocs topDocs = indexSearcher.search(query, 10); System.out.println("查询总记录数:" + topDocs.totalHits); ScoreDoc[] scoreDocs = topDocs.scoreDocs; for (ScoreDoc doc : scoreDocs) { int docId = doc.doc; Document document = indexSearcher.doc(docId); System.out.println(document.get("name")); System.out.println(document.get("path")); System.out.println(document.get("size")); //System.out.println(document.get("content")); System.out.println("----------------寂寞的分割线"); } indexReader.close(); } /** * 分词查询 * * @throws Exception */ @Test public void testQueryParser() throws Exception { FSDirectory directory = FSDirectory.open(new File("F:\java\lucene\resource\index").toPath()); DirectoryReader indexReader = DirectoryReader.open(directory); IndexSearcher indexSearcher = new IndexSearcher(indexReader); QueryParser queryParser = new QueryParser("name", new IKAnalyzer()); Query query = queryParser.parse("这是一段中文"); TopDocs topDocs = indexSearcher.search(query, 10); System.out.println("查询总记录数:" + topDocs.totalHits); ScoreDoc[] scoreDocs = topDocs.scoreDocs; for (ScoreDoc doc : scoreDocs) { int docId = doc.doc; Document document = indexSearcher.doc(docId); System.out.println(document.get("name")); System.out.println(document.get("path")); System.out.println(document.get("size")); //System.out.println(document.get("content")); System.out.println("----------------寂寞的分割线"); } indexReader.close(); } }

四、IKAnalyzer.cfg.xml

<?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd"> <properties> <comment>IK Analyzer 扩展配置</comment> <!--用户可以在这里配置自己的扩展字典 --> <entry key="ext_dict">hotword.dic;</entry> <!--用户可以在这里配置自己的扩展停止词字典--> <entry key="ext_stopwords">stopword.dic;</entry> </properties>

五、可视化工具

luke-master安装目录运行cmd命令:mvn package