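Note: Back up all data in the cluster before upgrading. The backup procedure is not repeated here; it was covered in an earlier article, which you can refer to.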

Upgrading the master nodes

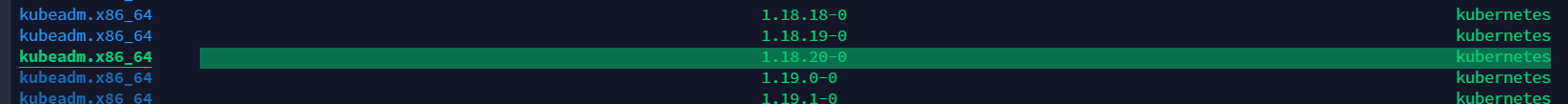

1. Check which kubeadm versions are available to upgrade to

yum list --showduplicates kubeadm --disableexcludes=kubernetes
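
The list printed by this command can be long. As a rough sketch, the newest patch release of one minor version could be picked out of it as shown here. The function name `latest_patch` is hypothetical, and `sort -V` assumes GNU coreutils (which CentOS ships).

```shell
# Hypothetical helper: read `yum list --showduplicates kubeadm` output on stdin
# and print the newest package version for one minor release (e.g. 1.18).
latest_patch() {
  # Column 2 of each package line holds the version, e.g. 1.18.20-0.
  # Keep only kubeadm lines whose version starts with "<minor>.".
  awk -v m="$1" '$1 ~ /^kubeadm\./ && index($2, m ".") == 1 { print $2 }' \
    | sort -V \
    | tail -n 1
}

# Usage on a real host (not run here):
#   yum list --showduplicates kubeadm --disableexcludes=kubernetes | latest_patch 1.18
```

The printed value can then be appended to the package name, for example `kubeadm-1.18.20-0`.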

2. Install the target kubeadm version on the master node

yum install -y kubeadm-1.18.20-0 --disableexcludes=kubernetes

3. Verify that the installed kubeadm version is correct

kubeadm version
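
If you would rather have a script stop when the wrong version is installed, a check along these lines may help. It is only a sketch: `check_kubeadm_version` and the `KUBEADM` override variable are hypothetical names, while `kubeadm version -o short` is the real flag that prints just the version string.

```shell
# Hypothetical helper: succeed only if the installed kubeadm reports the
# version we expect. KUBEADM can be overridden to point at another binary.
check_kubeadm_version() {
  expected="$1"
  # `-o short` prints only the version string, e.g. v1.18.20
  actual="$("${KUBEADM:-kubeadm}" version -o short)"
  if [ "$actual" = "$expected" ]; then
    echo "kubeadm is at $actual"
  else
    echo "kubeadm is at $actual, expected $expected" >&2
    return 1
  fi
}

# Usage on a real host (not run here):
#   check_kubeadm_version v1.18.20 || exit 1
```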

4. View the upgrade plan for the k8s cluster

[root@master1 ~]# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.18.8

[upgrade/versions] kubeadm version: v1.18.8

I1005 12:00:09.520300 33191 version.go:252] remote version is much newer: v1.22.2; falling back to: stable-1.18

[upgrade/versions] Latest stable version: v1.18.20

[upgrade/versions] Latest stable version: v1.18.20

[upgrade/versions] Latest version in the v1.18 series: v1.18.20

[upgrade/versions] Latest version in the v1.18 series: v1.18.20

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT   CURRENT       AVAILABLE
Kubelet     4 x v1.18.8   v1.18.20

Upgrade to the latest version in the v1.18 series:

COMPONENT            CURRENT   AVAILABLE
API Server           v1.18.8   v1.18.20
Controller Manager   v1.18.8   v1.18.20
Scheduler            v1.18.8   v1.18.20
Kube Proxy           v1.18.8   v1.18.20
CoreDNS              1.6.7     1.6.7
Etcd                 3.4.3     3.4.3-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.18.20

Note: Before you can perform this upgrade, you have to update kubeadm to v1.18.20.

_____________________________________________________________________
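
The plan output ends by suggesting a `kubeadm upgrade apply` command. As a small sketch, the version it names could be pulled out of the output for use in later steps; the function name `planned_version` is hypothetical.

```shell
# Hypothetical helper: read `kubeadm upgrade plan` output on stdin and print
# the version named in the suggested "kubeadm upgrade apply" command.
planned_version() {
  # awk ignores leading whitespace, so an indented command line still matches.
  awk '$1 == "kubeadm" && $2 == "upgrade" && $3 == "apply" { print $4; exit }'
}

# Usage on a real host (not run here):
#   kubeadm upgrade plan | planned_version
```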

5. Edit the k8s version in the kubeadm-config.yaml file on the master node so that it matches the version you are upgrading to (adjust the version to your own environment).

[root@master2 ~]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.200.3
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.200.16:16443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.18.20 # target version
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
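
Instead of editing the file by hand, the version line could be rewritten with `sed`. This is a minimal sketch: `set_kubernetes_version` is a hypothetical name, it assumes `kubernetesVersion:` sits at the start of a line as in the file above, and it drops any trailing comment on that line.

```shell
# Hypothetical helper: rewrite the kubernetesVersion line of a kubeadm config
# file, keeping a .bak copy of the original next to it.
set_kubernetes_version() {
  file="$1"
  version="$2"
  cp "$file" "$file.bak"
  # Replace only a top-level "kubernetesVersion:" key; other lines pass through.
  sed "s/^kubernetesVersion:.*/kubernetesVersion: $version/" "$file.bak" > "$file"
}

# Usage (not run here):
#   set_kubernetes_version kubeadm-config.yaml v1.18.20
```

Writing from the backup copy into the original avoids relying on `sed -i`, whose syntax differs between platforms.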

6. Pull the images for the target k8s version on the master node (adjust to your own environment)

[root@master1 ~]# kubeadm config images pull --config kubeadm-config.yaml

W1005 15:05:32.445771 24310 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[config/images] Pulled k8s.gcr.io/kube-apiserver:v1.18.20

[config/images] Pulled k8s.gcr.io/kube-controller-manager:v1.18.20

[config/images] Pulled k8s.gcr.io/kube-scheduler:v1.18.20

[config/images] Pulled k8s.gcr.io/kube-proxy:v1.18.20

[config/images] Pulled k8s.gcr.io/pause:3.2

[config/images] Pulled k8s.gcr.io/etcd:3.4.3-0

[config/images] Pulled k8s.gcr.io/coredns:1.6.7

7. Upgrade the master node

[root@master1 ~]# kubeadm upgrade apply v1.18.20

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade/version] You have chosen to change the cluster version to "v1.18.20"

[upgrade/versions] Cluster version: v1.18.8

[upgrade/versions] kubeadm version: v1.18.20

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-etcd

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Prepulled image for component kube-apiserver.

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.18.20"...

Static pod: kube-apiserver-master1 hash: 2aba3e103c7f492ddb5f61f62dc4e838

Static pod: kube-controller-manager-master1 hash: babb08b5983f89790db4b66ac73698b3

Static pod: kube-scheduler-master1 hash: 44021c5fcd7502cfd991ad3047a6b4e1

[upgrade/etcd] Upgrading to TLS for etcd

[upgrade/etcd] Non fatal issue encountered during upgrade: the desired etcd version for this Kubernetes version "v1.18.20" is "3.4.3-0", but the current etcd version is "3.4.3". Won't downgrade etcd, instead just continue

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests977319496"

W1005 15:34:09.966822 42905 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[upgrade/staticpods] Preparing for "kube-apiserver" upgrade

[upgrade/staticpods] Renewing apiserver certificate

[upgrade/staticpods] Renewing apiserver-kubelet-client certificate

[upgrade/staticpods] Renewing front-proxy-client certificate

[upgrade/staticpods] Renewing apiserver-etcd-client certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-10-05-15-34-03/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-master1 hash: 2aba3e103c7f492ddb5f61f62dc4e838

Static pod: kube-apiserver-master1 hash: 551da7ad1adbaf89fc6b91be0e7ebd7a

[apiclient] Found 3 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Renewing controller-manager.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-10-05-15-34-03/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-master1 hash: babb08b5983f89790db4b66ac73698b3

Static pod: kube-controller-manager-master1 hash: eac9c8b79a64b92e71634b7f8458fe57

[apiclient] Found 3 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Renewing scheduler.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-10-05-15-34-03/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-master1 hash: 44021c5fcd7502cfd991ad3047a6b4e1

Static pod: kube-scheduler-master1 hash: eda45837f8d54b8750b297583fe7441a

[apiclient] Found 3 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.18.20". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

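Note

Only the first master node is upgraded with

kubeadm upgrade apply

All other nodes are upgraded with

kubeadm upgrade node

On nodes other than the first, there is no need to run kubeadm upgrade plan or to update the CNI plugin.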

8. Drain the node: evict its Pods and mark it unschedulable

[root@master1 ~]# kubectl drain master1 --ignore-daemonsets

node/master1 cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-xznr2, kube-system/kube-proxy-dkd4r

evicting pod kube-system/coredns-66bff467f8-l7x7x

evicting pod kube-system/coredns-66bff467f8-k4cj5

pod/coredns-66bff467f8-l7x7x evicted

pod/coredns-66bff467f8-k4cj5 evicted

node/master1 evicted
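
Since every node goes through the same drain and uncordon pair, the two commands can be wrapped in small functions. This is a sketch only: the function names and the `KUBECTL` override variable are hypothetical, and the flags are exactly the ones used in this article.

```shell
# Allow the kubectl binary to be overridden, e.g. KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Cordon the node and evict its Pods, leaving DaemonSet-managed Pods alone.
drain_node() {
  "$KUBECTL" drain "$1" --ignore-daemonsets
}

# Make the node schedulable again once its kubelet has been upgraded.
uncordon_node() {
  "$KUBECTL" uncordon "$1"
}

# Usage (not run here):
#   drain_node master1
#   ... upgrade kubelet and kubectl ...
#   uncordon_node master1
```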

9. Install the target versions of kubelet and kubectl

[root@master1 ~]# yum install -y kubelet-1.18.20-0 kubectl-1.18.20-0 --disableexcludes=kubernetes

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirror-hk.koddos.net

* epel: mirrors.ustc.edu.cn

* extras: mirror-hk.koddos.net

* updates: mirror-hk.koddos.net

Resolving Dependencies

--> Running transaction check

---> Package kubectl.x86_64 0:1.18.8-0 will be updated

---> Package kubectl.x86_64 0:1.18.20-0 will be an update

---> Package kubelet.x86_64 0:1.18.8-0 will be updated

---> Package kubelet.x86_64 0:1.18.20-0 will be an update

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================
 Package          Arch            Version              Repository          Size
================================================================================
Updating:
 kubectl          x86_64          1.18.20-0            kubernetes         9.5 M
 kubelet          x86_64          1.18.20-0            kubernetes          21 M

Transaction Summary
================================================================================
Upgrade  2 Packages

Total download size: 30 M

Downloading packages:

Delta RPMs disabled because /usr/bin/applydeltarpm not installed.

(1/2): 16f7bea4bddbf51e2f5582bce368bf09d4d1ed98a82ca1e930e9fe183351a653-kubectl-1.18.20-0.x86_64.rpm | 9.5 MB 00:00:28

(2/2): 942aea8dd81ddbe1873f7760007e31325c9740fa9f697565a83af778c22a419d-kubelet-1.18.20-0.x86_64.rpm | 21 MB 00:00:32

--------------------------------------------------------------------------------
Total                                              949 kB/s |  30 MB  00:00:32

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Updating : kubelet-1.18.20-0.x86_64 1/4

Updating : kubectl-1.18.20-0.x86_64 2/4

Cleanup : kubectl-1.18.8-0.x86_64 3/4

Cleanup : kubelet-1.18.8-0.x86_64 4/4

Verifying : kubectl-1.18.20-0.x86_64 1/4

Verifying : kubelet-1.18.20-0.x86_64 2/4

Verifying : kubectl-1.18.8-0.x86_64 3/4

Verifying : kubelet-1.18.8-0.x86_64 4/4

Updated:

kubectl.x86_64 0:1.18.20-0 kubelet.x86_64 0:1.18.20-0

Complete!

10. Reload systemd and restart kubelet

systemctl daemon-reload

systemctl restart kubelet
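
The kubelet may need a few seconds to come back after the restart. Before uncordoning the node, a script could wait for it with a small retry loop like the one sketched here. The `retry` function is a hypothetical helper, not part of any tool; `systemctl is-active --quiet` is the real systemd check.

```shell
# Hypothetical helper: run a command up to $1 times, sleeping $2 seconds
# between attempts; returns success as soon as the command succeeds.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  until "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
}

# Usage on a real host (not run here):
#   retry 10 3 systemctl is-active --quiet kubelet
```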

11. Uncordon the node to bring it back online

[root@master1 ~]# kubectl uncordon master1

node/master1 uncordoned

Upgrading the worker nodes

1. Install the target version of kubeadm

yum install -y kubeadm-1.18.20-0 --disableexcludes=kubernetes

2. Upgrade the worker node

kubeadm upgrade node
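
Which kubeadm subcommand to run depends on the node: only the first master gets `kubeadm upgrade apply`, and every other master or worker gets `kubeadm upgrade node`. A script that loops over nodes could encode that rule roughly as follows; the function name and its `first` argument are hypothetical.

```shell
# Hypothetical helper: print the kubeadm command for a node.
#   $1 = "first" for the first master node, anything else for other nodes
#   $2 = target version (only used for the first master node)
upgrade_command() {
  if [ "$1" = "first" ]; then
    echo "kubeadm upgrade apply $2"
  else
    echo "kubeadm upgrade node"
  fi
}

# Usage (not run here):
#   upgrade_command first v1.18.20   # for master1
#   upgrade_command other            # for master2, master3, node1
```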

3. Drain the node: evict its Pods and mark it unschedulable

kubectl drain node1 --ignore-daemonsets

4. Install the target versions of kubectl and kubelet

yum install -y kubelet-1.18.20-0 kubectl-1.18.20-0 --disableexcludes=kubernetes

5. Reload systemd and restart kubelet

systemctl daemon-reload

systemctl restart kubelet

6. Uncordon the node to bring it back online

kubectl uncordon node1
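
With several workers, the six steps above are easy to get out of order. They could be strung together as sketched here, with a dry-run switch so the sequence can be reviewed first. The names `run`, `upgrade_worker`, and `DRY_RUN` are hypothetical, and the sketch assumes the host running it has both root access to the node's packages and a working kubectl config; on a typical worker the two kubectl steps would be run from a master instead.

```shell
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical wrapper around the worker steps; stops at the first failure.
#   $1 = node name as shown by kubectl get nodes, e.g. node1
#   $2 = package version, e.g. 1.18.20-0
upgrade_worker() {
  node="$1"
  pkgver="$2"
  run yum install -y "kubeadm-$pkgver" --disableexcludes=kubernetes &&
  run kubeadm upgrade node &&
  run kubectl drain "$node" --ignore-daemonsets &&
  run yum install -y "kubelet-$pkgver" "kubectl-$pkgver" --disableexcludes=kubernetes &&
  run systemctl daemon-reload &&
  run systemctl restart kubelet &&
  run kubectl uncordon "$node"
}

# Usage (not run here):
#   DRY_RUN=1 upgrade_worker node1 1.18.20-0
```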

7. Verify that the upgrade succeeded

[root@master1 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.20", GitCommit:"1f3e19b7beb1cc0110255668c4238ed63dadb7ad", GitTreeState:"clean", BuildDate:"2021-06-16T12:58:51Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.20", GitCommit:"1f3e19b7beb1cc0110255668c4238ed63dadb7ad", GitTreeState:"clean", BuildDate:"2021-06-16T12:51:17Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}

[root@master1 ~]# kubectl get nodes

NAME      STATUS   ROLES    AGE    VERSION
master1   Ready    master   5d6h   v1.18.20
master2   Ready    master   5d6h   v1.18.20
master3   Ready    master   5d6h   v1.18.20
node1     Ready    <none>   5d6h   v1.18.20
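
Reading the VERSION column by eye works for four nodes. For a larger cluster the same check could be scripted, roughly as sketched here; `nodes_ok` is a hypothetical name and the column positions assume the default `kubectl get nodes` layout shown above.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` on stdin and fail
# if any node is not Ready or does not report the expected kubelet version.
nodes_ok() {
  awk -v want="$1" '
    # Columns: NAME STATUS ROLES AGE VERSION
    $2 != "Ready" || $5 != want { print "not upgraded: " $1; bad = 1 }
    END { exit bad + 0 }
  '
}

# Usage on a real host (not run here):
#   kubectl get nodes --no-headers | nodes_ok v1.18.20
```

A node that is still cordoned reports `Ready,SchedulingDisabled`, so it is flagged as well.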

[root@master1 ~]# kubectl get pods --all-namespaces

NAMESPACE     NAME                                      READY   STATUS      RESTARTS   AGE
default       nfs-client-provisioner-6d4469b5b5-x7f67   1/1     Running     0          7h56m
default       nfs-web-0                                 1/1     Running     0          7h56m
default       nfs-web-1                                 1/1     Running     0          7h56m
default       nfs-web-2                                 1/1     Running     0          7h55m
kube-system   coredns-66bff467f8-qbl9x                  1/1     Running     0          115m
kube-system   coredns-66bff467f8-v6ljc                  1/1     Running     0          112m
kube-system   etcd-master1                              1/1     Running     1          4d9h
kube-system   etcd-master2                              1/1     Running     1          4d9h
kube-system   etcd-master3                              1/1     Running     1          4d9h
kube-system   kube-apiserver-master1                    1/1     Running     0          140m
kube-system   kube-apiserver-master2                    1/1     Running     0          117m
kube-system   kube-apiserver-master3                    1/1     Running     0          116m
kube-system   kube-controller-manager-master1           1/1     Running     0          140m
kube-system   kube-controller-manager-master2           1/1     Running     0          117m
kube-system   kube-controller-manager-master3           1/1     Running     0          116m
kube-system   kube-flannel-ds-8p4rh                     1/1     Running     1          4d9h
kube-system   kube-flannel-ds-ffk5w                     1/1     Running     2          4d9h
kube-system   kube-flannel-ds-m4x7j                     1/1     Running     1          4d9h
kube-system   kube-flannel-ds-xznr2                     1/1     Running     1          4d9h
kube-system   kube-proxy-4z54q                          1/1     Running     0          140m
kube-system   kube-proxy-8wxvv                          1/1     Running     0          140m
kube-system   kube-proxy-dkd4r                          1/1     Running     0          140m
kube-system   kube-proxy-jvdsg                          1/1     Running     0          140m
kube-system   kube-scheduler-master1                    1/1     Running     0          140m
kube-system   kube-scheduler-master2                    1/1     Running     0          117m
kube-system   kube-scheduler-master3                    1/1     Running     0          116m
velero        minio-7b4ff54f67-gtvq5                    1/1     Running     0          6h9m
velero        minio-setup-gkrr2                         0/1     Completed   2          6h9m
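
To confirm that nothing was left in a bad state, the Pod list can be filtered down to the entries that need attention. This is a sketch under the assumption that `Running` and `Completed` are the only acceptable states after the upgrade; `unhealthy_pods` is a hypothetical name.

```shell
# Hypothetical helper: read `kubectl get pods --all-namespaces --no-headers`
# on stdin and list Pods whose STATUS is neither Running nor Completed.
unhealthy_pods() {
  # Columns: NAMESPACE NAME READY STATUS RESTARTS AGE
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}

# Usage on a real host (not run here):
#   kubectl get pods --all-namespaces --no-headers | unhealthy_pods
```

Empty output means every Pod is in one of the two accepted states.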