Deploying a k8s Cluster on CentOS 7

Introduction

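Kubernetes is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and powerful, and it provides mechanisms for deploying, scheduling, updating, and maintaining applications.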

Nodes in this deployment

192.168.56.11 master

192.168.56.12 node01

192.168.56.13 node02

192.168.56.14 node03

192.168.56.15 node04

Prerequisites

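1. To simplify deployment, set each host's hostname in advance. The new hostname appears in the prompt after you log in again.

[root@master ~]# hostnamectl set-hostname master    # on the worker nodes use node01 to node04 instead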

[root@master ~]# more /etc/hostname

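2. Add the Aliyun Docker CE yum repository (yum-config-manager is provided by the yum-utils package), then install and start Docker on every node. The image script in the master section relies on the docker command.

[root@master ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@master ~]# yum install -y docker-ce

[root@master ~]# systemctl enable docker && systemctl start docker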

3. Stop the firewall, or open the ports Kubernetes needs

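[root@master ~]# firewall-cmd --state          # show the current firewall state

[root@master ~]# systemctl stop firewalld      # stop the firewall until the next reboot

[root@master ~]# systemctl disable firewalld   # keep it from starting again at boot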

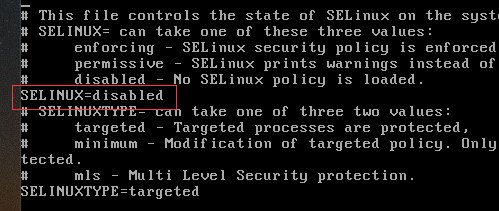

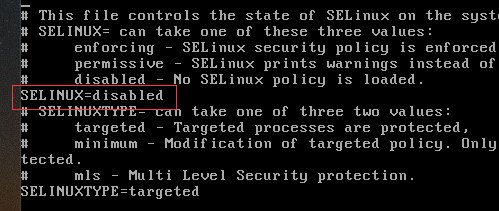
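If you prefer to keep firewalld running, open the required ports instead of stopping it. The sketch below is a hypothetical helper, not part of the original setup: the port list is an assumption taken from the required-ports table in the Kubernetes v1.18 kubeadm installation docs, plus UDP 8472 for flannel's VXLAN backend. The function names and the `FIREWALL_CMD` override (set it to `echo` for a dry run) are illustrative.

```shell
#!/bin/bash
# Sketch: open the ports Kubernetes v1.18 and flannel (VXLAN backend) use,
# as an alternative to stopping firewalld. The port list is an assumption
# based on the v1.18 kubeadm docs plus flannel's UDP 8472.
# FIREWALL_CMD defaults to firewall-cmd; set FIREWALL_CMD=echo for a dry run.

k8s_ports_for_role() {
  case "$1" in
    master)
      # API server, etcd, kubelet, kube-scheduler, kube-controller-manager,
      # NodePort range (this guide reaches the dashboard via the master IP),
      # flannel VXLAN
      echo "6443/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp 30000-32767/tcp 8472/udp" ;;
    node)
      # kubelet, NodePort range, flannel VXLAN
      echo "10250/tcp 30000-32767/tcp 8472/udp" ;;
    *)
      echo "unknown role: $1 (expected master or node)" >&2
      return 1 ;;
  esac
}

open_k8s_ports() {
  local fw="${FIREWALL_CMD:-firewall-cmd}" ports port
  ports=$(k8s_ports_for_role "$1") || return 1
  for port in $ports; do
    # --permanent stores the rule in the saved configuration
    $fw --permanent --add-port="$port" || return 1
  done
  # Reload so the permanent rules also apply to the running firewall
  $fw --reload
}

# Usage (as root): open_k8s_ports master   # on 192.168.56.11
#                  open_k8s_ports node     # on node01 to node04
```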

4. Disable SELinux by setting SELINUX=disabled

[root@master ~]# vim /etc/selinux/config
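The same change can be made without an editor. A minimal sketch, assuming the stock `SELINUX=` line in /etc/selinux/config; `disable_selinux_config` is a hypothetical helper name. The file is only read at boot, so `setenforce 0` is typically run as well to put the running system into permissive mode right away.

```shell
#!/bin/bash
# Sketch: set SELINUX=disabled in an SELinux config file (default:
# /etc/selinux/config). The change takes effect at the next boot;
# run "setenforce 0" as root to switch the running system to permissive now.
disable_selinux_config() {
  local conf="${1:-/etc/selinux/config}"
  [ -f "$conf" ] || { echo "no such file: $conf" >&2; return 1; }
  # Rewrite only the active SELINUX= line; SELINUXTYPE= and comments are untouched
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root):
#   disable_selinux_config && setenforce 0
```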

5. Disable swap

5.1 Turn it off for the current boot

[root@master ~]# swapoff -a

[root@master ~]# free -m

5.2 Disable it permanently

[root@master ~]# vim /etc/fstab

# comment out this line

# /dev/mapper/centos-swap swap swap defaults 0 0

[root@master ~]# free -m
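Commenting out the swap entry can also be scripted. A sketch, assuming swap entries contain `swap` as a whitespace-separated field, as in the CentOS default line above; `comment_swap_entries` is a hypothetical helper, and backing up /etc/fstab before running it is a good idea.

```shell
#!/bin/bash
# Sketch: comment out every active fstab entry that mounts swap, so swap stays
# off after a reboot. swapoff -a (step 5.1) turns it off for the current boot.
comment_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix "# " to lines with a swap field
  sed -ri '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/# \1/' "$fstab"
}

# Usage (as root):  cp /etc/fstab /etc/fstab.bak && comment_swap_entries
```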

6. Map hostnames to IP addresses

[root@master ~]# vim /etc/hosts

Add the following lines:

192.168.56.11 master

192.168.56.12 node01

192.168.56.13 node02

192.168.56.14 node03

192.168.56.15 node04
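Because the same steps are repeated on all five machines, appending blindly can leave duplicate lines. A minimal sketch of an idempotent version; `add_cluster_hosts` is a hypothetical helper that takes the hosts file path as an optional argument (default /etc/hosts).

```shell
#!/bin/bash
# Sketch: append the cluster's host entries to a hosts file (default /etc/hosts),
# skipping lines that are already present, so the step can be re-run safely.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry
  while read -r entry; do
    [ -n "$entry" ] || continue
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done <<'EOF'
192.168.56.11 master
192.168.56.12 node01
192.168.56.13 node02
192.168.56.14 node03
192.168.56.15 node04
EOF
}

# Usage (as root, on every node):  add_cluster_hosts
```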

7. Configure kernel parameters so that bridged traffic is passed to the iptables chains

7.1 Permanent change

[root@master ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

7.2 Temporary change (until the next reboot)

[root@master ~]# sysctl net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-iptables = 1

[root@master ~]# sysctl net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-ip6tables = 1
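The two `net.bridge.*` keys only exist while the `br_netfilter` kernel module is loaded; without it, `sysctl -p` reports that it cannot stat the corresponding files under /proc/sys/net/bridge. A sketch that persists both the module and the settings; the helper name `write_k8s_netconf` and its root-directory argument (so it can be tried in a scratch directory) are assumptions, and it relies on systemd's modules-load.d mechanism, which CentOS 7 uses.

```shell
#!/bin/bash
# Sketch: persist the bridge netfilter settings for Kubernetes.
# The root argument defaults to "/"; pass a scratch directory to try it
# without touching /etc.
write_k8s_netconf() {
  local root="${1:-/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # systemd-modules-load reads this file at boot and loads br_netfilter
  echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  # Same keys as step 7.1
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage (as root):
#   write_k8s_netconf && modprobe br_netfilter && sysctl --system
```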

8. Configure the Aliyun Kubernetes yum repository

[root@master ~]# cat <<EOF> /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

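Glossary

- [] the text in square brackets is the repository id; it must be unique and identifies the repository

- name: the repository name, free-form

- baseurl: the repository URL

- enabled: whether the repository is used; the default, 1, means enabled

- gpgcheck: whether to verify the signatures of packages downloaded from this repository; 1 means verify

- repo_gpgcheck: whether to verify the signature of the repository metadata (the package list); 1 means verify

- gpgkey=URL: location of the public key used to check signatures. It is required when gpgcheck=1 and can be omitted when gpgcheck=0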
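The rule in the last item (gpgkey is required when gpgcheck=1) can be checked mechanically. A minimal sketch, assuming a .repo file with a single section laid out like the one above; `check_repo_gpg` is a hypothetical helper, not a yum command.

```shell
#!/bin/bash
# Sketch: print a .repo section's id and flags, and fail if gpgcheck=1 but
# no gpgkey is configured (the rule from the glossary above).
check_repo_gpg() {
  awk '
    /^\[.*\]$/ { id = substr($0, 2, length($0) - 2); next }   # [repository id]
    /=/ {
      key = $0; sub(/[ \t]*=.*/, "", key)        # text before the first "="
      val = $0; sub(/^[^=]*=[ \t]*/, "", val)    # text after the first "="
      if (key == "enabled")  enabled = val
      if (key == "gpgcheck") gpgcheck = val
      if (key == "gpgkey")   gpgkey = val
    }
    END {
      printf "repo=%s enabled=%s gpgcheck=%s\n", id, enabled, gpgcheck
      if (gpgcheck == "1" && gpgkey == "") {
        print "gpgcheck=1 but no gpgkey is set" > "/dev/stderr"
        exit 1
      }
    }' "$1"
}

# Usage:  check_repo_gpg /etc/yum.repos.d/kubernetes.repo
```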

9. Refresh the yum cache

[root@master ~]# yum clean all

[root@master ~]# yum makecache

10. Install lrzsz, a file upload/download tool for Linux terminals

[root@master ~]# yum -y install lrzsz

Master node installation

1. Check the available versions

[root@master ~]# yum list kubelet --showduplicates | sort -r

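2. Install kubelet, kubeadm, and kubectl

- The master and worker nodes must run the same Kubernetes version, and the three packages must also be the same version as each other. This guide uses version 1.18.2.

2.1 Install the three packages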

[root@master ~]# yum install -y kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2
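Because the three packages must share one version, a quick check after installation can catch a mismatch. A sketch: the hypothetical `versions_consistent` helper reads one version per line and succeeds only if they are all identical; the usage line feeds it from `rpm -q --qf`.

```shell
#!/bin/bash
# Sketch: succeed only if every version read from stdin (one per line) is the same.
versions_consistent() {
  local distinct
  distinct=$(grep -v '^$' | sort -u | wc -l)
  if [ "$distinct" -eq 1 ]; then
    echo "ok"
  else
    echo "version mismatch ($distinct distinct versions)" >&2
    return 1
  fi
}

# Usage on any node after step 2.1:
#   rpm -q --qf '%{VERSION}\n' kubelet kubeadm kubectl | versions_consistent
```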

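2.2 What the packages do

- kubelet: runs on every node in the cluster and starts Pods, containers, and related objects

- kubeadm: the command-line tool that initializes and bootstraps the cluster

- kubectl: the command-line client for talking to the cluster; with it you deploy and manage applications, inspect resources, and create, delete, and update components

2.3 Enable kubelet at boot and start it

[root@master ~]# systemctl enable kubelet && systemctl start kubelet

Until kubeadm init (or kubeadm join on a worker) has run, kubelet has no configuration and keeps restarting; this is expected.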

3. Pull the images from the Aliyun mirror

3.1 Create the image script

[root@master ~]# touch image.sh

3.2 Edit its contents

[root@master ~]# vim image.sh

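#!/bin/bash
# Pull the images kubeadm needs from the Aliyun mirror and retag them as k8s.gcr.io/...
set -e

url=registry.cn-hangzhou.aliyuncs.com/google_containers
version=v1.18.2

# "kubeadm config images list" prints names such as k8s.gcr.io/kube-apiserver:v1.18.2;
# keep only the part after the registry (name:tag)
images=($(kubeadm config images list --kubernetes-version="$version" | awk -F '/' '{print $2}'))

for imagename in "${images[@]}"; do
  docker pull "$url/$imagename"
  docker tag "$url/$imagename" "k8s.gcr.io/$imagename"
  docker rmi -f "$url/$imagename"
done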

- url is the Aliyun mirror registry, and version is the Kubernetes version being installed
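The renaming that image.sh performs can be previewed without Docker or network access. A sketch: the hypothetical `print_image_plan` reads image names in the `kubeadm config images list` format and prints, for each, the mirror image that is pulled and the k8s.gcr.io name it is retagged to. (kubeadm also accepts `--image-repository` on `kubeadm init` and `kubeadm config images pull`, which avoids retagging altogether.)

```shell
#!/bin/bash
# Sketch: show, for each image from "kubeadm config images list", which mirror
# image image.sh pulls and which k8s.gcr.io name it is retagged to.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

print_image_plan() {
  local full name
  while read -r full; do
    [ -n "$full" ] || continue
    name="${full##*/}"              # strip the registry path, keep name:tag
    echo "pull $MIRROR/$name -> tag k8s.gcr.io/$name"
  done
}

# Usage:
#   kubeadm config images list --kubernetes-version=v1.18.2 | print_image_plan
```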

3.3 Make the script executable and run it

[root@master ~]# chmod u+x image.sh

[root@master ~]# more image.sh

[root@master ~]# ./image.sh

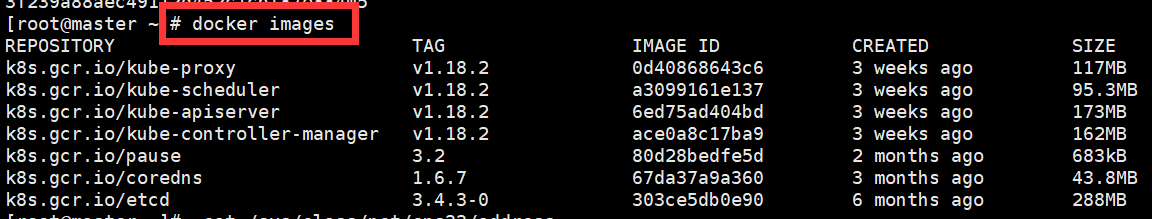

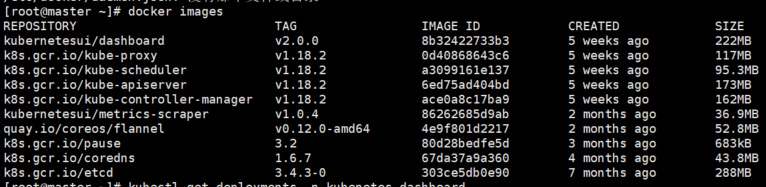

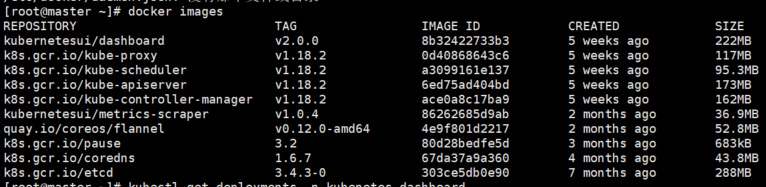

3.4 List the images

[root@master ~]# docker images

- The image list looks like this:

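4. Initialize the master

4.1 Initialize

[root@master ~]# kubeadm init --apiserver-advertise-address 192.168.56.11 --pod-network-cidr=10.244.0.0/16

- --apiserver-advertise-address sets the IP address (of one of the master's interfaces) that the API server advertises; --pod-network-cidr sets the IP range for Pods. 10.244.0.0/16 is the network the flannel manifest used in step 5 expects by default.

- The master needs at least 2 CPU cores. On a single-core machine the kubeadm preflight check fails with: the number of available CPUs 1 is less than the required 2.

- Save the kubeadm join command printed at the end of the output; you need it later to add each node to the cluster. For example:

kubeadm join 192.168.56.11:6443 --token pe9tlb.kuoifhlthdi0t0iq \
    --discovery-token-ca-cert-hash sha256:eb4e4530d44b333ba8428dc26dda8ab9c87d8eec196d75480e202834065f14cd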
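The same init options can also be kept in a kubeadm configuration file, which is easier to reuse. A sketch, assuming the `kubeadm.k8s.io/v1beta2` config API that kubeadm 1.18 reads; the helper `write_kubeadm_config` and the file name kubeadm-config.yaml are illustrative choices.

```shell
#!/bin/bash
# Sketch: write a kubeadm config equivalent to
#   kubeadm init --apiserver-advertise-address 192.168.56.11 --pod-network-cidr=10.244.0.0/16
# for kubeadm 1.18 (config API kubeadm.k8s.io/v1beta2).
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.11   # same as --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.2
networking:
  podSubnet: 10.244.0.0/16          # same as --pod-network-cidr
EOF
}

# Usage on the master (as root):
#   write_kubeadm_config && kubeadm init --config kubeadm-config.yaml
```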

4.2 Set up the environment so kubectl can reach the cluster

[root@master ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master ~]# source ~/.bash_profile

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

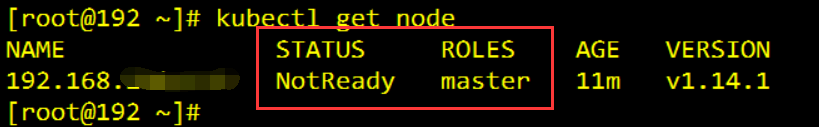

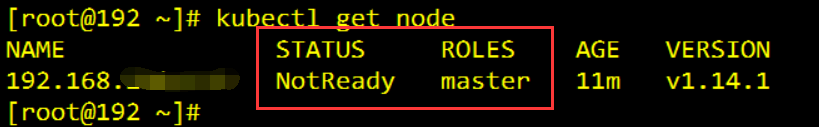

4.3 Run kubectl, as shown in the screenshot:

[root@master ~]# kubectl get node

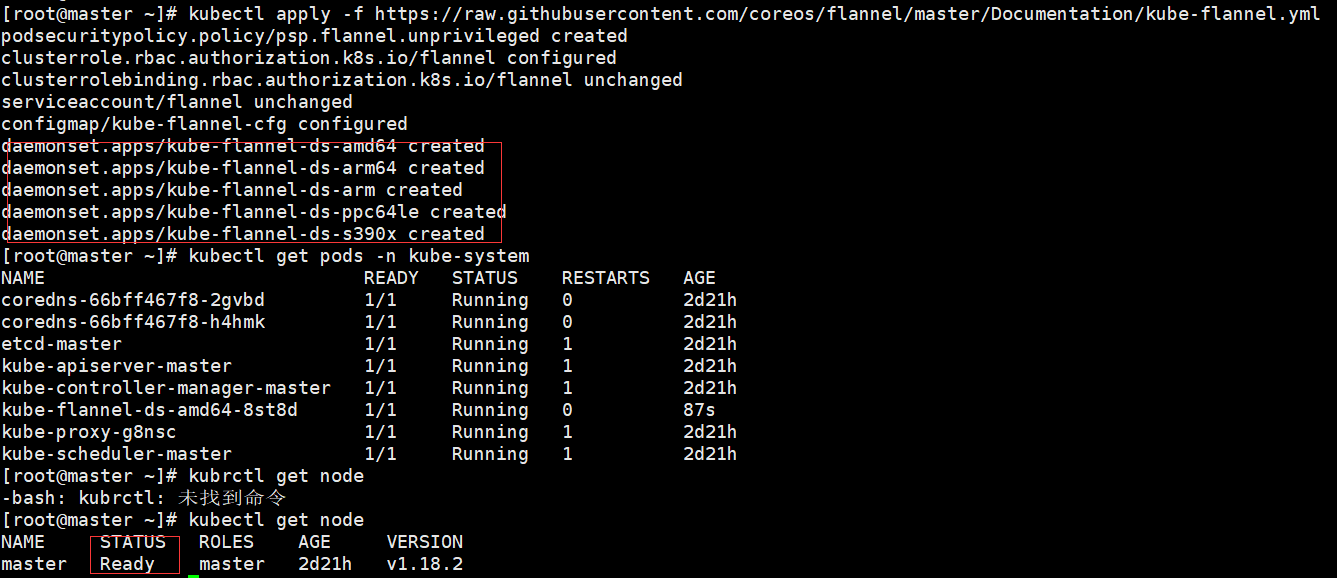

5、安装Pod网络插件(CNI)

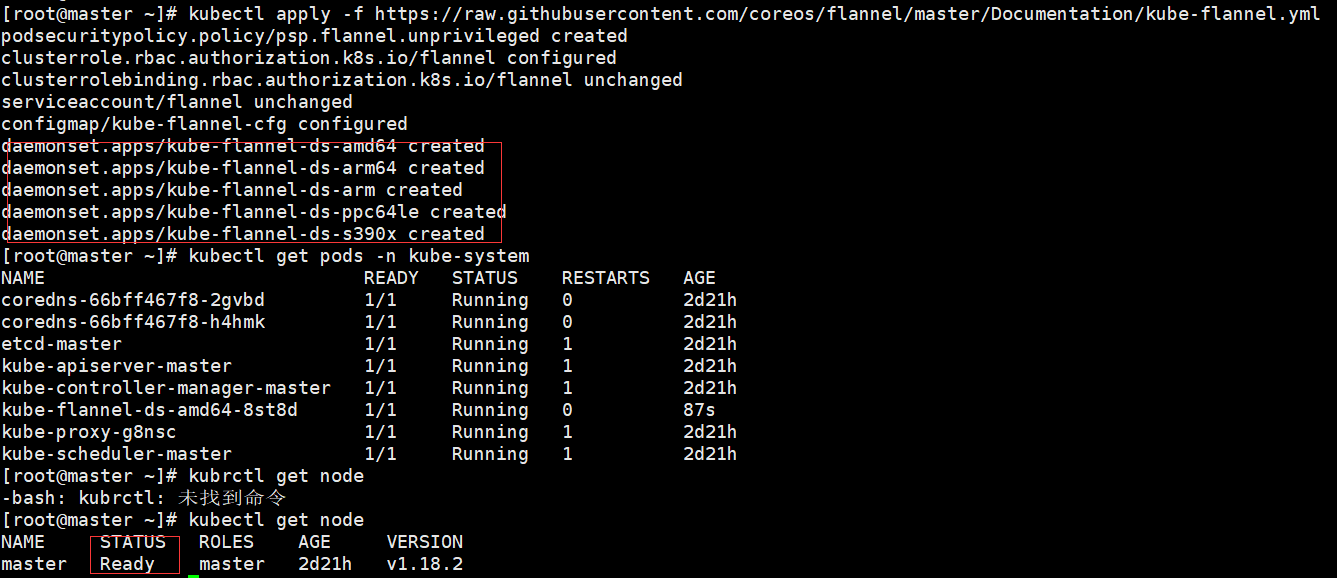

- 运行过程如图:

5.1、安装插件

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

5.2 Check whether the deployment succeeded

[root@master ~]# kubectl get pods -n kube-system

5.3 Check the node again; its status should now be Ready

[root@master ~]# kubectl get node
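For scripting, the Ready check can be automated. A sketch: the hypothetical `all_nodes_ready` reads `kubectl get nodes --no-headers` output and fails if any node's STATUS is not exactly `Ready` (so `NotReady` or `Ready,SchedulingDisabled` both count as not ready).

```shell
#!/bin/bash
# Sketch: read "kubectl get nodes --no-headers" output on stdin and succeed only
# if every node's STATUS column (field 2) is exactly "Ready".
all_nodes_ready() {
  awk '
    NF == 0 { next }
    { total++; if ($2 != "Ready") { notready++; print "not ready: " $1 } }
    END { exit (total == 0 || notready > 0) }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
# kubectl can also block until the nodes are Ready:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```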

6. Joining the cluster (run the following on the master)

6.1 List the node bootstrap tokens

[root@master ~]# kubeadm token list

| TOKEN | TTL | EXPIRES | USAGES | DESCRIPTION | EXTRA GROUPS |
|---|---|---|---|---|---|
| 2pifuy.7860pe6z0zp1tykt | 23h | 2020-05-28T14:02:07+08:00 | authentication,signing | | system:bootstrappers:kubeadm:default-node-token |

6.2 If the token has expired, create a new one (by default a token is valid for 24 hours)

[root@master ~]# kubeadm token create

W0527 14:02:07.213228 25628 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

2pifuy.7860pe6z0zp1tykt
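Bootstrap tokens have the fixed form `[a-z0-9]{6}.[a-z0-9]{16}` (a token ID, a dot, and a token secret), so a token copied from a terminal can be sanity-checked before use. A sketch with a hypothetical `is_bootstrap_token` helper. kubeadm can also print a complete, ready-to-run join command with a fresh token via `kubeadm token create --print-join-command`.

```shell
#!/bin/bash
# Sketch: check that a string has the bootstrap token shape <6 chars>.<16 chars>,
# using only lowercase letters and digits.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}

# Usage:  is_bootstrap_token "2pifuy.7860pe6z0zp1tykt" && echo "format ok"
```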

6.3 Compute the sha256 hash of the CA certificate's public key (the value for --discovery-token-ca-cert-hash)

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

eb4e4530d44b333ba8428dc26dda8ab9c87d8eec196d75480e202834065f14cd
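The hash pipeline and the join command can be wrapped in two small functions. A sketch: `ca_cert_hash` and `build_join_cmd` are hypothetical helpers; the pipeline assumes an RSA CA key (kubeadm's default), since `openssl rsa` only reads RSA keys.

```shell
#!/bin/bash
# Sketch: wrap the step 6.3 pipeline and assemble a join command from its parts.
# Assumes an RSA CA key (kubeadm's default), because "openssl rsa" only reads RSA keys.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Arguments: API server endpoint, bootstrap token, CA hash (hex, without "sha256:")
build_join_cmd() {
  printf 'kubeadm join %s --token %s \\\n    --discovery-token-ca-cert-hash sha256:%s\n' "$1" "$2" "$3"
}

# Usage on the master:
#   build_join_cmd 192.168.56.11:6443 2pifuy.7860pe6z0zp1tykt "$(ca_cert_hash)"
```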


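Node installation

Complete the prerequisites above on every node as well.

Install kubelet, kubeadm, and kubectl the same way as on the master.

Pull the images the same way as on the master.

Joining the nodes to the cluster

Run the following on each node (if the token from kubeadm init has expired, use a new one from step 6):

[root@node01 ~]# kubeadm join 192.168.56.11:6443 --token pe9tlb.kuoifhlthdi0t0iq \
    --discovery-token-ca-cert-hash sha256:eb4e4530d44b333ba8428dc26dda8ab9c87d8eec196d75480e202834065f14cd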

Dashboard installation

Official Kubernetes tutorial

Download and apply the dashboard manifest (recommended.yaml):

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

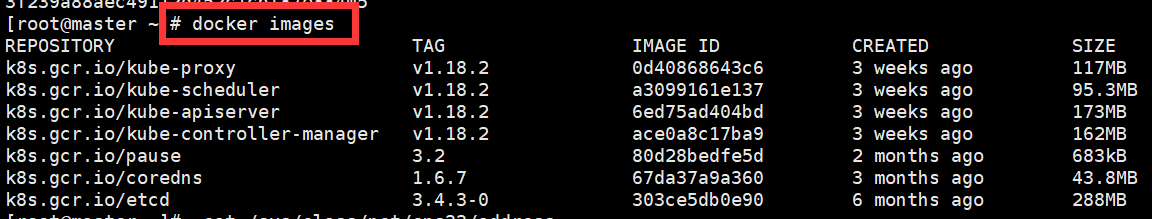

[root@master ~]# docker images

The manifest defines the dashboard's resources (see the YAML file for details):

- the kubernetes-dashboard namespace

- a ServiceAccount used for access

- a Service for reaching the application, of type ClusterIP by default

- Secrets: kubernetes-dashboard-certs, kubernetes-dashboard-csrf, and kubernetes-dashboard-key-holder

- a ConfigMap with settings

- RBAC objects: a Role, ClusterRole, RoleBinding, and ClusterRoleBinding

- Deployments: kubernetes-dashboard (the core image) and dashboard-metrics-scraper for metrics integration

All kubernetes-dashboard resources, including the Deployments, Services, Secrets, and ConfigMap, are installed in the kubernetes-dashboard namespace. Verify them as follows:

After installation the kubernetes-dashboard Service is of type ClusterIP. To reach the dashboard from outside the cluster, change its type to NodePort:

[root@master ~]# kubectl edit services -n kubernetes-dashboard kubernetes-dashboard

service/kubernetes-dashboard edited

[root@master ~]# kubectl get services -n kubernetes-dashboard

NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.96.153.90   <none>        8000/TCP        3h6m
kubernetes-dashboard        NodePort    10.107.1.246   <none>        443:32685/TCP   3h6m
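`kubectl edit` opens an editor; `kubectl patch` applies the same type change non-interactively, which is handier in scripts. A sketch; the helper name and the `KUBECTL` override (set it to `echo` to print the command instead of running it) are illustrative assumptions.

```shell
#!/bin/bash
# Sketch: switch the dashboard Service to NodePort without opening an editor.
# KUBECTL defaults to kubectl; set KUBECTL=echo to print the command instead.
expose_dashboard_nodeport() {
  local kubectl="${KUBECTL:-kubectl}"
  $kubectl patch service kubernetes-dashboard -n kubernetes-dashboard \
    -p '{"spec":{"type":"NodePort"}}'
}

# Usage on the master, then read the assigned port from the PORT(S) column:
#   expose_dashboard_nodeport && kubectl get services -n kubernetes-dashboard
```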

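Configure RBAC: create a ServiceAccount named testcloud and bind it to the built-in cluster-admin ClusterRole with a ClusterRoleBinding (this grants full administrative access to the cluster).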

[root@master ~]# touch dashboard-rbac.yaml

[root@master ~]# vim dashboard-rbac.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: testcloud
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: testcloud
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: testcloud
  namespace: kubernetes-dashboard

[root@master ~]# chmod u+x dashboard-rbac.yaml   # optional; kubectl apply does not need the file to be executable

[root@master ~]# kubectl apply -f dashboard-rbac.yaml

serviceaccount/testcloud created

clusterrolebinding.rbac.authorization.k8s.io/testcloud created
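The ServiceAccount name must be repeated in the binding's subjects; if the two names differ, the binding refers to an account that does not exist and the token gets no extra permissions. A sketch that generates both objects from one variable; `dashboard_admin_manifest` is a hypothetical helper, and `kubectl apply -f -` reads the manifest from stdin.

```shell
#!/bin/bash
# Sketch: generate the ServiceAccount + ClusterRoleBinding manifest from one name,
# so metadata.name and subjects[].name cannot drift apart.
dashboard_admin_manifest() {
  local sa="${1:-testcloud}" ns="kubernetes-dashboard"
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: $sa
  namespace: $ns
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: $sa
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: $sa
  namespace: $ns
EOF
}

# Usage on the master:
#   dashboard_admin_manifest testcloud | kubectl apply -f -
```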

[root@master ~]# kubectl get serviceaccounts -n kubernetes-dashboard

NAME                   SECRETS   AGE
default                1         3h37m
kubernetes-dashboard   1         3h37m
testcloud              1         64s

[root@master ~]# kubectl get secret -n kubernetes-dashboard

NAME                               TYPE                                  DATA   AGE
default-token-7px65                kubernetes.io/service-account-token   3      4h4m
kubernetes-dashboard-certs         Opaque                                0      4h4m
kubernetes-dashboard-csrf          Opaque                                1      4h4m
kubernetes-dashboard-key-holder    Opaque                                2      4h4m
kubernetes-dashboard-token-flz2n   kubernetes.io/service-account-token   3      4h4m
testcloud-token-wp2ts              kubernetes.io/service-account-token   3      27m

[root@master ~]# kubectl get secrets -n kubernetes-dashboard testcloud-token-wp2ts -o yaml

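Copy the value of the token field under data. Secret data is stored base64-encoded, so decode it:

[root@master ~]# echo '<your token value>' | base64 -d

Copy the decoded token and open https://<host IP>:<NodePort> in a browser, choose Token on the login page, and paste the token. In this test environment the address is https://192.168.56.11:32685/.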
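Finding and decoding the token can be combined into one step. A sketch: the hypothetical `decode_secret_token` reads the `-o yaml` secret output and decodes `data.token`; it assumes the usual layout with data keys indented two spaces. A jsonpath query, shown in the usage comment, gives the same result without parsing YAML.

```shell
#!/bin/bash
# Sketch: pull data.token out of "kubectl get secret ... -o yaml" output on stdin
# and base64-decode it. Assumes data keys are indented two spaces under "data:".
decode_secret_token() {
  awk '/^data:/ { in_data = 1; next }
       /^[^ ]/  { in_data = 0 }
       in_data && $1 == "token:" { print $2 }' | base64 -d
}

# Usage on the master:
#   kubectl get secrets -n kubernetes-dashboard testcloud-token-wp2ts -o yaml | decode_secret_token
# Equivalent jsonpath query:
#   kubectl get secret -n kubernetes-dashboard testcloud-token-wp2ts -o jsonpath='{.data.token}' | base64 -d
```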

References