Modified from https://github.com/jiangqy/LSTM-Classification-pytorch.

I. Task: SMS Text Classification

1.1 Raw Data

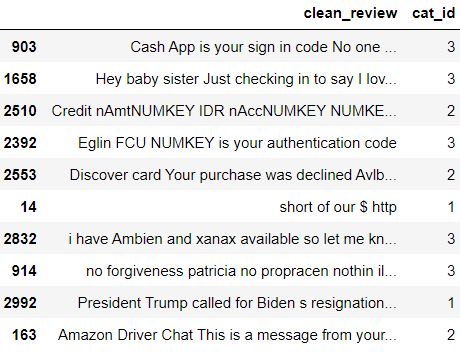

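The texts are mostly in English and were labeled by hand into four classes: 0, 1, 2, 3.

Text length: at most 300 words.

The texts are already preprocessed: every character other than letters was removed, and words are separated by spaces.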
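The preprocessing code itself is not part of the article. A minimal sketch of one way to produce the described format, assuming a regular expression that keeps ASCII letters only (the original may also have lowercased the text or treated non-ASCII letters differently; `clean_text` is a hypothetical name):

```python
import re


def clean_text(text: str) -> str:
    """Keep only ASCII letters; words end up separated by single spaces."""
    # Replace every run of non-letter characters with one space, then trim both ends
    return re.sub(r"[^A-Za-z]+", " ", text).strip()


print(clean_text("Your OTP is 4821. Don't share it!"))
```

Note that an apostrophe splits a contraction into two tokens ("Don t"), and digits disappear entirely.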

```python
import pandas as pd

df = pd.read_csv('./data/labeled.csv')
df = df[['clean_review', 'cat_id']]  # text column and label column
df.sample(10)                         # show 10 random rows
```
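Every text is later padded or truncated to a fixed length, so it is worth checking the length distribution and class balance before choosing that length. A sketch on a toy DataFrame with the article's column names (the rows are made up):

```python
import pandas as pd

# Toy stand-in for the labeled data; only the column names come from the article
df = pd.DataFrame({
    "clean_review": [
        "your verification code is ready",
        "meeting moved to friday",
        "win a free prize now",
        "call me back",
    ],
    "cat_id": [0, 1, 2, 1],
})

lengths = df["clean_review"].str.split().str.len()   # words per text
print(lengths.max())                                  # longest text in words
print(df["cat_id"].value_counts().sort_index())       # number of samples per class
```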

II. Building the Training Samples

1. Features and Labels

```python
import torch
from torch.utils.data.dataset import Dataset
import pandas as pd


class Dictionary(object):
    """Two-way mapping between words and integer ids."""

    def __init__(self):
        self.word2idx = {}
        self.idx2word = []

    def add_word(self, word):
        # Give a new word the next free id; return the word's id either way
        if word not in self.word2idx:
            self.idx2word.append(word)
            self.word2idx[word] = len(self.idx2word) - 1
        return self.word2idx[word]

    def __len__(self):
        return len(self.idx2word)


class Corpus(object):
    def __init__(self, sen_len):
        self.dictionary = Dictionary()
        # Every text is padded/truncated to sen_len; padding uses id 0, which is reserved for "UNK"
        self.dictionary.add_word("UNK")
        self.texts, self.labels = self.tokenize(sen_len)

    def tokenize(self, sen_len):
        """
        Build the dictionary and convert each text from words to word ids.

        :param sen_len: fixed text length (longer texts are truncated, shorter ones padded with 0)
        :return: (list of LongTensor of shape (sen_len,), list of int labels)
        """
        df = pd.read_csv("./data/clean_review.csv")
        token_text = []
        tokens = 0  # total number of words seen
        labels = []
        for _, row in df.iterrows():
            line = row["clean_review"]          # text of this row
            labels.append(int(row["cat_id"]))   # label of this sample
            # Drop the empty strings that consecutive spaces would produce
            words = [w.strip() for w in line.split(" ") if w.strip()]
            tokens += len(words)
            for word in words:
                self.dictionary.add_word(word)

            txt = torch.zeros(sen_len, dtype=torch.long)  # all-padding vector of length sen_len
            for index, word in enumerate(words[:sen_len]):
                txt[index] = self.dictionary.word2idx[word]
            token_text.append(txt)
        return token_text, labels
```
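The word-to-id conversion with padding and truncation can be tried without the CSV file. The sketch below mirrors `Corpus.tokenize` in plain Python on two made-up texts (`build_vocab` and `encode` are hypothetical names). One deliberate difference: `tokenize` looks words up with `word2idx[word]`, which raises `KeyError` for a word that was not seen while building the dictionary, as can happen with new texts at prediction time; here such words fall back to id 0 ("UNK") via `dict.get`.

```python
def build_vocab(texts):
    """Assign consecutive ids to words in order of first appearance; id 0 is "UNK"/padding."""
    word2idx = {"UNK": 0}
    for text in texts:
        for word in text.split():
            if word not in word2idx:
                word2idx[word] = len(word2idx)
    return word2idx


def encode(text, word2idx, sen_len):
    """Map words to ids, truncate to sen_len, then right-pad with 0."""
    ids = [word2idx.get(w, 0) for w in text.split()][:sen_len]  # unseen words -> 0
    return ids + [0] * (sen_len - len(ids))


vocab = build_vocab(["free prize now", "call me now"])
print(encode("call now please", vocab, 5))             # "please" is unseen
print(encode("free prize now call me now", vocab, 4))  # truncated to 4 ids
```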

2. Custom Dataset

```python
import torch
from torch.utils.data import Dataset


class LSTMDataset(Dataset):
    def __init__(self, sen_len, corpus):
        self.token_text = corpus.texts
        self.labels = corpus.labels
        self.sen_len = sen_len

    def __getitem__(self, index):
        """
        Return the features and the label at the given index.

        :param index: sample index
        :return: (LongTensor of shape (sen_len,), LongTensor of shape (1,))
        """
        text = self.token_text[index]
        label = torch.LongTensor([self.labels[index]])
        return text, label

    def __len__(self):
        return len(self.labels)
```
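To see the tensor shapes the training loop works with, here is a sketch that uses `TensorDataset` filled with random ids as a stand-in for `LSTMDataset` (all sizes are made up). Labels keep the `(1,)` shape that `LSTMDataset` returns, so a batch of labels is `(batch, 1)` and has to be flattened; inputs are `(batch, sen_len)` and are transposed with `.t()` because `nn.LSTM` expects `(seq_len, batch, features)` by default. The last batch can be smaller than the others, which is why the training loop sets `model.batch_size` from each batch.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

sen_len, n_samples = 6, 10
texts = torch.randint(1, 50, (n_samples, sen_len))  # fake word ids
labels = torch.randint(0, 4, (n_samples, 1))        # shape (1,) per sample, like LSTMDataset
loader = DataLoader(TensorDataset(texts, labels), batch_size=4, shuffle=False)

inputs, targets = next(iter(loader))
print(inputs.shape)             # (batch, sen_len)
print(targets.view(-1).shape)   # flattened labels, (batch,)
print(inputs.t().shape)         # (sen_len, batch), the layout fed to the model

batch_sizes = [x.shape[0] for x, _ in loader]
print(batch_sizes)              # the last batch holds the remaining samples
```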

III. LSTM Model

```python
import torch
import torch.nn as nn


class LSTMClassifier(nn.Module):
    """
    LSTM classifier: embedding -> single-layer LSTM -> linear layer
    """

    def __init__(self, embedding_dim, hidden_dim, vocab_size, label_size, batch_size, use_gpu):
        super(LSTMClassifier, self).__init__()
        self.hidden_dim = hidden_dim
        self.batch_size = batch_size
        self.use_gpu = use_gpu
        self.word_embeddings = nn.Embedding(vocab_size, embedding_dim)  # learned embedding matrix
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=1)    # input: (seq_len, batch, embedding_dim)
        self.hidden2label = nn.Linear(hidden_dim, label_size)          # four classes: label_size=4
        self.hidden = self.init_hidden()

    def init_hidden(self):
        # Zero initial hidden and cell states, shape (num_layers, batch, hidden_dim)
        h0 = torch.zeros(1, self.batch_size, self.hidden_dim)
        c0 = torch.zeros(1, self.batch_size, self.hidden_dim)
        if self.use_gpu:
            h0, c0 = h0.cuda(), c0.cuda()
        return (h0, c0)

    def forward(self, sentence):
        # sentence: (seq_len, batch) word ids
        embeds = self.word_embeddings(sentence)            # (seq_len, batch, embedding_dim)
        x = embeds.view(len(sentence), self.batch_size, -1)
        lstm_out, self.hidden = self.lstm(x, self.hidden)  # lstm_out: (seq_len, batch, hidden_dim)
        y = self.hidden2label(lstm_out[-1])                # output at the last time step
        # Return raw logits: nn.CrossEntropyLoss applies log_softmax itself.
        # Use torch.softmax(y, dim=1) only at inference time if probabilities are needed.
        return y
```
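An alternative design, not the author's: when no initial state is passed, `nn.LSTM` starts from zero `h0`/`c0` sized to the current batch. That removes the need to set `model.batch_size` and `model.hidden` before every forward pass, and nothing is carried over from one call to the next, which matters once the model is exported and called outside the training loop. A sketch (the class name and sizes are illustrative):

```python
import torch
import torch.nn as nn


class StatelessLSTMClassifier(nn.Module):
    """Same layers as LSTMClassifier, but no stored batch_size or hidden state."""

    def __init__(self, embedding_dim: int, hidden_dim: int, vocab_size: int, label_size: int):
        super().__init__()
        self.word_embeddings = nn.Embedding(vocab_size, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=1)
        self.hidden2label = nn.Linear(hidden_dim, label_size)

    def forward(self, sentence: torch.Tensor) -> torch.Tensor:
        # sentence: (seq_len, batch) word ids
        embeds = self.word_embeddings(sentence)  # (seq_len, batch, embedding_dim)
        # No (h0, c0) passed: nn.LSTM starts from zeros matching the actual batch size
        lstm_out, _ = self.lstm(embeds)          # (seq_len, batch, hidden_dim)
        return self.hidden2label(lstm_out[-1])   # logits, (batch, label_size)


model = StatelessLSTMClassifier(embedding_dim=16, hidden_dim=32, vocab_size=100, label_size=4)
print(model(torch.randint(0, 100, (300, 8))).shape)  # seq_len=300, batch=8
print(model(torch.randint(0, 100, (300, 3))).shape)  # a different batch size works too
```

With this variant, the lines that set `model.batch_size` and `model.hidden` in the training loop can be dropped. Also note that texts are right-padded with 0, so for a short text `lstm_out[-1]` is the output after a run of padding tokens; `nn.utils.rnn.pack_padded_sequence` is the usual way to read the output at each text's real last word.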

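```python
from torch.utils.data import DataLoader

# Build train_loader; training iterates over it.
# sentence_len and batch_size are hyperparameters defined elsewhere.
corpus = Corpus(sentence_len)
train_set = LSTMDataset(sentence_len, corpus)
train_loader = DataLoader(train_set,
                          batch_size=batch_size,
                          shuffle=True,
                          num_workers=4)
```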
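The training loop also iterates over a `test_loader`, which the article does not construct. One possible way, assuming a random 80/20 split of the same dataset (the ratio, seed, and batch size are illustrative), is `torch.utils.data.random_split`. A self-contained sketch with a `TensorDataset` standing in for `LSTMDataset`:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, random_split

# Stand-in for LSTMDataset: 100 fake texts of 300 word ids, labels of shape (1,)
full_set = TensorDataset(torch.randint(1, 500, (100, 300)), torch.randint(0, 4, (100, 1)))

n_train = int(0.8 * len(full_set))  # assumed 80/20 split
train_part, test_part = random_split(
    full_set,
    [n_train, len(full_set) - n_train],
    generator=torch.Generator().manual_seed(42),  # fixed seed: the same split on every run
)
train_loader = DataLoader(train_part, batch_size=16, shuffle=True)
test_loader = DataLoader(test_part, batch_size=16, shuffle=False)
```

Keeping `shuffle=False` for the test loader makes evaluation order deterministic; it does not change the computed accuracy.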

IV. Loss Function and Optimizer

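```python
import torch.nn as nn
import torch.optim as optim

# model and learning_rate are defined elsewhere
optimizer = optim.SGD(model.parameters(), lr=learning_rate)
loss_function = nn.CrossEntropyLoss()
```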

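`nn.CrossEntropyLoss` applies `log_softmax` internally, so it expects the raw, unnormalized output of the last linear layer (logits). Applying softmax in the model before this loss normalizes twice and distorts the loss and its gradients; apply softmax only at inference time, when class probabilities are needed.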
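A quick numerical check of this point with made-up logits: `CrossEntropyLoss` on logits equals `nll_loss(log_softmax(...))`, while feeding it softmax outputs gives a different, larger value. Because softmax outputs lie in [0, 1], with four classes the loss cannot fall below log(1 + 3/e) ≈ 0.74 even for a perfectly confident, correct prediction.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F

loss_fn = nn.CrossEntropyLoss()
logits = torch.tensor([[2.0, 0.5, -1.0, 0.0]])  # raw scores: one sample, 4 classes
target = torch.tensor([0])                      # correct class is 0

ce = loss_fn(logits, target)
manual = F.nll_loss(F.log_softmax(logits, dim=1), target)  # what CrossEntropyLoss computes
double = loss_fn(F.softmax(logits, dim=1), target)          # softmax applied before the loss

# A "perfect" softmax output [1, 0, 0, 0] still leaves a loss of log(1 + 3/e)
perfect = F.softmax(torch.tensor([[100.0, -100.0, -100.0, -100.0]]), dim=1)
floor = loss_fn(perfect, target)

print(ce.item(), manual.item(), double.item(), floor.item(), math.log(1 + 3 / math.e))
```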

V. Training

```python
import torch

# epochs, model, optimizer, loss_function, train_loader, test_loader, use_gpu are defined elsewhere
train_loss_, train_acc_ = [], []
test_loss_, test_acc_ = [], []

for epoch in range(epochs):
    # adjust_learning_rate: learning-rate schedule helper, not shown in this article
    optimizer = adjust_learning_rate(optimizer, epoch)

    ## training epoch
    model.train()
    total_acc = 0.0
    total_loss = 0.0
    total = 0.0
    for train_inputs, train_labels in train_loader:
        train_labels = train_labels.view(-1)  # (batch, 1) -> (batch,), also safe when batch == 1
        if use_gpu:
            train_inputs, train_labels = train_inputs.cuda(), train_labels.cuda()

        model.zero_grad()
        model.batch_size = len(train_labels)  # the last batch may be smaller
        model.hidden = model.init_hidden()    # fresh zero state for every batch
        output = model(train_inputs.t())      # (batch, seq_len) -> (seq_len, batch)
        loss = loss_function(output, train_labels)
        loss.backward()
        optimizer.step()

        # calc training acc
        predicted = output.argmax(dim=1)
        total_acc += (predicted == train_labels).sum().item()
        total += len(train_labels)
        total_loss += loss.item() * len(train_labels)  # loss is a batch mean; weight by batch size
    train_loss_.append(total_loss / total)
    train_acc_.append(total_acc / total)

    ## testing epoch
    model.eval()
    total_acc = 0.0
    total_loss = 0.0
    total = 0.0
    with torch.no_grad():  # no gradients needed for evaluation
        for test_inputs, test_labels in test_loader:
            test_labels = test_labels.view(-1)
            if use_gpu:
                test_inputs, test_labels = test_inputs.cuda(), test_labels.cuda()

            model.batch_size = len(test_labels)
            model.hidden = model.init_hidden()
            output = model(test_inputs.t())
            loss = loss_function(output, test_labels)

            # calc testing acc
            predicted = output.argmax(dim=1)
            total_acc += (predicted == test_labels).sum().item()
            total += len(test_labels)
            total_loss += loss.item() * len(test_labels)
    test_loss_.append(total_loss / total)
    test_acc_.append(total_acc / total)

    print('[Epoch: %3d/%3d] Training Loss: %.3f, Testing Loss: %.3f, Training Acc: %.3f, Testing Acc: %.3f'
          % (epoch, epochs, train_loss_[epoch], test_loss_[epoch], train_acc_[epoch], test_acc_[epoch]))
```
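The loop calls `adjust_learning_rate`, which the article does not define. A hypothetical step-decay version, assuming the rate is multiplied by `gamma` every `step_size` epochs (the default values are illustrative, not the author's):

```python
import torch


def adjust_learning_rate(optimizer, epoch, base_lr=0.01, gamma=0.1, step_size=10):
    """Hypothetical step decay: lr = base_lr * gamma ** (epoch // step_size)."""
    lr = base_lr * (gamma ** (epoch // step_size))
    for param_group in optimizer.param_groups:
        param_group["lr"] = lr  # update every parameter group in place
    return optimizer


# Usage on a dummy parameter
param = torch.nn.Parameter(torch.zeros(1))
optimizer = torch.optim.SGD([param], lr=0.01)
for epoch in (0, 9, 10, 25):
    optimizer = adjust_learning_rate(optimizer, epoch)
    print(epoch, optimizer.param_groups[0]["lr"])
```

PyTorch's built-in `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)` produces the same schedule when `scheduler.step()` is called once per epoch.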

VI. Saving the Model

```python
import torch

model.eval()
scripted_model = torch.jit.script(model)  # compile the model to TorchScript
scripted_model.save("lstm.pt")
```

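If the model is going to be called from Java rather than directly from Python, export it with PyTorch's `torch.jit.script`: the saved `lstm.pt` is a TorchScript file that can be loaded without the Python class definition. Note that, as written, `LSTMClassifier.forward` reshapes its input with `self.batch_size` and reads and overwrites `self.hidden`, so the exported module expects the batch size it had when it was scripted and carries the LSTM state over from one call to the next; calling `self.lstm(x)` without an initial state avoids both.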

Tutorial: https://liuzhian.github.io/2021/04/08/初识TorchScript/
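Before handing the file to a Java caller, it can help to load it back in Python and compare it with the eager model. A sketch of that round trip, using a small hypothetical stand-in for the trained classifier (same layer types, random weights, temporary file path):

```python
import os
import tempfile

import torch
import torch.nn as nn


class TinyClassifier(nn.Module):
    """Hypothetical stand-in for the trained model: embedding -> LSTM -> linear."""

    def __init__(self):
        super().__init__()
        self.emb = nn.Embedding(50, 8)
        self.lstm = nn.LSTM(8, 16)
        self.fc = nn.Linear(16, 4)

    def forward(self, sentence: torch.Tensor) -> torch.Tensor:
        out, _ = self.lstm(self.emb(sentence))  # sentence: (seq_len, batch)
        return self.fc(out[-1])                 # logits: (batch, 4)


model = TinyClassifier().eval()
path = os.path.join(tempfile.mkdtemp(), "lstm.pt")
torch.jit.script(model).save(path)

loaded = torch.jit.load(path)       # only the file is needed, not the class definition
x = torch.randint(0, 50, (300, 2))  # two padded texts of 300 word ids, already transposed
with torch.no_grad():
    probs = torch.softmax(loaded(x), dim=1)  # softmax applied only at inference time
pred = probs.argmax(dim=1)
print(pred)
```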