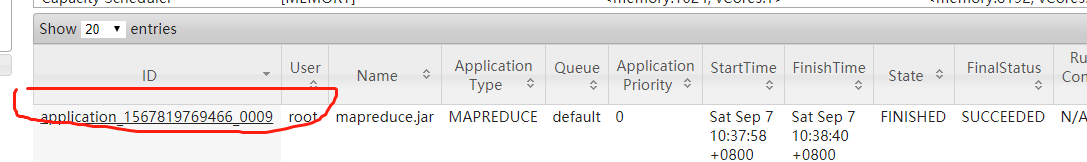

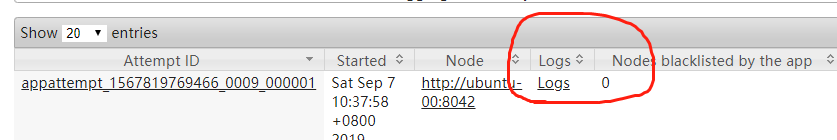

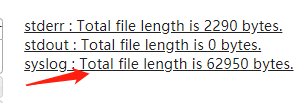

Logs can be viewed on port 8088, the YARN ResourceManager web UI (look mainly at the errors). The details are below:

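1.Keep the JDK version on Windows the same as the JDK version on Linux if you can; otherwise you are likely to run into baffling bugs.

I once hit this error: UnsupportedClassVersionError: .......

It was caused by the version mismatch (classes compiled by a newer JDK cannot be loaded by an older JVM), and it took me a long time to track down.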

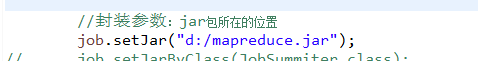
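
UnsupportedClassVersionError is thrown when a class file was compiled for a newer Java release than the JVM that loads it. A minimal sketch (standard library only; the class and method names are illustrative, not part of any Hadoop API) that reads the major version from a class-file header and checks it against what the running JVM accepts:

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;

// Illustrative helper, not part of Hadoop: inspects class-file headers.
class ClassVersionCheck {

    // Class-file layout starts with: u4 magic (0xCAFEBABE), u2 minor_version, u2 major_version.
    static int majorVersion(InputStream classFile) throws IOException {
        DataInputStream in = new DataInputStream(classFile);
        if (in.readInt() != 0xCAFEBABE) {
            throw new IOException("not a class file");
        }
        in.readUnsignedShort();        // skip minor_version
        return in.readUnsignedShort(); // major_version
    }

    // From Java 5 on, major version = release + 44 (49 -> 5, 52 -> 8, 55 -> 11).
    static int javaRelease(int major) {
        return major - 44;
    }

    // The running JVM reports the highest class-file version it accepts, e.g. "52.0" on Java 8.
    static boolean runtimeCanLoad(int major) {
        double supported = Double.parseDouble(System.getProperty("java.class.version"));
        return major <= (int) supported;
    }
}
```

For example, run `majorVersion` on a class taken from the jar built on Windows, then call `runtimeCanLoad` with that number on the Linux node; `false` means that node's JDK is too old for the jar.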

2.If you get a ClassNotFoundException, export the files under src as a jar, put the jar in the following path, and add the following code to the main method:
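
Separately from the path and main-method code mentioned above, it can save a round trip to the cluster to first confirm that the exported jar really contains the classes the tasks need. A minimal sketch using only `java.util.jar` (the helper is hypothetical, not a Hadoop API):

```java
import java.io.IOException;
import java.util.jar.JarFile;

// Hypothetical helper: checks that an exported job jar contains a class before submitting.
class JarClassCheck {

    // "com.example.MyMapper" -> "com/example/MyMapper.class" (nested classes keep their '$')
    static String entryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // Opens the jar and looks up the entry; try-with-resources closes the file.
    static boolean containsClass(String jarPath, String className) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getJarEntry(entryName(className)) != null;
        }
    }
}
```

Usage, with a hypothetical jar path and class name: `JarClassCheck.containsClass("D:/jobs/wordcount.jar", "com.example.WordCountMapper")`.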

3.If you see an error like this:

    INFO mapreduce.Job: Job job_1398669840354_0003 failed with state FAILED due to:
    Application application_1398669840354_0003 failed 2 times due to AM Container for appattempt_1398669840354_0003_000002 exited with exitCode: 1
    due to: Exception from container-launch: org.apache.hadoop.util.Shell$ExitCodeException: .......

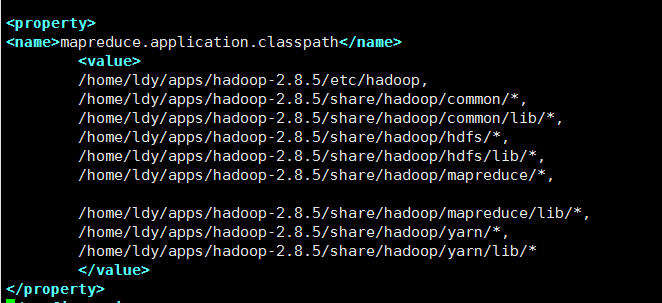

The cause is that the following properties are missing from mapred-default.xml and yarn-default.xml (append them at the end of each file):

mapred-default.xml:

    <property>
      <name>mapreduce.application.classpath</name>
      <value>
        $HADOOP_CONF_DIR,
        $HADOOP_COMMON_HOME/share/hadoop/common/*,
        $HADOOP_COMMON_HOME/share/hadoop/common/lib/*,
        $HADOOP_HDFS_HOME/share/hadoop/hdfs/*,
        $HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*,
        $HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*,
        $HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*,
        $HADOOP_YARN_HOME/share/hadoop/yarn/*,
        $HADOOP_YARN_HOME/share/hadoop/yarn/lib/*
      </value>
    </property>

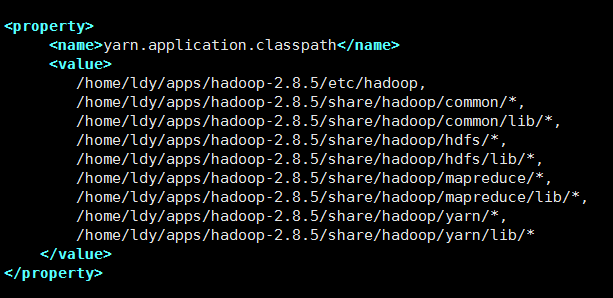

yarn-default.xml:

    <property>
      <name>yarn.application.classpath</name>
      <value>
        $HADOOP_CONF_DIR,
        $HADOOP_COMMON_HOME/share/hadoop/common/*,
        $HADOOP_COMMON_HOME/share/hadoop/common/lib/*,
        $HADOOP_HDFS_HOME/share/hadoop/hdfs/*,
        $HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*,
        $HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*,
        $HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*,
        $HADOOP_YARN_HOME/share/hadoop/yarn/*,
        $HADOOP_YARN_HOME/share/hadoop/yarn/lib/*
      </value>
    </property>

(These two files are packaged in hadoop-mapreduce-client-core-2.8.5.jar and hadoop-yarn-api-2.8.5.jar, respectively.)
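
The classpath value is a comma-separated list, and as far as I can tell its `$VARIABLE` references are resolved on the cluster node that launches the container, not on the Windows machine that submits the job. To see what each entry turns into on a given node, a minimal sketch (hypothetical helper; unknown variables are left untouched so that missing ones stand out):

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: previews how a comma-separated classpath value expands against an environment.
class ClasspathPreview {
    // Matches $NAME references such as $HADOOP_CONF_DIR
    private static final Pattern VAR = Pattern.compile("\\$(\\w+)");

    static List<String> expand(String value, Map<String, String> env) {
        List<String> entries = new ArrayList<>();
        for (String raw : value.split(",")) {
            String entry = raw.trim(); // the XML value spans several lines
            if (entry.isEmpty()) {
                continue;
            }
            Matcher m = VAR.matcher(entry);
            StringBuffer sb = new StringBuffer();
            while (m.find()) {
                String resolved = env.get(m.group(1));
                // keep "$NAME" verbatim when the variable is not defined
                m.appendReplacement(sb, Matcher.quoteReplacement(resolved != null ? resolved : m.group()));
            }
            m.appendTail(sb);
            entries.add(sb.toString());
        }
        return entries;
    }
}
```

On the Linux node, `ClasspathPreview.expand(value, System.getenv()).forEach(System.out::println)` lists each resolved entry; any line still starting with `$` points to a variable that is not set there.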

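Note: you first have to remove these two jars from the Build Path before you can modify the .xml files.

Note!!! If you launch the MapReduce job from Eclipse, modify the jars on Windows; on the Linux machine, modify Hadoop's mapred-site.xml and yarn-site.xml instead.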

mapred-site.xml:

yarn-site.xml:

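4.If you get AccessControlException: Permission denied: .........

This is most likely a user permission problem; add the following code:

    // 1. Set the user that YARN uses when processing the data
    System.setProperty("HADOOP_USER_NAME", "root");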
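
As far as I know (treat this as an assumption about Hadoop 2.x internals), with simple, non-Kerberos authentication Hadoop takes the user name from the `HADOOP_USER_NAME` environment variable first, then from the Java system property of the same name, and otherwise falls back to the OS login user. It also caches the login user, so the property should be set at the very start of `main`, before any `FileSystem` or `Job` is created. The sketch below models only that lookup order; it is not Hadoop's actual code:

```java
import java.util.Map;
import java.util.Properties;

// Simplified model (assumption), not Hadoop code: order in which the submitting user is chosen.
class HadoopUserModel {
    static final String KEY = "HADOOP_USER_NAME";

    static String resolve(Map<String, String> env, Properties sysProps, String osUser) {
        String user = env.get(KEY);          // 1. environment variable
        if (user == null) {
            user = sysProps.getProperty(KEY); // 2. JVM system property, e.g. set via System.setProperty
        }
        return user != null ? user : osUser; // 3. OS login user (the Windows account name)
    }
}
```

This is why, without the property, jobs submitted from Eclipse run as the Windows account name, which usually has no write permission on HDFS.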

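5.If you get ExitCodeException: exit code =1 : /bin/bash .........

This happens because the job is submitted from Windows while the receiving side is YARN on Linux: the container launch commands are written following Windows conventions, so they fail when the Linux node runs them.

Add the following code:

    // 4. This parameter is required for cross-platform submission
    conf.set("mapreduce.app-submission.cross-platform", "true");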
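
My understanding of what the flag changes (an assumption, not verified against every Hadoop version): without it, a Windows client writes Windows syntax such as `%JAVA_HOME%` and the `;` classpath separator straight into the container launch command, which bash cannot run; with it, the client writes neutral placeholders (`{{JAVA_HOME}}`, `<CPS>`) and the node that launches the container substitutes its own syntax. The sketch below models only that substitution step:

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified model (assumption), not Hadoop code: turns a platform-neutral
// launch command into the target node's shell syntax.
class CrossPlatformCommand {
    // Matches placeholders such as {{JAVA_HOME}}
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{\\{(\\w+)\\}\\}");

    static String toShellSyntax(String command, boolean targetIsWindows) {
        Matcher m = PLACEHOLDER.matcher(command);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String name = m.group(1);
            // cmd.exe expands %VAR%, bash expands $VAR
            String ref = targetIsWindows ? "%" + name + "%" : "$" + name;
            m.appendReplacement(out, Matcher.quoteReplacement(ref));
        }
        m.appendTail(out);
        // <CPS> stands for the classpath separator: ';' on Windows, ':' on Linux
        return out.toString().replace("<CPS>", targetIsWindows ? ";" : ":");
    }
}
```

For example, `toShellSyntax("{{JAVA_HOME}}/bin/java -cp job.jar<CPS>lib/*", false)` yields `$JAVA_HOME/bin/java -cp job.jar:lib/*`, which bash can run, while the Windows form `%JAVA_HOME%/bin/java -cp job.jar;lib/*` cannot.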

6.If you get ExitCodeException exitCode=127:

    For more detailed output, check application tracking page:http://dev-hadoop6:8088/cluster/app/application_15447
    Diagnostics: Exception from container-launch.
    Container id: container_1544766080243_0018_02_000001
    Exit code: 127
    Stack trace: ExitCodeException exitCode=127:
        at org.apache.hadoop.util.Shell.runCommand(Shell.java:585)
        at org.apache.hadoop.util.Shell.run(Shell.java:482)
        at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:776)
        at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerE
        at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLa
        at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLa
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    Container exited with a non-zero exit code 127
    Failing this attempt. Failing the application.
    18/12/14 17:48:56 INFO mapreduce.Job: Counters: 0
    18/12/14 17:48:56 WARN mapreduce.HdfsMapReduce: Path /mapred-temp/job-user-analysis-statistic/01/top_in is not
    java.io.IOException: Set input path failed !
        at com.tracker.offline.common.mapreduce.HdfsMapReduce.setInputPath(HdfsMapReduce.java:165)
        at com.tracker.offline.common.mapreduce.HdfsMapReduce.buildJob(HdfsMapReduce.java:122)
        at com.tracker.offline.common.mapreduce.HdfsMapReduce.waitForCompletion(HdfsMapReduce.java:65)
        at com.tracker.offline.business.job.user.JobAnalysisStatisticMR.main(JobAnalysisStatisticMR.java:88)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

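This error comes from a wrong setting in yarn-env.sh: exit code 127 is the shell's "command not found" status, meaning the container launch script could not find `java`.

Setting JAVA_HOME correctly in yarn-env.sh fixes it.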
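
Before editing yarn-env.sh, it is worth confirming that the JAVA_HOME value you plan to write really contains an executable `bin/java` on the Linux node. A minimal standard-library sketch (the helper name is hypothetical):

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: sanity-checks a JAVA_HOME value before it goes into yarn-env.sh.
class JavaHomeCheck {
    static boolean looksValid(String javaHome) {
        if (javaHome == null || javaHome.trim().isEmpty()) {
            return false; // with JAVA_HOME unset, "$JAVA_HOME/bin/java" becomes "/bin/java"
        }
        Path java = Paths.get(javaHome.trim(), "bin", "java");
        // bash exits with 127 when the command it is asked to run cannot be found
        return Files.isRegularFile(java) && Files.isExecutable(java);
    }
}
```

On the node, `JavaHomeCheck.looksValid(System.getenv("JAVA_HOME"))` checks the current environment; pass the exact path from yarn-env.sh to check that file's value instead.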