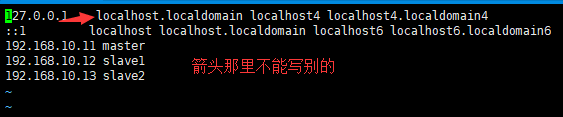

首先必须配置SSH免密码登陆

1.启动你的hadoop集群最少三台电脑 启动路径注意你的静态IP对应

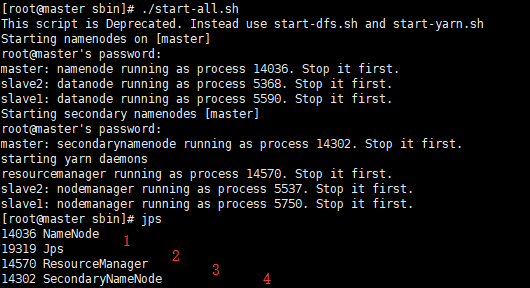

四个进程缺一不可执行

2.进入目录文件

[root@master /]# cd home

创建hduser和file文件

[root@master home]# mkdir hduser

[root@master home]# mkdir file

在file文件下创建两个文本

[root@master file]# echo "hello 1" > f1.txt

[root@master file]# echo "hello 2" > f2.txt

进入hadoop目录

[root@master /]# cd bigData

bash: cd: bigData: 没有那个文件或目录

[root@master /]# cd /usr/local

[root@master local]# cd hadoop-2.8.0/

[root@master hadoop-2.8.0]# ll

总用量 136

drwxr-xr-x. 2 502 dialout 4096 3月 17 2017 bin

drwxr-xr-x. 3 502 dialout 19 3月 17 2017 etc

drwxr-xr-x. 3 root root 17 3月 4 22:44 hdfs

drwxr-xr-x. 2 502 dialout 101 3月 17 2017 include

drwxr-xr-x. 3 502 dialout 19 3月 17 2017 lib

drwxr-xr-x. 2 502 dialout 4096 3月 17 2017 libexec

-rw-r--r--. 1 502 dialout 99253 3月 17 2017 LICENSE.txt

drwxr-xr-x. 2 root root 4096 3月 18 11:55 logs

-rw-r--r--. 1 502 dialout 15915 3月 17 2017 NOTICE.txt

-rw-r--r--. 1 502 dialout 1366 3月 17 2017 README.txt

drwxr-xr-x. 2 502 dialout 4096 3月 17 2017 sbin

drwxr-xr-x. 4 502 dialout 29 3月 17 2017 share

drwxr-xr-x. 3 root root 16 3月 4 22:46 tmp

[root@master hadoop-2.8.0]# cd share

[root@master share]# ll

总用量 0

drwxr-xr-x. 3 502 dialout 19 3月 17 2017 doc

drwxr-xr-x. 9 502 dialout 92 3月 17 2017 hadoop

[root@master share]# cd hadoop/

[root@master hadoop]#

启动Hadoop之后就自动启动了HDFS,创建 HDFS目录/input

[root@master hadoop]#hadoop fs -mkdir /input 创建在根目录下

将f1.txt, f2.txt保存到HDFS中 put上去

[root@master hadoop]# hadoop fs -put home/hduser/file/f*.txt /input/

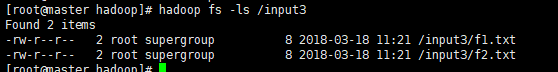

查看HDFS上是否存在 f1.txt f2.txt;

[root@master hadoop]# hadoop fs -ls /input

通过 “hadoop jar xxx.jar” 来执行WordCount程序 进入安装目录 hadoop

[root@master hadoop]# cd mapreduce/

进入mapreduce的目录执行如下命令

hadoop jar hadoop-mapreduce-examples-2.8.0.jar wordcount /input3 /output

[root@master hadoop]# cd mapreduce/

[root@master mapreduce]# hadoop jar hadoop-mapreduce-examples-2.8.0.jar wordcount /input3 /output

18/03/18 11:56:02 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.10.11:8032

18/03/18 11:56:04 INFO input.FileInputFormat: Total input files to process : 2

18/03/18 11:56:05 INFO mapreduce.JobSubmitter: number of splits:2

18/03/18 11:56:05 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1521345320410_0001

18/03/18 11:56:07 INFO impl.YarnClientImpl: Submitted application application_1521345320410_0001

18/03/18 11:56:07 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1521345320410_0001/

18/03/18 11:56:07 INFO mapreduce.Job: Running job: job_1521345320410_0001

18/03/18 11:56:26 INFO mapreduce.Job: Job job_1521345320410_0001 running in uber mode : false

18/03/18 11:56:26 INFO mapreduce.Job: map 0% reduce 0%

18/03/18 11:56:49 INFO mapreduce.Job: map 100% reduce 0%

18/03/18 11:57:01 INFO mapreduce.Job: map 100% reduce 100%

18/03/18 11:57:03 INFO mapreduce.Job: Job job_1521345320410_0001 completed successfully

18/03/18 11:57:03 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=46

FILE: Number of bytes written=408373

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=210

HDFS: Number of bytes written=16

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=37460

Total time spent by all reduces in occupied slots (ms)=10166

Total time spent by all map tasks (ms)=37460

Total time spent by all reduce tasks (ms)=10166

Total vcore-milliseconds taken by all map tasks=37460

Total vcore-milliseconds taken by all reduce tasks=10166

Total megabyte-milliseconds taken by all map tasks=38359040

Total megabyte-milliseconds taken by all reduce tasks=10409984

Map-Reduce Framework

Map input records=2

Map output records=4

Map output bytes=32

Map output materialized bytes=52

Input split bytes=194

Combine input records=4

Combine output records=4

Reduce input groups=3

Reduce shuffle bytes=52

Reduce input records=4

Reduce output records=3

Spilled Records=8

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=458

CPU time spent (ms)=2110

Physical memory (bytes) snapshot=464822272

Virtual memory (bytes) snapshot=6236811264

Total committed heap usage (bytes)=260870144

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=16

File Output Format Counters

Bytes Written=16

表示成功

使用如下命令来查看输出目录中所有结果

[root@master hadoop]# hadoop fs -cat /output/*

[root@master hadoop]# hadoop fs -cat /output/*

f 1

hello 2

j 1

[root@master hadoop]#

至此完毕

配置hadooop环境变量 http://blog.csdn.net/kokjuis/article/details/53537029