1. Summary of Structured Streaming state configuration options

| Config Name | Description | Default Value | |

| spark.sql.streaming.stateStore.rocksdb.compactOnCommit | Whether we perform a range compaction of RocksDB instance for commit operation | False | |

| spark.sql.streaming.stateStore.rocksdb.blockSizeKB | Approximate size in KB of user data packed per block for a RocksDB BlockBasedTable, which is a RocksDB's default SST file format. | 4 | |

| spark.sql.streaming.stateStore.rocksdb.blockCacheSizeMB | The size capacity in MB for a cache of blocks. |

|

|

| spark.sql.streaming.stateStore.rocksdb.lockAcquireTimeoutMs | The waiting time in millisecond for acquiring lock in the load operation for RocksDB instance. | 60000 | |

| spark.sql.streaming.stateStore.rocksdb.resetStatsOnLoad | Whether we resets all ticker and histogram stats for RocksDB on load. | True | |

| spark.sql.streaming.stateStore.providerClass |

The class used to manage state data in stateful streaming queries. This class must |

org.apache.spark.sql.execution.streaming. state.HDFSBackedStateStoreProvider |

|

| spark.sql.streaming.stateStore.stateSchemaCheck |

When true, Spark will validate the state schema against schema on existing state and |

true | |

| spark.sql.streaming.stateStore.minDeltasForSnapshot | Minimum number of state store delta files that needs to be generated before they consolidated into snapshots. | 10 | |

| spark.sql.streaming.stateStore.formatValidation.enabled |

When true, check if the data from state store is valid or not when running streaming |

true | |

| spark.sql.streaming.stateStore.maintenanceInterval |

The interval in milliseconds between triggering maintenance tasks in StateStore. |

TimeUnit.MINUTES.toMillis(1) | |

| spark.sql.streaming.stateStore.compression.codec |

The codec used to compress delta and snapshot files generated by StateStore. |

lz4 | |

| spark.sql.streaming.stateStore.rocksdb.formatVersion |

Set the RocksDB format version. This will be stored in the checkpoint when starting |

5 | |

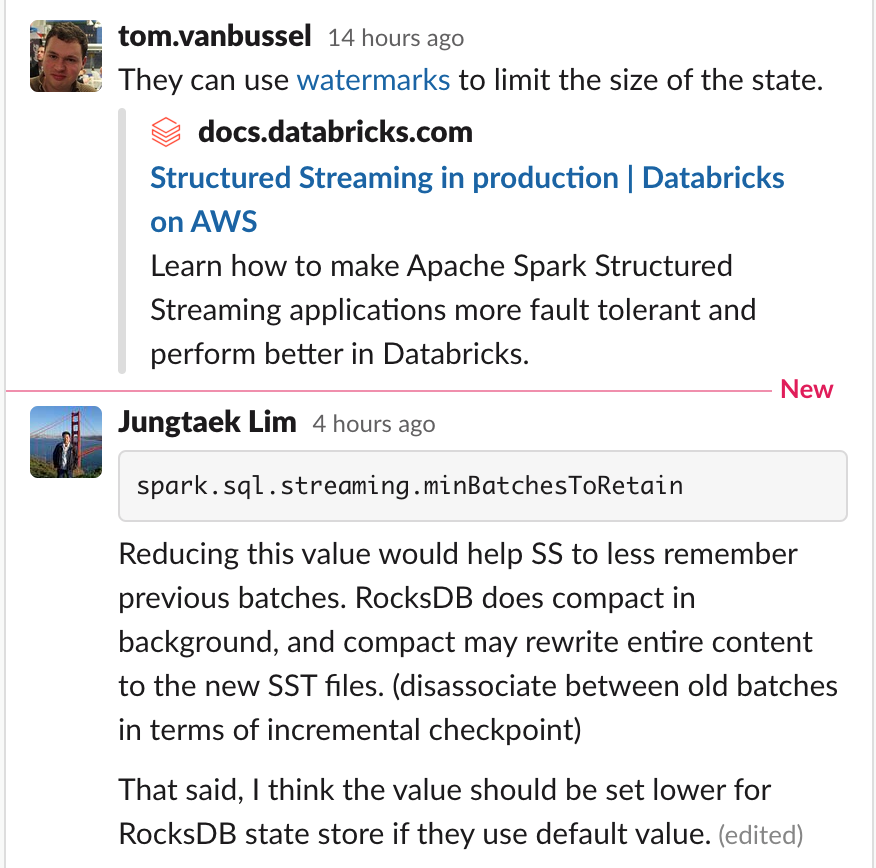

spark.sql.streaming.minBatchesToRetain |

he minimum number of batches that must be retained and made recoverable. | 100 |

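Usage recommendations:

- The value of spark.sql.streaming.minBatchesToRetain strongly affects how much space the state occupies, because it sets how many batches must be retained and stay recoverable.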
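As a rough illustration of how the options in the table might be applied, the sketch below sets a few of them while building a session. The application name, the local master, and the chosen values are assumptions made for this example rather than recommendations, and the code assumes a Spark 3.x dependency on the classpath.

```scala
import org.apache.spark.sql.SparkSession

object StateStoreConfExample {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("state-store-conf-example") // hypothetical application name
      .master("local[2]")                  // assumption: local run for experimentation
      // Retain fewer batches than the default of 100 to limit state storage.
      .config("spark.sql.streaming.minBatchesToRetain", "20")
      // Number of delta files generated before they are consolidated into a snapshot.
      .config("spark.sql.streaming.stateStore.minDeltasForSnapshot", "10")
      // Codec for delta and snapshot files written by the state store.
      .config("spark.sql.streaming.stateStore.compression.codec", "lz4")
      .getOrCreate()

    // Read a value back to confirm what the session actually uses.
    println(spark.conf.get("spark.sql.streaming.minBatchesToRetain"))

    spark.stop()
  }
}
```

Lowering minBatchesToRetain trades recoverability of older batches for storage, so the value is best chosen against how far back a restart may need to go.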

Timeouts and State

One thing to note is that because we manage the state of the group based on user-defined concepts, as expressed above for the use-cases, the semantics of watermark (expiring or discarding an event) may not always apply here. Instead, we have to specify an appropriate timeout ourselves. Timeout dictates how long we should wait before timing out some intermediate state.

Timeouts can either be based on processing time (GroupStateTimeout.ProcessingTimeTimeout) or event time (GroupStateTimeout.EventTimeTimeout). When using timeouts, you can check for timeout first before processing the values by checking the flag state.hasTimedOut.

To set a processing-time timeout, use the GroupState.setTimeoutDuration(...) method. The timeout then comes with the following guarantees:

- Timeout will never occur before the clock has advanced X ms specified in the method

- Timeout will eventually occur when there is a trigger in the query, after X ms

To set an event-time timeout, use GroupState.setTimeoutTimestamp(...). Only timeouts based on event time require you to specify a watermark. All events in the group older than the watermark are then filtered out, and the timeout occurs once the watermark has advanced beyond the set timestamp.

When a timeout occurs, the function supplied in the streaming query is invoked with three arguments: the key by which you keep the state, an iterator over the input rows, and the old state. The example with mapGroupsWithState below defines a number of functional classes and objects used.

- 1) GroupState.setTimeoutDuration applies only to ProcessingTimeTimeout, not to EventTimeTimeout.

- 2) A watermark is normally used with EventTimeTimeout, but setting a watermark together with ProcessingTimeTimeout does not raise an error.
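The timeout handling described above can be sketched with mapGroupsWithState as follows. This is a minimal sketch under stated assumptions, not the original author's example: the Event and UserTotal case classes, the rate source, the 10-minute duration, and the console sink are all hypothetical choices for illustration, and Spark 3.x is assumed on the classpath.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.streaming.{GroupState, GroupStateTimeout}

// Hypothetical input and output types, introduced only for this sketch.
case class Event(user: String, value: Long)
case class UserTotal(user: String, total: Long, expired: Boolean)

object TimeoutSketch {
  // Called per key when new data arrives, and with an empty iterator when the key times out.
  def updateTotal(user: String, events: Iterator[Event], state: GroupState[Long]): UserTotal = {
    if (state.hasTimedOut) {
      // Check the timeout flag first, then drop the state for this key.
      val last = state.get
      state.remove()
      UserTotal(user, last, expired = true)
    } else {
      val total = state.getOption.getOrElse(0L) + events.map(_.value).sum
      state.update(total)
      // Only valid with ProcessingTimeTimeout; the duration is an arbitrary example value.
      state.setTimeoutDuration("10 minutes")
      UserTotal(user, total, expired = false)
    }
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("timeout-sketch").master("local[2]").getOrCreate()
    import spark.implicits._

    // The built-in rate source stands in for a real stream; it emits (timestamp, value) rows.
    val events = spark.readStream.format("rate").load()
      .select(($"value" % 10).cast("string").as("user"), $"value")
      .as[Event]

    val totals = events
      .groupByKey(_.user)
      .mapGroupsWithState(GroupStateTimeout.ProcessingTimeTimeout)(updateTotal _)

    // mapGroupsWithState queries run in update output mode.
    totals.writeStream.outputMode("update").format("console").start().awaitTermination()
  }
}
```

For an event-time variant, the timeout configuration would be GroupStateTimeout.EventTimeTimeout, the stream would need a watermark, and the function would call setTimeoutTimestamp instead of setTimeoutDuration.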

Author: Zhang Yongqing (张永清). When reposting, please credit the source: cnblogs (博客园), https://www.cnblogs.com/laoqing/p/15602940.html

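2. In Spark 3.0, the Proleptic Gregorian calendar is used for parsing, formatting, and converting dates and timestamps, as well as for extracting sub-components such as years and days. Spark 3.0 uses the Java 8 API classes from the java.time packages, which are based on the ISO chronology. In Spark 2.4 and below, these operations are performed using the hybrid calendar (Julian + Gregorian). The changes affect results for dates before October 15, 1582 (Gregorian) and affect the following Spark 3.0 API: parsing/formatting of timestamp/date strings. This affects CSV/JSON data sources and the unix_timestamp, date_format, to_unix_timestamp, from_unixtime, to_date, and to_timestamp functions when user-specified patterns are used for parsing and formatting. In Spark 3.0, we define our own pattern strings in Datetime Patterns for Formatting and Parsing, which is implemented via DateTimeFormatter under the hood. The new implementation performs strict checking of its input. For example, the 2015-07-22 10:00:00 timestamp cannot be parsed if the pattern is yyyy-MM-dd, because the parser does not consume the whole input. Another example: the 31/01/2015 00:00 input cannot be parsed by the dd/MM/yyyy hh:mm pattern, because hh expects hours in the range 1-12. In Spark 2.4 and below, java.text.SimpleDateFormat is used for timestamp/date string conversions, and the supported patterns are described in SimpleDateFormat. The old behavior can be restored by setting spark.sql.legacy.timeParserPolicy to LEGACY.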
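The difference in strictness between the two JDK parsers named above can be seen without Spark. The sketch below uses only java.time and java.text; it illustrates the underlying JDK behavior and is not Spark's own formatter code. The object and method names are hypothetical.

```scala
import java.text.SimpleDateFormat
import java.time.LocalDate
import java.time.format.DateTimeFormatter
import scala.util.Try

object StrictParsingSketch {
  // java.time: parsing fails unless the pattern consumes the whole input.
  def parsesWithJavaTime(text: String, pattern: String): Boolean =
    Try(LocalDate.parse(text, DateTimeFormatter.ofPattern(pattern))).isSuccess

  // SimpleDateFormat.parse(String) may stop before the end of the input.
  def parsesWithSimpleDateFormat(text: String, pattern: String): Boolean =
    Try(new SimpleDateFormat(pattern).parse(text)).isSuccess

  def main(args: Array[String]): Unit = {
    val input = "2015-07-22 10:00:00"
    println(parsesWithJavaTime(input, "yyyy-MM-dd"))         // strict parser rejects trailing text
    println(parsesWithSimpleDateFormat(input, "yyyy-MM-dd")) // lenient parser ignores trailing text
  }
}
```

Jobs that relied on the lenient behavior are the ones most likely to need either a corrected pattern or the LEGACY policy after upgrading.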

| Config Name | Description | Default Value |
| --- | --- | --- |
| spark.sql.legacy.timeParserPolicy | When LEGACY, java.text.SimpleDateFormat is used for formatting and parsing … | |
| spark.sql.session.timeZone | The ID of the session local timezone, in the format of either region-based zone IDs or zone offsets. Region IDs must have the form 'area/city', such as 'America/Los_Angeles'. Zone offsets must be in the format '(+\|-)HH', '(+\|-)HH:mm' or '(+\|-)HH:mm:ss', e.g. '-08', '+01:00' or '-13:33:33'. 'UTC' and 'Z' are also supported as aliases of '+00:00'. Other short names are not recommended because they can be ambiguous. | |
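Both options in the table are runtime SQL configurations, so they can be changed on an existing session. The following is a small sketch assuming Spark 3.x on the classpath; the application name, the local master, and the sample query are illustrative assumptions.

```scala
import org.apache.spark.sql.SparkSession

object TimeConfExample {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("time-conf-example") // hypothetical application name
      .master("local[1]")           // assumption: local run for experimentation
      .getOrCreate()

    // Fall back to the java.text.SimpleDateFormat-based parsing and formatting.
    spark.conf.set("spark.sql.legacy.timeParserPolicy", "LEGACY")
    // Region-based zone ID in the 'area/city' form.
    spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

    println(spark.conf.get("spark.sql.legacy.timeParserPolicy"))
    println(spark.conf.get("spark.sql.session.timeZone"))

    // With the LEGACY policy the pattern is expected to tolerate the trailing time part.
    spark.sql("SELECT to_date('2015-07-22 10:00:00', 'yyyy-MM-dd') AS d").show()

    spark.stop()
  }
}
```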


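3. Other configurations:

- spark.sql.files.ignoreMissingFiles: Whether to ignore missing files. If true, the Spark jobs will continue to run when encountering missing files and the contents that have been read will still be returned. This configuration is effective only when using file-based sources such as Parquet, JSON and ORC. For example, if the error "A file referenced in the transaction log cannot be found." occurs (https://docs.microsoft.com/en-us/azure/databricks/kb/delta/file-transaction-log-not-found), set the Spark property spark.sql.files.ignoreMissingFiles to true in the cluster's Spark Config.

- Since Spark 2.4, Spark evaluates the set operations referenced in a query following the precedence rule of the SQL standard. If the order is not specified by parentheses, set operations are performed from left to right, except that all INTERSECT operations are performed before any UNION, EXCEPT or MINUS operations. The old behavior of giving equal precedence to all set operations is preserved under the newly added configuration spark.sql.legacy.setopsPrecedence.enabled, whose default value is false. When this property is set to true, Spark evaluates the set operators from left to right as they appear in the query, given that no explicit ordering is enforced by parentheses.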

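- Since Spark 2.4, Spark maximizes the use of the vectorized ORC reader for ORC files by default. To do that, spark.sql.orc.impl and spark.sql.orc.filterPushdown change their default values to native and true respectively. ORC files created by the native ORC writer cannot be read by some old Apache Hive releases. Use spark.sql.orc.impl=hive to create files that can be shared with Hive 2.1.1 and older.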

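- Since Spark 2.4, when comparing a DATE type with a TIMESTAMP type, Spark promotes both sides to TIMESTAMP before comparing. Set spark.sql.legacy.compareDateTimestampInTimestamp to false to restore the previous behavior. This option will be removed in Spark 3.0.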

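- Since Spark 2.4, creating a managed table with a nonempty location is not allowed. An exception is thrown when attempting to create a managed table with a nonempty location. Set spark.sql.legacy.allowCreatingManagedTableUsingNonemptyLocation to true to restore the previous behavior. This option will be removed in Spark 3.0.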

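- Since Spark 2.0, Spark converts Parquet Hive tables by default for better performance. Since Spark 2.4, Spark converts ORC Hive tables by default as well. This means Spark uses its own ORC support by default instead of the Hive SerDe. For example, CREATE TABLE t(id int) STORED AS ORC is handled with the Hive SerDe in Spark 2.3, whereas in Spark 2.4 it is converted into Spark's ORC data source table and ORC vectorization is applied. Set spark.sql.hive.convertMetastoreOrc=false to restore the previous behavior.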

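- In version 2.3 and earlier, a CSV row is considered malformed if at least one column value in the row is malformed. The CSV parser drops such rows in DROPMALFORMED mode or outputs an error in FAILFAST mode. Since Spark 2.4, a CSV row is considered malformed only when it contains malformed column values requested from the CSV data source; other values can be ignored. For example, a CSV file contains the "id,name" header and one row "1234". In Spark 2.4, selecting the id column yields one row with the column value 1234, but in Spark 2.3 and earlier the result is empty in DROPMALFORMED mode. To restore the previous behavior, set spark.sql.csv.parser.columnPruning.enabled to false.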

- Since Spark 2.4, file listing for computing statistics is done in parallel by default. This can be disabled by setting spark.sql.statistics.parallelFileListingInStatsComputation.enabled to False.

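- In Spark 2.3 and earlier, HAVING without GROUP BY is treated as WHERE. This means SELECT 1 FROM range(10) HAVING true is executed as SELECT 1 FROM range(10) WHERE true and returns 10 rows. This violates the SQL standard and has been fixed in Spark 2.4. Since Spark 2.4, HAVING without GROUP BY is treated as a global aggregate, which means SELECT 1 FROM range(10) HAVING true returns only one row. To restore the previous behavior, set spark.sql.legacy.parser.havingWithoutGroupByAsWhere to true.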

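- In version 2.3 and earlier, when reading from a Parquet data source table, Spark always returns null for any column whose names in the Hive metastore schema and the Parquet schema differ in letter case, regardless of whether spark.sql.caseSensitive is set to true or false. Since 2.4, when spark.sql.caseSensitive is set to false, Spark performs case-insensitive column name resolution between the Hive metastore schema and the Parquet schema, so Spark returns the corresponding column values even if the column names differ in letter case. An exception is thrown if there is ambiguity, i.e. more than one Parquet column is matched. This change also applies to Parquet Hive tables when spark.sql.hive.convertMetastoreParquet is set to true.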

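- Since Spark 2.4.5, the TRUNCATE TABLE command tries to set back the original permissions and ACLs when re-creating the table/partition paths. To restore the behavior of earlier versions, set spark.sql.truncateTable.ignorePermissionAcl.enabled to true.

- Since Spark 2.4.5, the spark.sql.legacy.mssqlserver.numericMapping.enabled configuration is added to support the legacy MsSQLServer dialect mapping behavior, which uses IntegerType and DoubleType for the SMALLINT and REAL JDBC types respectively. To restore the behavior of 2.4.3 and earlier versions, set spark.sql.legacy.mssqlserver.numericMapping.enabled to true.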

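- In Spark 2.4 and below, Dataset.groupByKey results in a grouped dataset whose key attribute is wrongly named "value" if the key is of a non-struct type, for example int, string or array. This is counterintuitive and makes the schema of aggregation queries unexpected. For example, the schema of ds.groupByKey(...).count() is (value, count). Since Spark 3.0, the grouping attribute is named "key". The old behavior is preserved under the newly added configuration spark.sql.legacy.dataset.nameNonStructGroupingKeyAsValue, whose default value is false.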

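- In Spark 3.0, when inserting a value into a table column with a different data type, type coercion is performed as per the ANSI SQL standard. Certain unreasonable type conversions, such as converting string to int and double to boolean, are disallowed. A runtime exception is thrown if the value is out of range for the data type of the column. In Spark 2.4 and below, type conversions during table insertion are allowed as long as they are a valid Cast. When inserting an out-of-range value into an integral field, the low-order bits of the value are inserted (the same as Java/Scala numeric type casting). For example, if 257 is inserted into a field of byte type, the result is 1. The behavior is controlled by the option spark.sql.storeAssignmentPolicy, whose default value is "ANSI". Setting the option to "Legacy" restores the previous behavior.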

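- In Spark 2.4 and below, the SET command works without any warning even if the specified key is a SparkConf entry, and it has no effect because the command does not update SparkConf; this behavior may confuse users. In 3.0, the command fails if a SparkConf key is used. You can disable this check by setting spark.sql.legacy.setCommandRejectsSparkCoreConfs to false.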

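- In Spark 3.0, certain properties become reserved; commands fail if you specify reserved properties in places such as CREATE DATABASE ... WITH DBPROPERTIES and ALTER TABLE ... SET TBLPROPERTIES. You need to use their specific clauses to specify them, for example, CREATE DATABASE test COMMENT 'any comment' LOCATION 'some path'. You can set spark.sql.legacy.notReserveProperties to true to ignore the ParseException, in which case these properties are silently removed; for example, SET DBPROPERTIES('location'='/tmp') has no effect. In Spark 2.4 and below, these properties are neither reserved nor have side effects; for example, SET DBPROPERTIES('location'='/tmp') does not change the location of the database but only creates a meaningless property, just like 'a'='b'.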

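- In Spark 3.0, you can also use ADD FILE to add file directories. Earlier, you could add only single files with this command. To restore the behavior of earlier versions, set spark.sql.legacy.addSingleFileInAddFile to true.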

- In Spark 3.0, an analysis exception is thrown when hash expressions are applied to elements of MapType. To restore the behavior before Spark 3.0, set spark.sql.legacy.allowHashOnMapType to true.

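- In Spark 3.0, when the array/map function is called without any parameters, it returns an empty collection with NullType as the element type. In Spark 2.4 and below, it returns an empty collection with StringType as the element type. To restore the behavior before Spark 3.0, you can set spark.sql.legacy.createEmptyCollectionUsingStringType to true.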

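- In Spark 2.4 and below, you can create a map with duplicated keys via built-in functions such as CreateMap and StringToMap. The behavior of a map with duplicated keys is undefined; for example, a map lookup picks the first occurrence of the duplicated key, Dataset.collect keeps only the last occurrence, MapKeys returns the duplicated keys, and so on. In Spark 3.0, Spark throws a RuntimeException when duplicated keys are found. You can set spark.sql.mapKeyDedupPolicy to LAST_WIN to deduplicate map keys with the last-wins policy. Users may still read map values with duplicated keys from data sources that do not enforce uniqueness (for example, Parquet); the behavior is then undefined.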

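- In Spark 3.0, using org.apache.spark.sql.functions.udf(AnyRef, DataType) is not allowed by default. It is recommended to remove the return type parameter to switch automatically to a typed Scala udf, or to set spark.sql.legacy.allowUntypedScalaUDF to true to keep using it. In Spark 2.4 and below, if org.apache.spark.sql.functions.udf(AnyRef, DataType) gets a Scala closure with a primitive-type argument, the returned UDF returns null if the input value is null. In Spark 3.0, however, the UDF returns the default value of the Java type if the input value is null. For example, with val f = udf((x: Int) => x, IntegerType), f($"x") returns null in Spark 2.4 and below if column x is null, and returns 0 in Spark 3.0. This behavior change is introduced because Spark 3.0 is built with Scala 2.12 by default.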

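- In Spark 3.0, the higher-order function exists follows three-valued boolean logic: if the predicate returns any nulls and no true is obtained, exists returns null instead of false. For example, exists(array(1, null, 3), x -> x % 2 == 0) is null. The previous behavior can be restored by setting spark.sql.legacy.followThreeValuedLogicInArrayExists to false.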

- In Spark 3.0, numbers written in scientific notation (for example, 1E2) are parsed as Double. In Spark 2.4 and below, they are parsed as Decimal. To restore the behavior before Spark 3.0, you can set spark.sql.legacy.exponentLiteralAsDecimal.enabled to true.

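- In Spark 3.0, day-time interval strings are converted to intervals with respect to the from and to bounds. If an input string does not match the pattern defined by the specified bounds, a ParseException is thrown. For example, interval '2 10:20' hour to minute raises the exception because the expected format is [+|-]h[h]:[m]m. In Spark 2.4, the from bound was not taken into account, and the to bound was used to truncate the resulting interval. For instance, the day-time interval string from the example above is converted to interval 10 hours 20 minutes. To restore the behavior before Spark 3.0, you can set spark.sql.legacy.fromDayTimeString.enabled to true.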

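- In Spark 3.0, a negative scale of decimal is not allowed by default; for example, the data type of a literal like 1E10BD is DecimalType(11, 0). In Spark 2.4 and below, it was DecimalType(2, -9). To restore the behavior before Spark 3.0, you can set spark.sql.legacy.allowNegativeScaleOfDecimal to true.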

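- In Spark 3.0, a Dataset query fails if it contains an ambiguous column reference caused by a self join. A typical example: val df1 = ...; val df2 = df1.filter(...); then df1.join(df2, df1("a") > df2("a")) returns an empty result, which is quite confusing. This is because Spark cannot resolve Dataset column references that point to tables being self joined, and df1("a") is exactly the same as df2("a") in Spark. To restore the behavior before Spark 3.0, you can set spark.sql.analyzer.failAmbiguousSelfJoin to false.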

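- In Spark 3.0, spark.sql.legacy.ctePrecedencePolicy is introduced to control the behavior for name conflicts in nested WITH clauses. With the default value EXCEPTION, Spark throws an AnalysisException, which forces users to choose the specific substitution order they want. If set to CORRECTED (which is recommended), inner CTE definitions take precedence over outer definitions. For example, with the config set to CORRECTED, WITH t AS (SELECT 1), t2 AS (WITH t AS (SELECT 2) SELECT * FROM t) SELECT * FROM t2 returns 2, while with LEGACY the result is 1, which is the behavior in version 2.4 and below.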

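- In Spark 3.0, the configuration spark.sql.crossJoin.enabled becomes an internal configuration and is true by default, so by default Spark does not raise an exception on SQL with an implicit cross join.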

- In Spark 3.0, Spark casts String to Date/Timestamp in binary comparisons with dates/timestamps. The previous behavior of casting Date/Timestamp to String can be restored by setting spark.sql.legacy.typeCoercion.datetimeToString.enabled to true.

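- In Spark 3.0, special values are supported in conversions from strings to dates and timestamps. These values are simply notational shorthands that are converted to ordinary date or timestamp values when read. The following string values are supported for dates:
  - epoch [zoneId] - 1970-01-01
  - today [zoneId] - the current date in the time zone specified by spark.sql.session.timeZone
  - yesterday [zoneId] - the current date - 1
  - tomorrow [zoneId] - the current date + 1
  - now - the date of running the current query; it has the same notion as today

  For example, SELECT date 'tomorrow' - date 'yesterday'; should output 2. The special timestamp values are:
  - epoch [zoneId] - 1970-01-01 00:00:00+00 (Unix system time zero)
  - today [zoneId] - midnight today
  - yesterday [zoneId] - midnight yesterday
  - tomorrow [zoneId] - midnight tomorrow
  - now - current query start time

  For example, SELECT timestamp 'tomorrow';.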

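- In Spark 2.4 and below, when reading a Hive SerDe table with Spark native data sources (parquet/orc), Spark infers the actual file schema and updates the table schema in the metastore. In Spark 3.0, Spark no longer infers the schema. This should not cause any problems for end users, but if it does, set spark.sql.hive.caseSensitiveInferenceMode to INFER_AND_SAVE.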

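- In Spark 2.4 and below, a partition column value is converted to null if it cannot be cast to the corresponding user-provided schema. In 3.0, partition column values are validated against the user-provided schema, and an exception is thrown if the validation fails. You can disable this validation by setting spark.sql.sources.validatePartitionColumns to false.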

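- In Spark 3.0, if files or subdirectories disappear during recursive directory listing (that is, they appear in an intermediate listing but then cannot be read or listed during later phases of the recursive directory listing, due to either concurrent file deletions or object store consistency issues), the listing fails with an exception unless spark.sql.files.ignoreMissingFiles is true (default false). In previous versions, these missing files or subdirectories would be ignored. Note that this change of behavior applies only during the initial table file listing (or during REFRESH TABLE), not during query execution: the net change is that spark.sql.files.ignoreMissingFiles is now obeyed during table file listing / query planning, not only at query execution time.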

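- In Spark 2.4 and below, the parser of the JSON data source treats empty strings as null for some data types such as IntegerType. For FloatType, DoubleType, DateType and TimestampType, it fails on empty strings and throws exceptions. Spark 3.0 disallows empty strings and throws an exception for all data types except StringType and BinaryType. The previous behavior of allowing an empty string can be restored by setting spark.sql.legacy.json.allowEmptyString.enabled to true.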

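- In Spark 2.4, when a Spark session is created via cloneSession(), the newly created Spark session inherits its configuration from its parent SparkContext, even though the same configuration may exist with a different value in its parent Spark session. In Spark 3.0, the configurations of the parent SparkSession take precedence over those of the parent SparkContext. You can restore the old behavior by setting spark.sql.legacy.sessionInitWithConfigDefaults to true.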

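- In Spark 3.0, the built-in Hive is upgraded from 1.2 to 2.3, which has the following impacts:
  - You may need to set spark.sql.hive.metastore.version and spark.sql.hive.metastore.jars according to the version of the Hive metastore you want to connect to. For example, if your Hive metastore version is 1.2.1, set spark.sql.hive.metastore.version to 1.2.1 and spark.sql.hive.metastore.jars to maven.
  - You need to migrate your custom SerDes to Hive 2.3 or build your own Spark with the hive-1.2 profile. See HIVE-15167 for more details.
  - The decimal string representation can differ between Hive 1.2 and Hive 2.3 when the TRANSFORM operator is used in SQL for script transformation. In Hive 1.2, the string representation omits trailing zeroes. In Hive 2.3, it is always padded to 18 digits with trailing zeroes if necessary.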

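- In Spark 3.0, the JSON data source and the JSON function schema_of_json infer TimestampType from string values if they match the pattern defined by the JSON option timestampFormat. Since version 3.0.1, timestamp type inference is disabled by default. Set the JSON option inferTimestamp to true to enable such type inference.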

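- In Spark 3.1, statistical aggregate functions, including std, stddev, stddev_samp, variance, var_samp, skewness, kurtosis, covar_samp and corr, return NULL instead of Double.NaN when a division by zero occurs during expression evaluation, for example when stddev_samp is applied to a single-element set. In Spark 3.0 and earlier, Double.NaN is returned in such cases. To restore the behavior before Spark 3.1, you can set spark.sql.legacy.statisticalAggregate to true.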

- In Spark 3.1, grouping_id() returns long values. In Spark 3.0 and earlier, this function returns int values. To restore the behavior before Spark 3.1, you can set spark.sql.legacy.integerGroupingId to true.

- In Spark 3.1, SQL UI data adopts the formatted mode for the query plan explain results. To restore the behavior before Spark 3.1, you can set spark.sql.ui.explainMode to extended.

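- In Spark 3.1, structs and maps are wrapped in curly braces {} when they are cast to strings. For instance, the show() action and the CAST expression use such brackets. In Spark 3.0 and earlier, square brackets [] are used for the same purpose. To restore the behavior before Spark 3.1, you can set spark.sql.legacy.castComplexTypesToString.enabled to true.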

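- In Spark 3.1, NULL elements of structs, arrays and maps are converted to "null" when they are cast to strings. In Spark 3.0 or earlier, NULL elements are converted to empty strings. To restore the behavior before Spark 3.1, you can set spark.sql.legacy.castComplexTypesToString.enabled to true.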

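- In Spark 3.1, when spark.sql.ansi.enabled is false, Spark always returns null if the sum of a decimal type column overflows. In Spark 3.0 or earlier, the sum of a decimal type column may in that case return null or an incorrect result, or even fail at runtime (depending on the actual query plan execution).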

- In Spark 3.1, the path option cannot coexist with a path parameter when the following methods are called: DataFrameReader.load(), DataFrameWriter.save(), DataStreamReader.load(), or DataStreamWriter.start(). In addition, the paths option cannot coexist with DataFrameReader.load(). For example, spark.read.format("csv").option("path", "/tmp").load("/tmp2") or spark.read.option("path", "/tmp").csv("/tmp2") will throw org.apache.spark.sql.AnalysisException. In Spark 3.0 and below, the path option is overwritten if one path parameter is passed to the above methods, and the path option is added to the overall paths if multiple path parameters are passed to DataFrameReader.load(). To restore the behavior before Spark 3.1, you can set spark.sql.legacy.pathOptionBehavior.enabled to true.

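- In Spark 3.1, loading and saving timestamps from/to parquet files fails if the timestamps are before 1900-01-01 00:00:00Z and are loaded (saved) as the INT96 type. In Spark 3.0, the actions do not fail but may shift the input timestamps because of rebasing between the Julian and the Proleptic Gregorian calendars. To restore the behavior before Spark 3.1, you can set spark.sql.legacy.parquet.int96RebaseModeInRead and/or spark.sql.legacy.parquet.int96RebaseModeInWrite to LEGACY.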

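- In Spark 3.1, temporary views have the same behavior as permanent views, i.e. they capture and store runtime SQL configs, SQL text, catalog and namespace. The captured view properties are applied during the parsing and analysis phases of view resolution. To restore the behavior before Spark 3.1, you can set spark.sql.legacy.storeAnalyzedPlanForView to true.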

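- In Spark 3.1, a temporary view created via CACHE TABLE ... AS SELECT also has the same behavior as a permanent view. In particular, when the temporary view is dropped, Spark invalidates all its cache dependents as well as the cache for the temporary view itself. This differs from Spark 3.0 and below, which only does the latter. To restore the previous behavior, you can set spark.sql.legacy.storeAnalyzedPlanForView to true.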

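- Since Spark 3.1, CHAR/CHARACTER and VARCHAR types are supported in table schemas. Table scans and insertions respect the char/varchar semantics. If char/varchar is used in places other than a table schema, an exception is thrown (CAST is an exception: it simply treats char/varchar as string, as before). To restore the behavior before Spark 3.1, which treats them as STRING types and ignores a length parameter such as CHAR(4), you can set spark.sql.legacy.charVarcharAsString to true.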
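Several of the behavior changes in the list above can be modeled in plain Scala without Spark: the store assignment of out-of-range values, the three-valued exists, the UDF default value for null primitives, and NULL instead of NaN from stddev_samp. The sketch below is an illustrative model of those semantics, not Spark's implementation; all names in it are hypothetical, and None stands in for SQL NULL.

```scala
object BehaviorModels {
  // Legacy store assignment keeps only the low-order bits, like a JVM narrowing cast.
  def legacyToByte(v: Int): Byte = v.toByte

  // ANSI-style store assignment rejects out-of-range values instead of truncating them.
  def ansiToByte(v: Int): Byte =
    if (v < Byte.MinValue || v > Byte.MaxValue)
      throw new ArithmeticException(s"$v is out of range for a byte column")
    else v.toByte

  // Three-valued exists: a null element makes the predicate result unknown (None).
  def exists3[A](xs: Seq[Option[A]])(p: A => Boolean): Option[Boolean] = {
    val results = xs.map(_.map(p))
    if (results.contains(Some(true))) Some(true)
    else if (results.contains(None)) None
    else Some(false)
  }

  // Scala unboxes a null reference to the primitive default, which is 0 for Int.
  def unboxNullAsInt: Int = {
    val boxed: Any = null
    boxed.asInstanceOf[Int]
  }

  // Sample standard deviation: dividing by (n - 1) = 0 yields NaN for a single element.
  def stddevSampLegacy(xs: Seq[Double]): Double = {
    val n = xs.size
    val mean = xs.sum / n
    math.sqrt(xs.map(x => (x - mean) * (x - mean)).sum / (n - 1))
  }

  // Spark 3.1 style: report NULL (None) rather than NaN when the divisor would be zero.
  def stddevSamp(xs: Seq[Double]): Option[Double] =
    if (xs.size < 2) None else Some(stddevSampLegacy(xs))
}
```

For instance, BehaviorModels.legacyToByte(257) yields 1, matching the byte example in the list, while BehaviorModels.ansiToByte(257) throws.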

Appendix: for more details, refer to the Spark source code, where org.apache.spark.sql.internal.SQLConf defines the common confs.


package org.apache.spark.sql.internal

import java.util.{Locale, NoSuchElementException, Properties, TimeZone}

import java.util

import java.util.concurrent.TimeUnit

import java.util.concurrent.atomic.AtomicReference

import java.util.zip.Deflater

import scala.collection.JavaConverters._

import scala.collection.immutable

import scala.util.Try

import scala.util.control.NonFatal

import scala.util.matching.Regex

import org.apache.hadoop.fs.Path

import org.apache.spark.{SparkConf, SparkContext, TaskContext}

import org.apache.spark.internal.Logging

import org.apache.spark.internal.config._

import org.apache.spark.internal.config.{IGNORE_MISSING_FILES => SPARK_IGNORE_MISSING_FILES}

import org.apache.spark.network.util.ByteUnit

import org.apache.spark.sql.catalyst.ScalaReflection

import org.apache.spark.sql.catalyst.analysis.{HintErrorLogger, Resolver}

import org.apache.spark.sql.catalyst.expressions.CodegenObjectFactoryMode

import org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator

import org.apache.spark.sql.catalyst.plans.logical.HintErrorHandler

import org.apache.spark.sql.catalyst.util.DateTimeUtils

import org.apache.spark.sql.connector.catalog.CatalogManager.SESSION_CATALOG_NAME

import org.apache.spark.sql.errors.{QueryCompilationErrors, QueryExecutionErrors}

import org.apache.spark.sql.types.{AtomicType, TimestampNTZType, TimestampType}

import org.apache.spark.unsafe.array.ByteArrayMethods

import org.apache.spark.util.Utils

////////////////////////////////////////////////////////////////////////////////////////////////////

// This file defines the configuration options for Spark SQL.

////////////////////////////////////////////////////////////////////////////////////////////////////

object SQLConf {

private[this] val sqlConfEntriesUpdateLock = new Object

@volatile

private[this] var sqlConfEntries: util.Map[String, ConfigEntry[_]] = util.Collections.emptyMap()

private[this] val staticConfKeysUpdateLock = new Object

@volatile

private[this] var staticConfKeys: java.util.Set[String] = util.Collections.emptySet()

private def register(entry: ConfigEntry[_]): Unit = sqlConfEntriesUpdateLock.synchronized {

require(!sqlConfEntries.containsKey(entry.key),

s"Duplicate SQLConfigEntry. ${entry.key} has been registered")

val updatedMap = new java.util.HashMap[String, ConfigEntry[_]](sqlConfEntries)

updatedMap.put(entry.key, entry)

sqlConfEntries = updatedMap

}

// For testing only

private[sql] def unregister(entry: ConfigEntry[_]): Unit = sqlConfEntriesUpdateLock.synchronized {

val updatedMap = new java.util.HashMap[String, ConfigEntry[_]](sqlConfEntries)

updatedMap.remove(entry.key)

sqlConfEntries = updatedMap

}

private[internal] def getConfigEntry(key: String): ConfigEntry[_] = {

sqlConfEntries.get(key)

}

private[internal] def getConfigEntries(): util.Collection[ConfigEntry[_]] = {

sqlConfEntries.values()

}

private[internal] def containsConfigEntry(entry: ConfigEntry[_]): Boolean = {

getConfigEntry(entry.key) == entry

}

private[sql] def containsConfigKey(key: String): Boolean = {

sqlConfEntries.containsKey(key)

}

def registerStaticConfigKey(key: String): Unit = staticConfKeysUpdateLock.synchronized {

val updated = new util.HashSet[String](staticConfKeys)

updated.add(key)

staticConfKeys = updated

}

def isStaticConfigKey(key: String): Boolean = staticConfKeys.contains(key)

def buildConf(key: String): ConfigBuilder = ConfigBuilder(key).onCreate(register)

def buildStaticConf(key: String): ConfigBuilder = {

ConfigBuilder(key).onCreate { entry =>

SQLConf.registerStaticConfigKey(entry.key)

SQLConf.register(entry)

}

}

/**

* Merge all non-static configs to the SQLConf. For example, when the 1st [[SparkSession]] and

* the global [[SharedState]] have been initialized, all static configs have taken affect and

* should not be set to other values. Other later created sessions should respect all static

* configs and only be able to change non-static configs.

*/

private[sql] def mergeNonStaticSQLConfigs(

sqlConf: SQLConf,

configs: Map[String, String]): Unit = {

for ((k, v) <- configs if !staticConfKeys.contains(k)) {

sqlConf.setConfString(k, v)

}

}

/**

* Extract entries from `SparkConf` and put them in the `SQLConf`

*/

private[sql] def mergeSparkConf(sqlConf: SQLConf, sparkConf: SparkConf): Unit = {

sparkConf.getAll.foreach { case (k, v) =>

sqlConf.setConfString(k, v)

}

}

/**

* Default config. Only used when there is no active SparkSession for the thread.

* See [[get]] for more information.

*/

private lazy val fallbackConf = new ThreadLocal[SQLConf] {

override def initialValue: SQLConf = new SQLConf

}

/** See [[get]] for more information. */

def getFallbackConf: SQLConf = fallbackConf.get()

private lazy val existingConf = new ThreadLocal[SQLConf] {

override def initialValue: SQLConf = null

}

def withExistingConf[T](conf: SQLConf)(f: => T): T = {

val old = existingConf.get()

existingConf.set(conf)

try {

f

} finally {

if (old != null) {

existingConf.set(old)

} else {

existingConf.remove()

}

}

}

/**

* Defines a getter that returns the SQLConf within scope.

* See [[get]] for more information.

*/

private val confGetter = new AtomicReference[() => SQLConf](() => fallbackConf.get())

/**

* Sets the active config object within the current scope.

* See [[get]] for more information.

*/

def setSQLConfGetter(getter: () => SQLConf): Unit = {

confGetter.set(getter)

}

/**

* Returns the active config object within the current scope. If there is an active SparkSession,

* the proper SQLConf associated with the thread's active session is used. If it's called from

* tasks in the executor side, a SQLConf will be created from job local properties, which are set

* and propagated from the driver side, unless a `SQLConf` has been set in the scope by

* `withExistingConf` as done for propagating SQLConf for operations performed on RDDs created

* from DataFrames.

*

* The way this works is a little bit convoluted, due to the fact that config was added initially

* only for physical plans (and as a result not in sql/catalyst module).

*

* The first time a SparkSession is instantiated, we set the [[confGetter]] to return the

* active SparkSession's config. If there is no active SparkSession, it returns using the thread

* local [[fallbackConf]]. The reason [[fallbackConf]] is a thread local (rather than just a conf)

* is to support setting different config options for different threads so we can potentially

* run tests in parallel. At the time this feature was implemented, this was a no-op since we

* run unit tests (that does not involve SparkSession) in serial order.

*/

def get: SQLConf = {

if (TaskContext.get != null) {

val conf = existingConf.get()

if (conf != null) {

conf

} else {

new ReadOnlySQLConf(TaskContext.get())

}

} else {

val isSchedulerEventLoopThread = SparkContext.getActive

.flatMap { sc => Option(sc.dagScheduler) }

.map(_.eventProcessLoop.eventThread)

.exists(_.getId == Thread.currentThread().getId)

if (isSchedulerEventLoopThread) {

// DAGScheduler event loop thread does not have an active SparkSession, the `confGetter`

// will return `fallbackConf` which is unexpected. Here we require the caller to get the

// conf within `withExistingConf`, otherwise fail the query.

val conf = existingConf.get()

if (conf != null) {

conf

} else if (Utils.isTesting) {

throw QueryExecutionErrors.cannotGetSQLConfInSchedulerEventLoopThreadError()

} else {

confGetter.get()()

}

} else {

val conf = existingConf.get()

if (conf != null) {

conf

} else {

confGetter.get()()

}

}

}

}

val ANALYZER_MAX_ITERATIONS = buildConf("spark.sql.analyzer.maxIterations")

.internal()

.doc("The max number of iterations the analyzer runs.")

.version("3.0.0")

.intConf

.createWithDefault(100)

val OPTIMIZER_EXCLUDED_RULES = buildConf("spark.sql.optimizer.excludedRules")

.doc("Configures a list of rules to be disabled in the optimizer, in which the rules are " +

"specified by their rule names and separated by comma. It is not guaranteed that all the " +

"rules in this configuration will eventually be excluded, as some rules are necessary " +

"for correctness. The optimizer will log the rules that have indeed been excluded.")

.version("2.4.0")

.stringConf

.createOptional

val OPTIMIZER_MAX_ITERATIONS = buildConf("spark.sql.optimizer.maxIterations")

.internal()

.doc("The max number of iterations the optimizer runs.")

.version("2.0.0")

.intConf

.createWithDefault(100)

val OPTIMIZER_INSET_CONVERSION_THRESHOLD =

buildConf("spark.sql.optimizer.inSetConversionThreshold")

.internal()

.doc("The threshold of set size for InSet conversion.")

.version("2.0.0")

.intConf

.createWithDefault(10)

val OPTIMIZER_INSET_SWITCH_THRESHOLD =

buildConf("spark.sql.optimizer.inSetSwitchThreshold")

.internal()

.doc("Configures the max set size in InSet for which Spark will generate code with " +

"switch statements. This is applicable only to bytes, shorts, ints, dates.")

.version("3.0.0")

.intConf

.checkValue(threshold => threshold >= 0 && threshold <= 600, "The max set size " +

"for using switch statements in InSet must be non-negative and less than or equal to 600")

.createWithDefault(400)

val PLAN_CHANGE_LOG_LEVEL = buildConf("spark.sql.planChangeLog.level")

.internal()

.doc("Configures the log level for logging the change from the original plan to the new " +

"plan after a rule or batch is applied. The value can be 'trace', 'debug', 'info', " +

"'warn', or 'error'. The default log level is 'trace'.")

.version("3.1.0")

.stringConf

.transform(_.toUpperCase(Locale.ROOT))

.checkValue(logLevel => Set("TRACE", "DEBUG", "INFO", "WARN", "ERROR").contains(logLevel),

"Invalid value for 'spark.sql.planChangeLog.level'. Valid values are " +

"'trace', 'debug', 'info', 'warn' and 'error'.")

.createWithDefault("trace")

val PLAN_CHANGE_LOG_RULES = buildConf("spark.sql.planChangeLog.rules")

.internal()

.doc("Configures a list of rules for logging plan changes, in which the rules are " +

"specified by their rule names and separated by comma.")

.version("3.1.0")

.stringConf

.createOptional

val PLAN_CHANGE_LOG_BATCHES = buildConf("spark.sql.planChangeLog.batches")

.internal()

.doc("Configures a list of batches for logging plan changes, in which the batches " +

"are specified by their batch names and separated by comma.")

.version("3.1.0")

.stringConf

.createOptional

val DYNAMIC_PARTITION_PRUNING_ENABLED =

buildConf("spark.sql.optimizer.dynamicPartitionPruning.enabled")

.doc("When true, we will generate predicate for partition column when it's used as join key")

.version("3.0.0")

.booleanConf

.createWithDefault(true)

val DYNAMIC_PARTITION_PRUNING_USE_STATS =

buildConf("spark.sql.optimizer.dynamicPartitionPruning.useStats")

.internal()

.doc("When true, distinct count statistics will be used for computing the data size of the " +

"partitioned table after dynamic partition pruning, in order to evaluate if it is worth " +

"adding an extra subquery as the pruning filter if broadcast reuse is not applicable.")

.version("3.0.0")

.booleanConf

.createWithDefault(true)

val DYNAMIC_PARTITION_PRUNING_FALLBACK_FILTER_RATIO =

buildConf("spark.sql.optimizer.dynamicPartitionPruning.fallbackFilterRatio")

.internal()

.doc("When statistics are not available or configured not to be used, this config will be " +

"used as the fallback filter ratio for computing the data size of the partitioned table " +

"after dynamic partition pruning, in order to evaluate if it is worth adding an extra " +

"subquery as the pruning filter if broadcast reuse is not applicable.")

.version("3.0.0")

.doubleConf

.createWithDefault(0.5)

val DYNAMIC_PARTITION_PRUNING_REUSE_BROADCAST_ONLY =

buildConf("spark.sql.optimizer.dynamicPartitionPruning.reuseBroadcastOnly")

.internal()

.doc("When true, dynamic partition pruning will only apply when the broadcast exchange of " +

"a broadcast hash join operation can be reused as the dynamic pruning filter.")

.version("3.0.0")

.booleanConf

.createWithDefault(true)

val COMPRESS_CACHED = buildConf("spark.sql.inMemoryColumnarStorage.compressed")

.doc("When set to true Spark SQL will automatically select a compression codec for each " +

"column based on statistics of the data.")

.version("1.0.1")

.booleanConf

.createWithDefault(true)

val COLUMN_BATCH_SIZE = buildConf("spark.sql.inMemoryColumnarStorage.batchSize")

.doc("Controls the size of batches for columnar caching. Larger batch sizes can improve " +

"memory utilization and compression, but risk OOMs when caching data.")

.version("1.1.1")

.intConf

.createWithDefault(10000)

val IN_MEMORY_PARTITION_PRUNING =

buildConf("spark.sql.inMemoryColumnarStorage.partitionPruning")

.internal()

.doc("When true, enable partition pruning for in-memory columnar tables.")

.version("1.2.0")

.booleanConf

.createWithDefault(true)

val IN_MEMORY_TABLE_SCAN_STATISTICS_ENABLED =

buildConf("spark.sql.inMemoryTableScanStatistics.enable")

.internal()

.doc("When true, enable in-memory table scan accumulators.")

.version("3.0.0")

.booleanConf

.createWithDefault(false)

val CACHE_VECTORIZED_READER_ENABLED =

buildConf("spark.sql.inMemoryColumnarStorage.enableVectorizedReader")

.doc("Enables vectorized reader for columnar caching.")

.version("2.3.1")

.booleanConf

.createWithDefault(true)

val COLUMN_VECTOR_OFFHEAP_ENABLED =

buildConf("spark.sql.columnVector.offheap.enabled")

.internal()

.doc("When true, use OffHeapColumnVector in ColumnarBatch.")

.version("2.3.0")

.booleanConf

.createWithDefault(false)

val PREFER_SORTMERGEJOIN = buildConf("spark.sql.join.preferSortMergeJoin")

.internal()

.doc("When true, prefer sort merge join over shuffled hash join. " +

"Sort merge join consumes less memory than shuffled hash join and it works efficiently " +

"when both join tables are large. On the other hand, shuffled hash join can improve " +

"performance (e.g., of full outer joins) when one of join tables is much smaller.")

.version("2.0.0")

.booleanConf

.createWithDefault(true)

val RADIX_SORT_ENABLED = buildConf("spark.sql.sort.enableRadixSort")

.internal()

.doc("When true, enable use of radix sort when possible. Radix sort is much faster but " +

"requires additional memory to be reserved up-front. The memory overhead may be " +

"significant when sorting very small rows (up to 50% more in this case).")

.version("2.0.0")

.booleanConf

.createWithDefault(true)

val AUTO_BROADCASTJOIN_THRESHOLD = buildConf("spark.sql.autoBroadcastJoinThreshold")

.doc("Configures the maximum size in bytes for a table that will be broadcast to all worker " +

"nodes when performing a join. By setting this value to -1 broadcasting can be disabled. " +

"Note that currently statistics are only supported for Hive Metastore tables where the " +

"command `ANALYZE TABLE <tableName> COMPUTE STATISTICS noscan` has been " +

"run, and file-based data source tables where the statistics are computed directly on " +

"the files of data.")

.version("1.1.0")

.bytesConf(ByteUnit.BYTE)

.createWithDefaultString("10MB")

val SHUFFLE_HASH_JOIN_FACTOR = buildConf("spark.sql.shuffledHashJoinFactor")

.doc("The shuffle hash join can be selected if the data size of small" +

" side multiplied by this factor is still smaller than the large side.")

.version("3.3.0")

.intConf

.checkValue(_ >= 1, "The shuffle hash join factor must be at least 1.")

.createWithDefault(3)
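
The selection rule described for `spark.sql.shuffledHashJoinFactor` can be sketched in plain Scala. This is a minimal illustration that follows the wording of the doc string, not Spark's planner code; `ShuffledHashJoinFactorSketch` and `isMuchSmaller` are hypothetical names, and the sizes are plain byte counts rather than plan statistics.

```scala
object ShuffledHashJoinFactorSketch {
  // Hypothetical helper: true when the small side, scaled by the factor,
  // is still smaller than the large side (following the doc string wording).
  def isMuchSmaller(smallSideBytes: Long, largeSideBytes: Long, factor: Int = 3): Boolean = {
    require(factor >= 1, "factor must be at least 1")
    // BigInt avoids overflow when a large size is multiplied by the factor.
    BigInt(smallSideBytes) * factor < BigInt(largeSideBytes)
  }
}
```

With the default factor of 3, a 40-byte side is not considered much smaller than a 100-byte side, while a 10-byte side is.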

val LIMIT_SCALE_UP_FACTOR = buildConf("spark.sql.limit.scaleUpFactor")

.internal()

.doc("Minimal increase rate in number of partitions between attempts when executing a take " +

"on a query. Higher values lead to more partitions read. Lower values might lead to " +

"longer execution times as more jobs will be run.")

.version("2.1.1")

.intConf

.createWithDefault(4)

val ADVANCED_PARTITION_PREDICATE_PUSHDOWN =

buildConf("spark.sql.hive.advancedPartitionPredicatePushdown.enabled")

.internal()

.doc("When true, advanced partition predicate pushdown into Hive metastore is enabled.")

.version("2.3.0")

.booleanConf

.createWithDefault(true)

val LEAF_NODE_DEFAULT_PARALLELISM = buildConf("spark.sql.leafNodeDefaultParallelism")

.doc("The default parallelism of Spark SQL leaf nodes that produce data, such as the file " +

"scan node, the local data scan node, the range node, etc. The default value of this " +

"config is 'SparkContext#defaultParallelism'.")

.version("3.2.0")

.intConf

.checkValue(_ > 0, "The value of spark.sql.leafNodeDefaultParallelism must be positive.")

.createOptional

val SHUFFLE_PARTITIONS = buildConf("spark.sql.shuffle.partitions")

.doc("The default number of partitions to use when shuffling data for joins or aggregations. " +

"Note: For structured streaming, this configuration cannot be changed between query " +

"restarts from the same checkpoint location.")

.version("1.1.0")

.intConf

.checkValue(_ > 0, "The value of spark.sql.shuffle.partitions must be positive")

.createWithDefault(200)

val SHUFFLE_TARGET_POSTSHUFFLE_INPUT_SIZE =

buildConf("spark.sql.adaptive.shuffle.targetPostShuffleInputSize")

.internal()

.doc("(Deprecated since Spark 3.0)")

.version("1.6.0")

.bytesConf(ByteUnit.BYTE)

.checkValue(_ > 0, "advisoryPartitionSizeInBytes must be positive")

.createWithDefaultString("64MB")

val ADAPTIVE_EXECUTION_ENABLED = buildConf("spark.sql.adaptive.enabled")

.doc("When true, enable adaptive query execution, which re-optimizes the query plan in the " +

"middle of query execution, based on accurate runtime statistics.")

.version("1.6.0")

.booleanConf

.createWithDefault(true)

val ADAPTIVE_EXECUTION_FORCE_APPLY = buildConf("spark.sql.adaptive.forceApply")

.internal()

.doc("Adaptive query execution is skipped when the query does not have exchanges or " +

"sub-queries. By setting this config to true (together with " +

s"'${ADAPTIVE_EXECUTION_ENABLED.key}' set to true), Spark will force apply adaptive query " +

"execution for all supported queries.")

.version("3.0.0")

.booleanConf

.createWithDefault(false)

val ADAPTIVE_EXECUTION_LOG_LEVEL = buildConf("spark.sql.adaptive.logLevel")

.internal()

.doc("Configures the log level for adaptive execution logging of plan changes. The value " +

"can be 'trace', 'debug', 'info', 'warn', or 'error'. The default log level is 'debug'.")

.version("3.0.0")

.stringConf

.transform(_.toUpperCase(Locale.ROOT))

.checkValues(Set("TRACE", "DEBUG", "INFO", "WARN", "ERROR"))

.createWithDefault("debug")

val ADVISORY_PARTITION_SIZE_IN_BYTES =

buildConf("spark.sql.adaptive.advisoryPartitionSizeInBytes")

.doc("The advisory size in bytes of the shuffle partition during adaptive optimization " +

s"(when ${ADAPTIVE_EXECUTION_ENABLED.key} is true). It takes effect when Spark " +

"coalesces small shuffle partitions or splits skewed shuffle partition.")

.version("3.0.0")

.fallbackConf(SHUFFLE_TARGET_POSTSHUFFLE_INPUT_SIZE)

val COALESCE_PARTITIONS_ENABLED =

buildConf("spark.sql.adaptive.coalescePartitions.enabled")

.doc(s"When true and '${ADAPTIVE_EXECUTION_ENABLED.key}' is true, Spark will coalesce " +

"contiguous shuffle partitions according to the target size (specified by " +

s"'${ADVISORY_PARTITION_SIZE_IN_BYTES.key}'), to avoid too many small tasks.")

.version("3.0.0")

.booleanConf

.createWithDefault(true)
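
To make "coalesce contiguous shuffle partitions according to the target size" concrete, here is a simplified greedy sketch in plain Scala. It is an illustration under stated assumptions, not Spark's implementation: `CoalesceSketch` and `coalesce` are hypothetical names, partition sizes are given as a plain sequence of byte counts, and the sketch ignores `minPartitionSize` and `parallelismFirst`.

```scala
import scala.collection.mutable.ArrayBuffer

object CoalesceSketch {
  // Groups contiguous partition indices so that each group stays within
  // targetSize where possible. A single oversized partition forms its own group.
  def coalesce(sizes: Seq[Long], targetSize: Long): Seq[Seq[Int]] = {
    val groups = ArrayBuffer.empty[Vector[Int]]
    var current = Vector.empty[Int]
    var currentSize = 0L
    for ((size, idx) <- sizes.zipWithIndex) {
      // Close the current group if adding this partition would exceed the target.
      if (current.nonEmpty && currentSize + size > targetSize) {
        groups += current
        current = Vector.empty[Int]
        currentSize = 0L
      }
      current = current :+ idx
      currentSize += size
    }
    if (current.nonEmpty) groups += current
    groups.toSeq
  }
}
```

For sizes `10, 20, 30, 40, 50` and a target of `60`, the first three partitions are merged and the last two stay separate.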

val COALESCE_PARTITIONS_PARALLELISM_FIRST =

buildConf("spark.sql.adaptive.coalescePartitions.parallelismFirst")

.doc("When true, Spark does not respect the target size specified by " +

s"'${ADVISORY_PARTITION_SIZE_IN_BYTES.key}' (default 64MB) when coalescing contiguous " +

"shuffle partitions, but adaptively calculates the target size according to the default " +

"parallelism of the Spark cluster. The calculated size is usually smaller than the " +

"configured target size. This is to maximize the parallelism and avoid performance " +

"regression when enabling adaptive query execution. It's recommended to set this config " +

"to false and respect the configured target size.")

.version("3.2.0")

.booleanConf

.createWithDefault(true)
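
As a usage sketch, the recommendation above (set `parallelismFirst` to false so the advisory size is respected) could be applied when building a session. This assumes the `spark-sql` artifact is on the classpath and a local run; the application name and object name are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

object AqeCoalesceConfigExample {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("aqe-coalesce-config-example") // hypothetical application name
      .master("local[*]")                      // assumption: local test run
      .config("spark.sql.adaptive.enabled", "true")
      .config("spark.sql.adaptive.coalescePartitions.enabled", "true")
      // Respect the advisory size instead of maximizing parallelism.
      .config("spark.sql.adaptive.coalescePartitions.parallelismFirst", "false")
      .config("spark.sql.adaptive.advisoryPartitionSizeInBytes", "64MB")
      .getOrCreate()

    // Read the setting back to confirm what the session will use.
    println(spark.conf.get("spark.sql.adaptive.coalescePartitions.parallelismFirst"))
    spark.stop()
  }
}
```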

val COALESCE_PARTITIONS_MIN_PARTITION_SIZE =

buildConf("spark.sql.adaptive.coalescePartitions.minPartitionSize")

.doc("The minimum size of shuffle partitions after coalescing. This is useful when the " +

"adaptively calculated target size is too small during partition coalescing.")

.version("3.2.0")

.bytesConf(ByteUnit.BYTE)

.checkValue(_ > 0, "minPartitionSize must be positive")

.createWithDefaultString("1MB")

val COALESCE_PARTITIONS_MIN_PARTITION_NUM =

buildConf("spark.sql.adaptive.coalescePartitions.minPartitionNum")

.internal()

.doc("(deprecated) The suggested (not guaranteed) minimum number of shuffle partitions " +

"after coalescing. If not set, the default value is the default parallelism of the " +

"Spark cluster. This configuration only has an effect when " +

s"'${ADAPTIVE_EXECUTION_ENABLED.key}' and " +

s"'${COALESCE_PARTITIONS_ENABLED.key}' are both true.")

.version("3.0.0")

.intConf

.checkValue(_ > 0, "The minimum number of partitions must be positive.")

.createOptional

val COALESCE_PARTITIONS_INITIAL_PARTITION_NUM =

buildConf("spark.sql.adaptive.coalescePartitions.initialPartitionNum")

.doc("The initial number of shuffle partitions before coalescing. If not set, it equals " +

s"${SHUFFLE_PARTITIONS.key}. This configuration only has an effect when " +

s"'${ADAPTIVE_EXECUTION_ENABLED.key}' and '${COALESCE_PARTITIONS_ENABLED.key}' " +

"are both true.")

.version("3.0.0")

.intConf

.checkValue(_ > 0, "The initial number of partitions must be positive.")

.createOptional

val FETCH_SHUFFLE_BLOCKS_IN_BATCH =

buildConf("spark.sql.adaptive.fetchShuffleBlocksInBatch")

.internal()

.doc("Whether to fetch the contiguous shuffle blocks in batch. Instead of fetching blocks " +

"one by one, fetching contiguous shuffle blocks for the same map task in batch can " +

"reduce IO and improve performance. Note that a single fetch request contains multiple " +

s"contiguous blocks only when '${ADAPTIVE_EXECUTION_ENABLED.key}' and " +

s"'${COALESCE_PARTITIONS_ENABLED.key}' are both true. This feature also requires " +

"a relocatable serializer, a compression codec that supports concatenation, the new " +

"shuffle fetch protocol, and IO encryption to be disabled.")

.version("3.0.0")

.booleanConf

.createWithDefault(true)

val LOCAL_SHUFFLE_READER_ENABLED =

buildConf("spark.sql.adaptive.localShuffleReader.enabled")

.doc(s"When true and '${ADAPTIVE_EXECUTION_ENABLED.key}' is true, Spark tries to use local " +

"shuffle reader to read the shuffle data when the shuffle partitioning is not needed, " +

"for example, after converting sort-merge join to broadcast-hash join.")

.version("3.0.0")

.booleanConf

.createWithDefault(true)

val SKEW_JOIN_ENABLED =

buildConf("spark.sql.adaptive.skewJoin.enabled")

.doc(s"When true and '${ADAPTIVE_EXECUTION_ENABLED.key}' is true, Spark dynamically " +

"handles skew in shuffled join (sort-merge and shuffled hash) by splitting (and " +

"replicating if needed) skewed partitions.")

.version("3.0.0")

.booleanConf

.createWithDefault(true)

val SKEW_JOIN_SKEWED_PARTITION_FACTOR =

buildConf("spark.sql.adaptive.skewJoin.skewedPartitionFactor")

.doc("A partition is considered as skewed if its size is larger than this factor " +

"multiplying the median partition size and also larger than " +

"'spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes'")

.version("3.0.0")

.intConf

.checkValue(_ >= 0, "The skew factor cannot be negative.")

.createWithDefault(5)

val SKEW_JOIN_SKEWED_PARTITION_THRESHOLD =

buildConf("spark.sql.adaptive.skewJoin.skewedPartitionThresholdInBytes")

.doc("A partition is considered as skewed if its size in bytes is larger than this " +

s"threshold and also larger than '${SKEW_JOIN_SKEWED_PARTITION_FACTOR.key}' " +

"multiplying the median partition size. Ideally this config should be set larger " +

s"than '${ADVISORY_PARTITION_SIZE_IN_BYTES.key}'.")

.version("3.0.0")

.bytesConf(ByteUnit.BYTE)

.createWithDefaultString("256MB")
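
The two skew conditions (`skewedPartitionFactor` and `skewedPartitionThresholdInBytes`) must both hold for a partition to count as skewed. A minimal sketch in plain Scala, assuming partition sizes are available as byte counts; `SkewDetectionSketch`, `median`, and `isSkewed` are hypothetical names, and the median here is a simple middle element that may differ from how Spark computes it.

```scala
object SkewDetectionSketch {
  // Simple median for illustration: the middle element of the sorted sizes.
  def median(sizes: Seq[Long]): Long = {
    require(sizes.nonEmpty, "sizes must not be empty")
    val sorted = sizes.sorted
    sorted(sorted.length / 2)
  }

  // A partition is skewed only if it exceeds BOTH factor * median and the byte threshold.
  def isSkewed(
      size: Long,
      medianSize: Long,
      factor: Int = 5,
      thresholdBytes: Long = 256L * 1024 * 1024): Boolean =
    size > medianSize * factor && size > thresholdBytes
}
```

With the defaults, a 600 MB partition next to a 12 MB median is skewed, but a 100 MB partition is not, because it stays below the 256 MB threshold.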

val NON_EMPTY_PARTITION_RATIO_FOR_BROADCAST_JOIN =

buildConf("spark.sql.adaptive.nonEmptyPartitionRatioForBroadcastJoin")

.internal()

.doc("The relation with a non-empty partition ratio lower than this config will not be " +

"considered as the build side of a broadcast-hash join in adaptive execution regardless " +

"of its size. This configuration only has an effect when " +

s"'${ADAPTIVE_EXECUTION_ENABLED.key}' is true.")

.version("3.0.0")

.doubleConf

.checkValue(_ >= 0, "The non-empty partition ratio must be a non-negative number.")

.createWithDefault(0.2)

val ADAPTIVE_OPTIMIZER_EXCLUDED_RULES =

buildConf("spark.sql.adaptive.optimizer.excludedRules")

.doc("Configures a list of rules to be disabled in the adaptive optimizer, in which the " +

"rules are specified by their rule names and separated by comma. The optimizer will log " +

"the rules that have indeed been excluded.")

.version("3.1.0")

.stringConf

.createOptional

val ADAPTIVE_AUTO_BROADCASTJOIN_THRESHOLD =

buildConf("spark.sql.adaptive.autoBroadcastJoinThreshold")

.doc("Configures the maximum size in bytes for a table that will be broadcast to all " +

"worker nodes when performing a join. By setting this value to -1 broadcasting can be " +

s"disabled. The default value is the same as ${AUTO_BROADCASTJOIN_THRESHOLD.key}. " +

"Note that this config is used only in the adaptive framework.")

.version("3.2.0")

.bytesConf(ByteUnit.BYTE)

.createOptional

val ADAPTIVE_MAX_SHUFFLE_HASH_JOIN_LOCAL_MAP_THRESHOLD =

buildConf("spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold")

.doc("Configures the maximum size in bytes per partition that can be allowed to build " +

"a local hash map. If this value is not smaller than " +

s"${ADVISORY_PARTITION_SIZE_IN_BYTES.key} and all the partition sizes are not larger " +

"than this config, join selection prefers to use shuffled hash join instead of " +

s"sort merge join regardless of the value of ${PREFER_SORTMERGEJOIN.key}.")

.version("3.2.0")

.bytesConf(ByteUnit.BYTE)

.createWithDefault(0L)

val ADAPTIVE_OPTIMIZE_SKEWS_IN_REBALANCE_PARTITIONS_ENABLED =

buildConf("spark.sql.adaptive.optimizeSkewsInRebalancePartitions.enabled")

.doc(s"When true and '${ADAPTIVE_EXECUTION_ENABLED.key}' is true, Spark will optimize the " +

"skewed shuffle partitions in RebalancePartitions and split them to smaller ones " +

s"according to the target size (specified by '${ADVISORY_PARTITION_SIZE_IN_BYTES.key}'), " +

"to avoid data skew.")

.version("3.2.0")

.booleanConf

.createWithDefault(true)

val ADAPTIVE_FORCE_OPTIMIZE_SKEWED_JOIN =

buildConf("spark.sql.adaptive.forceOptimizeSkewedJoin")

.doc("When true, force enable OptimizeSkewedJoin even if it introduces extra shuffle.")

.version("3.3.0")

.booleanConf

.createWithDefault(false)

val ADAPTIVE_CUSTOM_COST_EVALUATOR_CLASS =

buildConf("spark.sql.adaptive.customCostEvaluatorClass")

.doc("The custom cost evaluator class to be used for adaptive execution. If not set," +

" Spark will use its own SimpleCostEvaluator by default.")

.version("3.2.0")

.stringConf

.createOptional

val SUBEXPRESSION_ELIMINATION_ENABLED =

buildConf("spark.sql.subexpressionElimination.enabled")

.internal()

.doc("When true, common subexpressions will be eliminated.")

.version("1.6.0")

.booleanConf

.createWithDefault(true)

val SUBEXPRESSION_ELIMINATION_CACHE_MAX_ENTRIES =

buildConf("spark.sql.subexpressionElimination.cache.maxEntries")

.internal()

.doc("The maximum entries of the cache used for interpreted subexpression elimination.")

.version("3.1.0")

.intConf

.checkValue(_ >= 0, "The maximum must not be negative")

.createWithDefault(100)

val CASE_SENSITIVE = buildConf("spark.sql.caseSensitive")

.internal()

.doc("Whether the query analyzer should be case sensitive or not. " +

"Default to case insensitive. It is highly discouraged to turn on case sensitive mode.")

.version("1.4.0")

.booleanConf

.createWithDefault(false)

val CONSTRAINT_PROPAGATION_ENABLED = buildConf("spark.sql.constraintPropagation.enabled")

.internal()

.doc("When true, the query optimizer will infer and propagate data constraints in the query " +

"plan to optimize them. Constraint propagation can sometimes be computationally expensive " +

"for certain kinds of query plans (such as those with a large number of predicates and " +

"aliases) which might negatively impact overall runtime.")

.version("2.2.0")

.booleanConf

.createWithDefault(true)

val ESCAPED_STRING_LITERALS = buildConf("spark.sql.parser.escapedStringLiterals")

.internal()

.doc("When true, string literals (including regex patterns) remain escaped in our SQL " +

"parser. The default is false since Spark 2.0. Setting it to true can restore the behavior " +

"prior to Spark 2.0.")

.version("2.2.1")

.booleanConf

.createWithDefault(false)

val FILE_COMPRESSION_FACTOR = buildConf("spark.sql.sources.fileCompressionFactor")

.internal()

.doc("When estimating the output data size of a table scan, multiply the file size with this " +

"factor as the estimated data size, in case the data is compressed in the file and leads to" +

" a heavily underestimated result.")

.version("2.3.1")

.doubleConf

.checkValue(_ > 0, "the value of fileCompressionFactor must be greater than 0")

.createWithDefault(1.0)

val PARQUET_SCHEMA_MERGING_ENABLED = buildConf("spark.sql.parquet.mergeSchema")

.doc("When true, the Parquet data source merges schemas collected from all data files, " +

"otherwise the schema is picked from the summary file or a random data file " +

"if no summary file is available.")

.version("1.5.0")

.booleanConf

.createWithDefault(false)

val PARQUET_SCHEMA_RESPECT_SUMMARIES = buildConf("spark.sql.parquet.respectSummaryFiles")

.doc("When true, we assume that all part-files of Parquet are consistent with " +

"summary files and we will ignore them when merging schema. Otherwise, if this is " +

"false, which is the default, we will merge all part-files. This should be considered " +

"as expert-only option, and shouldn't be enabled before knowing what it means exactly.")

.version("1.5.0")

.booleanConf

.createWithDefault(false)

val PARQUET_BINARY_AS_STRING = buildConf("spark.sql.parquet.binaryAsString")

.doc("Some other Parquet-producing systems, in particular Impala and older versions of " +

"Spark SQL, do not differentiate between binary data and strings when writing out the " +

"Parquet schema. This flag tells Spark SQL to interpret binary data as a string to provide " +

"compatibility with these systems.")

.version("1.1.1")

.booleanConf

.createWithDefault(false)

val PARQUET_INT96_AS_TIMESTAMP = buildConf("spark.sql.parquet.int96AsTimestamp")

.doc("Some Parquet-producing systems, in particular Impala, store Timestamp into INT96. " +

"Spark would also store Timestamp as INT96 because we need to avoid precision loss of the " +

"nanoseconds field. This flag tells Spark SQL to interpret INT96 data as a timestamp to " +

"provide compatibility with these systems.")

.version("1.3.0")

.booleanConf

.createWithDefault(true)

val PARQUET_INT96_TIMESTAMP_CONVERSION = buildConf("spark.sql.parquet.int96TimestampConversion")

.doc("This controls whether timestamp adjustments should be applied to INT96 data when " +

"converting to timestamps, for data written by Impala. This is necessary because Impala " +

"stores INT96 data with a different timezone offset than Hive & Spark.")

.version("2.3.0")

.booleanConf

.createWithDefault(false)

object ParquetOutputTimestampType extends Enumeration {

val INT96, TIMESTAMP_MICROS, TIMESTAMP_MILLIS = Value

}

val PARQUET_OUTPUT_TIMESTAMP_TYPE = buildConf("spark.sql.parquet.outputTimestampType")

.doc("Sets which Parquet timestamp type to use when Spark writes data to Parquet files. " +

"INT96 is a non-standard but commonly used timestamp type in Parquet. TIMESTAMP_MICROS " +

"is a standard timestamp type in Parquet, which stores number of microseconds from the " +

"Unix epoch. TIMESTAMP_MILLIS is also standard, but with millisecond precision, which " +

"means Spark has to truncate the microsecond portion of its timestamp value.")

.version("2.3.0")

.stringConf

.transform(_.toUpperCase(Locale.ROOT))

.checkValues(ParquetOutputTimestampType.values.map(_.toString))

.createWithDefault(ParquetOutputTimestampType.INT96.toString)
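
The `transform` plus `checkValues` chain above normalizes the user's input to upper case and then validates it against the enumeration. A self-contained sketch of that idea in plain Scala, without Spark's `ConfigBuilder`; `TimestampTypeValidationSketch` and `normalize` are hypothetical names introduced for illustration.

```scala
import java.util.Locale

object TimestampTypeValidationSketch {
  object ParquetOutputTimestampType extends Enumeration {
    val INT96, TIMESTAMP_MICROS, TIMESTAMP_MILLIS = Value
  }

  // Hypothetical helper mirroring the two steps: upper-case, then membership check.
  def normalize(raw: String): Either[String, String] = {
    val upper = raw.toUpperCase(Locale.ROOT)
    val valid = ParquetOutputTimestampType.values.map(_.toString)
    if (valid.contains(upper)) Right(upper)
    else Left(s"'$raw' is not one of ${valid.mkString(", ")}")
  }
}
```

Because of the upper-casing step, `timestamp_micros` and `TIMESTAMP_MICROS` are treated as the same setting, while an unknown name is rejected.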

val PARQUET_COMPRESSION = buildConf("spark.sql.parquet.compression.codec")

.doc("Sets the compression codec used when writing Parquet files. If either `compression` or " +

"`parquet.compression` is specified in the table-specific options/properties, the " +

"precedence would be `compression`, `parquet.compression`, " +

"`spark.sql.parquet.compression.codec`. Acceptable values include: none, uncompressed, " +

"snappy, gzip, lzo, brotli, lz4, zstd.")

.version("1.1.1")

.stringConf

.transform(_.toLowerCase(Locale.ROOT))

.checkValues(Set("none", "uncompressed", "snappy", "gzip", "lzo", "lz4", "brotli", "zstd"))

.createWithDefault("snappy")
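
The precedence stated in the doc string (`compression`, then `parquet.compression`, then the session-level `spark.sql.parquet.compression.codec`) can be sketched as a simple lookup chain. This is an illustration only; `ParquetCodecPrecedenceSketch` and `resolveCodec` are hypothetical names, and table options are modeled as a plain `Map`.

```scala
import java.util.Locale

object ParquetCodecPrecedenceSketch {
  // Hypothetical resolver: table-level options win over the session-level setting.
  def resolveCodec(
      tableOptions: Map[String, String],
      sessionCodec: String = "snappy"): String =
    tableOptions.get("compression")
      .orElse(tableOptions.get("parquet.compression"))
      .getOrElse(sessionCodec)
      .toLowerCase(Locale.ROOT)
}
```

If both table options are present, `compression` wins; with neither present, the session default applies.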

val PARQUET_FILTER_PUSHDOWN_ENABLED = buildConf("spark.sql.parquet.filterPushdown")

.doc("Enables Parquet filter push-down optimization when set to true.")

.version("1.2.0")

.booleanConf

.createWithDefault(true)

val PARQUET_FILTER_PUSHDOWN_DATE_ENABLED = buildConf("spark.sql.parquet.filterPushdown.date")

.doc("If true, enables Parquet filter push-down optimization for Date. " +

s"This configuration only has an effect when '${PARQUET_FILTER_PUSHDOWN_ENABLED.key}' is " +

"enabled.")

.version("2.4.0")

.internal()

.booleanConf

.createWithDefault(true)

val PARQUET_FILTER_PUSHDOWN_TIMESTAMP_ENABLED =

buildConf("spark.sql.parquet.filterPushdown.timestamp")

.doc("If true, enables Parquet filter push-down optimization for Timestamp. " +

s"This configuration only has an effect when '${PARQUET_FILTER_PUSHDOWN_ENABLED.key}' is " +

"enabled and Timestamp stored as TIMESTAMP_MICROS or TIMESTAMP_MILLIS type.")

.version("2.4.0")

.internal()

.booleanConf

.createWithDefault(true)

val PARQUET_FILTER_PUSHDOWN_DECIMAL_ENABLED =

buildConf("spark.sql.parquet.filterPushdown.decimal")

.doc("If true, enables Parquet filter push-down optimization for Decimal. " +

s"This configuration only has an effect when '${PARQUET_FILTER_PUSHDOWN_ENABLED.key}' is " +

"enabled.")

.version("2.4.0")

.internal()

.booleanConf

.createWithDefault(true)

val PARQUET_FILTER_PUSHDOWN_STRING_STARTSWITH_ENABLED =

buildConf("spark.sql.parquet.filterPushdown.string.startsWith")

.doc("If true, enables Parquet filter push-down optimization for string startsWith function. " +

s"This configuration only has an effect when '${PARQUET_FILTER_PUSHDOWN_ENABLED.key}' is " +

"enabled.")

.version("2.4.0")

.internal()

.booleanConf

.createWithDefault(true)

val PARQUET_FILTER_PUSHDOWN_INFILTERTHRESHOLD =

buildConf("spark.sql.parquet.pushdown.inFilterThreshold")

.doc("For IN predicate, Parquet filter will push-down a set of OR clauses if its " +

"number of values does not exceed this threshold. Otherwise, Parquet filter will push-down " +

"a value greater than or equal to its minimum value and less than or equal to " +

"its maximum value. By setting this value to 0 this feature can be disabled. " +

s"This configuration only has an effect when '${PARQUET_FILTER_PUSHDOWN_ENABLED.key}' is " +

"enabled.")

.version("2.4.0")

.internal()

.intConf

.checkValue(threshold => threshold >= 0, "The threshold must not be negative.")

.createWithDefault(10)
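
The IN-predicate behavior described above can be sketched with a tiny filter model: up to the threshold, the values become a chain of OR-ed equality checks; beyond it, they collapse into a min/max range. This is a simplified illustration in plain Scala, not Parquet's or Spark's filter API; `InFilterThresholdSketch` and all types in it are hypothetical, and values are restricted to `Int` for brevity.

```scala
object InFilterThresholdSketch {
  sealed trait Filter
  final case class Eq(value: Int) extends Filter
  final case class Or(left: Filter, right: Filter) extends Filter
  final case class Between(min: Int, max: Int) extends Filter

  // Returns None when nothing is pushed down (empty IN list, or threshold 0).
  def pushDownIn(values: Seq[Int], threshold: Int = 10): Option[Filter] = {
    require(threshold >= 0, "The threshold must not be negative.")
    val distinct = values.distinct
    if (distinct.isEmpty || threshold == 0) None
    else if (distinct.size <= threshold) {
      // Few values: an OR chain of equality filters.
      val eqs: Seq[Filter] = distinct.map(v => Eq(v))
      Some(eqs.reduce[Filter]((l, r) => Or(l, r)))
    } else {
      // Too many values: a coarser range filter from minimum to maximum.
      Some(Between(distinct.min, distinct.max))
    }
  }
}
```

The range filter is less selective than the OR chain, so it may let through row groups that contain no matching value; it only bounds the filter's size.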

val PARQUET_AGGREGATE_PUSHDOWN_ENABLED = buildConf("spark.sql.parquet.aggregatePushdown")

.doc("If true, MAX/MIN/COUNT without filter and group by will be pushed" +

" down to Parquet for optimization. MAX/MIN/COUNT for complex types and timestamp" +

" can't be pushed down.")

.version("3.3.0")

.booleanConf

.createWithDefault(false)

val PARQUET_WRITE_LEGACY_FORMAT = buildConf("spark.sql.parquet.writeLegacyFormat")

.doc("If true, data will be written in a way of Spark 1.4 and earlier. For example, decimal " +

"values will be written in Apache Parquet's fixed-length byte array format, which other " +

"systems such as Apache Hive and Apache Impala use. If false, the newer format in Parquet " +

"will be used. For example, decimals will be written in int-based format. If Parquet " +

"output is intended for use with systems that do not support this newer format, set to true.")

.version("1.6.0")

.booleanConf

.createWithDefault(false)

val PARQUET_OUTPUT_COMMITTER_CLASS = buildConf("spark.sql.parquet.output.committer.class")

.doc("The output committer class used by Parquet. The specified class needs to be a " +

"subclass of org.apache.hadoop.mapreduce.OutputCommitter. Typically, it's also a subclass " +

"of org.apache.parquet.hadoop.ParquetOutputCommitter. If it is not, then metadata " +

"summaries will never be created, irrespective of the value of " +

"parquet.summary.metadata.level")

.version("1.5.0")

.internal()

.stringConf

.createWithDefault("org.apache.parquet.hadoop.ParquetOutputCommitter")

val PARQUET_VECTORIZED_READER_ENABLED =

buildConf("spark.sql.parquet.enableVectorizedReader")

.doc("Enables vectorized parquet decoding.")

.version("2.0.0")

.booleanConf

.createWithDefault(true)

val PARQUET_RECORD_FILTER_ENABLED = buildConf("spark.sql.parquet.recordLevelFilter.enabled")

.doc("If true, enables Parquet's native record-level filtering using the pushed down " +

"filters. " +

s"This configuration only has an effect when '${PARQUET_FILTER_PUSHDOWN_ENABLED.key}' " +

"is enabled and the vectorized reader is not used. You can ensure the vectorized reader " +

s"is not used by setting '${PARQUET_VECTORIZED_READER_ENABLED.key}' to false.")

.version("2.3.0")

.booleanConf

.createWithDefault(false)

val PARQUET_VECTORIZED_READER_BATCH_SIZE = buildConf("spark.sql.parquet.columnarReaderBatchSize")

.doc("The number of rows to include in a parquet vectorized reader batch. The number should " +

"be carefully chosen to minimize overhead and avoid OOMs in reading data.")

.version("2.4.0")

.intConf

.createWithDefault(4096)

val ORC_COMPRESSION = buildConf("spark.sql.orc.compression.codec")

.doc("Sets the compression codec used when writing ORC files. If either `compression` or " +

"`orc.compress` is specified in the table-specific options/properties, the precedence " +

"would be `compression`, `orc.compress`, `spark.sql.orc.compression.codec`. " +

"Acceptable values include: none, uncompressed, snappy, zlib, lzo, zstd, lz4.")

.version("2.3.0")

.stringConf

.transform(_.toLowerCase(Locale.ROOT))

.checkValues(Set("none", "uncompressed", "snappy", "zlib", "lzo", "zstd", "lz4"))

.createWithDefault("snappy")

val ORC_IMPLEMENTATION = buildConf("spark.sql.orc.impl")

.doc("When native, use the native version of ORC support instead of the ORC library in Hive. " +

"It is 'hive' by default prior to Spark 2.4.")

.version("2.3.0")

.internal()

.stringConf

.checkValues(Set("hive", "native"))

.createWithDefault("native")

val ORC_VECTORIZED_READER_ENABLED = buildConf("spark.sql.orc.enableVectorizedReader")

.doc("Enables vectorized orc decoding.")

.version("2.3.0")

.booleanConf

.createWithDefault(true)

val ORC_VECTORIZED_READER_BATCH_SIZE = buildConf("spark.sql.orc.columnarReaderBatchSize")

.doc("The number of rows to include in an orc vectorized reader batch. The number should " +

"be carefully chosen to minimize overhead and avoid OOMs in reading data.")

.version("2.4.0")

.intConf

.createWithDefault(4096)

val ORC_VECTORIZED_READER_NESTED_COLUMN_ENABLED =

buildConf("spark.sql.orc.enableNestedColumnVectorizedReader")

.doc("Enables vectorized orc decoding for nested column.")

.version("3.2.0")

.booleanConf

.createWithDefault(false)

val ORC_FILTER_PUSHDOWN_ENABLED = buildConf("spark.sql.orc.filterPushdown")

.doc("When true, enable filter pushdown for ORC files.")

.version("1.4.0")

.booleanConf

.createWithDefault(true)

val ORC_AGGREGATE_PUSHDOWN_ENABLED = buildConf("spark.sql.orc.aggregatePushdown")

.doc("If true, aggregates will be pushed down to ORC for optimization. Support MIN, MAX and " +

"COUNT as aggregate expression. For MIN/MAX, support boolean, integer, float and date " +

"type. For COUNT, support all data types.")

.version("3.3.0")

.booleanConf

.createWithDefault(false)

val ORC_SCHEMA_MERGING_ENABLED = buildConf("spark.sql.orc.mergeSchema")

.doc("When true, the Orc data source merges schemas collected from all data files, " +

"otherwise the schema is picked from a random data file.")

.version("3.0.0")

.booleanConf

.createWithDefault(false)

val HIVE_VERIFY_PARTITION_PATH = buildConf("spark.sql.hive.verifyPartitionPath")

.doc("When true, check all the partition paths under the table\'s root directory " +

"when reading data stored in HDFS. This configuration will be deprecated in the future " +

s"releases and replaced by ${SPARK_IGNORE_MISSING_FILES.key}.")

.version("1.4.0")

.booleanConf

.createWithDefault(false)

val HIVE_METASTORE_PARTITION_PRUNING =

buildConf("spark.sql.hive.metastorePartitionPruning")

.doc("When true, some predicates will be pushed down into the Hive metastore so that " +

"unmatching partitions can be eliminated earlier.")

.version("1.5.0")

.booleanConf

.createWithDefault(true)

val HIVE_METASTORE_PARTITION_PRUNING_INSET_THRESHOLD =

buildConf("spark.sql.hive.metastorePartitionPruningInSetThreshold")

.doc("The threshold of set size for InSet predicate when pruning partitions through Hive " +

"Metastore. When the set size exceeds the threshold, we rewrite the InSet predicate " +

"to be greater than or equal to the minimum value in set and less than or equal to the " +

"maximum value in set. Larger values may cause Hive Metastore stack overflow. But for " +

"InSet inside Not with values exceeding the threshold, we won't push it to Hive Metastore."

)

.version("3.1.0")

.internal()

.intConf

.checkValue(_ > 0, "The value of metastorePartitionPruningInSetThreshold must be positive")

.createWithDefault(1000)

val HIVE_METASTORE_PARTITION_PRUNING_FALLBACK_ON_EXCEPTION =

buildConf("spark.sql.hive.metastorePartitionPruningFallbackOnException")

.doc("Whether to fallback to get all partitions from Hive metastore and perform partition " +

"pruning on Spark client side, when encountering MetaException from the metastore. Note " +

"that Spark query performance may degrade if this is enabled and there are many " +

"partitions to be listed. If this is disabled, Spark will fail the query instead.")

.version("3.3.0")

.booleanConf

.createWithDefault(false)

val HIVE_METASTORE_PARTITION_PRUNING_FAST_FALLBACK =

buildConf("spark.sql.hive.metastorePartitionPruningFastFallback")

.doc("When this config is enabled, if the predicates are not supported by Hive or Spark " +

"does fallback due to encountering MetaException from the metastore, " +

"Spark will instead prune partitions by getting the partition names first " +

"and then evaluating the filter expressions on the client side. " +

"Note that predicates with TimeZoneAwareExpression are not supported.")

.version("3.3.0")

.booleanConf

.createWithDefault(false)

val HIVE_MANAGE_FILESOURCE_PARTITIONS =

buildConf("spark.sql.hive.manageFilesourcePartitions")

.doc("When true, enable metastore partition management for file source tables as well. " +

"This includes both datasource and converted Hive tables. When partition management " +

"is enabled, datasource tables store partition in the Hive metastore, and use the " +

s"metastore to prune partitions during query planning when " +

s"${HIVE_METASTORE_PARTITION_PRUNING.key} is set to true.")

.version("2.1.1")

.booleanConf

.createWithDefault(true)

val HIVE_FILESOURCE_PARTITION_FILE_CACHE_SIZE =

buildConf("spark.sql.hive.filesourcePartitionFileCacheSize")

.doc("When nonzero, enable caching of partition file metadata in memory. All tables share " +

"a cache that can use up to specified num bytes for file metadata. This conf only " +

"has an effect when hive filesource partition management is enabled.")

.version("2.1.1")

.longConf

.createWithDefault(250 * 1024 * 1024)

object HiveCaseSensitiveInferenceMode extends Enumeration {

val INFER_AND_SAVE, INFER_ONLY, NEVER_INFER = Value

}

val HIVE_CASE_SENSITIVE_INFERENCE = buildConf("spark.sql.hive.caseSensitiveInferenceMode")

.internal()

.doc("Sets the action to take when a case-sensitive schema cannot be read from a Hive Serde " +

"table's properties when reading the table with Spark native data sources. Valid options " +

"include INFER_AND_SAVE (infer the case-sensitive schema from the underlying data files " +

"and write it back to the table properties), INFER_ONLY (infer the schema but don't " +

"attempt to write it to the table properties) and NEVER_INFER (the default mode-- fallback " +

"to using the case-insensitive metastore schema instead of inferring).")

.version("2.1.1")

.stringConf

.transform(_.toUpperCase(Locale.ROOT))

.checkValues(HiveCaseSensitiveInferenceMode.values.map(_.toString))

.createWithDefault(HiveCaseSensitiveInferenceMode.NEVER_INFER.toString)

val HIVE_TABLE_PROPERTY_LENGTH_THRESHOLD =

buildConf("spark.sql.hive.tablePropertyLengthThreshold")

.internal()

.doc("The maximum length allowed in a single cell when storing Spark-specific information " +

"in Hive's metastore as table properties. Currently it covers 2 things: the schema's " +

"JSON string, the histogram of column statistics.")

.version("3.2.0")

.intConf

.createOptional

val OPTIMIZER_METADATA_ONLY = buildConf("spark.sql.optimizer.metadataOnly")

.internal()

.doc("When true, enable the metadata-only query optimization that uses the table's metadata " +

"to produce the partition columns instead of table scans. It applies when all the columns " +

"scanned are partition columns and the query has an aggregate operator that satisfies " +

"distinct semantics. By default the optimization is disabled, and deprecated as of Spark " +

"3.0 since it may return incorrect results when the files are empty, see also SPARK-26709. " +

"It will be removed in the future releases. If you must use, use 'SparkSessionExtensions' " +

"instead to inject it as a custom rule.")

.version("2.1.1")

.booleanConf

.createWithDefault(false)

val COLUMN_NAME_OF_CORRUPT_RECORD = buildConf("spark.sql.columnNameOfCorruptRecord")

.doc("The name of internal column for storing raw/un-parsed JSON and CSV records that fail " +

"to parse.")

.version("1.2.0")

.stringConf

.createWithDefault("_corrupt_record")

val BROADCAST_TIMEOUT = buildConf("spark.sql.broadcastTimeout")

.doc("Timeout in seconds for the broadcast wait time in broadcast joins.")

.version("1.3.0")

.timeConf(TimeUnit.SECONDS)

.createWithDefaultString(s"${5 * 60}")
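
// Usage sketch (editor's illustration, not part of SQLConf). It assumes an active
// SparkSession named `spark`, which is not defined in this file.
//   spark.conf.set("spark.sql.broadcastTimeout", "600")    // a bare number is read as seconds
//   spark.conf.set("spark.sql.broadcastTimeout", "10min")  // a time-suffixed string should also parse via timeConf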

// This is only used for the thriftserver

val THRIFTSERVER_POOL = buildConf("spark.sql.thriftserver.scheduler.pool")

.doc("Set a Fair Scheduler pool for a JDBC client session.")

.version("1.1.1")

.stringConf

.createOptional

val THRIFTSERVER_INCREMENTAL_COLLECT =

buildConf("spark.sql.thriftServer.incrementalCollect")

.internal()

.doc("When true, enable incremental collection for execution in Thrift Server.")

.version("2.0.3")

.booleanConf

.createWithDefault(false)

val THRIFTSERVER_FORCE_CANCEL =

buildConf("spark.sql.thriftServer.interruptOnCancel")

.doc("When true, all running tasks will be interrupted if one cancels a query. " +

"When false, all running tasks will remain until finished.")

.version("3.2.0")

.booleanConf

.createWithDefault(false)

val THRIFTSERVER_QUERY_TIMEOUT =

buildConf("spark.sql.thriftServer.queryTimeout")

.doc("Set a query duration timeout in seconds in Thrift Server. If the timeout is set to " +

"a positive value, a running query will be cancelled automatically when the timeout is " +

"exceeded, otherwise the query continues to run till completion. If timeout values are " +

"set for each statement via `java.sql.Statement.setQueryTimeout` and they are smaller " +

"than this configuration value, they take precedence. If you set this timeout and prefer " +

"to cancel the queries right away without waiting for tasks to finish, consider enabling " +

s"${THRIFTSERVER_FORCE_CANCEL.key} together.")

.version("3.1.0")

.timeConf(TimeUnit.SECONDS)

.createWithDefault(0L)
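
// Reading of the doc text above (editor's illustration; the 60s and 10s values are hypothetical).
// With spark.sql.thriftServer.queryTimeout set to 60:
//   statement.setQueryTimeout(10)     -> the statement's own 10s limit applies, since it is smaller than 60s
//   no per-statement timeout is set   -> the server-wide 60s limit applies
// With the default of 0, no server-wide timeout is enforced.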

val THRIFTSERVER_UI_STATEMENT_LIMIT =

buildConf("spark.sql.thriftserver.ui.retainedStatements")

.doc("The number of SQL statements kept in the JDBC/ODBC web UI history.")

.version("1.4.0")

.intConf

.createWithDefault(200)

val THRIFTSERVER_UI_SESSION_LIMIT = buildConf("spark.sql.thriftserver.ui.retainedSessions")

.doc("The number of SQL client sessions kept in the JDBC/ODBC web UI history.")

.version("1.4.0")

.intConf

.createWithDefault(200)

// This is used to set the default data source

val DEFAULT_DATA_SOURCE_NAME = buildConf("spark.sql.sources.default")

.doc("The default data source to use in input/output.")

.version("1.3.0")

.stringConf

.createWithDefault("parquet")

val CONVERT_CTAS = buildConf("spark.sql.hive.convertCTAS")

.internal()

.doc("When true, a table created by a Hive CTAS statement (no USING clause) " +

"without specifying any storage property will be converted to a data source table, " +

s"using the data source set by ${DEFAULT_DATA_SOURCE_NAME.key}.")

.version("2.0.0")

.booleanConf

.createWithDefault(false)

val GATHER_FASTSTAT = buildConf("spark.sql.hive.gatherFastStats")

.internal()

.doc("When true, fast stats (number of files and total size of all files) will be gathered" +

" in parallel while repairing table partitions to avoid the sequential listing in Hive" +

" metastore.")

.version("2.0.1")

.booleanConf

.createWithDefault(true)

val PARTITION_COLUMN_TYPE_INFERENCE =

buildConf("spark.sql.sources.partitionColumnTypeInference.enabled")

.doc("When true, automatically infer the data types for partitioned columns.")

.version("1.5.0")

.booleanConf

.createWithDefault(true)

val BUCKETING_ENABLED = buildConf("spark.sql.sources.bucketing.enabled")

.doc("When false, we will treat bucketed tables as normal tables.")

.version("2.0.0")

.booleanConf

.createWithDefault(true)

val BUCKETING_MAX_BUCKETS = buildConf("spark.sql.sources.bucketing.maxBuckets")

.doc("The maximum number of buckets allowed.")

.version("2.4.0")

.intConf

.checkValue(_ > 0, "the value of spark.sql.sources.bucketing.maxBuckets must be greater than 0")

.createWithDefault(100000)

val AUTO_BUCKETED_SCAN_ENABLED =

buildConf("spark.sql.sources.bucketing.autoBucketedScan.enabled")

.doc("When true, decide whether to do bucketed scan on input tables based on query plan " +

"automatically. Do not use bucketed scan if 1. query does not have operators to utilize " +

"bucketing (e.g. join, group-by, etc), or 2. there's an exchange operator between these " +

s"operators and table scan. Note when '${BUCKETING_ENABLED.key}' is set to " +

"false, this configuration does not take any effect.")

.version("3.1.0")

.booleanConf

.createWithDefault(true)

val CAN_CHANGE_CACHED_PLAN_OUTPUT_PARTITIONING =

buildConf("spark.sql.optimizer.canChangeCachedPlanOutputPartitioning")

.internal()

.doc("Whether to forcibly enable some optimization rules that can change the output " +

"partitioning of a cached query when executing it for caching. If it is set to true, " +

"queries may need an extra shuffle to read the cached data. This configuration is " +

"disabled by default. Currently, the optimization rules enabled by this configuration " +

s"are ${ADAPTIVE_EXECUTION_ENABLED.key} and ${AUTO_BUCKETED_SCAN_ENABLED.key}.")

.version("3.2.0")

.booleanConf

.createWithDefault(false)

val CROSS_JOINS_ENABLED = buildConf("spark.sql.crossJoin.enabled")

.internal()

.doc("When false, we will throw an error if a query contains a cartesian product without " +

"explicit CROSS JOIN syntax.")

.version("2.0.0")

.booleanConf

.createWithDefault(true)

val ORDER_BY_ORDINAL = buildConf("spark.sql.orderByOrdinal")

.doc("When true, the ordinal numbers are treated as the position in the select list. " +

"When false, the ordinal numbers in order/sort by clause are ignored.")

.version("2.0.0")

.booleanConf

.createWithDefault(true)

val GROUP_BY_ORDINAL = buildConf("spark.sql.groupByOrdinal")

.doc("When true, the ordinal numbers in group by clauses are treated as the position " +

"in the select list. When false, the ordinal numbers are ignored.")

.version("2.0.0")

.booleanConf

.createWithDefault(true)

val GROUP_BY_ALIASES = buildConf("spark.sql.groupByAliases")

.doc("When true, aliases in a select list can be used in group by clauses. When false, " +

"an analysis exception is thrown in the case.")

.version("2.2.0")

.booleanConf

.createWithDefault(true)
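
// Usage sketch for the three settings above (editor's illustration; the table `sales`
// and its columns are hypothetical). With the defaults (all true):
//   SELECT region, sum(amount) FROM sales GROUP BY 1 ORDER BY 2   -- 1 and 2 are select-list positions
//   SELECT upper(region) AS r, sum(amount) FROM sales GROUP BY r  -- the alias `r` is allowed in GROUP BY
// With spark.sql.groupByAliases set to false, the second query fails with an analysis exception.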

// The output committer class used by data sources. The specified class needs to be a

// subclass of org.apache.hadoop.mapreduce.OutputCommitter.

val OUTPUT_COMMITTER_CLASS = buildConf("spark.sql.sources.outputCommitterClass")

.version("1.4.0")

.internal()

.stringConf

.createOptional

val FILE_COMMIT_PROTOCOL_CLASS =

buildConf("spark.sql.sources.commitProtocolClass")

.version("2.1.1")

.internal()

.stringConf

.createWithDefault(

"org.apache.spark.sql.execution.datasources.SQLHadoopMapReduceCommitProtocol")

val PARALLEL_PARTITION_DISCOVERY_THRESHOLD =

buildConf("spark.sql.sources.parallelPartitionDiscovery.threshold")

.doc("The maximum number of paths allowed for listing files at driver side. If the number " +

"of detected paths exceeds this value during partition discovery, it tries to list the " +

"files with another Spark distributed job. This configuration is effective only when " +

"using file-based sources such as Parquet, JSON and ORC.")

.version("1.5.0")

.intConf

.checkValue(parallel => parallel >= 0, "The maximum number of paths allowed for listing " +

"files at driver side must not be negative")

.createWithDefault(32)

val PARALLEL_PARTITION_DISCOVERY_PARALLELISM =

buildConf("spark.sql.sources.parallelPartitionDiscovery.parallelism")

.doc("The parallelism used to list a collection of paths recursively. Set the " +

"number to prevent file listing from generating too many tasks.")

.version("2.1.1")

.internal()

.intConf

.createWithDefault(10000)

val IGNORE_DATA_LOCALITY =

buildConf("spark.sql.sources.ignoreDataLocality")

.doc("If true, Spark will not fetch the block locations for each file on " +

"listing files. This speeds up file listing, but the scheduler cannot " +

"schedule tasks to take advantage of data locality. It can be particularly " +

"useful if data is read from a remote cluster so the scheduler could never " +

"take advantage of locality anyway.")

.version("3.0.0")

.internal()

.booleanConf

.createWithDefault(false)

// Whether to automatically resolve ambiguity in join conditions for self-joins.