Preface

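I've recently been going back over one of the Python basics, regular expressions. Regex is powerful but a bit dry, so I decided to put it to use on something fun.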

00xx01---Proxy IPs

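There are plenty of free proxy IPs out there. Saving them one at a time is so tedious it is practically impossible, so let's scrape them with Python instead.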

```python
import requests
import re

# Pretend to be a browser to get past basic anti-scraping checks
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36"}

url = "https://www.xicidaili.com/nn/1"

response = requests.get(url, headers=headers)
# print(response.text)

html = response.text
# print(html)

# \d matches a digit and \. a literal dot; re.S lets "." also match newlines
ips = re.findall(r"<td>(\d+\.\d+\.\d+\.\d+)</td>", html, re.S)
ports = re.findall(r"<td>(\d+)</td>", html, re.S)
print(ips)
print(ports)
```
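Why the backslashes matter: without them, `d+` matches one or more letter "d" characters and a bare `.` matches any character, so the patterns would not find the numbers in the page. Here is a small offline check. The HTML is a made-up row shaped like the proxy table, not real output from the site, and the addresses are documentation placeholders.

```python
import re

# Hypothetical HTML resembling one row of the proxy table (not real site output)
sample_html = """
<tr>
  <td>203.0.113.5</td>
  <td>8080</td>
  <td>HTTP</td>
</tr>
"""

ip_pattern = r"<td>(\d+\.\d+\.\d+\.\d+)</td>"
port_pattern = r"<td>(\d+)</td>"

ips = re.findall(ip_pattern, sample_html)
ports = re.findall(port_pattern, sample_html)
print(ips)    # ['203.0.113.5']
print(ports)  # ['8080']

# Without the backslash, "d+" means one or more letter "d" characters
print(re.findall(r"<td>(d+)</td>", sample_html))  # []
```

The port pattern does not pick up the IP cell because `\d+` has to be followed directly by `</td>`, and the dot in the IP address blocks that.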

00xx02---Joining IPs and ports

1 import requests

2 import re

3

4 #防反爬

5 headers={"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36" }

6

7 url = "https://www.xicidaili.com/nn/1"

8

9 response = requests.get(url,headers=headers)

10 # print(response.text)

11

12 html = response.text

13 # print(html)

14

15 #re.S忽略换行的干扰

16 ips = re.findall("<td>(d+.d+.d+.d+)</td>",html,re.S)

17 ports = re.findall(("<td>(d+)</td>"),html,re.S)

18 #print(ips)

19 #print(ports)

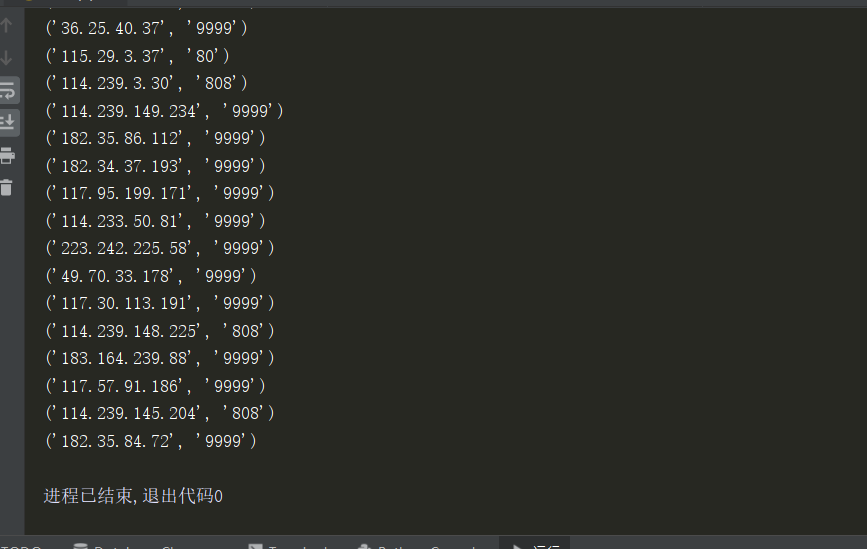

20 for ip in zip(ips,ports ): #提取拼接ip和端口

21 print(ip)
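`zip` pairs the two lists by position and silently stops at the shorter one. If another cell on the page ever holds a bare number, the IPs and ports fall out of step. One alternative is to capture both values with a single pattern, so `findall` returns `(ip, port)` tuples directly. This sketch assumes the port cell directly follows the IP cell, as in the made-up rows below. The post does not show the site's real markup, so check the page source before relying on that layout.

```python
import re

# Hypothetical table HTML: the port <td> directly follows the IP <td>
sample_html = """
<tr><td>203.0.113.5</td>
    <td>8080</td><td>HTTP</td></tr>
<tr><td>198.51.100.7</td>
    <td>3128</td><td>HTTPS</td></tr>
"""

# Two capture groups, so findall returns a list of (ip, port) tuples;
# \s* allows whitespace and newlines between the two cells
row_pattern = r"<td>(\d+\.\d+\.\d+\.\d+)</td>\s*<td>(\d+)</td>"
pairs = re.findall(row_pattern, sample_html)
for ip, port in pairs:
    print(ip + ":" + port)
```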

00xx03---Checking whether an IP works

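Approach: send a request to a website through the IP and port (Baidu will do). If the site responds in time, the proxy is usable.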

```python
import requests
import re

# Pretend to be a browser to get past basic anti-scraping checks
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36"}
for i in range(1, 1000):
    # Listing page i
    url = "https://www.xicidaili.com/nn/{}".format(i)

    response = requests.get(url, headers=headers)
    # print(response.text)

    html = response.text

    # \d matches a digit and \. a literal dot; re.S lets "." also match newlines
    ips = re.findall(r"<td>(\d+\.\d+\.\d+\.\d+)</td>", html, re.S)
    ports = re.findall(r"<td>(\d+)</td>", html, re.S)
    # print(ips)
    # print(ports)
    for ip in zip(ips, ports):  # pair each IP with its port
        proxies = {
            "http": "http://" + ip[0] + ":" + ip[1],
            "https": "http://" + ip[0] + ":" + ip[1],  # HTTPS is tunnelled through the same HTTP proxy
        }
        try:
            # Give up if the site does not respond within 3 seconds
            res = requests.get("http://www.baidu.com", proxies=proxies, timeout=3)
            res.raise_for_status()  # count HTTP error responses as failures too
            print(ip, "works")
            with open("ip.text", mode="a+") as f:
                f.write(":".join(ip))  # append ip:port to ip.text
                f.write("\n")  # newline
        except requests.RequestException:  # connection error, timeout, or HTTP error
            print(ip, "does not work")
```
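The check can be pulled out into a reusable function. This sketch swaps in the standard library's `urllib.request` (with `ProxyHandler`) in place of `requests`, so it runs without third-party packages. The name `check_proxy` and its defaults are hypothetical. They mirror the script: Baidu over plain HTTP and a 3-second timeout.

```python
import http.client
import urllib.request


def check_proxy(ip, port, test_url="http://www.baidu.com", timeout=3):
    """Hypothetical helper: return True if test_url loads through the proxy ip:port."""
    proxy = "http://{}:{}".format(ip, port)
    # Route both http and https URLs through the same HTTP proxy
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as resp:
            # HTTP error statuses raise HTTPError, so getting here usually means success
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException, ValueError):
        # URLError and socket timeouts are OSError subclasses; HTTPException covers
        # garbled replies from a broken proxy; ValueError covers malformed addresses
        return False
```

For example, on a machine with nothing listening on local port 9, `check_proxy("127.0.0.1", "9")` should return `False` because the connection is refused.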

00xx04---Writing to a text file

```python
import requests
import re

# Pretend to be a browser to get past basic anti-scraping checks
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36"}

url = "https://www.xicidaili.com/nn/1"

response = requests.get(url, headers=headers)
# print(response.text)

html = response.text
# print(html)

# \d matches a digit and \. a literal dot; re.S lets "." also match newlines
ips = re.findall(r"<td>(\d+\.\d+\.\d+\.\d+)</td>", html, re.S)
ports = re.findall(r"<td>(\d+)</td>", html, re.S)
# print(ips)
# print(ports)
for ip in zip(ips, ports):  # pair each IP with its port
    print(ip)
    proxies = {
        "http": "http://" + ip[0] + ":" + ip[1],
        "https": "http://" + ip[0] + ":" + ip[1],  # HTTPS is tunnelled through the same HTTP proxy
    }
    try:
        # Give up if the site does not respond within 3 seconds
        res = requests.get("http://www.baidu.com", proxies=proxies, timeout=3)
        res.raise_for_status()  # count HTTP error responses as failures too
        print(ip, "works")
        with open("ip.text", mode="a+") as f:
            f.write(":".join(ip))  # append ip:port to ip.text
            f.write("\n")  # newline
    except requests.RequestException:  # connection error, timeout, or HTTP error
        print(ip, "does not work")
```
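The file is opened in append mode, so each rerun of the script adds the same proxies again. Below is a small sketch for writing `ip.text` and reading it back while skipping blank lines and duplicates. `save_proxy` and `load_proxies` are hypothetical helper names. The one-`ip:port`-per-line format matches what the code above writes.

```python
import os
import tempfile


def save_proxy(ip, port, path="ip.text"):
    """Append one working proxy as an 'ip:port' line."""
    with open(path, mode="a", encoding="utf-8") as f:
        f.write(ip + ":" + port + "\n")


def load_proxies(path="ip.text"):
    """Read 'ip:port' lines back as (ip, port) tuples, dropping blanks and repeats."""
    seen = set()
    proxies = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line or line in seen:
                continue
            seen.add(line)
            ip, port = line.rsplit(":", 1)
            proxies.append((ip, port))
    return proxies


# Demo against a throwaway file so a real ip.text is not touched
demo_path = os.path.join(tempfile.mkdtemp(), "ip.text")
save_proxy("203.0.113.5", "8080", demo_path)
save_proxy("203.0.113.5", "8080", demo_path)  # duplicate, as if from a second run
save_proxy("198.51.100.7", "3128", demo_path)
print(load_proxies(demo_path))  # [('203.0.113.5', '8080'), ('198.51.100.7', '3128')]
```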

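After scraping one page, only a handful of the IPs worked. There are more than 3,000 pages, so doing this by hand is out of the question.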

00xx05---Batch scraping

```python
#!/usr/bin/env python3
# coding:utf-8
# 2019/11/18 22:38
# lanxing
import requests
import re

# Pretend to be a browser to get past basic anti-scraping checks
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36"}
for i in range(1, 3001):  # crawl 3000 listing pages (1 to 3000)
    # Listing page i
    url = "https://www.xicidaili.com/nn/{}".format(i)

    response = requests.get(url, headers=headers)
    # print(response.text)

    html = response.text
    # print(html)

    # \d matches a digit and \. a literal dot; re.S lets "." also match newlines
    ips = re.findall(r"<td>(\d+\.\d+\.\d+\.\d+)</td>", html, re.S)
    ports = re.findall(r"<td>(\d+)</td>", html, re.S)
    # print(ips)
    # print(ports)
    for ip in zip(ips, ports):  # pair each IP with its port
        print(ip)
        proxies = {
            "http": "http://" + ip[0] + ":" + ip[1],
            "https": "http://" + ip[0] + ":" + ip[1],  # HTTPS is tunnelled through the same HTTP proxy
        }
        try:
            # Give up if the site does not respond within 3 seconds
            res = requests.get("http://www.baidu.com", proxies=proxies, timeout=3)
            res.raise_for_status()  # count HTTP error responses as failures too
            print(ip, "works")
            with open("ip.text", mode="a+") as f:
                f.write(":".join(ip))  # append ip:port to ip.text
                f.write("\n")  # newline
        except requests.RequestException:  # connection error, timeout, or HTTP error
            print(ip, "does not work")
```
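Crawling a fixed 3000 pages keeps requesting even if the site runs out of pages, and it fires requests back to back. Below is a sketch of a gentler page loop. It pauses between pages and stops at the first page with no rows; treating an empty page as the end is an assumption, not something the post confirms about the site. `crawl_pages` and `fetch_html` are hypothetical names. The caller supplies `fetch_html`, which could wrap the `requests.get` call from the script. The pattern captures IP and port together, assuming the port cell directly follows the IP cell.

```python
import re
import time

# Assumes the port <td> comes right after the IP <td>
ROW_PATTERN = r"<td>(\d+\.\d+\.\d+\.\d+)</td>\s*<td>(\d+)</td>"


def crawl_pages(fetch_html, max_pages=3000, delay=1.0):
    """Yield (ip, port) pairs page by page, stopping at the first empty page.

    fetch_html(page_number) -> str is supplied by the caller (hypothetical).
    """
    for page in range(1, max_pages + 1):
        html = fetch_html(page)
        pairs = re.findall(ROW_PATTERN, html)
        if not pairs:
            # Assumption: a page with no rows means we ran past the last page
            break
        yield from pairs
        time.sleep(delay)  # pause between pages to avoid hammering the site


# Fake fetcher standing in for the real site: two pages of data, then nothing
fake_pages = {
    1: "<td>203.0.113.5</td><td>8080</td>",
    2: "<td>198.51.100.7</td><td>3128</td>",
}
result = list(crawl_pages(lambda page: fake_pages.get(page, ""), delay=0))
print(result)  # [('203.0.113.5', '8080'), ('198.51.100.7', '3128')]
```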

00xx06---Wrapping up

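Ha, the crawl is pretty slow, which is to be expected since it runs on a single thread. To speed it up, you could try crawling and checking with multiple threads.

I'll come back and improve the code later.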
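Multithreading suits this workload, because almost all of the time is spent waiting for network replies rather than using the CPU. Below is a sketch with `concurrent.futures.ThreadPoolExecutor`. `check_all`, `fake_check`, and the worker count of 20 are illustrative assumptions. In real use, `check` would test one proxy by sending a request through it, as the scripts above do.

```python
from concurrent.futures import ThreadPoolExecutor


def check_all(pairs, check, max_workers=20):
    """Run check(ip, port) for every pair on a thread pool; keep the ones that pass."""
    pairs = list(pairs)  # iterated twice below, so materialize any generator
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # executor.map yields results in input order, so they line up with pairs
        results = executor.map(lambda pair: check(*pair), pairs)
        return [pair for pair, ok in zip(pairs, results) if ok]


# Stand-in check for demonstration only: pretend proxies on port 8080 work
def fake_check(ip, port):
    return port == "8080"


candidates = [("203.0.113.5", "8080"), ("198.51.100.7", "3128"), ("192.0.2.9", "8080")]
print(check_all(candidates, fake_check))  # [('203.0.113.5', '8080'), ('192.0.2.9', '8080')]
```

Threads only help with the waiting. Each proxy still has up to its full timeout to answer, so a larger pool mainly means more of those waits overlap.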