Overall flow

Main post-processing effects

- Ambient Occlusion

- Anti-aliasing

- Auto-exposure

- Bloom

- Chromatic Aberration

- Color Grading

- Deferred Fog

- Depth of Field

- Grain

- Lens Distortion

- Motion Blur

- Screen-space reflections

- Vignette

PostProcess effect processing order

- Anti-aliasing

- Blur

Builtins:

DepthOfField

Uber Effects:

- AutoExposure

- LensDistortion

- ChromaticAberration

- Bloom

- Vignette

- Grain

- ColorGrading(tonemapping)

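Key classes

PostProcessResources: binds the shader resources

PostProcessRenderContext: caches the important parameters

PostProcessLayer: the class that controls post-processing rendering

PostProcessEffectRenderer: every post-processing effect inherits from it and implements its Render method

PostProcessEffectSettings: describes an effect's inspector panel and properties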

An effect generally consists of

- its own shader

- a UI description class (CustomEffect, inheriting from PostProcessEffectSettings)

- a rendering class (CustomEffectRender, inheriting from PostProcessEffectRenderer)

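Custom post-processing

Custom effects can be added at three injection points: BeforeTransparent, BeforeStack, and AfterStack. Effects of this kind can be added without modifying the code in the PostProcessing package.

To add an effect to the Builtin stage, the usual place is PostProcessing/Runtime/Effects. Effects of this kind may require changes to the code or shaders in the PostProcessing package.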

Core functions

The entry point for post-processing is PostProcessLayer::OnPreRender

Core render control logic

1- BuildCommandBuffers

PostProcessLayer::BuildCommandBuffers

{

int tempRt = m_TargetPool.Get();

context.GetScreenSpaceTemporaryRT(m_LegacyCmdBuffer, tempRt, 0, sourceFormat, RenderTextureReadWrite.sRGB);

m_LegacyCmdBuffer.BuiltinBlit(cameraTarget, tempRt, RuntimeUtilities.copyStdMaterial, stopNaNPropagation ? 1 : 0);

context.command = m_LegacyCmdBuffer;

context.source = tempRt;

context.destination = cameraTarget;

Render(context);

...

}

This function copies the camera target into a temporary RT that serves as the source, then enters the render stage, Render(context).

2- Render(context)

if (hasBeforeStackEffects)

lastTarget = RenderInjectionPoint(PostProcessEvent.BeforeStack, context, "BeforeStack", lastTarget);

// Builtin stack (the built-in effects)

lastTarget = RenderBuiltins(context, !needsFinalPass, lastTarget);

// After the builtin stack but before the final pass (before FXAA & Dithering)

if (hasAfterStackEffects)

lastTarget = RenderInjectionPoint(PostProcessEvent.AfterStack, context, "AfterStack", lastTarget);

// And close with the final pass

if (needsFinalPass)

RenderFinalPass(context, lastTarget);

The most important parts here are RenderBuiltins and RenderFinalPass.
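
The ordering in Render(context) can be summarised in a few lines. The following is a minimal Python sketch (Python is used only so it can run outside Unity); the function name plan_render and the stage labels are illustrative and are not part of the PostProcessing package. It also reflects that RenderBuiltins is called with isFinalPass set to !needsFinalPass.

```python
def plan_render(has_before_stack, has_after_stack, needs_final_pass):
    """Return the ordered list of stages that Render(context) would run."""
    stages = []
    if has_before_stack:
        stages.append("BeforeStack")
    # RenderBuiltins always runs; it is the last pass only when no
    # separate final pass is needed (isFinalPass = !needsFinalPass).
    stages.append("Builtins" if needs_final_pass else "Builtins(final)")
    if has_after_stack:
        stages.append("AfterStack")
    if needs_final_pass:
        stages.append("FinalPass")
    return stages


print(plan_render(True, False, True))
print(plan_render(False, False, False))
```

When no final pass is needed, the builtin stack writes straight to the final destination; otherwise it writes to an intermediate target that the later stages consume.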

3- RenderBuiltins

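This is the key logic of post-processing.

Most of the effects in the middle only compute parameters and produce various textures.

Finally, the Uber shader blends all the effects into context.destination.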

int RenderBuiltins(PostProcessRenderContext context, bool isFinalPass, int releaseTargetAfterUse)

{

...

context.uberSheet = uberSheet;//context.resources.shaders.uber

....

cmd.BeginSample("BuiltinStack");

int tempTarget = -1;

var finalDestination = context.destination; // keep the final RT for later

if (!isFinalPass)

{

// Render to an intermediate target as this won't be the final pass

tempTarget = m_TargetPool.Get();

context.GetScreenSpaceTemporaryRT(cmd, tempTarget, 0, context.sourceFormat);

context.destination = tempTarget; // temporary RT used as a temporary destination

...

}

....

int depthOfFieldTarget = RenderEffect<DepthOfField>(context, true);

..

int motionBlurTarget = RenderEffect<MotionBlur>(context, true);

..

if (ShouldGenerateLogHistogram(context))

m_LogHistogram.Generate(context);

// Uber effects

RenderEffect<AutoExposure>(context);

uberSheet.properties.SetTexture(ShaderIDs.AutoExposureTex, context.autoExposureTexture);

RenderEffect<LensDistortion>(context);

RenderEffect<ChromaticAberration>(context);

RenderEffect<Bloom>(context);

RenderEffect<Vignette>(context);

RenderEffect<Grain>(context);

if (!breakBeforeColorGrading)

RenderEffect<ColorGrading>(context);

if (isFinalPass)

{

uberSheet.EnableKeyword("FINALPASS");

dithering.Render(context);

ApplyFlip(context, uberSheet.properties);

}

else

{

ApplyDefaultFlip(uberSheet.properties);

}

// Blend the results of the preceding effects with the uber shader

cmd.BlitFullscreenTriangle(context.source, context.destination, uberSheet, 0);

context.source = context.destination;

context.destination = finalDestination;

...releaseRTs

cmd.EndSample("BuiltinStack");

return tempTarget;

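}

RT

Overview of the RTs used in PostProcess

Every RT that holds the rendered image uses sRGB as its RenderTextureReadWrite (RW) mode.

RTs and sRGB

When the client is configured for linear color space with HDR enabled, an RT created with RenderTextureReadWrite set to sRGB stores sRGB values: reads are automatically converted from sRGB to linear, and writes are automatically converted from linear to sRGB.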
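
To make the read/write conversion concrete, here is a minimal Python sketch of the two directions for a single channel normalized to [0, 1]. It uses the standard piecewise sRGB transfer functions; the function names are illustrative, and it is an assumption that the hardware conversion matches this formula exactly.

```python
def srgb_to_linear(c):
    """Conversion applied when reading from an sRGB render target."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Conversion applied when writing to an sRGB render target."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1.0 / 2.4) - 0.055


for stored in (0.0, 0.25, 0.5, 1.0):
    linear = srgb_to_linear(stored)
    print(stored, round(linear, 4), round(linear_to_srgb(linear), 4))
```

A stored value of 0.5 reads back as roughly 0.214 in linear space, which is why shaders operating on such an RT see darker numbers than the stored ones.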

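Initially there is only cameraTarget, whose RenderTextureReadWrite is sRGB. Before entering the effects, a temporary RT is copied from it to serve as context.source; its RenderTextureReadWrite is also sRGB.

After Anti-aliasing, Blur, and BeforeStack, context.destination is switched to a blank temporary RT (RW is sRGB).

In the final Uber blending stage, all the effects are blended together.

RT size

RTs are obtained through PostProcessRenderContext.GetScreenSpaceTemporaryRT; their default size is stored in PostProcessRenderContext.width and PostProcessRenderContext.height.

Setting PostProcessRenderContext.m_Camera goes through the camera property setter, which is where the default RT size is computed: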

if (m_Camera.stereoEnabled)

{

#if UNITY_2017_2_OR_NEWER

var xrDesc = XRSettings.eyeTextureDesc;

width = xrDesc.width;

height = xrDesc.height;

screenWidth = XRSettings.eyeTextureWidth;

screenHeight = XRSettings.eyeTextureHeight;

#endif

}

else

{

width = m_Camera.pixelWidth;

height = m_Camera.pixelHeight;

...

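}

Apart from the few main RTs, the intermediate RTs passed to Uber as texture parameters do not necessarily have the same size as the camera RT.

Several typical ones are created with new RenderTexture, so their size and format need attention.

Effects

Effect processing order

Listed here once more: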

- Anti-aliasing

- Blur

Builtins:

DepthOfField

Uber Effects:

- AutoExposure

- LensDistortion

- ChromaticAberration

- Bloom

- Vignette

- Grain

- ColorGrading(tonemapping)

Uber Effects

Description

The Uber effects include

- AutoExposure

- LensDistortion

- ChromaticAberration

- Bloom

- Vignette

- Grain

- ColorGrading(tonemapping)

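- Uber blending stage

Each stage before the Uber blending stage generates its parameters and temporary textures according to its settings and passes them to Uber.

In addition, when a stage is enabled, its Render method also enables the corresponding keyword in Uber (for example, Vignette's Render enables "VIGNETTE"). This is how the pipeline controls whether the Uber stage executes a given effect.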
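
The keyword mechanism can be pictured with a small Python sketch. The Sheet class, the render_* helpers, and uber_branches are hypothetical stand-ins written for illustration; only the keyword names VIGNETTE and COLOR_GRADING_HDR_2D come from the code quoted in these notes.

```python
class Sheet:
    """Hypothetical stand-in for the uber property sheet."""

    def __init__(self):
        self.keywords = set()
        self.properties = {}

    def enable_keyword(self, name):
        self.keywords.add(name)


def render_vignette(sheet, enabled, color):
    # An effect's Render step: set parameters and switch on its keyword.
    if enabled:
        sheet.enable_keyword("VIGNETTE")
        sheet.properties["_Vignette_Color"] = color


def render_color_grading(sheet, enabled, lut):
    if enabled:
        sheet.enable_keyword("COLOR_GRADING_HDR_2D")
        sheet.properties["_Lut2D"] = lut


def uber_branches(sheet):
    # The uber pass only runs the branches whose keyword is enabled.
    order = ["VIGNETTE", "COLOR_GRADING_HDR_2D"]
    return [k for k in order if k in sheet.keywords]


sheet = Sheet()
render_vignette(sheet, True, (0.0, 0.0, 0.0))
render_color_grading(sheet, False, None)
print(uber_branches(sheet))
```

In the real shader the keywords select compiled variants rather than runtime branches, but the control flow seen from the C# side is the same: a disabled effect simply never enables its keyword.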

Bloom

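Description

Bloom produces a BloomTex through a series of downsample and upsample passes. This requires determining the iteration count and the size of the RT used at each iteration.

The Bloom panel has a key parameter, Anamorphic Ratio (range [-1, 1]), that determines the size of these temporary RTs.

The final BloomTex has the size of the 0th downsample, that is, (tw, th).

Important panel parameters

- threshold: the brightness separation threshold; pixels brighter than this value receive the Bloom effect

- intensity: the strength of the effect

- Anamorphic Ratio: determines the size of BloomTex and therefore, indirectly, the number of blur iterations

Bloom has three steps

- separate the brighter pixels of the source image and reduce their resolution

- apply a Gaussian blur to the separated brightness image

- composite the blurred brightness image over the source image; this step is done in the Uber stage

Determining the Bloom temporary RT size and iteration count

Formula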
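
The formula can be reconstructed from the C# code in this section. Here W and H stand for context.screenWidth and context.screenHeight, and the symbols r, r_w, r_h and ℓ are shorthand introduced for this sketch:

```latex
\begin{aligned}
r &= \operatorname{clamp}(\text{anamorphicRatio},\,-1,\,1), \qquad
r_w = \max(-r,\,0), \qquad r_h = \max(r,\,0) \\
t_w &= \left\lfloor \frac{W}{2 - r_w} \right\rfloor, \qquad
t_h = \left\lfloor \frac{H}{2 - r_h} \right\rfloor \\
\ell &= \log_2 \max(t_w,\, t_h) + \min(\text{diffusion},\, 10) - 10 \\
\text{iterations} &= \operatorname{clamp}\bigl(\lfloor \ell \rfloor,\, 1,\, k_{\text{MaxPyramidSize}}\bigr), \qquad
\text{sampleScale} = 0.5 + \ell - \lfloor \ell \rfloor
\end{aligned}
```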

Code

float ratio = Mathf.Clamp(settings.anamorphicRatio, -1, 1);

float rw = ratio < 0 ? -ratio : 0f;

float rh = ratio > 0 ? ratio : 0f;

int tw = Mathf.FloorToInt(context.screenWidth / (2f - rw));

int th = Mathf.FloorToInt(context.screenHeight / (2f - rh));

During the downsample iterations, the side lengths of the corresponding RT are halved each time.

Determining the iteration count (iterations)

int s = Mathf.Max(tw, th);

float logs = Mathf.Log(s, 2f) + Mathf.Min(settings.diffusion.value, 10f) - 10f;

int logs_i = Mathf.FloorToInt(logs);

int iterations = Mathf.Clamp(logs_i, 1, k_MaxPyramidSize);

float sampleScale = 0.5f + logs - logs_i;

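sheet.properties.SetFloat(ShaderIDs.SampleScale, sampleScale);

Downsample and Upsample

shader

- Bloom.shader: the core shader

- Sampling.hlsl: a set of sampling functions

Passes used by each stage

Downsample

Each downsample halves the RT side lengths and allocates a pair of temporary RTs with identical parameters. Their RW mode is sRGB (the code requests RenderTextureReadWrite.Default, which resolves to sRGB in a linear color space project).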
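
The sizing rules above can be checked numerically. The following Python sketch ports the size and iteration code from this section and lists the RT size used at each pyramid level. The function name bloom_pyramid is illustrative, and the value 16 for k_MaxPyramidSize and the default diffusion of 7 are assumptions, since neither value appears in these notes.

```python
import math

K_MAX_PYRAMID_SIZE = 16  # assumption: k_MaxPyramidSize is not given in the notes


def bloom_pyramid(screen_w, screen_h, anamorphic_ratio=0.0, diffusion=7.0):
    """Return (list of (width, height) per downsample level, sampleScale)."""
    ratio = max(-1.0, min(1.0, anamorphic_ratio))
    rw = -ratio if ratio < 0 else 0.0
    rh = ratio if ratio > 0 else 0.0

    # Size of the 0th downsample; this is also the final BloomTex size.
    tw = math.floor(screen_w / (2.0 - rw))
    th = math.floor(screen_h / (2.0 - rh))

    # Iteration count derived from the larger side and the diffusion setting.
    logs = math.log2(max(tw, th)) + min(diffusion, 10.0) - 10.0
    logs_i = math.floor(logs)
    iterations = max(1, min(K_MAX_PYRAMID_SIZE, logs_i))
    sample_scale = 0.5 + logs - logs_i

    sizes = []
    for _ in range(iterations):
        sizes.append((tw, th))
        tw = max(tw // 2, 1)  # side lengths are halved at every level
        th = max(th // 2, 1)
    return sizes, sample_scale


sizes, scale = bloom_pyramid(1920, 1080)
print(len(sizes), sizes, round(scale, 4))
```

For a 1920x1080 screen with a ratio of 0 this yields six levels starting at 960x540. A ratio of 1 keeps the full height (960x1080), and a ratio of -1 keeps the full width (1920x540).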

Shader passes used

- iteration 0 uses FragPrefilter13 or FragPrefilter4

- the remaining iterations use FragDownsample13 or FragDownsample4

var lastDown = context.source;

for (int i = 0; i < iterations; i++)

{

int mipDown = m_Pyramid[i].down;

int mipUp = m_Pyramid[i].up;

int pass = i == 0? (int)Pass.Prefilter13 + qualityOffset

: (int)Pass.Downsample13 + qualityOffset;

context.GetScreenSpaceTemporaryRT(cmd, mipDown, 0, context.sourceFormat, RenderTextureReadWrite.Default, FilterMode.Bilinear, tw, th);

context.GetScreenSpaceTemporaryRT(cmd, mipUp, 0, context.sourceFormat, RenderTextureReadWrite.Default, FilterMode.Bilinear, tw, th);

cmd.BlitFullscreenTriangle(lastDown, mipDown, sheet, pass);

lastDown = mipDown;

tw = Mathf.Max(tw / 2, 1);

th = Mathf.Max(th / 2, 1);

}

Upsample

int lastUp = m_Pyramid[iterations - 1].down;

for (int i = iterations - 2; i >= 0; i--)

{

int mipDown = m_Pyramid[i].down;

int mipUp = m_Pyramid[i].up;

cmd.SetGlobalTexture(ShaderIDs.BloomTex, mipDown);

cmd.BlitFullscreenTriangle(lastUp, mipUp, sheet, (int)Pass.UpsampleTent + qualityOffset);

lastUp = mipUp;

}

Uber blending stage

This stage runs only after all the Uber effects have executed, and blends the effects together.

The logic lives in Uber.shader:

half4 bloom = UpsampleTent(TEXTURE2D_PARAM(_BloomTex, sampler_BloomTex), uvDistorted, _BloomTex_TexelSize.xy, _Bloom_Settings.x);

bloom *= _Bloom_Settings.y;

dirt *= _Bloom_Settings.z;

color += bloom * half4(_Bloom_Color, 1.0);

color += dirt * bloom;

Here the bloom color is added on top of color.

Vignette

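Description

Draws focus toward the center by darkening the edges of the image.

Screenshot omitted.

There are two modes

- Classic: darkens the edges

- Masked: overlays a custom texture on the screen to achieve special effects

Most of Vignette's work is done in Uber; its Render method only sets parameters according to the settings.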
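
As a rough numerical illustration of Classic mode, the Python sketch below ports the parameter packing done in Render and the falloff computed in Uber, both of which are quoted in this section. The function names and the sample settings (intensity 0.45, smoothness 0.2, roundness 1) are illustrative choices, not defaults confirmed by these notes.

```python
def saturate(x):
    return max(0.0, min(1.0, x))


def vignette_settings(intensity, smoothness, roundness, rounded):
    """Pack the panel values the way the Render step does (Vignette_Settings)."""
    r = (1.0 - roundness) * 6.0 + roundness
    return (intensity * 3.0, smoothness * 5.0, r, 1.0 if rounded else 0.0)


def classic_vfactor(uv, center, settings, aspect):
    """Classic-mode falloff: 1 means untouched, 0 means fully vignette color."""
    x, y, z, w = settings
    dx = abs(uv[0] - center[0]) * x
    dy = abs(uv[1] - center[1]) * x
    dx *= 1.0 + (aspect - 1.0) * w  # lerp(1, aspect, w)
    dx = saturate(dx) ** z          # roundness
    dy = saturate(dy) ** z
    return saturate(1.0 - (dx * dx + dy * dy)) ** y


settings = vignette_settings(0.45, 0.2, 1.0, False)
for uv in ((0.5, 0.5), (0.75, 0.75), (1.0, 1.0)):
    print(uv, round(classic_vfactor(uv, (0.5, 0.5), settings, 16 / 9), 4))
```

The factor is 1 at the center and falls toward 0 at the corners; the shader then multiplies color.rgb by lerp(_Vignette_Color, 1, vfactor).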

var sheet = context.uberSheet;

sheet.EnableKeyword("VIGNETTE");

sheet.properties.SetColor(ShaderIDs.Vignette_Color, settings.color.value);

if (settings.mode == VignetteMode.Classic)

{

sheet.properties.SetFloat(ShaderIDs.Vignette_Mode, 0f);

sheet.properties.SetVector(ShaderIDs.Vignette_Center, settings.center.value);

float roundness = (1f - settings.roundness.value) * 6f + settings.roundness.value;

sheet.properties.SetVector(ShaderIDs.Vignette_Settings, new Vector4(settings.intensity.value * 3f, settings.smoothness.value * 5f, roundness, settings.rounded.value ? 1f : 0f));

}

else // Masked

{

sheet.properties.SetFloat(ShaderIDs.Vignette_Mode, 1f);

sheet.properties.SetTexture(ShaderIDs.Vignette_Mask, settings.mask.value);

sheet.properties.SetFloat(ShaderIDs.Vignette_Opacity, Mathf.Clamp01(settings.opacity.value));

}

Uber blending stage

if (_Vignette_Mode < 0.5)

{

half2 d = abs(uvDistorted - _Vignette_Center) * _Vignette_Settings.x;

d.x *= lerp(1.0, _ScreenParams.x / _ScreenParams.y, _Vignette_Settings.w);

d = pow(saturate(d), _Vignette_Settings.z); // Roundness

half vfactor = pow(saturate(1.0 - dot(d, d)), _Vignette_Settings.y);

color.rgb *= lerp(_Vignette_Color, (1.0).xxx, vfactor);

color.a = lerp(1.0, color.a, vfactor);

}

else

{

half vfactor = SAMPLE_TEXTURE2D(_Vignette_Mask, sampler_Vignette_Mask, uvDistorted).a;

#if !UNITY_COLORSPACE_GAMMA

{

vfactor = SRGBToLinear(vfactor);

}

#endif

half3 new_color = color.rgb * lerp(_Vignette_Color, (1.0).xxx, vfactor);

color.rgb = lerp(color.rgb, new_color, _Vignette_Opacity);

color.a = lerp(1.0, color.a, vfactor);

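}

Grain

Screenshot omitted.

Grain uses noise to simulate the graininess of old film stock; this effect is common in horror games.

Its Render method uses the algorithm in GrainBaker.shader to generate a 128x128 GrainTex, which the Uber stage blends into the final result.

Uber blending stage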

half3 grain = SAMPLE_TEXTURE2D(_GrainTex, sampler_GrainTex, i.texcoordStereo * _Grain_Params2.xy + _Grain_Params2.zw).rgb;

// Noisiness response curve based on scene luminance

float lum = 1.0 - sqrt(Luminance(saturate(color)));

lum = lerp(1.0, lum, _Grain_Params1.x);

color.rgb += color.rgb * grain * _Grain_Params1.y * lum;

ColorGrading

Description

ColorGrading has three modes:

HighDefinitionRange

LowDefinitionRange

External: requires support for compute shaders and 3D RTs

There are four render pipelines:

RenderExternalPipeline3D: requires support for compute shaders and 3D RTs

RenderHDRPipeline3D: requires support for compute shaders and 3D RTs

RenderHDRPipeline2D: the HDR color pipeline used when compute shaders and 3D RTs are not supported

RenderLDRPipeline2D

Only RenderHDRPipeline2D is considered here.

RenderHDRPipeline2D

This stage is divided into 5 parts

- Tonemapping

- Basic

- Channel Mixer

- Trackballs

- Grading Curves

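The shader used is lut2DBaker; the other core files are Colors.hlsl and ACES.hlsl.

The goal is to generate a color lookup table, Lut2D. The Uber stage then looks up each color in this table and uses the mapped result as the new color value.

Lut2D's RenderTextureReadWrite is Linear, which means it stores linear data.

Three tonemapping methods are available: Neutral, ACES, and Custom.

Only ACES is considered here.

Once the parameters and features of each lut2DBaker stage have been set according to the configuration, the computation stage begins.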

context.command.BeginSample("HdrColorGradingLut2D");

context.command.BlitFullscreenTriangle(BuiltinRenderTextureType.None, m_InternalLdrLut, lutSheet, (int)Pass.LutGenHDR2D);

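context.command.EndSample("HdrColorGradingLut2D");

When the computation finishes, the resulting Lut2D is passed to Uber as a parameter along with the related settings. The final color replacement is done in Uber.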

uberSheet.EnableKeyword("COLOR_GRADING_HDR_2D");

uberSheet.properties.SetVector(ShaderIDs.Lut2D_Params, new Vector3(1f / lut.width, 1f / lut.height, lut.height - 1f));

uberSheet.properties.SetTexture(ShaderIDs.Lut2D, lut);

uberSheet.properties.SetFloat(ShaderIDs.PostExposure, RuntimeUtilities.Exp2(settings.postExposure.value));

Lut2DBaker

Entry point

float4 FragHDR(VaryingsDefault i) : SV_Target

{

float3 colorLutSpace = GetLutStripValue(i.texcoord, _Lut2D_Params);

float3 graded = ColorGradeHDR(colorLutSpace);

return float4(graded, 1.0);

}

float3 GetLutStripValue(float2 uv, float4 params)

{

uv -= params.yz;

float3 color;

color.r = frac(uv.x * params.x);

color.b = uv.x - color.r / params.x;

color.g = uv.y;

return color * params.w;

}

_Lut2D_Params is hard-coded to:

(lut_height, 0.5 / lut_width, 0.5 / lut_height, lut_height / (lut_height - 1))

where lut_height = 32 and lut_width = 32*32. In other words, the table is (32*32, 32) in size.
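
To see how the strip layout works end to end, the Python sketch below bakes an identity LUT with a port of GetLutStripValue and then reads it back with a port of the ApplyLut2D lookup that the Uber stage uses. The bilinear sampler with clamped edges is a hand-written stand-in for the GPU sampler, and all Python names are illustrative. The grading step (ColorGradeHDR) is deliberately left out, so the lookup should return its input unchanged.

```python
import math

LUT_H = 32
LUT_W = LUT_H * LUT_H
BAKE_PARAMS = (LUT_H, 0.5 / LUT_W, 0.5 / LUT_H, LUT_H / (LUT_H - 1.0))
APPLY_PARAMS = (1.0 / LUT_W, 1.0 / LUT_H, LUT_H - 1.0)


def get_lut_strip_value(u, v, p):
    """Port of GetLutStripValue: texture UV -> the color that texel represents."""
    u -= p[1]
    v -= p[2]
    scaled = u * p[0]
    r = scaled - math.floor(scaled)  # frac(): position inside the 32-wide slice
    b = u - r / p[0]                 # which slice (blue level)
    g = v
    return (r * p[3], g * p[3], b * p[3])


def bake_identity_lut():
    return [[get_lut_strip_value((px + 0.5) / LUT_W, (py + 0.5) / LUT_H, BAKE_PARAMS)
             for px in range(LUT_W)] for py in range(LUT_H)]


def sample_bilinear(lut, u, v):
    """Stand-in for a bilinear, clamp-to-edge texture fetch."""
    x, y = u * LUT_W - 0.5, v * LUT_H - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def texel(ix, iy):
        return lut[max(0, min(LUT_H - 1, iy))][max(0, min(LUT_W - 1, ix))]

    out = []
    for ch in range(3):
        top = texel(x0, y0)[ch] * (1 - fx) + texel(x0 + 1, y0)[ch] * fx
        bot = texel(x0, y0 + 1)[ch] * (1 - fx) + texel(x0 + 1, y0 + 1)[ch] * fx
        out.append(top * (1 - fy) + bot * fy)
    return tuple(out)


def apply_lut_2d(lut, color, p):
    """Port of ApplyLut2D: blend the two slices that bracket the blue value."""
    r, g, b = color
    z = b * p[2]
    shift = math.floor(z)
    u = r * p[2] * p[0] + p[0] * 0.5 + shift * p[1]
    v = g * p[2] * p[1] + p[1] * 0.5
    lo = sample_bilinear(lut, u, v)
    hi = sample_bilinear(lut, u + p[1], v)
    t = z - shift
    return tuple(a + (c - a) * t for a, c in zip(lo, hi))


lut = bake_identity_lut()
print(lut[0][33])  # texel 33 of row 0: second slice, second red step
print(apply_lut_2d(lut, (0.2, 0.5, 0.9), APPLY_PARAMS))
```

Each 32-texel-wide slice of the strip holds one blue level, with red varying along x inside the slice and green along y. That is why the lookup offsets u by shift / 32 and blends two neighbouring slices.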

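The figure below is an example (what is displayed here does not match the values actually stored).

ColorGradeHDR mainly converts the HDR color into the ACES color space and applies tonemapping.

// The related functions are in Colors.hlsl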

float3 ColorGradeHDR(float3 colorLutSpace)

{

// Decode the LUT-space value back to a linear HDR color

float3 colorLinear = LUT_SPACE_DECODE(colorLutSpace);

// Convert to the ACES color space

float3 aces = unity_to_ACES(colorLinear);

// ACEScc (log) space

float3 acescc = ACES_to_ACEScc(aces);

acescc = LogGradeHDR(acescc);

aces = ACEScc_to_ACES(acescc);

// ACEScg (linear) space

float3 acescg = ACES_to_ACEScg(aces);

acescg = LinearGradeHDR(acescg);

// Tonemap ODT(RRT(aces))

aces = ACEScg_to_ACES(acescg);

//tonemap

colorLinear = AcesTonemap(aces);

return colorLinear;

}

Two important mapping functions

#define LUT_SPACE_ENCODE(x) LinearToLogC(x) // used in Uber when remapping colors through the LUT

#define LUT_SPACE_DECODE(x) LogCToLinear(x) // used by the LUT baker (ColorGradeHDR)

float3 LinearToLogC(float3 x)

{

return LogC.c * log10(LogC.a * x + LogC.b) + LogC.d;

}

float3 LogCToLinear(float3 x)

{

return (pow(10.0, (x - LogC.d) / LogC.c) - LogC.b) / LogC.a;

}

static const ParamsLogC LogC =

{

0.011361, // cut

5.555556, // a

0.047996, // b

0.244161, // c

0.386036, // d

5.301883, // e

0.092819 // f

};

LinearToLogC

Formula
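
Reconstructed from the LinearToLogC code and the LogC constants listed above, where x is the linear value and y the LogC-encoded value:

```latex
y = c \,\log_{10}(a\,x + b) + d,
\qquad a = 5.555556,\; b = 0.047996,\; c = 0.244161,\; d = 0.386036
```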

Plot

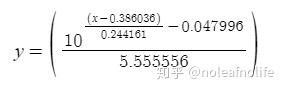

LogCToLinear

Formula
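
Reconstructed from the LogCToLinear code, using the same constants a, b, c, d from the LogC parameter block; it is the algebraic inverse of LinearToLogC:

```latex
x = \frac{10^{(y - d)/c} - b}{a}
```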

Plot
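
The shape of both curves can also be checked numerically. This is a minimal Python sketch using the LogC constants quoted above; the Python function names are illustrative ports of LinearToLogC and LogCToLinear, not code from the package.

```python
import math

# a, b, c, d from the LogC parameter block quoted in this section
A, B, C, D = 5.555556, 0.047996, 0.244161, 0.386036


def linear_to_logc(x):
    return C * math.log10(A * x + B) + D


def logc_to_linear(y):
    return (10.0 ** ((y - D) / C) - B) / A


for x in (0.0, 0.18, 1.0, 10.0):
    y = linear_to_logc(x)
    print(f"linear {x:6.2f} -> logC {y:.4f} -> linear {logc_to_linear(y):.4f}")

# Upper end of the range that a LUT indexed by logC in [0, 1] can represent
print(logc_to_linear(1.0))
```

Mid grey (0.18) lands near 0.39 in LogC space, and an encoded value of 1.0 corresponds to a linear value far above 1. This is what lets a LUT indexed by [0, 1] coordinates cover HDR input.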

Uber stage

Colors are remapped here through the LUT:

color *= _PostExposure;

float3 colorLutSpace = saturate(LUT_SPACE_ENCODE(color.rgb));

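color.rgb = ApplyLut2D(TEXTURE2D_PARAM(_Lut2D, sampler_Lut2D), colorLutSpace, _Lut2D_Params);

LUT_SPACE_ENCODE maps the linear HDR color into LUT space (LogC, clamped to [0, 1]); that value is then used to look up the corresponding color in the LUT, which becomes the new color.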

// 2D LUT grading

// scaleOffset = (1 / lut_width, 1 / lut_height, lut_height - 1)

//

half3 ApplyLut2D(TEXTURE2D_ARGS(tex, samplerTex), float3 uvw, float3 scaleOffset)

{

// Strip format where `height = sqrt(width)`

uvw.z *= scaleOffset.z;

float shift = floor(uvw.z);

uvw.xy = uvw.xy * scaleOffset.z * scaleOffset.xy + scaleOffset.xy * 0.5;

uvw.x += shift * scaleOffset.y;

uvw.xyz = lerp(

SAMPLE_TEXTURE2D(tex, samplerTex, uvw.xy).rgb,

SAMPLE_TEXTURE2D(tex, samplerTex, uvw.xy + float2(scaleOffset.y, 0.0)).rgb,

uvw.z - shift

);

return uvw;

}

Uber integration stage

Assume the original color is color0.

color.rgb *= autoExposure;

//Bloom

color += dirt * bloom;

//Vignette

color.rgb = lerp(color.rgb, new_color, _Vignette_Opacity); color.a = lerp(1.0, color.a, vfactor);

//Grain

color.rgb += color.rgb * grain * _Grain_Params1.y * lum;

//COLOR_GRADING_HDR_2D

color.rgb = ApplyLut2D(TEXTURE2D_PARAM(_Lut2D, sampler_Lut2D), colorLutSpace, _Lut2D_Params);

After that, the following logic runs depending on whether this is the final pass.

Non-final pass

UNITY_BRANCH

if (_LumaInAlpha > 0.5)

{

// Put saturated luma in alpha for FXAA - higher quality than "green as luma" and

// necessary as RGB values will potentially still be HDR for the FXAA pass

half luma = Luminance(saturate(output));

output.a = luma;

}

#if UNITY_COLORSPACE_GAMMA

{

output = LinearToSRGB(output);

}

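#endif

If the project works in gamma (sRGB) color space, the result is also gamma-corrected.

final pass

If UNITY_COLORSPACE_GAMMA is defined, the result also needs to be converted from linear to sRGB.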

#if UNITY_COLORSPACE_GAMMA

{

output = LinearToSRGB(output);

}

#endif

Note

Bloom usually has fast mode enabled; in this mode only 4 samples are taken, whereas the default mode takes 13.