http://www.opengpu.org/bbs/viewthread.php?tid=834&extra=page%3D2%26amp;filter%3Ddigest

http://www.humus.name/index.php?page=Comments&ID=255

A couple of notes about Z

Tuesday, April 21, 2009

It is often said that Z is non-linear whereas W is linear (in view-space depth). This gives a W-buffer uniformly distributed resolution across the view frustum, whereas a Z-buffer has better precision close up and poor precision in the distance. Given that objects don't normally get thicker just because they are farther away, a W-buffer generally has fewer artifacts than a Z-buffer with the same number of bits. In the past some hardware supported a W-buffer, but these days it is considered deprecated and hardware no longer implements it. Why? Isn't it better? Not really. Here's why:

While W is linear in view space, it's not linear in screen space. Z, on the other hand, is non-linear in view space but linear in screen space. This can be observed with a simple DX10 pixel shader:

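```hlsl
struct PsIn { float4 position : SV_Position; }; // PsIn and main are placeholder names

float4 main(PsIn In) : SV_Target
{
    float dx = ddx(In.position.z); // change in Z to the neighboring pixel in x
    float dy = ddy(In.position.z); // change in Z to the neighboring pixel in y
    return 1000.0 * float4(abs(dx), abs(dy), 0, 0); // scale up so the deltas are visible
}
```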

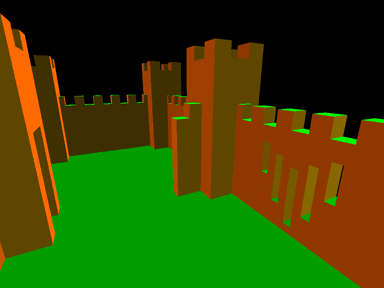

Here In.position is SV_Position. The result looks something like this:

Note how every surface appears as a single flat color: the pixel-to-pixel difference in Z is constant across any given primitive. This matters a lot to hardware. One reason is that interpolating Z is cheaper than interpolating W, because Z does not have to be perspective corrected. With cheaper interpolation units you can reject more pixels per cycle on the same transistor budget, which of course matters a lot for pre-Z passes and shadow maps.

On modern hardware, linearity in screen space has also turned out to be a very useful property for Z optimizations. Since the gradient is constant across the primitive, it's relatively easy to compute the exact depth range within a tile for Hi-Z culling. It also makes techniques such as Z-compression possible: with a constant Z delta in X and Y you don't need to store much information to fully recover all Z values in a tile, provided the primitive covered the entire tile.
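The screen-space linearity can also be checked numerically. For a planar primitive a·x + b·y + c·w = d in view space (w being view depth) and a projection with xScale = yScale = 1, the ray through screen point (sx, sy) hits the plane at w = d / (a·sx + b·sy + c). So 1/w is affine in screen space, and Z, being an affine function of 1/w, is affine too, while W itself is not. The Python sketch below is a minimal illustration under those assumptions; the plane, tile size, pixel pitch and all names (`depth_at`, `max_second_diff`, etc.) are hypothetical. It checks that Z has zero second differences across an 8x8 tile, rebuilds the tile from one reference value plus two deltas in the spirit of plane-based Z-compression, and reads the Hi-Z range off the tile corners. Both tricks assume the primitive covers the whole tile.

```python
# Sketch: Z is affine in screen space across a planar primitive, W is not.
# Hypothetical setup: view-space plane a*x + b*y + c*w = d (w = view depth),
# a D3D-style LH projection with xScale = yScale = 1, and one 8x8 pixel tile.
NEAR, FAR = 0.1, 1000.0
PLANE = (0.3, -0.2, 1.0, 20.0)                   # tilted plane about 20 units away
TILE, STEP, X0, Y0 = 8, 2.0 / 1024, 0.10, -0.05  # tile size, NDC pixel pitch, tile origin


def depth_at(px, py):
    """Return (z, w) where the ray through tile pixel (px, py) hits PLANE."""
    sx, sy = X0 + px * STEP, Y0 + py * STEP
    a, b, c, d = PLANE
    w = d / (a * sx + b * sy + c)                             # 1/w is affine in (sx, sy)
    z = FAR / (FAR - NEAR) - NEAR * FAR / ((FAR - NEAR) * w)  # z/w after projection
    return z, w


zs = [[depth_at(x, y)[0] for x in range(TILE)] for y in range(TILE)]
ws = [[depth_at(x, y)[1] for x in range(TILE)] for y in range(TILE)]


def max_second_diff(grid):
    """Largest |f[i+1] - 2*f[i] + f[i-1]| along rows; ~0 when f is affine in x."""
    return max(abs(row[i + 1] - 2 * row[i] + row[i - 1])
               for row in grid for i in range(1, len(row) - 1))


# Plane-equation style compression: keep one reference Z and the two deltas,
# then rebuild all 64 values from those three numbers.
z0 = zs[0][0]
dzdx = zs[0][1] - z0
dzdy = zs[1][0] - z0
rebuilt = [[z0 + x * dzdx + y * dzdy for x in range(TILE)] for y in range(TILE)]
max_rebuild_err = max(abs(rebuilt[y][x] - zs[y][x])
                      for y in range(TILE) for x in range(TILE))

# Hi-Z: an affine function over a rectangle takes its min and max at the corners.
corners = [zs[0][0], zs[0][-1], zs[-1][0], zs[-1][-1]]
all_z = [v for row in zs for v in row]

if __name__ == "__main__":
    print("max second difference of Z:", max_second_diff(zs))
    print("max second difference of W:", max_second_diff(ws))
    print("max error rebuilding Z from (z0, dzdx, dzdy):", max_rebuild_err)
    print("Hi-Z range from corners:", min(corners), max(corners))
```

The W second differences come out small but clearly nonzero, while the Z ones are at floating-point noise level, which is why three numbers are enough to describe a fully covered tile of Z but not of W.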

These days the depth buffer is increasingly used for purposes other than hidden surface removal, and being linear in screen space turns out to be a very desirable property for post-processing. Suppose, for instance, that you want to do edge detection on the depth buffer, perhaps for antialiasing by blurring edges. This is easily done by comparing a pixel's depth with its neighbors' depths. With Z values you have constant pixel-to-pixel deltas, except across edges of course. Edges are easy to detect by comparing the delta to the left with the delta to the right: if they don't match (within some epsilon), you crossed an edge. The same goes for up-down and the diagonals. This also lets you reject pixels that don't belong to the same surface when you implement, say, a blur filter that shouldn't blur across edges, for instance to smooth out artifacts in screen-space effects such as SSAO with relatively sparse sampling.
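A minimal sketch of the edge test described above, in Python on a small synthetic depth buffer. The two surfaces are hypothetical and are written directly as affine functions of the pixel position (standing in for projected Z), and the names `make_depth`, `is_edge` and `edge_mask` are illustrative only. For each interior pixel the sketch compares the depth step coming in from one neighbor with the step going out to the opposite neighbor, horizontally, vertically and along both diagonals, and flags the pixel when they differ by more than an epsilon.

```python
# Sketch of depth-buffer edge detection: on one planar surface Z steps by a
# constant amount per pixel, so a mismatch between the step into a pixel and
# the step out of it along some axis marks an edge. Hypothetical buffer below.
W, H = 16, 8


def make_depth():
    """Two synthetic surfaces, each affine in screen space; boundary between columns 7 and 8."""
    buf = []
    for y in range(H):
        row = []
        for x in range(W):
            if x < 8:
                row.append(0.50 + 0.001 * x + 0.0005 * y)  # surface A
            else:
                row.append(0.80 - 0.002 * x + 0.0003 * y)  # surface B
        buf.append(row)
    return buf


# Axes checked: horizontal, vertical and both diagonals.
AXES = ((1, 0), (0, 1), (1, 1), (1, -1))


def is_edge(depth, x, y, eps=1e-6):
    """True if the depth deltas on opposite sides of interior pixel (x, y) differ."""
    c = depth[y][x]
    for dx, dy in AXES:
        before = c - depth[y - dy][x - dx]  # step from the neighbor behind
        after = depth[y + dy][x + dx] - c   # step to the neighbor ahead
        if abs(after - before) > eps:
            return True
    return False


def edge_mask(depth, eps=1e-6):
    """Edge flags for interior pixels; border pixels are left as False."""
    h, w = len(depth), len(depth[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            mask[y][x] = is_edge(depth, x, y, eps)
    return mask


if __name__ == "__main__":
    for row in edge_mask(make_depth()):
        print("".join("#" if e else "." for e in row))
```

Both pixels adjacent to the boundary get flagged, one on each surface, which is what a bilateral-style blur needs in order to avoid mixing the two sides. In a real post-process this would run per pixel in a shader, and the epsilon would likely have to scale with the size of the deltas; a fixed absolute value keeps the sketch short.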

What about precision in view space for hidden surface removal, which is still the main use of a depth buffer? You can regain most of the precision lost relative to W-buffering by switching to a floating-point depth buffer. This way you get two non-linearities that to a large extent cancel each other out: one from Z, which crowds distant depths together, and one from the floating-point representation, which is densest near 0.0. For this to work you have to flip the depth buffer so that the far plane maps to 0.0 and the near plane to 1.0. This is recommended even with a fixed-point buffer, since it also improves the precision of the math during transformation. You also have to switch the depth test from LESS to GREATER. If you rely on a library function to compute your projection matrix, for instance D3DXMatrixPerspectiveFovLH(), the easiest way to accomplish this is to simply swap the near and far parameters.
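A rough way to see the effect is to map one step of the stored depth back to view space. The sketch below assumes the Direct3D-style left-handed projection that D3DXMatrixPerspectiveFovLH builds, for which the stored depth at view-space depth $w$, with near and far parameters $n$ and $f$, is

$$z(w) = \frac{f}{f-n} - \frac{n f}{(f-n)\,w}, \qquad \left|\frac{dz}{dw}\right| = \frac{n f}{(f-n)\,w^{2}}.$$

Passing $n$ and $f$ swapped gives the flipped mapping ($z(n) = 1$, $z(f) = 0$), and a buffer step $\Delta z$ covers roughly $\Delta w \approx \Delta z / |dz/dw|$ in view space. This only models storage quantization (depth computed exactly, then rounded to 24-bit fixed point or float32), not precision lost in the transform math itself; the near/far values and function names are illustrative assumptions.

```python
import struct

# Sketch comparing depth-buffer precision in view space. Assumes the D3D-style
# left-handed projection that D3DXMatrixPerspectiveFovLH builds
# (_33 = far/(far-near), _43 = -near*far/(far-near)); the flipped ("reversed")
# mapping is obtained, as the text suggests, by passing near and far swapped.


def depth_lh(w, near, far):
    """Stored depth z/w for view-space depth w (0 at `near`, 1 at `far`)."""
    return far / (far - near) - near * far / ((far - near) * w)


def depth_slope(w, near, far):
    """|d depth / d w|, used to map a depth-buffer step back to view space."""
    return abs(near * far / ((far - near) * w * w))


def f32_ulp(x):
    """Gap between float32(x) and the next larger float32, for finite x > 0."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    here = struct.unpack("<f", struct.pack("<I", bits))[0]
    nxt = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return nxt - here


def view_step_fixed(w, near, far, bits=24):
    """Approximate view-space size of one step of a fixed-point depth buffer at w."""
    return (1.0 / (2 ** bits - 1)) / depth_slope(w, near, far)


def view_step_float(w, near, far):
    """Approximate view-space size of one step of a float32 depth buffer at w (near < w < far)."""
    return f32_ulp(depth_lh(w, near, far)) / depth_slope(w, near, far)


if __name__ == "__main__":
    NEAR, FAR = 0.1, 1000.0
    print(f"{'w':>8} {'24-bit fixed':>14} {'float32':>14} {'float32 rev.':>14}")
    for w in (1.0, 10.0, 100.0, 500.0, 900.0):
        print(f"{w:8.1f} "
              f"{view_step_fixed(w, NEAR, FAR):14.3e} "
              f"{view_step_float(w, NEAR, FAR):14.3e} "
              f"{view_step_float(w, FAR, NEAR):14.3e}")  # near/far swapped
```

With the standard mapping, a float32 buffer stores distant depths close to 1.0, where its spacing is no finer than 24-bit fixed point, so it gains little. Swapping near and far moves those depths toward 0.0, where float32 is far denser, which is the cancellation described above.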

Z ya!

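| This article by a foreign author is well written; it mainly explains the trade-offs between using a Z-buffer and a W-buffer. According to the article, a W-buffer is somewhat harder to compress than a Z-buffer. That is understandable: the Z-buffer lives in NDC space, while the W-buffer lives in view space (VCS) or clip space (CCS), so to compute DPCM the Z-buffer only needs second-order partial derivatives in X and in Y, whereas the W-buffer needs third-order partial derivatives in X and in Y, because Z in screen space (SCS) is already a perspective-projected coordinate. Another point worth thinking about is Z-buffer versus W-buffer in general: a W-buffer is actually more intuitive, and with floating point it would have an even larger representable range. But current GPUs all use fixed point for the Z-buffer, for transistor-budget reasons. For the upcoming LRB (Larrabee), one could actually consider implementing a W-buffer in floating-point precision and thereby ditch the imprecise Z-buffer! But that would need API support :> |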