Simulating login with cookies

- Define a custom start_requests() method in the spider.

- Its contents:

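def start_requests(self):
    # Scrapy expects cookies as a dict (or a list of dicts), not a raw header string.
    # The name and value here are placeholders for a real, valid cookie.
    cookies = {'cookie_name': 'cookie_value'}
    yield scrapy.Request(
        self.start_urls[0],
        callback=self.parse,
        cookies=cookies,
    )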
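Browsers usually show cookies as one Cookie header string, while Scrapy's cookies argument expects a dict. A minimal sketch of that conversion using only the standard library; the helper name cookie_header_to_dict and the sample string are illustrative and not part of Scrapy:

```python
def cookie_header_to_dict(header):
    """Turn a raw Cookie header such as 'a=1; b=2' into a dict."""
    cookies = {}
    for pair in header.split(";"):
        pair = pair.strip()
        if not pair:
            continue  # skip empty segments such as a trailing ';'
        name, _, value = pair.partition("=")  # split on the first '=' only
        cookies[name.strip()] = value.strip()
    return cookies


# Hypothetical cookie string, as it might be copied from the browser's dev tools
raw = "logged_in=yes; session_id=abc123=="
print(cookie_header_to_dict(raw))
```

The resulting dict can then be passed as cookies=... to scrapy.Request.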

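Downloader middleware

- Just define your own middleware at the bottom of the project's middlewares.py.

A downloader middleware can do many things: attach login information, set the User-Agent, add a proxy, and so on.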

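- Before use, enable it in settings.py.

- The number is the middleware's order: process_request runs from the lowest number to the highest, and process_response runs in the reverse order.

Key format: projectname.middlewares.DownloadMiddlewareName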

DOWNLOADER_MIDDLEWARES = {
    'superspider.middlewares.SuperspiderDownloaderMiddleware': 543,
}
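To make the ordering rule concrete, here is a toy model of how the numbers decide the call order. It is only a sketch, not Scrapy's actual loader (which also merges in the built-in entries from DOWNLOADER_MIDDLEWARES_BASE); the function name call_order and the middleware paths are made up for illustration:

```python
def call_order(middlewares):
    """Return (request_order, response_order) for a DOWNLOADER_MIDDLEWARES-style dict."""
    # A value of None disables a middleware, so drop those entries first
    enabled = {path: n for path, n in middlewares.items() if n is not None}
    request_order = sorted(enabled, key=enabled.get)  # ascending for process_request
    response_order = list(reversed(request_order))    # descending for process_response
    return request_order, response_order


# Hypothetical settings; only the relative numbers matter
settings = {
    "superspider.middlewares.First": 100,
    "superspider.middlewares.Second": 543,
    "superspider.middlewares.Disabled": None,
}
req, resp = call_order(settings)
print(req)
print(resp)
```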

- Setting the User-Agent

Implement process_request.

Define a downloader middleware named RandomUserAgentMiddleware:

from fake_useragent import UserAgent


class RandomUserAgentMiddleware:
    def process_request(self, request, spider):
        # Middleware logic can also be applied per spider by checking spider.name
        if spider.name == 'spider1':
            ua = UserAgent()
            request.headers["User-Agent"] = ua.random

Register it in settings.py:

DOWNLOADER_MIDDLEWARES = {
    'superspider.middlewares.RandomUserAgentMiddleware': 543,
}
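If you would rather not depend on fake_useragent, the same idea works with a fixed list. This is a sketch under assumptions: the class name FixedListUserAgentMiddleware and the User-Agent strings are illustrative, and SimpleNamespace objects stand in for Scrapy's request and spider so the snippet runs on its own:

```python
import random
from types import SimpleNamespace

# Assumed sample values; replace with the User-Agent strings you actually want
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


class FixedListUserAgentMiddleware:
    """Pick a User-Agent at random from a fixed list for every request."""

    def process_request(self, request, spider):
        request.headers["User-Agent"] = random.choice(USER_AGENTS)
        return None  # None tells Scrapy to keep processing this request


# Stand-in objects so the sketch runs without Scrapy installed
request = SimpleNamespace(headers={})
spider = SimpleNamespace(name="spider1")
FixedListUserAgentMiddleware().process_request(request, spider)
print(request.headers["User-Agent"])
```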

- Checking the User-Agent

Implement process_response.

- It must return the response.

- Register it in settings.py.

class UserAgentCheck:
    def process_response(self, request, response, spider):
        # Print the User-Agent that was actually sent with this request
        print(request.headers['User-Agent'])
        return response

DOWNLOADER_MIDDLEWARES = {
    'superspider.middlewares.RandomUserAgentMiddleware': 543,
    'superspider.middlewares.UserAgentCheck': 544,
}
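Scrapy stores header values as bytes, so the printed User-Agent shows up with a b'...' prefix. A possible variant that decodes the value and uses logging instead of print; the class name LoggingUserAgentCheck is hypothetical, and SimpleNamespace objects stand in for Scrapy's request and response so the sketch runs on its own:

```python
import logging
from types import SimpleNamespace

logger = logging.getLogger(__name__)


class LoggingUserAgentCheck:
    def process_response(self, request, response, spider):
        ua = request.headers.get("User-Agent")
        # Header values are bytes in Scrapy, so decode before logging
        if isinstance(ua, bytes):
            ua = ua.decode("utf-8", errors="replace")
        logger.info("User-Agent sent to %s: %s", request.url, ua)
        return response  # hand the response on unchanged


# Stand-in objects for illustration only
request = SimpleNamespace(url="https://example.com",
                          headers={"User-Agent": b"demo-agent/1.0"})
response = SimpleNamespace(status=200)
result = LoggingUserAgentCheck().process_response(request, response, spider=None)
```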

- Setting a proxy

- Add a proxy field to the request's meta.

- Proxy format: scheme + IP + port.

- Register it in settings.py.

class ProxyMiddleware:
    def process_request(self, request, spider):
        if spider.name == 'spider0':
            # Replace ip and port with the address of a real proxy
            request.meta["proxy"] = "http://ip:port"
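The scheme + IP + port format can be assembled and sanity-checked before it goes into request.meta. A minimal sketch: the helper name build_proxy_url is an assumption, the address is a placeholder, and the sketch accepts only http and https as a deliberate simplification:

```python
from urllib.parse import urlsplit


def build_proxy_url(scheme, host, port):
    """Assemble 'scheme://host:port' and reject obviously malformed input."""
    url = f"{scheme}://{host}:{port}"
    parts = urlsplit(url)
    # parts.port itself raises ValueError when the port is not a valid number
    if parts.scheme not in ("http", "https") or not parts.hostname or parts.port is None:
        raise ValueError(f"not a usable proxy URL: {url!r}")
    return url


# Placeholder address; substitute a proxy you actually control
proxy = build_proxy_url("http", "127.0.0.1", 8080)
meta = {"proxy": proxy}
print(meta)
```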

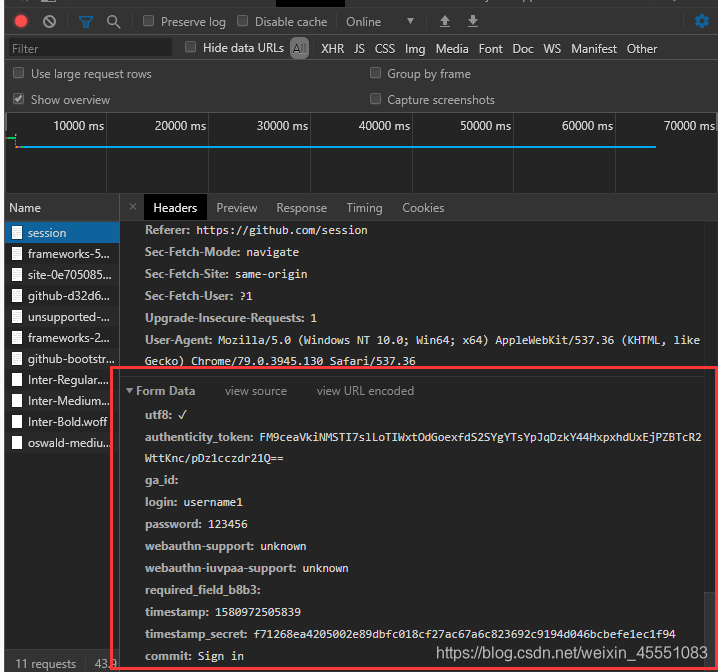

Simulating a GitHub login

Build the form yourself and log in with FormRequest

- Decide what to yield, and hand the response to the next callback.

# -*- coding: utf-8 -*-
import scrapy


class Spider0Spider(scrapy.Spider):
    name = 'spider0'
    allowed_domains = ['github.com']
    # The login form is served at /login; /session is the URL it posts to
    start_urls = ['https://github.com/login']

    def parse(self, response):
        yield scrapy.FormRequest(
            "https://github.com/session",
            formdata={},  # placeholder; the form fields still have to be built
            callback=self.after_login
        )

    def after_login(self, response):
        pass

- Constructing formdata

# -*- coding: utf-8 -*-
import scrapy


class Spider0Spider(scrapy.Spider):
    name = 'spider0'
    allowed_domains = ['github.com']
    # The login form is served at /login; /session is the URL it posts to
    start_urls = ['https://github.com/login']

    def parse(self, response):
        form = {
            'utf8': "✓",
            # Hidden inputs carry their data in the value attribute, not in text()
            'authenticity_token': response.xpath(
                '//*[@id="login"]/form/input[@name="authenticity_token"]/@value').extract_first(),
            'ga_id': response.xpath('//*[@id="login"]/form/input[3]/@value').extract_first(),
            'login': "",      # your username
            'password': "",   # your password
            'webauthn-support': 'supported',
            'webauthn-iuvpaa-support': 'supported',
            # This field's name is generated by the page, so it is read from the form as well
            response.xpath('//*[@id="login"]/form/div[3]/input[5]/@name').extract_first(): "",
            'timestamp': response.xpath("//*[@id='login']/form/div[3]/input[6]/@value").extract_first(),
            "timestamp_secret": response.xpath("//*[@id='login']/form/div[3]/input[7]/@value").extract_first(),
            "commit": "Sign in"
        }
        print(form)
        yield scrapy.FormRequest(
            "https://github.com/session",
            formdata=form,
            callback=self.after_login
        )

    def after_login(self, response):
        pass
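Positional XPaths such as input[5] break as soon as the page layout shifts. One alternative is to collect every hidden input by name. The sketch below does that with the standard library's HTMLParser; the class name HiddenInputCollector and the sample markup are made up for illustration, and GitHub's real login page differs:

```python
from html.parser import HTMLParser


class HiddenInputCollector(HTMLParser):
    """Collect name/value pairs from <input type="hidden"> elements."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attr = dict(attrs)
        if attr.get("type") == "hidden" and attr.get("name"):
            # A missing value attribute becomes an empty string
            self.fields[attr["name"]] = attr.get("value") or ""


# Made-up markup for illustration only
SAMPLE = """
<form action="/session" method="post">
  <input type="hidden" name="authenticity_token" value="tok123">
  <input type="hidden" name="timestamp" value="1700000000">
  <input type="text" name="login">
</form>
"""

collector = HiddenInputCollector()
collector.feed(SAMPLE)
# Add the visible fields by hand; fill in real credentials before use
form = dict(collector.fields, login="", password="")
print(form)
```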

Letting Scrapy find the form fields automatically

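# -*- coding: utf-8 -*-
import scrapy


class Spider0Spider(scrapy.Spider):
    name = 'spider0'
    allowed_domains = ['github.com']
    # The login form is served at /login; /session is the URL it posts to
    start_urls = ['https://github.com/login']

    def parse(self, response):
        # from_response reads the form's existing fields from the page;
        # only the values you need to set or override go in formdata
        yield scrapy.FormRequest.from_response(
            response,
            formdata={"login": "", "password": ""},  # fill in your credentials
            callback=self.after_login
        )

    def after_login(self, response):
        print(response.text)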
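Conceptually, FormRequest.from_response starts from the fields already present in the page's form and lets the formdata argument override or add to them. A toy model of that merge, not Scrapy's implementation; the function name merge_form_fields and all values are hypothetical:

```python
def merge_form_fields(page_fields, overrides):
    """Start from the fields found in the page, then apply the caller's values."""
    merged = dict(page_fields)
    merged.update(overrides)
    return merged


# Hypothetical values standing in for what a login page would contain
page_fields = {"authenticity_token": "tok123", "login": "", "commit": "Sign in"}
payload = merge_form_fields(page_fields, {"login": "my_user", "password": "my_password"})
print(payload)
```

This is why the spider only has to supply login and password: the token and the other hidden fields are taken from the page.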