Link:

Spark Pipeline

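Spark Pipeline is a high-level API built on DataFrames that makes it easy to build and tune machine learning pipelines.

It chains multiple machine learning algorithms so that they run in sequence, which streamlines data processing.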

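DataFrame: the ML dataset, taken from Spark SQL. A DataFrame can hold many data types, such as text, feature vectors, labels, and predictions.

Transformer: an algorithm that turns one DataFrame into another DataFrame. It implements the transform() method.

Estimator: an algorithm that is fit on a DataFrame to produce a Transformer. It implements the fit() method.

Pipeline: chains multiple Transformers and Estimators together into a specific ML workflow.

Parameter: Transformers and Estimators share a common API for specifying parameters.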
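To make the Transformer/Estimator contract concrete, here is a minimal plain-Python sketch. It is not Spark's API. A "DataFrame" is assumed to be a list of dicts, and the classes `MeanCenterer` (an Estimator) and `MeanCenterModel` (the Transformer it produces) are hypothetical, invented only for illustration.

```python
from typing import Dict, List

Row = Dict[str, float]
Frame = List[Row]  # toy stand-in for a DataFrame: a list of row dicts


class Transformer:
    """Turns one Frame into another via transform()."""
    def transform(self, frame: Frame) -> Frame:
        raise NotImplementedError


class Estimator:
    """Is fit on a Frame and returns a Transformer via fit()."""
    def fit(self, frame: Frame) -> Transformer:
        raise NotImplementedError


class MeanCenterModel(Transformer):
    def __init__(self, col: str, mean: float):
        self.col, self.mean = col, mean

    def transform(self, frame: Frame) -> Frame:
        # Build new rows with an extra centered column; the input rows are not modified.
        return [{**row, self.col + "_centered": row[self.col] - self.mean} for row in frame]


class MeanCenterer(Estimator):
    def __init__(self, col: str):
        self.col = col

    def fit(self, frame: Frame) -> MeanCenterModel:
        # Learn the column mean from the data, then wrap it in a Transformer.
        mean = sum(row[self.col] for row in frame) / len(frame)
        return MeanCenterModel(self.col, mean)


data = [{"x": 1.0}, {"x": 2.0}, {"x": 6.0}]
model = MeanCenterer("x").fit(data)
print(model.transform(data))  # x_centered values: -2.0, -1.0, 3.0
```

The split mirrors the definitions above: the Estimator holds the learning step, and the Transformer only applies what was learned.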

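Transformers and Estimators are the stages of a Pipeline.

A Pipeline is a workflow made of a sequence of stages, which run in the order in which they are declared.

Transformer.transform() and Estimator.fit() are both stateless.

Every Transformer and Estimator instance has a unique ID, which is useful when specifying parameters.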

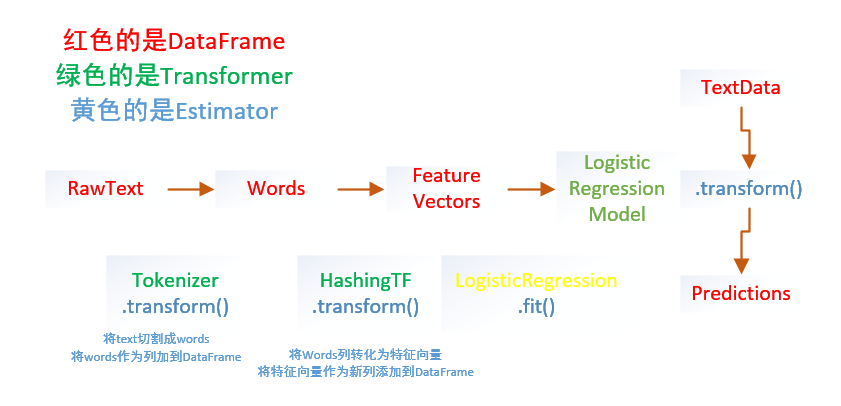

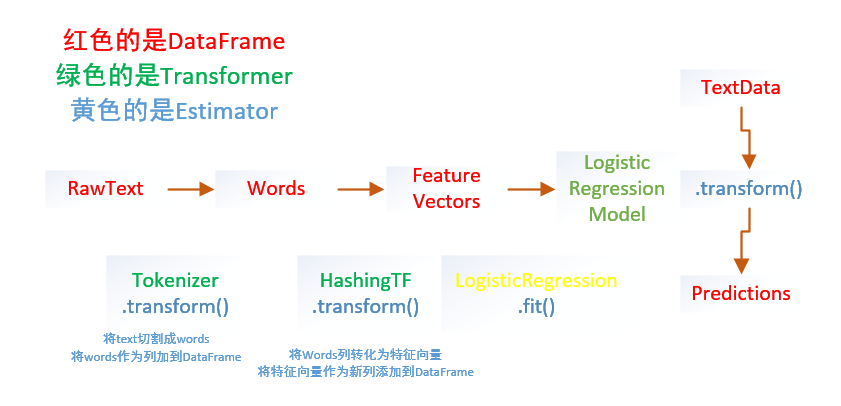

The figures show the flow of a linear Pipeline.

The Pipeline shown there is linear: each stage depends on the output of the previous stage.
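The linear case can also be sketched in plain Python. It follows how Spark describes `Pipeline.fit()`: stages run in order, a Transformer stage simply transforms the data, and an Estimator stage is fit first and its resulting model then transforms the data for the next stage. This is a simplified sketch; `AddOne`, `MaxScaler`, `ToyPipeline` and `ToyPipelineModel` are hypothetical stand-ins, not Spark classes.

```python
from typing import List, Union

Frame = List[float]  # toy stand-in for a DataFrame: a list of numbers


class Transformer:
    def transform(self, frame: Frame) -> Frame:
        raise NotImplementedError


class Estimator:
    def fit(self, frame: Frame) -> Transformer:
        raise NotImplementedError


class AddOne(Transformer):
    def transform(self, frame: Frame) -> Frame:
        return [v + 1 for v in frame]


class ScaleModel(Transformer):
    def __init__(self, factor: float):
        self.factor = factor

    def transform(self, frame: Frame) -> Frame:
        return [v / self.factor for v in frame]


class MaxScaler(Estimator):
    def fit(self, frame: Frame) -> ScaleModel:
        # Learn the maximum of the data as it looks at this point in the pipeline.
        return ScaleModel(max(frame))


class ToyPipelineModel(Transformer):
    def __init__(self, stages: List[Transformer]):
        self.stages = stages

    def transform(self, frame: Frame) -> Frame:
        # A fitted pipeline is just its Transformers applied in order.
        for stage in self.stages:
            frame = stage.transform(frame)
        return frame


class ToyPipeline(Estimator):
    def __init__(self, stages: List[Union[Transformer, Estimator]]):
        self.stages = stages

    def fit(self, frame: Frame) -> ToyPipelineModel:
        fitted = []
        for stage in self.stages:
            # Estimators are fit on the output of the previous stage.
            model = stage.fit(frame) if isinstance(stage, Estimator) else stage
            frame = model.transform(frame)  # feed the next stage
            fitted.append(model)
        return ToyPipelineModel(fitted)


model = ToyPipeline([AddOne(), MaxScaler()]).fit([1.0, 3.0])
print(model.transform([1.0, 3.0]))  # AddOne gives [2.0, 4.0], divided by 4.0: [0.5, 1.0]
```

Because every stage of the fitted model is a Transformer, training data and later test data pass through identical processing steps.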

If the data flow forms a Directed Acyclic Graph (DAG), a non-linear Pipeline can also be created; its stages must then be listed in topological order.
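One way to picture "topological order" is to treat each stage as reading some input columns and writing output columns. The ordering is valid when every input column already exists in the source data or was written by an earlier stage. A cycle can never pass this check, because some stage would need a column that is only produced later. The helper below is a hypothetical plain-Python check, not part of Spark, and the stage names and the `len` column are made up for illustration.

```python
from typing import List, Set, Tuple

# A stage is modelled as (name, input columns, output columns); purely illustrative.
Stage = Tuple[str, List[str], List[str]]


def check_topological_order(source_cols: Set[str], stages: List[Stage]) -> None:
    """Raise ValueError unless every stage's inputs exist before that stage runs."""
    available = set(source_cols)
    for name, inputs, outputs in stages:
        missing = [c for c in inputs if c not in available]
        if missing:
            raise ValueError(f"stage {name!r} needs {missing} before they are produced")
        available.update(outputs)


# Two branches ("text" -> "words" -> "tf" and "text" -> "len") join in "assemble":
# a non-linear but acyclic data flow, listed in a valid topological order.
dag = [
    ("tokenizer", ["text"], ["words"]),
    ("hashingTF", ["words"], ["tf"]),
    ("length", ["text"], ["len"]),
    ("assemble", ["tf", "len"], ["features"]),
]
check_topological_order({"text", "label"}, dag)  # passes silently

try:
    check_topological_order({"text", "label"}, list(reversed(dag)))
except ValueError as err:
    print(err)  # "assemble" runs before "tf" and "len" exist
```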

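Runtime checking: because a Pipeline can operate on DataFrames with varied column types, it cannot rely on compile-time type checking.

Instead, Pipelines and PipelineModels perform runtime checking before actually running.

This checking uses the DataFrame schema, which describes the data types of the DataFrame's columns.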
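Runtime checking can be pictured as pushing a schema through the stages before any rows are processed. In Spark's Scala API each `PipelineStage` does this in `transformSchema()`. The sketch below is only a plain-Python imitation under assumed types: a schema is a dict from column name to a type string, and each hypothetical stage declares the input type it expects and the output column it adds.

```python
from typing import Dict, List, Tuple

Schema = Dict[str, str]  # column name -> data type name (toy representation)
# Hypothetical stage description: (input column, expected type, output column, output type)
StageSpec = Tuple[str, str, str, str]


def transform_schema(schema: Schema, stages: List[StageSpec]) -> Schema:
    """Validate every stage against the schema and return the final schema.

    No rows are touched, so a type error surfaces before any data is processed.
    """
    schema = dict(schema)  # do not mutate the caller's schema
    for in_col, in_type, out_col, out_type in stages:
        actual = schema.get(in_col)
        if actual != in_type:
            raise TypeError(f"column {in_col!r} must be {in_type}, got {actual}")
        schema[out_col] = out_type
    return schema


stages = [
    ("text", "string", "words", "array<string>"),
    ("words", "array<string>", "features", "vector"),
]
print(transform_schema({"text": "string", "label": "double"}, stages))
```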

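Unique Pipeline stages: a Pipeline's stages should be unique instances, each with its own unique ID. The same instance must not be inserted into a Pipeline twice, but two different instances of the same class (for example, two HashingTF objects) can both be used.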
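Uniqueness matters because stages, and their parameters, are identified by ID. Inserting one instance twice would make those IDs ambiguous, while two separate instances of the same class are fine. The sketch below uses a hypothetical `Stage` class with `uuid`-based IDs that loosely imitate Spark's `uid` strings; Spark performs a similar duplicate check of its own, with its own error message.

```python
import uuid
from typing import List


class Stage:
    def __init__(self, kind: str):
        # Each instance gets its own ID, e.g. "HashingTF_1a2b3c4d5e6f" (format is illustrative).
        self.uid = f"{kind}_{uuid.uuid4().hex[:12]}"


def validate_stages(stages: List[Stage]) -> None:
    uids = [s.uid for s in stages]
    if len(set(uids)) != len(uids):
        raise ValueError("a Pipeline cannot contain the same stage instance twice")


tf1, tf2 = Stage("HashingTF"), Stage("HashingTF")
validate_stages([tf1, tf2])  # OK: same class, different instances
try:
    validate_stages([tf1, tf1])  # the same instance inserted twice
except ValueError as err:
    print(err)
```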

A Param is a named parameter with self-contained documentation.

A ParamMap is a set of (parameter, value) pairs.
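A single ParamMap can hold parameters for several stages at once because each Param is tied to the unique ID of the stage that owns it. The plain-Python sketch below imitates that idea. The `Param` dataclass and the ID strings are hypothetical; what carries over to PySpark is that its ParamMap is likewise an ordinary Python dict keyed by Param objects, as in the `paramMap` example in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen => hashable, so it can be a dict key
class Param:
    parent: str  # unique ID of the owning stage
    name: str
    doc: str     # self-contained documentation

    def __str__(self) -> str:
        return f"{self.parent}__{self.name}"


lr1_iter = Param("LogisticRegression_a1", "maxIter", "max number of iterations (>= 0)")
lr2_iter = Param("LogisticRegression_b2", "maxIter", "max number of iterations (>= 0)")

# Same parameter name, different parents: both fit in one ParamMap without clashing.
param_map = {lr1_iter: 10, lr2_iter: 20}
for param, value in param_map.items():
    print(f"{param}: {value}  ({param.doc})")
```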

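There are two main ways to pass parameters to an algorithm:

1. Set parameters on an instance. For example, if lr is an instance of LogisticRegression, calling lr.setMaxIter(10) makes lr.fit() use at most 10 iterations.

2. Pass a ParamMap to fit() or transform(). Any parameters in the map override parameters previously specified via setter methods.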
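The two ways combine with a fixed precedence: defaults are overridden by values set through setters, and those are overridden by a ParamMap passed to fit(). In PySpark, `fit(dataset, params)` applies the extra map to a copy of the estimator, so the instance's own settings stay unchanged. The hypothetical `ToyLogisticRegression` below performs no real training; it only resolves and returns the parameters it would use, and its defaults are toy values.

```python
from typing import Any, Dict, Optional


class ToyLogisticRegression:
    DEFAULTS = {"maxIter": 100, "regParam": 0.0}  # toy default values

    def __init__(self):
        self._set: Dict[str, Any] = {}  # values set explicitly via setters

    def setMaxIter(self, value: int) -> "ToyLogisticRegression":
        self._set["maxIter"] = value
        return self  # return self so setters can be chained

    def fit(self, data, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        # Precedence: defaults < setter values < ParamMap passed to fit().
        # The merge builds a new dict, so self._set is never modified here.
        effective = {**self.DEFAULTS, **self._set, **(params or {})}
        # A real estimator would train here; the toy just returns the resolved params.
        return effective


lr = ToyLogisticRegression().setMaxIter(10)
print(lr.fit([]))                   # {'maxIter': 10, 'regParam': 0.0}
print(lr.fit([], {"maxIter": 30}))  # {'maxIter': 30, 'regParam': 0.0}
print(lr._set)                      # {'maxIter': 10}: the instance itself is unchanged
```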

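It is often worthwhile to save a Pipeline to disk for later use.

In Spark 1.6, model import/export functionality was added to the Pipeline API.

Most basic Transformers and some of the more basic ML models support this I/O.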
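In PySpark the actual calls are `pipeline.save(path)` / `Pipeline.load(path)` and `model.write().overwrite().save(path)` / `PipelineModel.load(path)`. The plain-Python sketch below only illustrates the underlying idea: persist a pipeline's structure (stage class names plus parameter values) and rebuild it later. The JSON format, the `REGISTRY`, and the `ToyTokenizer` / `ToyHashingTF` classes are hypothetical and unrelated to Spark's on-disk layout.

```python
import json
import os
import tempfile
from typing import Any, List


class ToyTokenizer:
    def __init__(self, inputCol: str = "text", outputCol: str = "words"):
        self.params = {"inputCol": inputCol, "outputCol": outputCol}


class ToyHashingTF:
    def __init__(self, inputCol: str = "words", outputCol: str = "features", numFeatures: int = 1024):
        self.params = {"inputCol": inputCol, "outputCol": outputCol, "numFeatures": numFeatures}


# Maps a saved class name back to the class that can rebuild it.
REGISTRY = {cls.__name__: cls for cls in (ToyTokenizer, ToyHashingTF)}


def save_pipeline(stages: List[Any], path: str) -> None:
    spec = [{"class": type(s).__name__, "params": s.params} for s in stages]
    with open(path, "w") as f:
        json.dump(spec, f)


def load_pipeline(path: str) -> List[Any]:
    with open(path) as f:
        spec = json.load(f)
    # Rebuild each stage from its class name and saved parameter values.
    return [REGISTRY[item["class"]](**item["params"]) for item in spec]


path = os.path.join(tempfile.mkdtemp(), "pipeline.json")
save_pipeline([ToyTokenizer(), ToyHashingTF(numFeatures=16)], path)
restored = load_pipeline(path)
print([(type(s).__name__, s.params) for s in restored])
```

Saving parameters rather than live objects is what makes the reload possible in a fresh process, which is the point of persisting a Pipeline to disk.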

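The following example exercises the basic components: an Estimator (LogisticRegression), the Model (a Transformer) it produces, and Params: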

    from pyspark.sql import SparkSession
    from pyspark.ml.linalg import Vectors
    from pyspark.ml.classification import LogisticRegression

    spark = SparkSession.builder.getOrCreate()

    # Prepare training data from a list of (label, features) tuples.
    training = spark.createDataFrame([
        (1.0, Vectors.dense([0.0, 1.1, 0.1])),
        (0.0, Vectors.dense([2.0, 1.0, -1.0])),
        (0.0, Vectors.dense([2.0, 1.3, 1.0])),
        (1.0, Vectors.dense([0.0, 1.2, -0.5]))], ["label", "features"])

    # Create a LogisticRegression instance. This instance is an Estimator.
    lr = LogisticRegression(maxIter=10, regParam=0.01)
    # Print out the parameters, documentation, and any default values.
    print("LogisticRegression parameters:\n" + lr.explainParams() + "\n")

    # Learn a LogisticRegression model. This uses the parameters stored in lr.
    model1 = lr.fit(training)

    # Since model1 is a Model (i.e., a Transformer produced by an Estimator),
    # we can view the parameters it used during fit().
    # This prints the parameter (name: value) pairs, where names are unique IDs
    # for this LogisticRegression instance.
    print("Model 1 was fit using parameters: ")
    print(model1.extractParamMap())

    # Alternatively, specify parameters using a Python dictionary as a paramMap.
    paramMap = {lr.maxIter: 20}
    paramMap[lr.maxIter] = 30  # Specify 1 Param, overwriting the original maxIter.
    paramMap.update({lr.regParam: 0.1, lr.threshold: 0.55})  # Specify multiple Params.

    # paramMaps are Python dictionaries, so they can be combined.
    paramMap2 = {lr.probabilityCol: "myProbability"}  # Change the output column name.
    paramMapCombined = paramMap.copy()
    paramMapCombined.update(paramMap2)

    # Now learn a new model using the paramMapCombined parameters.
    # paramMapCombined overrides all parameters set earlier via lr.set* methods.
    model2 = lr.fit(training, paramMapCombined)
    print("Model 2 was fit using parameters: ")
    print(model2.extractParamMap())

    # Prepare test data.
    test = spark.createDataFrame([
        (1.0, Vectors.dense([-1.0, 1.5, 1.3])),
        (0.0, Vectors.dense([3.0, 2.0, -0.1])),
        (1.0, Vectors.dense([0.0, 2.2, -1.5]))], ["label", "features"])

    # Make predictions on test data using the Transformer.transform() method.
    # LogisticRegression.transform will only use the 'features' column.
    # Note that model2.transform() outputs a "myProbability" column instead of the usual
    # 'probability' column since we renamed the lr.probabilityCol parameter previously.
    prediction = model2.transform(test)

    # Select the columns of interest from the prediction DataFrame and print them.
    selected = prediction.select("features", "label", "myProbability", "prediction")
    for row in selected.collect():
        print(row)

The following example builds a Pipeline with three stages (Tokenizer, HashingTF, LogisticRegression):

    from pyspark.sql import SparkSession
    from pyspark.ml import Pipeline
    from pyspark.ml.classification import LogisticRegression
    from pyspark.ml.feature import HashingTF, Tokenizer

    spark = SparkSession.builder.getOrCreate()

    # Prepare training documents from a list of (id, text, label) tuples.
    training = spark.createDataFrame([
        (0, "a b c d e spark", 1.0),
        (1, "b d", 0.0),
        (2, "spark f g h", 1.0),
        (3, "hadoop mapreduce", 0.0)], ["id", "text", "label"])

    # Configure an ML pipeline, which consists of three stages: tokenizer, hashingTF, and lr.
    tokenizer = Tokenizer(inputCol="text", outputCol="words")
    hashingTF = HashingTF(inputCol=tokenizer.getOutputCol(), outputCol="features")
    lr = LogisticRegression(maxIter=10, regParam=0.01)
    pipeline = Pipeline(stages=[tokenizer, hashingTF, lr])

    # Fit the pipeline to the training documents.
    model = pipeline.fit(training)

    # Prepare test documents, which are unlabeled (id, text) tuples.
    test = spark.createDataFrame([
        (4, "spark i j k"),
        (5, "l m n"),
        (6, "mapreduce spark"),
        (7, "apache hadoop")], ["id", "text"])

    # Make predictions on the test documents and print the columns of interest.
    prediction = model.transform(test)
    selected = prediction.select("id", "text", "prediction")
    for row in selected.collect():
        print(row)