Ceph put operations

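```shell
# Create a pool named mypool with pg_num = 32 and pgp_num = 32
ceph osd pool create mypool 32 32

# Store /usr/share/backgrounds/day.jpg as the object "day.jpg" in mypool
rados put day.jpg /usr/share/backgrounds/day.jpg -p mypool

# List the objects in the pool
rados ls -p mypool

# Show which placement group and OSDs the object maps to
ceph osd map mypool day.jpg
```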
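`ceph osd map` reports the placement group (PG) and OSDs an object lands on. Conceptually, Ceph hashes the object name and folds that hash into the pool's `pg_num` with a "stable mod"; CRUSH then maps the PG to OSDs. The sketch below reproduces only the folding step. Assumption: it uses `cksum` (CRC-32) as a stand-in hash, whereas Ceph uses rjenkins by default, so the PG ids it prints will not match `ceph osd map`. The helper `object_pg` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of how an object name is folded into a placement group (PG).
# ASSUMPTION: cksum (CRC-32) stands in for Ceph's default rjenkins hash,
# so the PG ids printed here will NOT match `ceph osd map`.

# stable_mod X B: map hash X onto [0, B), staying stable as B grows.
stable_mod() {
  local x=$1 b=$2 bmask=1
  # bmask = (smallest power of two >= B) - 1
  while (( bmask < b )); do
    bmask=$(( bmask << 1 ))
  done
  bmask=$(( bmask - 1 ))
  if (( (x & bmask) < b )); then
    echo $(( x & bmask ))
  else
    echo $(( x & (bmask >> 1) ))
  fi
}

# object_pg NAME POOL_ID PG_NUM: print "<pool_id>.<pg in hex>" (hypothetical helper)
object_pg() {
  local name=$1 pool_id=$2 pg_num=$3 hash
  hash=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
  printf '%s.%x\n' "$pool_id" "$(stable_mod "$hash" "$pg_num")"
}

# Pool id 1 is a placeholder; the real id is listed by `ceph osd lspools`.
object_pg day.jpg 1 32
```

When `pg_num` is a power of two, as with 32 here, the stable mod reduces to `hash % pg_num`; the second branch only matters for other values.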

Using Ceph with Kubernetes volumes

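```shell
mkdir -p /data/volumes
cd /data/volumes

# Create a pool for Kubernetes RBD images with 8 placement groups
ceph osd pool create kubernetes 8
ceph osd lspools

# Create a Ceph user limited to RBD operations on the kubernetes pool
ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes'
```

Output (the generated key):

```
[client.kubernetes]
	key = AQDdGqZgAHAaJBAABTNl15S9fWw1vkyuCcT8sQ==
```

```shell
# View all users and their capabilities
ceph auth ls
```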
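`ceph auth get-key client.kubernetes` prints the bare key directly. If you only have the keyring text printed by `ceph auth get-or-create`, a small awk filter can pull the key out of it. A minimal sketch; the function name `keyring_key` is hypothetical and not part of Ceph.

```shell
#!/usr/bin/env bash
# keyring_key: read keyring text on stdin and print the value of the
# first "key = ..." line. Hypothetical helper, not a Ceph command.
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3; exit }'
}

# Example using the get-or-create output shown above.
keyring_key <<'EOF'
[client.kubernetes]
    key = AQDdGqZgAHAaJBAABTNl15S9fWw1vkyuCcT8sQ==
EOF
```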

```shell
# Base64-encode the key for the Secret; -n stops echo from adding a trailing newline
echo -n AQDdGqZgAHAaJBAABTNl15S9fWw1vkyuCcT8sQ== | base64
```

Output:

```
QVFEZEdxWmdBSEFhSkJBQUJUTmwxNVM5Zld3MXZreXVDY1Q4c1E9PQ==
```

```shell
vi secret.yaml
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
type: "kubernetes.io/rbd"
data:
  key: QVFEZEdxWmdBSEFhSkJBQUJUTmwxNVM5Zld3MXZreXVDY1Q4c1E9PQ==
```

```shell
kubectl apply -f secret.yaml
kubectl get secrets
```
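Plain `echo` appends a newline, and `base64` encodes that newline as well, so the Secret would hold the key followed by `\n`. This sketch compares the two encodings of the key from the output above; `printf '%s'` (like `echo -n`) encodes only the key bytes.

```shell
#!/usr/bin/env bash
key='AQDdGqZgAHAaJBAABTNl15S9fWw1vkyuCcT8sQ=='

# With echo: the trailing newline is encoded too (value ends in "PQo=").
with_newline=$(echo "$key" | base64)
# With printf '%s': only the 40 key bytes are encoded (value ends in "PQ==").
without_newline=$(printf '%s' "$key" | base64)

echo "echo:   $with_newline"
echo "printf: $without_newline"

# Decoded byte counts: 41 for the echo variant, 40 for the printf variant.
printf '%s' "$with_newline" | base64 -d | wc -c
printf '%s' "$without_newline" | base64 -d | wc -c
```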

# Create an RBD image

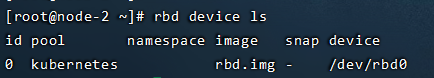

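```shell
# Create a 5 GiB image rbd.img in the kubernetes pool with only the layering feature
# (the kernel RBD client used by the in-tree volume plugin may not support newer features)
rbd create -p kubernetes --image-feature layering rbd.img --size 5G

# Show the image details
rbd info kubernetes/rbd.img

# Show the monitor addresses; these go into the Pod's rbd.monitors list
ceph mon stat
```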
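`rbd info` reports the image size together with its object count. With the default object size of 2^22 bytes (4 MiB, "order 22"), that count follows from simple arithmetic. A minimal sketch, assuming the default order; an image created with a different `--object-size` would give a different count. Objects are allocated lazily, so the pool does not consume the full size up front.

```shell
#!/usr/bin/env bash
# rbd_object_count SIZE_GIB ORDER: number of RADOS objects backing an image
# of SIZE_GIB GiB when each object is 2^ORDER bytes (rounded up).
rbd_object_count() {
  local size_bytes=$(( $1 * 1024 * 1024 * 1024 ))
  local obj_bytes=$(( 1 << $2 ))
  echo $(( (size_bytes + obj_bytes - 1) / obj_bytes ))
}

# 5 GiB image (--size 5G) with the default order 22 (4 MiB objects)
rbd_object_count 5 22   # prints 1280
```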

# Reference the RBD volume in a Pod

```shell
vi pods.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-rbd-demo
spec:
  containers:
  - name: pod-with-rbd
    image: nginx:1.7.9
    imagePullPolicy: IfNotPresent
    ports:
    - name: www
      containerPort: 80
      protocol: TCP
    volumeMounts:
    - name: rbd-demo
      mountPath: /data
  volumes:
  - name: rbd-demo
    rbd:
      monitors:
      - 192.168.31.207:6789
      - 192.168.31.159:6789
      - 192.168.31.198:6789
      pool: kubernetes
      image: rbd.img
      fsType: ext4
      user: kubernetes
      secretRef:
        name: ceph-secret
```
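The monitor addresses in the `rbd` volume must match your cluster, for example as reported by `ceph mon stat`. When they differ between environments, generating the stanza can be less error-prone than hand-editing it. A minimal sketch: `render_rbd_volume` is a hypothetical helper that only prints YAML with the same fields as the Pod spec above; the volume name `rbd-demo` and `fsType: ext4` are fixed to match that spec.

```shell
#!/usr/bin/env bash
# render_rbd_volume POOL IMAGE USER SECRET MON...
# Prints the `volumes:` section for the in-tree rbd volume type.
# Hypothetical helper; the volume name and fsType are hard-coded.
render_rbd_volume() {
  local pool=$1 image=$2 user=$3 secret=$4
  shift 4
  cat <<EOF
  volumes:
  - name: rbd-demo
    rbd:
      monitors:
EOF
  local mon
  for mon in "$@"; do
    printf '      - %s\n' "$mon"
  done
  cat <<EOF
      pool: ${pool}
      image: ${image}
      fsType: ext4
      user: ${user}
      secretRef:
        name: ${secret}
EOF
}

render_rbd_volume kubernetes rbd.img kubernetes ceph-secret \
  192.168.31.207:6789 192.168.31.159:6789 192.168.31.198:6789
```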

# Test

```shell
kubectl apply -f pods.yaml
kubectl get pods
# The RBD image should show up as a device mounted at /data inside the container
kubectl exec -it volume-rbd-demo -- df -h
```