Crawling a news list with Python and saving it as XML (reading an ini configuration file)

I. Analyzing the website

Target site: http://www.cankaoxiaoxi.com/china/szyw/1.shtml

The crawl is two levels deep:

1. List page

2. Content page

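On the list page, each news title sits inside a `ul > li` element. One `li` (in the red box in the screenshot above) is only a separator line, so every `li` has to be checked and skipped if it is empty.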
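
A minimal sketch of this check with BeautifulSoup, run on made-up HTML: the markup, the URLs and the `parse_list` helper are hypothetical and not copied from the real page. It strips whitespace before testing, so an `li` that holds only spaces also counts as empty.

```python
from bs4 import BeautifulSoup

# Hypothetical markup imitating the list page; the middle <li> is the empty separator.
SAMPLE_LIST_HTML = """
<ul class="txt-list-a fz-14">
  <li><a href="/china/20200101/1.shtml">First headline</a><span>2020-01-01</span></li>
  <li></li>
  <li><a href="/china/20200102/2.shtml">Second headline</a><span>2020-01-02</span></li>
</ul>
"""


def parse_list(html):
    """Return (title, time, href) tuples, skipping empty separator <li> elements."""
    soup = BeautifulSoup(html, "html.parser")
    items = []
    for li in soup.select("ul.txt-list-a > li"):
        # A blank <li> (no text after stripping whitespace) is the separator line.
        if not li.get_text(strip=True):
            continue
        items.append((li.a.get_text(strip=True), li.span.get_text(strip=True), li.a["href"]))
    return items


print(parse_list(SAMPLE_LIST_HTML))
```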

On the content page, the article body is contained in `<div class="articleText"></div>`.
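
A minimal sketch of pulling the text out of that div, again on hypothetical markup with a hypothetical `extract_article` helper. It joins paragraphs with a newline to keep the breaks, whereas the crawler code concatenates them directly.

```python
from bs4 import BeautifulSoup

# Hypothetical markup imitating a content page.
SAMPLE_ARTICLE_HTML = """
<div class="articleText">
  <p> First paragraph. </p>
  <p>Second paragraph.</p>
</div>
"""


def extract_article(html):
    """Join the stripped text of every <p> inside div.articleText; None if the div is missing."""
    soup = BeautifulSoup(html, "html.parser")
    article = soup.find("div", class_="articleText")
    if article is None:
        return None
    return "\n".join(p.get_text(strip=True) for p in article.find_all("p"))


print(extract_article(SAMPLE_ARTICLE_HTML))
```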

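Pagination: clicking "next page" increments the number in the URL http://www.cankaoxiaoxi.com/china/szyw/1.shtml, so the list page URLs can be generated in a loop:

```python
for i in range(1, 11):  # list pages 1 to 10
    url = "http://www.cankaoxiaoxi.com/china/szyw/{}.shtml".format(i)
```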

II. Implementation

1. Reading CSV, XML, and ini configuration files: my previous few blog posts include examples of each, so see those for details.

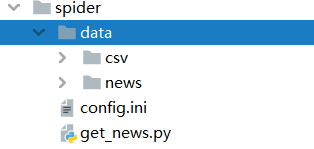

2. Project directory
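
The crawler reads `config.ini` from the working directory and writes `data/csv/news.csv` plus one XML file per article under `article_file_path`. Neither `open()` nor `ElementTree.write()` creates missing folders, so they have to exist before a run. A minimal sketch that creates them, assuming the XML folder is `data/xml` (a hypothetical value, as is the helper name `ensure_dirs`):

```python
import os

# Output folders: data/csv comes from the script; data/xml is an assumed
# value for article_file_path.
REQUIRED_DIRS = [os.path.join("data", "csv"), os.path.join("data", "xml")]


def ensure_dirs(base="."):
    """Create the output folders under base (no error if they exist) and return their paths."""
    created = []
    for d in REQUIRED_DIRS:
        path = os.path.join(base, d)
        os.makedirs(path, exist_ok=True)
        created.append(path)
    return created
```

Calling `ensure_dirs()` once at the top of the `__main__` block would be enough.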

3. The ini configuration file
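
The script reads a single value, `article_file_path` in the `[article]` section, and uses it as a filename prefix for the XML files. A minimal sketch of such a file and of reading it with `configparser`; the value `data/xml/` is an assumption, and the file content is inlined with `read_string` so the example runs on its own:

```python
import configparser

# Hypothetical config.ini contents: the [article] section and the
# article_file_path key come from the script; the value is assumed.
CONFIG_TEXT = """
[article]
article_file_path = data/xml/
"""

config = configparser.ConfigParser()
# With a real file: config.read("config.ini", encoding="utf-8")
config.read_string(CONFIG_TEXT)

article_file_path = config["article"]["article_file_path"]
# The script appends the article index and ".xml" to this prefix.
print(article_file_path + "1" + ".xml")  # prints data/xml/1.xml
```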

4. Code

```python
import configparser
import csv
import time
import xml.etree.ElementTree as ET
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup
from faker import Faker

# Faker returns a different random User-Agent string on each call.
fake = Faker()


def get_soup(url, encoding="utf-8"):
    """Download url and return a BeautifulSoup object, or None on failure."""
    time.sleep(1)  # pause between requests so the server is not hammered
    headers = {"User-Agent": fake.user_agent()}
    try:
        resp = requests.get(url, headers=headers, timeout=10)
        resp.raise_for_status()
        resp.encoding = encoding
        return BeautifulSoup(resp.text, "lxml")
    except Exception as e:
        print("get_soup: %s : %s" % (type(e), url))
        return None


def get_news_item(url_template):
    """Collect the time, title and link of every news item on list pages 1-10."""
    news_list = []
    for page in range(1, 11):
        # Format into a new variable so the {} placeholder survives for the next page.
        page_url = url_template.format(page)
        soup = get_soup(page_url)
        if soup is None:
            continue
        news_ul = soup.find("ul", {"class": "txt-list-a fz-14"})
        if news_ul is None:
            print("get_news_item: no news list on %s" % page_url)
            continue
        for li in news_ul.find_all("li"):
            # Skip the empty <li> used as a separator line.
            if len(li.text.strip()) == 0:
                continue
            try:
                news_list.append({
                    "time": li.span.text.strip(),
                    "title": li.a.text.strip(),
                    # urljoin keeps absolute links and resolves relative ones.
                    "link": urljoin(page_url, li.a["href"]),
                })
            except Exception as e:
                print("get_news_item: %s : %s" % (type(e), page_url))
    return news_list


def get_news_info(news_list, article_file_path, article_encoding="utf-8"):
    """Fetch each article body and save it as one XML file plus one CSV row."""
    index = 1
    # "w" starts a fresh file with a single header row; csv needs newline="".
    with open("data/csv/news.csv", "w", encoding="utf-8", newline="") as csv_file:
        writer = csv.writer(csv_file)
        writer.writerow(["id", "标题", "时间", "链接", "文章"])  # id, title, time, link, article
        for new in news_list:
            new_link = new.get("link")
            soup = get_soup(new_link)
            if soup is None:
                continue
            try:
                article = soup.find("div", {"class": "articleText"})
                article_text = "".join(p.text.strip() for p in article.find_all("p"))

                # One XML document per article: <new id="..."><title/><time/><article/></new>
                new_et = ET.Element("new")
                new_et.set("id", str(index))
                ET.SubElement(new_et, "title").text = new.get("title")
                ET.SubElement(new_et, "time").text = new.get("time")
                ET.SubElement(new_et, "article").text = article_text
                tree = ET.ElementTree(new_et)
                tree.write(article_file_path + str(index) + ".xml",
                           encoding=article_encoding, xml_declaration=True)

                writer.writerow([str(index), new.get("title"), new.get("time"),
                                 new.get("link"), article_text])
                print("page %d written successfully" % index)
                index += 1
            except Exception as e:
                print("get_news_info: %s : %s" % (type(e), new_link))


if __name__ == "__main__":
    # Read the configuration file
    config = configparser.ConfigParser()
    config.read("config.ini", encoding="utf-8")
    url = "http://www.cankaoxiaoxi.com/china/szyw/{}.shtml"
    news_list = get_news_item(url)
    get_news_info(news_list, config["article"]["article_file_path"])
```

5. Crawl results

XML file
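
Each XML file has the shape built in `get_news_info`. A minimal sketch that rebuilds that structure with placeholder values (not real crawl output) and parses it back; a saved file would be read with `ET.parse(path).getroot()` instead, and it also starts with an XML declaration because of `xml_declaration=True`:

```python
import xml.etree.ElementTree as ET

# Same element layout as the crawler, filled with placeholder values.
new_el = ET.Element("new")
new_el.set("id", "1")
ET.SubElement(new_el, "title").text = "Example title"
ET.SubElement(new_el, "time").text = "2020-01-01"
ET.SubElement(new_el, "article").text = "Example article text"

xml_text = ET.tostring(new_el, encoding="unicode")
print(xml_text)
# <new id="1"><title>Example title</title><time>2020-01-01</time><article>Example article text</article></new>

# Parse it back and read individual fields.
root = ET.fromstring(xml_text)
print(root.get("id"), root.findtext("title"))
```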

CSV file
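
The CSV file can be loaded back with `csv.DictReader`, which keys each value by the header row. A minimal sketch using an in-memory stand-in for `data/csv/news.csv`; the header matches the one the script writes, while the data row is a placeholder:

```python
import csv
import io

# Header as written by the script: id, 标题 (title), 时间 (time), 链接 (link), 文章 (article).
sample = io.StringIO(
    "id,标题,时间,链接,文章\r\n"
    "1,Example title,2020-01-01,http://example.com/1.shtml,Example article text\r\n"
)
# For the real file: open("data/csv/news.csv", newline="", encoding="utf-8")

rows = list(csv.DictReader(sample))
for row in rows:
    print(row["id"], row["标题"], row["时间"])
```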

III. Notes

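- 1. Set an interval between requests so the server is not hit too quickly and does not start refusing them.

- 2. Wrap code in try/except, especially page parsing. Caught exceptions make weak spots in the program easy to find and make the crawler more robust.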
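
Both points can be combined in one small wrapper. A minimal sketch of a hypothetical `polite_call` helper (not part of the original script) that sleeps before each attempt, catches exceptions, and re-raises the last one after a fixed number of retries:

```python
import time


def polite_call(func, *args, delay=1.0, retries=3, **kwargs):
    """Call func(*args, **kwargs) after sleeping `delay` seconds, retrying on any exception.

    Re-raises the last exception if every attempt fails.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for attempt in range(1, retries + 1):
        time.sleep(delay)  # space out requests so the server is not flooded
        try:
            return func(*args, **kwargs)
        except Exception as e:  # broad on purpose: report, then retry
            last_error = e
            print(f"attempt {attempt}/{retries} failed: {type(e).__name__}: {e}")
    raise last_error
```

In the crawler, `get_soup` could fetch with `resp = polite_call(requests.get, url, headers=headers, timeout=10)`; extra keyword arguments are passed through to `requests.get`.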