hdfs dfsadmin

[-report [-live] [-dead] [-decommissioning]]

[-safemode <enter | leave | get | wait>]

[-saveNamespace]

[-rollEdits]

[-restoreFailedStorage true|false|check]

[-refreshNodes]

[-setQuota <quota> <dirname>...<dirname>]

[-clrQuota <dirname>...<dirname>]

[-setSpaceQuota <quota> <dirname>...<dirname>]

[-clrSpaceQuota <dirname>...<dirname>]

[-finalizeUpgrade]

[-rollingUpgrade [<query|prepare|finalize>]]

[-refreshServiceAcl]

[-refreshUserToGroupsMappings]

[-refreshSuperUserGroupsConfiguration]

[-refreshCallQueue]

[-refresh <host:ipc_port> <key> [arg1..argn]]

[-reconfig <datanode|...> <host:ipc_port> <start|status>]

[-printTopology]

[-refreshNamenodes datanode_host:ipc_port]

[-deleteBlockPool datanode_host:ipc_port blockpoolId [force]]

[-setBalancerBandwidth <bandwidth in bytes per second>]

[-fetchImage <local directory>]

[-allowSnapshot <snapshotDir>]

[-disallowSnapshot <snapshotDir>]

[-shutdownDatanode <datanode_host:ipc_port> [upgrade]]

[-getDatanodeInfo <datanode_host:ipc_port>]

[-metasave filename]

[-setStoragePolicy path policyName]

[-getStoragePolicy path]

[-help [cmd]]

-report [-live] [-dead] [-decommissioning]:

Reports basic filesystem information and statistics.

Optional flags may be used to filter the list of displayed datanodes.

-safemode <enter|leave|get|wait>: Safe mode maintenance command.

Safe mode is a Namenode state in which it

1. does not accept changes to the name space (read-only)

2. does not replicate or delete blocks.

The Namenode enters safe mode automatically at startup and

leaves it automatically when the configured minimum

percentage of blocks satisfies the minimum replication

condition. Safe mode can also be entered manually, but then

it can only be turned off manually as well.

-saveNamespace: Save current namespace into storage directories and reset edits log.

Requires safe mode.
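
Because -saveNamespace requires safe mode, a manual checkpoint is usually wrapped in an enter/leave pair. The following is a minimal sketch under stated assumptions, not an official procedure: the run helper and the DRY_RUN variable are hypothetical names introduced here, and by default the script only prints the commands instead of executing them.

```shell
# Sketch: manual checkpoint using only the dfsadmin subcommands listed above.
# DRY_RUN and run() are illustrative helpers, not part of Hadoop.
DRY_RUN="${DRY_RUN:-1}"

run() {
  # Print the command in dry-run mode; execute it otherwise.
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

checkpoint_namespace() {
  run hdfs dfsadmin -safemode enter || return 1
  run hdfs dfsadmin -saveNamespace
  status=$?
  # Safe mode that was entered manually must also be left manually.
  run hdfs dfsadmin -safemode leave
  return "$status"
}

checkpoint_namespace
```

Setting DRY_RUN=0 would execute the commands for real; leaving safe mode is attempted even if the save step fails, so the Namenode is not left read-only by accident.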

-rollEdits: Rolls the edit log.

-restoreFailedStorage: Set/Unset/Check flag to attempt restore of failed storage replicas if they become available.

-refreshNodes: Updates the namenode with the set of datanodes allowed to connect to the namenode.

Namenode re-reads datanode hostnames from the file defined by

dfs.hosts, dfs.hosts.exclude configuration parameters.

Hosts defined in dfs.hosts are the datanodes that are part of

the cluster. If there are entries in dfs.hosts, only the hosts

in it are allowed to register with the namenode.

Entries in dfs.hosts.exclude are datanodes that need to be

decommissioned. Datanodes complete decommissioning when

all the replicas from them are replicated to other datanodes.

Decommissioned nodes are not automatically shut down and

are not chosen for writing new replicas.
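
The decommissioning flow described above can be sketched as follows. This is an illustration only: add_to_exclude is a hypothetical helper, and the host name and file path in the usage comments are placeholders. The real exclude file is whatever dfs.hosts.exclude points to in your configuration.

```shell
# Sketch: mark a datanode for decommissioning.
# add_to_exclude is a hypothetical helper, not part of Hadoop.
add_to_exclude() {
  host="$1"
  exclude_file="$2"
  # Append the host only if it is not already listed (exact line match).
  if ! grep -qxF "$host" "$exclude_file" 2>/dev/null; then
    printf '%s\n' "$host" >> "$exclude_file"
  fi
}

# Example usage (commented out because it changes cluster state):
# add_to_exclude dn3.example.com /path/to/exclude-file
# hdfs dfsadmin -refreshNodes
# hdfs dfsadmin -report -decommissioning
```

The final -report call uses the -decommissioning filter from the usage summary to watch progress until the replicas have been copied elsewhere.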

-finalizeUpgrade: Finalize upgrade of HDFS.

Datanodes delete their previous version working directories,

followed by Namenode doing the same.

This completes the upgrade process.

-rollingUpgrade [<query|prepare|finalize>]:

query: query the current rolling upgrade status.

prepare: prepare a new rolling upgrade.

finalize: finalize the current rolling upgrade.

-metasave <filename>: Save Namenode's primary data structures

to <filename> in the directory specified by hadoop.log.dir property.

<filename> is overwritten if it exists.

<filename> will contain one line for each of the following

1. Datanodes heart beating with Namenode

2. Blocks waiting to be replicated

3. Blocks currently being replicated

4. Blocks waiting to be deleted

-setQuota <quota> <dirname>...<dirname>: Set the quota <quota> for each directory <dirName>.

The directory quota is a long integer that puts a hard limit

on the number of names in the directory tree.

For each directory, attempt to set the quota. An error will be reported if

1. <quota> is not a positive integer, or

2. User is not an administrator, or

3. The directory does not exist or is a file.

Note: A quota of 1 would force the directory to remain empty.
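
Since -setQuota rejects anything that is not a positive integer, a wrapper script may want to check the value first. The sketch below is one way to do that; is_positive_integer is a hypothetical helper and /user/alice is a placeholder directory. It prints the command rather than running it.

```shell
# Sketch: validate a name quota before calling -setQuota.
# is_positive_integer is a hypothetical helper, not part of Hadoop.
is_positive_integer() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty or contains a non-digit
  esac
  [ "$1" -gt 0 ]
}

quota=1000
dir=/user/alice   # hypothetical directory
if is_positive_integer "$quota"; then
  echo "hdfs dfsadmin -setQuota $quota $dir"
else
  echo "invalid quota: $quota" >&2
fi
```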

-clrQuota <dirname>...<dirname>: Clear the quota for each directory <dirName>.

For each directory, attempt to clear the quota. An error will be reported if

1. the directory does not exist or is a file, or

2. user is not an administrator.

It does not fault if the directory has no quota.

-setSpaceQuota <quota> <dirname>...<dirname>: Set the disk space quota <quota> for each directory <dirName>.

The space quota is a long integer that puts a hard limit

on the total size of all the files under the directory tree.

The extra space required for replication is also counted. E.g.

a 1GB file with replication of 3 consumes 3GB of the quota.

Quota can also be specified with a binary prefix for terabytes,

petabytes etc (e.g. 50t is 50TB, 5m is 5MB, 3p is 3PB).

For each directory, attempt to set the quota. An error will be reported if

1. <quota> is not a positive integer, or

2. user is not an administrator, or

3. the directory does not exist or is a file.
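
The arithmetic behind the space quota can be worked through in a few lines. This is a sketch under assumptions: to_bytes is a hypothetical helper, and it assumes 1024-based suffixes k, m, g, t and p, of which only m, t and p appear in the examples above.

```shell
# Sketch: convert a suffixed space quota to bytes and compute raw usage.
# to_bytes is a hypothetical helper; binary (1024-based) suffixes assumed.
to_bytes() {
  num="${1%[kmgtpKMGTP]}"      # numeric part
  suffix="${1#"$num"}"         # trailing unit letter, if any
  case "$suffix" in
    k|K) echo $((num * 1024)) ;;
    m|M) echo $((num * 1024 * 1024)) ;;
    g|G) echo $((num * 1024 * 1024 * 1024)) ;;
    t|T) echo $((num * 1024 * 1024 * 1024 * 1024)) ;;
    p|P) echo $((num * 1024 * 1024 * 1024 * 1024 * 1024)) ;;
    *)   echo "$num" ;;
  esac
}

# A 1GB file with replication 3 consumes three times its size.
file_bytes=$(to_bytes 1g)
replication=3
echo "quota consumed: $((file_bytes * replication)) bytes"
```

When sizing a quota, the replicated figure is the one to budget for, not the logical file size.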

-clrSpaceQuota <dirname>...<dirname>: Clear the disk space quota for each directory <dirName>.

For each directory, attempt to clear the quota. An error will be reported if

1. the directory does not exist or is a file, or

2. user is not an administrator.

It does not fault if the directory has no quota.

-refreshServiceAcl: Reload the service-level authorization policy file

Namenode will reload the authorization policy file.

-refreshUserToGroupsMappings: Refresh user-to-groups mappings

-refreshSuperUserGroupsConfiguration: Refresh superuser proxy groups mappings

-refreshCallQueue: Reload the call queue from config

-refresh: Arguments are <hostname:port> <resource_identifier> [arg1..argn]

Triggers a runtime-refresh of the resource specified by <resource_identifier>

on <hostname:port>. All other args after are sent to the host.

-reconfig <datanode|...> <host:ipc_port> <start|status>:

Starts reconfiguration or gets the status of an ongoing reconfiguration.

The first argument after -reconfig specifies the node type.

Currently, only reloading DataNode's configuration is supported.

-printTopology: Print a tree of the racks and their

nodes as reported by the Namenode

-refreshNamenodes: Takes a datanodehost:port as argument.

For the given datanode, reloads the configuration files,

stops serving the removed block-pools,

and starts serving new block-pools.

-deleteBlockPool: Arguments are datanodehost:port, blockpool id

and an optional argument "force". If force is passed,

block pool directory for the given blockpool id on the given

datanode is deleted along with its contents, otherwise

the directory is deleted only if it is empty. The command

will fail if datanode is still serving the block pool.

Refer to refreshNamenodes to shut down a block pool

service on a datanode.

-setBalancerBandwidth <bandwidth>:

Changes the network bandwidth used by each datanode during

HDFS block balancing.

<bandwidth> is the maximum number of bytes per second

that will be used by each datanode. This value overrides

the dfs.balance.bandwidthPerSec parameter.

--- NOTE: The new value is not persistent on the DataNode.---
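
Because <bandwidth> is given in bytes per second, it is easy to be off by a factor of 1024. A small sketch of the conversion follows; the 10 MB/s figure is only an illustrative value, and the command is printed rather than executed.

```shell
# Sketch: express a balancer bandwidth limit in bytes per second.
mb_per_sec=10                                  # illustrative value
bandwidth=$((mb_per_sec * 1024 * 1024))        # 10 MiB/s in bytes
echo "hdfs dfsadmin -setBalancerBandwidth $bandwidth"
```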

-fetchImage <local directory>:

Downloads the most recent fsimage from the Name Node and saves it in the specified local directory.

-allowSnapshot <snapshotDir>:

Allow snapshots to be taken on a directory.

-disallowSnapshot <snapshotDir>:

Do not allow snapshots to be taken on a directory any more.

-shutdownDatanode <datanode_host:ipc_port> [upgrade]

Submit a shutdown request for the given datanode. If an optional

"upgrade" argument is specified, clients accessing the datanode

will be advised to wait for it to restart and the fast start-up

mode will be enabled. When the restart does not happen in time,

clients will time out and ignore the datanode. In that case, the

fast start-up mode will also be disabled.

-getDatanodeInfo <datanode_host:ipc_port>

Get the information about the given datanode. This command can

be used for checking if a datanode is alive.

-setStoragePolicy path policyName

Set the storage policy for a file/directory.

-getStoragePolicy path

Get the storage policy for a file/directory.

-help [cmd]: Displays help for the given command or all commands if none

is specified.

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

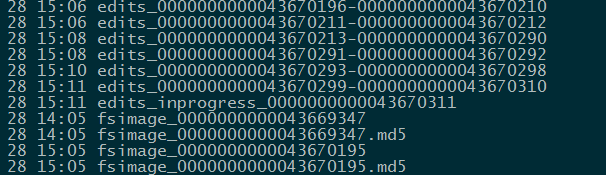

hdfs dfsadmin -rollEdits

Description: rolls a new edit log segment, in sync with the JournalNodes.

Output:

Successfully rolled edit logs.

New segment starts at txid 43670311

After the command succeeds, the edits_inprogress segment on both the active NameNode and the JournalNodes starts at txid 43670311.
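
If a script needs the starting txid of the new segment, it can be pulled out of the command output. The sketch below assumes the message format shown in the log above; extract_txid is a hypothetical helper, and the sample text is copied from that log rather than taken from a live cluster.

```shell
# Sketch: pull the new segment's first txid out of the -rollEdits output.
# extract_txid is a hypothetical helper; it assumes the message format
# shown in the log above.
extract_txid() {
  sed -n 's/^New segment starts at txid \([0-9][0-9]*\).*$/\1/p'
}

sample='Successfully rolled edit logs.
New segment starts at txid 43670311'
printf '%s\n' "$sample" | extract_txid

# Against a live cluster this would be:
# hdfs dfsadmin -rollEdits | extract_txid
```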