Preparation

Download the Hadoop source release (at the time of writing, the latest 2.x release is 2.9.2).

https://hadoop.apache.org/releases.html

The archive contains BUILDING.txt, the official build instructions. Refer to its Linux section:

Requirements:

* Unix System
* JDK 1.7 or 1.8
* Maven 3.0 or later
* Findbugs 1.3.9 (if running findbugs)
* ProtocolBuffer 2.5.0
* CMake 2.6 or newer (if compiling native code), must be 3.0 or newer on Mac
* Zlib devel (if compiling native code)
* openssl devel (if compiling native hadoop-pipes and to get the best HDFS encryption performance)
* Linux FUSE (Filesystem in Userspace) version 2.6 or above (if compiling fuse_dfs)
* Internet connection for first build (to fetch all Maven and Hadoop dependencies)
* python (for releasedocs)
* Node.js / bower / Ember-cli (for YARN UI v2 building)
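As a quick pre-flight check, the following bash sketch reports which of the command-line tools above are not yet on `PATH`. The function name `missing_tools` is hypothetical, and the command names in the usage comment are an assumed mapping of the requirements to executables. Library requirements such as Zlib devel or openssl devel are not commands and cannot be checked this way.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop or BUILDING.txt).
# Prints every command name given as an argument that is NOT found on PATH.
# Returns 0 if all commands were found, 1 otherwise.
missing_tools() {
  local tool status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}

# Usage (assumed command names for the requirements above):
#   missing_tools java mvn protoc cmake gcc make || echo "install the tools listed above first"
```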

Setting up the build environment

JDK (1.8)

https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

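```shell
# Extract the JDK
tar -zxf /opt/bak/jdk-8u192-linux-x64.tar.gz -C /opt/app/
# Configure the JDK environment variables
vi /etc/profile

# Add the following lines to /etc/profile:
# JAVA_HOME
# export turns the variable into an environment variable, inherited by child processes
export JAVA_HOME=/opt/app/jdk1.8.0_192/
# "." is the current directory and ":" is the separator
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$CLASSPATH
# $PATH expands to its current value, so this prepends the JDK directories (like i = 3 + i)
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

# Reload the environment variables
source /etc/profile
# Verify the JDK
java -version
# java version "1.8.0_192"
# Java(TM) SE Runtime Environment (build 1.8.0_192-b12)
# Java HotSpot(TM) 64-Bit Server VM (build 25.192-b12, mixed mode)
```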
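To confirm from a script that the JDK on `PATH` is one BUILDING.txt accepts (1.7 or 1.8), the version string can be pulled out of `java -version`. This is a sketch that assumes the output format shown above (`java version "1.8.0_192"`; OpenJDK prints `openjdk version "..."`, which also matches). Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the JDK or Hadoop).
# Extract the quoted version from `java -version` output,
# e.g. 'java version "1.8.0_192"' -> 1.8.0_192
java_version_from_output() {
  printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only for 1.7.x or 1.8.x, the JDK versions listed in BUILDING.txt.
is_supported_jdk() {
  case "$1" in
    1.7.*|1.8.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage (java -version writes to stderr, hence 2>&1):
#   v="$(java_version_from_output "$(java -version 2>&1)")"
#   is_supported_jdk "$v" && echo "JDK $v OK" || echo "JDK $v is not supported"
```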

Maven (3.6.0)

http://maven.apache.org/download.cgi

```shell
# Extract Maven
tar -zxf /opt/bak/apache-maven-3.6.0-bin.tar.gz -C /opt/app/
# Configure the environment variables
vi /etc/profile

# Add the following lines to /etc/profile:
# MAVEN_HOME
export MAVEN_HOME=/opt/app/apache-maven-3.6.0/
export PATH=$PATH:$MAVEN_HOME/bin

# Reload the environment variables
source /etc/profile
# Verify Maven
mvn -v
# Apache Maven 3.6.0 (97c98ec64a1fdfee7767ce5ffb20918da4f719f3; 2018-10-25T02:41:47+08:00)
# Maven home: /opt/app/apache-maven-3.6.0
# Java version: 1.8.0_192, vendor: Oracle Corporation, runtime: /opt/app/jdk1.8.0_192/jre
# Default locale: zh_CN, platform encoding: UTF-8
# OS name: "linux", version: "3.10.0-862.el7.x86_64", arch: "amd64", family: "unix"

# Configure the Maven repository (Huawei Cloud mirror)
vim /opt/app/apache-maven-3.6.0/conf/settings.xml
```

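settings.xml (add these elements inside the top-level `<settings>` element; the default file already has a `<mirrors>` section, so put the `<mirror>` entry inside it rather than adding a second one):

```xml
<!-- Local repository path -->
<localRepository>/opt/repo</localRepository>
<!-- Remote repository (mirror) address -->
<mirrors>
  <mirror>
    <id>huaweicloud</id>
    <mirrorOf>central</mirrorOf>
    <url>https://mirrors.huaweicloud.com/repository/maven/</url>
  </mirror>
</mirrors>
```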
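As an alternative to editing the global file, Maven also reads a per-user `~/.m2/settings.xml`, whose values take precedence over `conf/settings.xml`. The sketch below writes a complete minimal user settings file with the same local repository and mirror. Using the user-level file is an assumption of this example, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Maven).
# Writes a minimal settings.xml to $1, using $2 as the local repository path.
# The mirror is the Huawei Cloud mirror used in this article.
write_maven_settings() {
  local target="$1" repo="$2"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
  <localRepository>${repo}</localRepository>
  <mirrors>
    <mirror>
      <id>huaweicloud</id>
      <mirrorOf>central</mirrorOf>
      <url>https://mirrors.huaweicloud.com/repository/maven/</url>
    </mirror>
  </mirrors>
</settings>
EOF
}

# Usage:
#   write_maven_settings "$HOME/.m2/settings.xml" /opt/repo
```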

Ant (1.10.5)

https://ant.apache.org/bindownload.cgi

```shell
# Extract Ant
tar -zxf /opt/bak/apache-ant-1.10.5-bin.tar.gz -C /opt/app/
# Configure the environment variables
vi /etc/profile

# Add the following lines to /etc/profile:
# ANT_HOME
export ANT_HOME=/opt/app/apache-ant-1.10.5/
export PATH=$PATH:$ANT_HOME/bin

# Reload the environment variables
source /etc/profile
# Verify
ant -version
# Apache Ant(TM) version 1.10.5 compiled on July 10 2018
```

Protobuf (must be version 2.5.0)

https://github.com/protocolbuffers/protobuf/releases/tag/v2.5.0

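```shell
# protobuf must be compiled from source: first configure a yum repository and install the build tools
# Remove the existing yum repository files (back them up first if you may need them)
rm -rf /etc/yum.repos.d/*
# Download the Aliyun yum repository configuration
curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
# Install the build tools
yum install -y glibc-headers gcc-c++ make cmake
# Extract protobuf
tar -zxf /opt/bak/protobuf-2.5.0.tar.gz -C /opt/app/
# Compile and install (takes a while)
cd /opt/app/protobuf-2.5.0/
# Check that the build dependencies are satisfied and set the installation prefix
./configure --prefix=/opt/app/protobuf-2.5.0/
# Compile, following the rules in the Makefile
make
# Run the test suite
make check
# Install into the prefix set above
make install
# Refresh the shared library cache (covers only the standard library directories)
ldconfig
# Configure the environment variables
vim /etc/profile

# Add the following lines to /etc/profile:
# LD_LIBRARY_PATH lists extra directories, besides the defaults, to search for shared libraries;
# make install puts the libraries under <prefix>/lib
export LD_LIBRARY_PATH=/opt/app/protobuf-2.5.0/lib:$LD_LIBRARY_PATH
# make install puts protoc under <prefix>/bin
export PATH=$PATH:/opt/app/protobuf-2.5.0/bin

# Reload the environment variables
source /etc/profile
# Verify
protoc --version
# libprotoc 2.5.0
```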
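The Hadoop 2.9.2 build checks the protoc version and stops if it is not 2.5.0, so a different protoc that comes earlier on `PATH` (for example one installed from a distribution package) is a common cause of failures. A small check, as a sketch assuming the `libprotoc X.Y.Z` output format shown above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of protobuf or Hadoop).
# Succeeds only if the given `protoc --version` output reports exactly 2.5.0.
is_protoc_250() {
  [ "$(printf '%s\n' "$1" | awk '{print $2; exit}')" = "2.5.0" ]
}

# Usage:
#   out="$(protoc --version)"
#   is_protoc_250 "$out" && echo "protoc OK: $(command -v protoc)" \
#     || echo "wrong protoc: $out ($(command -v protoc))"
```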

openssl devel

```shell
yum install -y openssl-devel ncurses-devel
```

Snappy (1.1.7; optional, lets Hadoop use Snappy compression)

https://github.com/google/snappy/releases

Building Snappy 1.1.7 requires CMake 3, so install CMake 3 (3.13.2) first.

```shell
# Extract CMake
tar -zxf /opt/bak/cmake-3.13.2.tar.gz -C /opt/app/
# Install gcc (if not already installed)
yum install -y gcc gcc-c++
# Compile and install CMake 3 (takes a while)
cd /opt/app/cmake-3.13.2/
# Check dependencies and set the installation prefix
./bootstrap --prefix=/opt/app/cmake-3.13.2/
# Compile
gmake
# Install
gmake install
# Remove the old cmake installed by yum
yum remove -y cmake
# Configure the environment variables
vim /etc/profile

# Add the following lines to /etc/profile:
# CMake_HOME
export CMake_HOME=/opt/app/cmake-3.13.2/
export PATH=$PATH:$CMake_HOME/bin

# Reload the environment variables
source /etc/profile
# Verify
cmake --version
# cmake version 3.13.2
# CMake suite maintained and supported by Kitware (kitware.com/cmake).
```
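Because the new CMake directory is appended to the end of `PATH`, an old 2.8.x `cmake` from yum would still win if it were not removed. The sketch below checks that the `cmake` actually found is at least 3.0. It relies on GNU `sort -V` for version ordering, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of CMake).
# Returns 0 if version $1 >= version $2 (GNU sort -V orders version strings).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Pull "3.13.2" out of output such as "cmake version 3.13.2".
cmake_version_from_output() {
  printf '%s\n' "$1" | awk '/^cmake version/ {print $3; exit}'
}

# Usage:
#   v="$(cmake_version_from_output "$(cmake --version)")"
#   version_ge "$v" 3.0 || echo "cmake $v is too old; check the PATH order"
```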

Install Snappy

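```shell
# Extract Snappy
tar -zxf /opt/bak/snappy-1.1.7.tar.gz -C /opt/app/
# Compile and install
cd /opt/app/snappy-1.1.7/
# Create a build directory
mkdir build
# Configure and compile
cd build && cmake ../ && make
# Install
make install
# Install the project...
# -- Install configuration: ""
# -- Installing: /usr/local/lib64/libsnappy.a
# -- Installing: /usr/local/include/snappy-c.h
# -- Installing: /usr/local/include/snappy-sinksource.h
# -- Installing: /usr/local/include/snappy.h
# -- Installing: /usr/local/include/snappy-stubs-public.h
# -- Installing: /usr/local/lib64/cmake/Snappy/SnappyTargets.cmake
# -- Installing: /usr/local/lib64/cmake/Snappy/SnappyTargets-noconfig.cmake
# -- Installing: /usr/local/lib64/cmake/Snappy/SnappyConfig.cmake
# -- Installing: /usr/local/lib64/cmake/Snappy/SnappyConfigVersion.cmake
# Verify
ls -lh /usr/local/lib64 | grep snappy
# -rw-r--r--. 1 root root 184K 3月 13 22:23 libsnappy.a
```

Note that with its default options this build installs only the static library libsnappy.a, as the output above shows, whereas the Hadoop build options below look for the shared libsnappy.so. If Hadoop later reports Snappy as unavailable, reconfigure Snappy with `cmake -DBUILD_SHARED_LIBS=ON ../` and reinstall it.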
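Before starting the Hadoop build, it helps to confirm that a shared Snappy library exists, since without `-Drequire.snappy` a missing libsnappy.so silently produces a libhadoop.so without Snappy support. A sketch; the function name is hypothetical, the directory is the one used in this article, and the `-DBUILD_SHARED_LIBS=ON` hint assumes Snappy's CMake build honors that standard CMake option.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Snappy or Hadoop).
# Lists shared Snappy libraries (libsnappy.so*) in directory $1.
# Returns 1 if none exist (e.g. only the static libsnappy.a was installed).
find_shared_snappy() {
  local dir="$1" found=1 f
  for f in "$dir"/libsnappy.so*; do
    [ -e "$f" ] || continue   # glob did not match anything
    echo "$f"
    found=0
  done
  return "$found"
}

# Usage:
#   find_shared_snappy /usr/local/lib64 \
#     || echo "no libsnappy.so found: rebuild Snappy with -DBUILD_SHARED_LIBS=ON"
```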

Starting the build

Build options from the official BUILDING.txt

Maven build goals:

* Clean: `mvn clean [-Preleasedocs]`
* Compile: `mvn compile [-Pnative]`
* Run tests: `mvn test [-Pnative]`
* Create JAR: `mvn package`
* Run findbugs: `mvn compile findbugs:findbugs`
* Run checkstyle: `mvn compile checkstyle:checkstyle`
* Install JAR in M2 cache: `mvn install`
* Deploy JAR to Maven repo: `mvn deploy`
* Run clover: `mvn test -Pclover [-DcloverLicenseLocation=${user.name}/.clover.license]`
* Run Rat: `mvn apache-rat:check`
* Build javadocs: `mvn javadoc:javadoc`
* Build distribution: `mvn package [-Pdist][-Pdocs][-Psrc][-Pnative][-Dtar][-Preleasedocs][-Pyarn-ui]`
* Change Hadoop version: `mvn versions:set -DnewVersion=NEWVERSION`

Build options:

* Use `-Pnative` to compile/bundle native code
* Use `-Pdocs` to generate & bundle the documentation in the distribution (using `-Pdist`)
* Use `-Psrc` to create a project source TAR.GZ
* Use `-Dtar` to create a TAR with the distribution (using `-Pdist`)
* Use `-Preleasedocs` to include the changelog and release docs (requires Internet connectivity)
* Use `-Pyarn-ui` to build YARN UI v2. (Requires Internet connectivity)

Snappy build options:

Snappy is a compression library that can be utilized by the native code. It is currently an optional component, meaning that Hadoop can be built with or without this dependency.

* Use `-Drequire.snappy` to fail the build if libsnappy.so is not found. If this option is not specified and the snappy library is missing, we silently build a version of libhadoop.so that cannot make use of snappy. This option is recommended if you plan on making use of snappy and want to get more repeatable builds.
* Use `-Dsnappy.prefix` to specify a nonstandard location for the libsnappy header files and library files. You do not need this option if you have installed snappy using a package manager.
* Use `-Dsnappy.lib` to specify a nonstandard location for the libsnappy library files. Similarly to snappy.prefix, you do not need this option if you have installed snappy using a package manager.
* Use `-Dbundle.snappy` to copy the contents of the snappy.lib directory into the final tar file. This option requires that `-Dsnappy.lib` is also given, and it ignores the `-Dsnappy.prefix` option. If `-Dsnappy.lib` isn't given, the bundling and building will fail.

OpenSSL build options:

OpenSSL includes a crypto library that can be utilized by the native code. It is currently an optional component, meaning that Hadoop can be built with or without this dependency.

* Use `-Drequire.openssl` to fail the build if libcrypto.so is not found. If this option is not specified and the openssl library is missing, we silently build a version of libhadoop.so that cannot make use of openssl. This option is recommended if you plan on making use of openssl and want to get more repeatable builds.
* Use `-Dopenssl.prefix` to specify a nonstandard location for the libcrypto header files and library files. You do not need this option if you have installed openssl using a package manager.
* Use `-Dopenssl.lib` to specify a nonstandard location for the libcrypto library files. Similarly to openssl.prefix, you do not need this option if you have installed openssl using a package manager.
* Use `-Dbundle.openssl` to copy the contents of the openssl.lib directory into the final tar file. This option requires that `-Dopenssl.lib` is also given, and it ignores the `-Dopenssl.prefix` option. If `-Dopenssl.lib` isn't given, the bundling and building will fail.

Tests options:

* Use `-DskipTests` to skip tests when running the following Maven goals: 'package', 'install', 'deploy' or 'verify'
* `-Dtest=<TESTCLASSNAME>,<TESTCLASSNAME#METHODNAME>,....`
* `-Dtest.exclude=<TESTCLASSNAME>`
* `-Dtest.exclude.pattern=**/<TESTCLASSNAME1>.java,**/<TESTCLASSNAME2>.java`
* To run all native unit tests, use: `mvn test -Pnative -Dtest=allNative`
* To run a specific native unit test, use: `mvn test -Pnative -Dtest=<test>`. For example, to run test_bulk_crc32, you would use: `mvn test -Pnative -Dtest=test_bulk_crc32`

Build

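```shell
# Extract the Hadoop source
tar -zxf /opt/bak/hadoop-2.9.2-src.tar.gz -C /opt/app/
# Build
cd /opt/app/hadoop-2.9.2-src/
mvn clean package -Pdist,native -DskipTests -Dtar
# Alternatively, build with Snappy bundled
mvn clean package -Pdist,native -DskipTests -Dtar -Dbundle.snappy -Dsnappy.lib=/usr/local/lib64
# -Pdist,native : build the binary distribution, including the recompiled native Hadoop libraries
# -DskipTests : skip the tests
# -Dtar : package the resulting distribution as a tar file
# -Dbundle.snappy : bundle Snappy to add Snappy compression support (the release downloaded from the official site does not support it by default)
# -Dsnappy.lib=/usr/local/lib64 : where the Snappy libraries were installed on the build machine
```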
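The long command line can also be assembled by a small script instead of being retyped. This bash sketch only prints the Maven command; the function name is hypothetical. When a Snappy directory is given it also adds `-Drequire.snappy`, which the commands above do not use but which BUILDING.txt recommends so that the build fails instead of silently dropping Snappy support.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop).
# Prints the Maven build command used in this article. Pass a Snappy
# library directory as $1 to add the Snappy flags.
hadoop_build_cmd() {
  local cmd="mvn clean package -Pdist,native -DskipTests -Dtar"
  if [ -n "${1:-}" ]; then
    cmd="$cmd -Drequire.snappy -Dbundle.snappy -Dsnappy.lib=$1"
  fi
  echo "$cmd"
}

# Usage (from /opt/app/hadoop-2.9.2-src/): review the command, then run it.
#   hadoop_build_cmd /usr/local/lib64
#   $(hadoop_build_cmd /usr/local/lib64)
```

The unquoted `$(...)` in the usage relies on word splitting, which is safe here only because none of the arguments contain spaces or glob characters.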

Output of a successful build:

```text
[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary for Apache Hadoop Main 2.9.2:
[INFO]
[INFO] Apache Hadoop Main ................................. SUCCESS [ 23.222 s]
[INFO] Apache Hadoop Build Tools .......................... SUCCESS [ 38.032 s]
[INFO] Apache Hadoop Project POM .......................... SUCCESS [ 13.828 s]
[INFO] Apache Hadoop Annotations .......................... SUCCESS [ 11.047 s]
[INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.281 s]
[INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 30.537 s]
[INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 22.664 s]
[INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 37.050 s]
[INFO] Apache Hadoop Auth ................................. SUCCESS [ 6.176 s]
[INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 6.545 s]
[INFO] Apache Hadoop Common ............................... SUCCESS [01:32 min]
[INFO] Apache Hadoop NFS .................................. SUCCESS [ 5.024 s]
[INFO] Apache Hadoop KMS .................................. SUCCESS [ 10.940 s]
[INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.056 s]
[INFO] Apache Hadoop HDFS Client .......................... SUCCESS [ 25.101 s]
[INFO] Apache Hadoop HDFS ................................. SUCCESS [ 52.534 s]
[INFO] Apache Hadoop HDFS Native Client ................... SUCCESS [ 5.757 s]
[INFO] Apache Hadoop HttpFS ............................... SUCCESS [ 16.739 s]
[INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 15.768 s]
[INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 3.893 s]
[INFO] Apache Hadoop HDFS-RBF ............................. SUCCESS [ 20.197 s]
[INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.044 s]
[INFO] Apache Hadoop YARN ................................. SUCCESS [ 0.058 s]
[INFO] Apache Hadoop YARN API ............................. SUCCESS [ 14.627 s]
[INFO] Apache Hadoop YARN Common .......................... SUCCESS [ 27.408 s]
[INFO] Apache Hadoop YARN Registry ........................ SUCCESS [ 4.724 s]
[INFO] Apache Hadoop YARN Server .......................... SUCCESS [ 0.050 s]
[INFO] Apache Hadoop YARN Server Common ................... SUCCESS [ 11.299 s]
[INFO] Apache Hadoop YARN NodeManager ..................... SUCCESS [ 28.335 s]
[INFO] Apache Hadoop YARN Web Proxy ....................... SUCCESS [ 2.798 s]
[INFO] Apache Hadoop YARN ApplicationHistoryService ....... SUCCESS [ 13.109 s]
[INFO] Apache Hadoop YARN Timeline Service ................ SUCCESS [ 4.428 s]
[INFO] Apache Hadoop YARN ResourceManager ................. SUCCESS [ 20.831 s]
[INFO] Apache Hadoop YARN Server Tests .................... SUCCESS [ 1.059 s]
[INFO] Apache Hadoop YARN Client .......................... SUCCESS [ 5.287 s]
[INFO] Apache Hadoop YARN SharedCacheManager .............. SUCCESS [ 3.199 s]
[INFO] Apache Hadoop YARN Timeline Plugin Storage ......... SUCCESS [ 3.138 s]
[INFO] Apache Hadoop YARN Router .......................... SUCCESS [ 4.267 s]
[INFO] Apache Hadoop YARN TimelineService HBase Backend ... SUCCESS [ 16.384 s]
[INFO] Apache Hadoop YARN Timeline Service HBase tests .... SUCCESS [ 2.344 s]
[INFO] Apache Hadoop YARN Applications .................... SUCCESS [ 0.043 s]
[INFO] Apache Hadoop YARN DistributedShell ................ SUCCESS [ 2.740 s]
[INFO] Apache Hadoop YARN Unmanaged Am Launcher ........... SUCCESS [ 1.604 s]
[INFO] Apache Hadoop YARN Site ............................ SUCCESS [ 0.042 s]
[INFO] Apache Hadoop YARN UI .............................. SUCCESS [ 0.202 s]
[INFO] Apache Hadoop YARN Project ......................... SUCCESS [ 5.631 s]
[INFO] Apache Hadoop MapReduce Client ..................... SUCCESS [ 0.132 s]
[INFO] Apache Hadoop MapReduce Core ....................... SUCCESS [ 17.885 s]
[INFO] Apache Hadoop MapReduce Common ..................... SUCCESS [ 13.105 s]
[INFO] Apache Hadoop MapReduce Shuffle .................... SUCCESS [ 2.950 s]
[INFO] Apache Hadoop MapReduce App ........................ SUCCESS [ 7.437 s]
[INFO] Apache Hadoop MapReduce HistoryServer .............. SUCCESS [ 4.776 s]
[INFO] Apache Hadoop MapReduce JobClient .................. SUCCESS [ 5.024 s]
[INFO] Apache Hadoop MapReduce HistoryServer Plugins ...... SUCCESS [ 1.584 s]
[INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 4.781 s]
[INFO] Apache Hadoop MapReduce ............................ SUCCESS [ 2.414 s]
[INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 3.705 s]
[INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 3.749 s]
[INFO] Apache Hadoop Archives ............................. SUCCESS [ 1.765 s]
[INFO] Apache Hadoop Archive Logs ......................... SUCCESS [ 1.826 s]
[INFO] Apache Hadoop Rumen ................................ SUCCESS [ 4.424 s]
[INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 3.199 s]
[INFO] Apache Hadoop Data Join ............................ SUCCESS [ 2.016 s]
[INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 1.674 s]
[INFO] Apache Hadoop Extras ............................... SUCCESS [ 2.332 s]
[INFO] Apache Hadoop Pipes ................................ SUCCESS [ 4.439 s]
[INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 3.532 s]
[INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [03:14 min]
[INFO] Apache Hadoop Azure support ........................ SUCCESS [ 27.134 s]
[INFO] Apache Hadoop Aliyun OSS support ................... SUCCESS [ 40.460 s]
[INFO] Apache Hadoop Client ............................... SUCCESS [ 6.228 s]
[INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 1.359 s]
[INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 4.537 s]
[INFO] Apache Hadoop Resource Estimator Service ........... SUCCESS [ 14.946 s]
[INFO] Apache Hadoop Azure Data Lake support .............. SUCCESS [ 30.651 s]
[INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 14.665 s]
[INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.023 s]
[INFO] Apache Hadoop Distribution ......................... SUCCESS [ 47.203 s]
[INFO] Apache Hadoop Cloud Storage ........................ SUCCESS [ 0.666 s]
[INFO] Apache Hadoop Cloud Storage Project ................ SUCCESS [ 0.033 s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
```

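Once Hadoop is installed, you can check whether Snappy compression is supported with the following command; the corresponding library files are also under lib/native.

```shell
hadoop checknative
```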
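To check Snappy from a script (for example after deploying to several machines), the relevant line of `hadoop checknative` output can be parsed. This is a sketch that assumes each library is reported on a line of the form `name:  true|false [path]`; verify that against your own output. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop).
# Given `hadoop checknative` output in $1, prints the status (true/false)
# reported for library $2 (e.g. snappy). Assumes lines like "snappy:  true /path".
native_lib_status() {
  printf '%s\n' "$1" | awk -v lib="$2:" '$1 == lib {print $2; exit}'
}

# Usage:
#   out="$(hadoop checknative 2>&1)"
#   [ "$(native_lib_status "$out" snappy)" = "true" ] && echo "Snappy enabled"
```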

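Keep the network connection stable during the build: Maven downloads many dependencies, and a failed download can make the build fail. If that happens, simply run the build again; it may take a few attempts.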

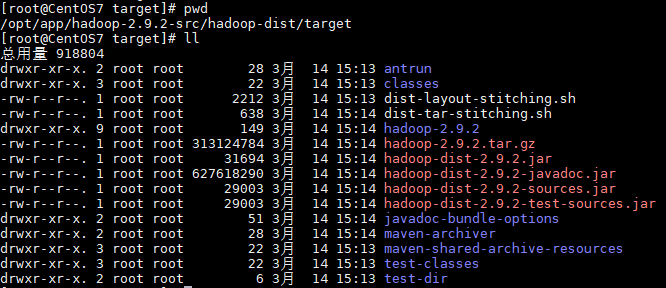

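After a successful build, the generated files, including the hadoop-2.9.2.tar.gz distribution produced by `-Dtar`, are in hadoop-dist/target.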
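The tarball can be inspected before it is installed anywhere, for example to confirm that the native libraries (and Snappy, if bundled) made it in. A sketch, assuming native libraries are packaged under `lib/native/` as described above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop).
# Lists the files under lib/native/ in a Hadoop distribution tarball ($1).
# Returns 1 (grep's status) if there are none.
list_native_libs() {
  tar -tzf "$1" | grep '/lib/native/.'
}

# Usage:
#   list_native_libs /opt/app/hadoop-2.9.2-src/hadoop-dist/target/hadoop-2.9.2.tar.gz \
#     | grep -E 'libhadoop|snappy'
```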