Today we will use a Python crawler to scrape all of Jin Yong's wuxia novels from http://jinyong.zuopinj.com/. The site looks like this:

The Python code is as follows:

    # -*- coding: utf-8 -*-
    import urllib.request
    from bs4 import BeautifulSoup


    def get_chapter(url):
        """Fetch one chapter page and return (title, formatted text)."""
        with urllib.request.urlopen(url) as response:
            content = response.read().decode('utf8')
        soup = BeautifulSoup(content, "lxml")
        title = soup.find('h1').text                # chapter title
        text = soup.find('div', id='htmlContent')   # chapter content
        # Join the text fragments with newlines and indent each paragraph.
        # The exact whitespace literals here are an assumption.
        content = text.get_text('\n', strip=True).replace('\n', '\n    ')
        return title, '    ' + content


    def main():
        books = ['射雕英雄传', '天龙八部', '鹿鼎记', '神雕侠侣', '笑傲江湖', '碧血剑', '倚天屠龙记',
                 '飞狐外传', '书剑恩仇录', '连城诀', '侠客行', '越女剑', '鸳鸯刀', '白马啸西风',
                 '雪山飞狐']
        # directory number of each book on the site, in the same order as `books`
        order = [1, 2, 3, 4, 5, 6, 7, 8, 10, 11, 12, 14, 15, 13, 9]
        # book i covers pages page_range[i] .. page_range[i+1] - 1
        page_range = [1, 43, 94, 145, 185, 225, 248, 289, 309, 329, 341, 362, 363, 364, 375, 385]

        for i, book in enumerate(books):
            for num in range(page_range[i], page_range[i + 1]):
                url = "http://jinyong.zuopinj.com/%s/%s.html" % (order[i], num)
                try:
                    title, chapter = get_chapter(url)
                    # append each chapter to the book's text file
                    with open('E://%s.txt' % book, 'a', encoding='gb18030') as f:
                        f.write(title + '\n\n')
                        f.write(chapter + '\n\n')
                    print(book + ':' + title + '-->写入成功!')
                except Exception as e:
                    print(e)
        print('全部写入完毕!')


    main()
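The way `order` and `page_range` combine into chapter URLs may be easier to see in isolation. The following is only a sketch: `build_urls` is a hypothetical helper that is not part of the script above, and the two-book input is toy data chosen for illustration, not real page numbers from the site.

```python
def build_urls(order, page_range, base="http://jinyong.zuopinj.com"):
    """Yield (book_index, url) for every page number assigned to each book.

    page_range needs one more entry than order: book i covers the
    half-open range page_range[i] .. page_range[i + 1] - 1.
    """
    for i, book_dir in enumerate(order):
        for num in range(page_range[i], page_range[i + 1]):
            yield i, "%s/%s/%s.html" % (base, book_dir, num)


# Toy input (an assumption): two books stored in directories 1 and 2,
# covering pages 1-2 and 3-4 respectively.
urls = list(build_urls([1, 2], [1, 3, 5]))
for book_index, url in urls:
    print(book_index, url)
```

This also shows why `page_range` in the script has 16 entries for 15 books: each book needs both a start and an end boundary.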

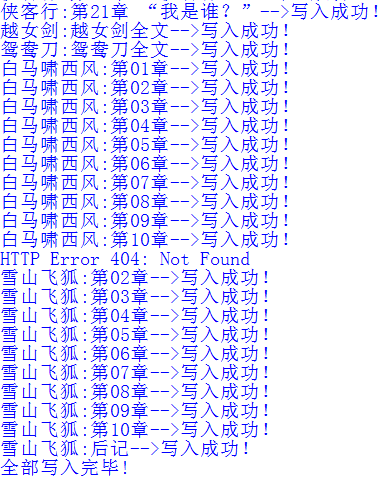

The output is as follows:

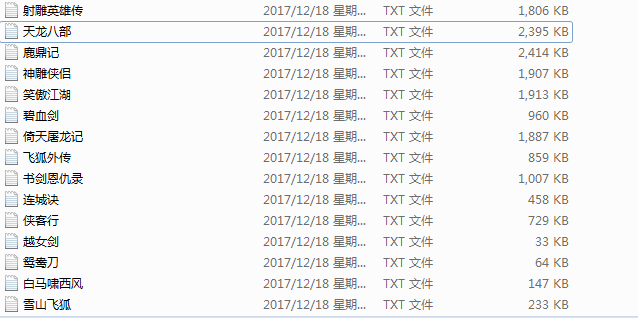

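The “HTTP Error 404: Not Found” messages in the output above appear because the requested page does not exist on the site; they do not affect the completeness of the books. We can check drive E: to see whether the files were downloaded successfully: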
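Since the 404 responses are expected, one option is to treat them separately from genuine failures instead of catching every `Exception`. The sketch below assumes that approach; `fetch_or_none` and its `opener` parameter are hypothetical names introduced for illustration and do not appear in the original script.

```python
import urllib.error
import urllib.request


def fetch_or_none(url, opener=urllib.request.urlopen):
    """Return the decoded page, or None if the server answers 404.

    `opener` is a hypothetical injection point so the function can be
    exercised without network access; by default it is urlopen.
    """
    try:
        with opener(url) as response:
            return response.read().decode('utf8')
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None   # missing page number: skip it quietly
        raise             # any other HTTP error is a real problem
```

A caller could then skip a page when the result is `None` and let other errors surface, which makes an unexpected failure (for example a 500 or a timeout) harder to overlook among the harmless 404 lines.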

All 15 books were downloaded, and the whole process took less than 10 minutes. Crawlers really are powerful!