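Installation of the software and framework mainly follows: http://www.jianshu.com/p/a03aab073a35

Scrapy official documentation: https://docs.scrapy.org/en/latest/intro/install.html (I referred to it for both the installation and the spider)

The program logic and flow mainly follow http://cuiqingcai.com/4421.html.

Other details I looked up on Baidu.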

Environment:

macOS 10.12.3

Python 2.7

Scrapy 1.3.3

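I. Installing the software (Python) and the framework (Scrapy)

macOS ships with Python, but the Scrapy website recommends the latest Python version, so it is best to download and install that.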

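1. Install the supporting environment that pip packages need

Every machine is different, and so are the supporting packages it needs. In short: install whatever is missing. I used Homebrew for this.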

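2. Change the pip source (mirror address)

Don't skip this step. The default index is extremely slow, even through a VPN. Looking back, about half of my problems came down to this.

First, create the configuration file. macOS does not seem to have a pip configuration file by default, so you need to create one yourself.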

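Open Terminal and create a .pip directory under HOME. A new Terminal window starts in HOME, and echo $HOME prints its path:

echo $HOME

mkdir ~/.pip

Next, create the configuration file pip.conf inside that directory:

touch ~/.pip/pip.conf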

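Then open the new pip.conf in any editor and enter the lines below. The trusted-host line is needed because this mirror URL uses plain http, and newer versions of pip ignore an http index whose host is not marked as trusted:

[global]

index-url = http://pypi.mirrors.ustc.edu.cn/simple

trusted-host = pypi.mirrors.ustc.edu.cn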

Save and exit. The pip source is now changed.
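You can also script the step above. Below is a minimal sketch that writes the same [global] section using the standard configparser module. Two caveats: it assumes Python 3 (the article itself uses Python 2.7), and write_pip_conf is a hypothetical helper name. It also overwrites any existing pip.conf.

```python
import configparser
import os


def write_pip_conf(home_dir, index_url, trusted_host=None):
    """Write a [global] section to <home_dir>/.pip/pip.conf and return the file path.

    Hypothetical helper; any existing pip.conf is overwritten.
    """
    pip_dir = os.path.join(home_dir, ".pip")
    os.makedirs(pip_dir, exist_ok=True)  # same effect as `mkdir -p ~/.pip`
    conf_path = os.path.join(pip_dir, "pip.conf")

    config = configparser.ConfigParser()
    config["global"] = {"index-url": index_url}
    if trusted_host:
        # Only needed when index-url uses plain http
        config["global"]["trusted-host"] = trusted_host

    with open(conf_path, "w") as f:
        config.write(f)
    return conf_path
```

For example, call write_pip_conf(os.path.expanduser("~"), "http://pypi.mirrors.ustc.edu.cn/simple", trusted_host="pypi.mirrors.ustc.edu.cn") to produce the file described above.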

There are many mirrors in China; see http://it.taocms.org/08/8567.htm and http://www.jianshu.com/p/a03aab073a35, and use whichever one works for you.
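One rough way to find out which mirror "works" is to time a small request to each candidate index. This is a sketch under a few assumptions:

- It uses Python 3's urllib.request.
- time_index and pick_fastest are hypothetical helpers.
- A single request is only a crude stand-in for real download speed.

```python
import time
import urllib.request

# Candidate index URLs; only the USTC one comes from this article, add others yourself
CANDIDATES = [
    "http://pypi.mirrors.ustc.edu.cn/simple",
]


def time_index(url, timeout=5.0):
    """Return the seconds needed to fetch the start of `url`, or None if it fails."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read(1024)  # read a little so we know the server actually answered
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return None
    return time.monotonic() - start


def pick_fastest(timings):
    """Given {url: seconds or None}, return the fastest reachable url, or None."""
    reachable = {url: t for url, t in timings.items() if t is not None}
    if not reachable:
        return None
    return min(reachable, key=reachable.get)
```

Typical use: pick_fastest({url: time_index(url) for url in CANDIDATES}). Then put the winning URL into pip.conf.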

3. Install the Command Line Tools by running the following in Terminal from the HOME directory:

xcode-select --install

4. Install Scrapy

In Terminal, run: pip install Scrapy

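If the installation fails, read the error message to find the cause. For example, if the error involves six, upgrade the six package: sudo pip install --upgrade six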

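With pip you can install or upgrade most supporting packages. In short: install whichever package is missing, and upgrade whichever has the wrong version. Scrapy's dependencies and their required versions are listed at https://pypi.python.org/pypi/Scrapy/1.3.3. Change the version in the link to see the page for a different Scrapy release.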
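Before upgrading packages one by one, it helps to see which versions are actually installed. This minimal sketch makes two assumptions:

- It needs Python 3.8+ for importlib.metadata, so it will not run on the article's Python 2.7.
- The package list is only an illustrative subset. Compare it against the PyPI page above rather than trusting it.

```python
from importlib import metadata  # Python 3.8+


def installed_versions(names):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Illustrative subset of Scrapy's dependencies; check the PyPI page for the real list
DEPENDENCIES = ["Scrapy", "Twisted", "lxml", "parsel", "w3lib", "pyOpenSSL", "six"]

if __name__ == "__main__":
    for name, version in installed_versions(DEPENDENCIES).items():
        print(name, version or "NOT INSTALLED")
```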

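The other problems I ran into were mainly about which versions of its dependencies Scrapy needs, and which terminal account did the installing. Keep trying; I recommend installing everything consistently under the root account.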

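II. Writing a spider to crawl every image on mzitu

The Scrapy official documentation and http://cuiqingcai.com/4421.html already explain this clearly, so rather than repeat them, here is the code:

run.py, which starts the program: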

```python
from scrapy.cmdline import execute

execute(['scrapy', 'crawl', 'mzitu'])
```

items.py: defines the fields of the Item

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class MzituScrapyItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    name = scrapy.Field()
    image_urls = scrapy.Field()
    images = scrapy.Field()
    image_paths = scrapy.Field()
```

pipelines.py (ImagesPipeline also requires the Pillow library to be installed):

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html
import re

from scrapy import Request
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline


class MzituScrapyPipeline(ImagesPipeline):

    def file_path(self, request, response=None, info=None):
        """
        :param request: each image download request issued by the pipeline
        :param response:
        :param info:
        :return: the image path, inside one folder per gallery
        """
        item = request.meta['item']
        # Strip characters that are illegal in Windows folder names,
        # otherwise the directory cannot be created
        folder_name = re.sub(r'[\\/:*?"<>|]', '', item['name']).strip()
        image_guid = request.url.split('/')[-1]
        filename = u'full/{0}/{1}'.format(folder_name, image_guid)
        return filename

    def get_media_requests(self, item, info):
        """
        :param item: the item returned by spider.py
        :param info:
        :return:
        """
        for img_url in item['image_urls']:
            # Pass the item along so file_path() can read item['name']
            yield Request(img_url, meta={'item': item})

    def item_completed(self, results, item, info):
        image_paths = [x['path'] for ok, x in results if ok]
        if not image_paths:
            raise DropItem("Item contains no images")
        item['image_paths'] = image_paths
        return item
```

settings.py, the project settings file:

```python
# -*- coding: utf-8 -*-

# Scrapy settings for mzitu_scrapy project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#     http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#     http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'mzitu_scrapy'

SPIDER_MODULES = ['mzitu_scrapy.spiders']
NEWSPIDER_MODULE = 'mzitu_scrapy.spiders'

ITEM_PIPELINES = {'mzitu_scrapy.pipelines.MzituScrapyPipeline': 300}
# Root folder where the images pipeline stores downloaded files
IMAGES_STORE = '/tmp/images'
# Days before an already downloaded image is considered expired and fetched again
IMAGES_EXPIRES = 1

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'mzitu_scrapy (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'mzitu_scrapy.middlewares.MzituScrapySpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'mzitu_scrapy.middlewares.MyCustomDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
#ITEM_PIPELINES = {
#    'mzitu_scrapy.pipelines.MzituScrapyPipeline': 300,
#}

# Enable and configure the AutoThrottle extension (disabled by default)
# See http://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```

spider.py, the main program:

```python
# -*- coding: UTF-8 -*-
from scrapy import Request
from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor
from mzitu_scrapy.items import MzituScrapyItem


class Spider(CrawlSpider):
    name = 'mzitu'
    allowed_domains = ['mzitu.com']
    start_urls = ['http://www.mzitu.com/']
    rules = (
        # Follow gallery pages such as /12345, but not image pages such as /12345/2
        Rule(LinkExtractor(allow=(r'http://www\.mzitu\.com/\d{1,6}',),
                           deny=(r'http://www\.mzitu\.com/\d{1,6}/\d{1,6}',)),
             callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        """
        :param response: the response returned by the downloader
        :return:
        """
        # max_num is the page number of the last image in the gallery
        max_num = response.xpath(
            "descendant::div[@class='main']/div[@class='content']"
            "/div[@class='pagenavi']/a[last()-1]/span/text()").extract_first(default="N/A")
        if not max_num.strip().isdigit():
            return  # no pagination found, nothing to request
        for num in range(1, int(max_num) + 1):  # include the last page
            # page_url is the page on which each image is displayed
            page_url = response.url + '/' + str(num)
            yield Request(page_url, callback=self.img_url)

    def img_url(self, response):
        """Extract the gallery name and the real image URL(s) from one image page.

        :param response:
        """
        item = MzituScrapyItem()
        name = response.xpath("descendant::div[@class='main-image']/descendant::img/@alt").extract_first(default="N/A")
        img_urls = response.xpath("descendant::div[@class='main-image']/descendant::img/@src").extract()
        item['image_urls'] = img_urls
        item['name'] = name
        return item
```
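Two pieces of logic in spider.py can be tried out without Scrapy: which gallery URLs the Rule follows, and how many image pages parse_item requests. This sketch uses only the Python 3 standard library, with two stand-ins for Scrapy:

- re.search approximates the LinkExtractor allow/deny check.
- xml.etree.ElementTree replaces Scrapy's XPath engine. It does support the [last()-1] position predicate.

The HTML is a hypothetical, simplified pagination block, not mzitu's real markup. The helper names are made up for illustration.

```python
import re
import xml.etree.ElementTree as ET

# Patterns from the Rule in spider.py
ALLOW = r'http://www\.mzitu\.com/\d{1,6}'
DENY = r'http://www\.mzitu\.com/\d{1,6}/\d{1,6}'

# The parse_item XPath, rewritten in the subset ElementTree understands
PAGENAVI_PATH = (".//div[@class='main']/div[@class='content']"
                 "/div[@class='pagenavi']/a[last()-1]/span")

# Hypothetical markup: the last <a> is "next", the one before it holds the page count
SAMPLE = """<html><body><div class="main"><div class="content"><div class="pagenavi">
<a href="/12345/2"><span>2</span></a>
<a href="/12345/45"><span>45</span></a>
<a href="/12345/2"><span>next</span></a>
</div></div></div></body></html>"""


def is_followed(url):
    """Approximate the LinkExtractor check: ALLOW must match and DENY must not."""
    return re.search(ALLOW, url) is not None and re.search(DENY, url) is None


def max_page(html):
    """Return the number in the second-to-last pagination link, or None."""
    text = ET.fromstring(html).findtext(PAGENAVI_PATH)
    if text is None or not text.strip().isdigit():
        return None
    return int(text)


def page_urls(gallery_url, max_num):
    """Build the image page URLs 1..max_num inclusive, as parse_item does."""
    if max_num is None:
        return []
    return ['{0}/{1}'.format(gallery_url, n) for n in range(1, max_num + 1)]


if __name__ == '__main__':
    print(is_followed('http://www.mzitu.com/12345'))    # True
    print(is_followed('http://www.mzitu.com/12345/2'))  # False
    print(page_urls('http://www.mzitu.com/12345', max_page(SAMPLE))[-1])  # http://www.mzitu.com/12345/45
```

Note that page_urls uses range(1, max_num + 1). With range(1, max_num), the page holding the last image would never be requested.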

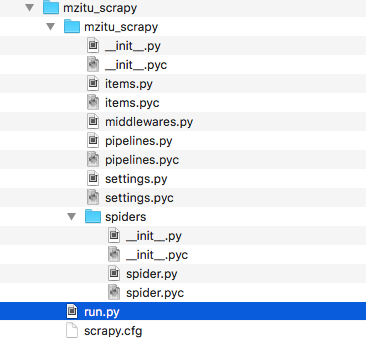

项目结构如下:

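Finally, change into the project directory in Terminal and run:

python run.py

run.py already passes scrapy crawl mzitu to Scrapy and ignores any extra command-line arguments, so this is the same as running scrapy crawl mzitu. Note that the spider's name is mzitu, not mzitu_scrapy.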

If you run into problems, search Baidu for answers; there are quite a few pitfalls.

A few points from my experience:

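1. By default Scrapy names each downloaded file after a hash, the SHA1 of the image URL. To keep the original file names, override the file_path method. The overrides published online work without problems.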

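2. What if you want to sort files into different folders? A folder is just part of the path, so you again modify file_path. The difference is that n files must be written into the same folder. My approach: one item['name'] corresponds to n files, and that name is the folder name. Note that the main program in http://cuiqingcai.com/4421.html is wrong. Its folder names do not match the files at all, which is a serious logic error. I searched for a long time, always assuming that overriding file_path was the wrong idea. Only at the end did I find that the real cause was the mismatch between name and files in the main program.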

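3. You can pick up XPath syntax as you go at http://www.w3school.com.cn/xpath/index.asp; it isn't hard. Pair it with the Chrome XPath extension (XPath Helper) and adjust your expressions as you learn the syntax. I mostly used the extension to check that my expressions were correct.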

Finally, if the firewall keeps you from reaching the Chrome Web Store to install XPath Helper, find a way to get around it.
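Points 1 and 2 above both come down to the string file_path returns, which Scrapy resolves relative to IMAGES_STORE. The sketch below contrasts two naming schemes:

- a hash-based name, modeled on ImagesPipeline's default in Scrapy 1.3 (SHA1 of the URL plus .jpg);
- the per-gallery path from pipelines.py.

It runs without Scrapy. default_path, safe_folder and grouped_path are hypothetical names, and the default naming is an approximation, not Scrapy's own code.

```python
import hashlib
import re


def default_path(url):
    """Approximate the default name: SHA1 hex digest of the URL plus '.jpg'."""
    return 'full/{0}.jpg'.format(hashlib.sha1(url.encode('utf-8')).hexdigest())


def safe_folder(name):
    """Drop characters Windows does not allow in folder names (hypothetical helper)."""
    return re.sub(r'[\\/:*?"<>|]', '', name).strip()


def grouped_path(name, url):
    """Mirror the custom file_path: one folder per item['name'], original file name kept."""
    return 'full/{0}/{1}'.format(safe_folder(name), url.split('/')[-1])


if __name__ == '__main__':
    url = 'http://i.example.com/2017/01/01a.jpg'  # made-up image URL
    print(default_path(url))           # full/<40 hex characters>.jpg
    print(grouped_path('a:b?c', url))  # full/abc/01a.jpg
```

Every image whose item carries the same name gets the same folder prefix, so a whole gallery lands together under IMAGES_STORE. With the settings above, that would be /tmp/images/full/<name>/.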

If you spot errors or possible improvements, corrections are welcome; let's discuss them together.