1 Node Management

1.1 Node Isolation: Method 1

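During hardware upgrades, hardware maintenance, and similar situations, some Nodes need to be isolated so that they leave the scheduling scope of the Kubernetes cluster. Kubernetes provides a mechanism that can both bring a Node into the scheduling scope and take it out again.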

Create the configuration file unschedule_node.yaml and set unschedulable to true in the spec section:

[root@k8smaster01 study]# vi unschedule_node.yaml

apiVersion: v1
kind: Node
metadata:
  name: k8snode03
  labels:
    kubernetes.io/hostname: k8snode03
spec:
  unschedulable: true

[root@k8smaster01 study]# kubectl replace -f unschedule_node.yaml

[root@k8smaster01 study]# kubectl get nodes    # check the isolated node

After isolation, the system no longer schedules newly created Pods onto this Node.

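Tip: the following command can also be used to isolate the Node. The patch must be valid JSON, with spec as an object and unschedulable as an unquoted boolean:

kubectl patch node k8snode03 -p '{"spec":{"unschedulable":true}}'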

Note: taking a Node out of the scheduling scope does not automatically stop the Pods already running on it; the administrator must stop those Pods manually.
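Because isolating a Node leaves its running Pods in place, they still have to be moved off before maintenance. One common option is kubectl drain, which marks the Node unschedulable and evicts its Pods. The sketch below is not part of the original procedure: the function name evacuate_node and the DRY_RUN variable are hypothetical, and by default the command is only printed so it can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: evict Pods from a node before maintenance.
# With DRY_RUN unset or 1 the command is only printed; DRY_RUN=0 runs it.
evacuate_node() {
  node="$1"
  if [ -z "$node" ]; then
    echo "usage: evacuate_node <node-name>" >&2
    return 1
  fi
  # drain refuses to proceed when DaemonSet-managed Pods are present
  # unless --ignore-daemonsets is given.
  if [ "${DRY_RUN:-1}" = "0" ]; then
    kubectl drain "$node" --ignore-daemonsets
  else
    echo "kubectl drain $node --ignore-daemonsets"
  fi
}

evacuate_node k8snode03
```

Setting DRY_RUN=0 runs the drain for real; kubectl uncordon brings the Node back into scheduling afterwards.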

1.2 Node Recovery: Method 1

[root@k8smaster01 study]# vi schedule_node.yaml

apiVersion: v1
kind: Node
metadata:
  name: k8snode03
  labels:
    kubernetes.io/hostname: k8snode03
spec:
  unschedulable: false

[root@k8smaster01 study]# kubectl replace -f schedule_node.yaml

[root@k8smaster01 study]# kubectl get nodes    # confirm the node is schedulable again

Tip: the following command can also be used to restore the Node:

kubectl patch node k8snode03 -p '{"spec":{"unschedulable":false}}'
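Isolation and recovery differ only in the boolean value of spec.unschedulable, and that value must be a JSON boolean, not a quoted string. As a small sketch, the hypothetical helper unschedulable_patch below builds the patch body and rejects anything other than true or false.

```shell
#!/bin/sh
# Hypothetical helper: build the JSON patch body for spec.unschedulable.
# Argument: "true" to isolate the node, "false" to restore it.
unschedulable_patch() {
  case "$1" in
    true|false)
      printf '{"spec":{"unschedulable":%s}}\n' "$1"
      ;;
    *)
      echo "expected true or false, got: $1" >&2
      return 1
      ;;
  esac
}

unschedulable_patch false
```

The output can be passed straight to kubectl, for example: kubectl patch node k8snode03 -p "$(unschedulable_patch false)".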

1.3 Node Isolation: Method 2

[root@k8smaster01 study]# kubectl cordon k8snode01

[root@k8smaster01 study]# kubectl get nodes | grep -E 'NAME|node01'

NAME        STATUS                     ROLES    AGE   VERSION
k8snode01   Ready,SchedulingDisabled   <none>   47h   v1.15.6

1.4 Node Recovery: Method 2

[root@k8smaster01 study]# kubectl uncordon k8snode01

[root@k8smaster01 study]# kubectl get nodes | grep -E 'NAME|node01'

NAME        STATUS   ROLES    AGE   VERSION
k8snode01   Ready    <none>   47h   v1.15.6

1.5 Node Scale-Out

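In production, Nodes often need to be added so that applications can scale horizontally. To add a new Node to a Kubernetes cluster, install Docker, kubelet, and kube-proxy on it, configure the kubelet and kube-proxy startup parameters so that the Master URL points to the Master of the current cluster, and then start these services. Through kubelet's default self-registration mechanism, the new Node joins the existing Kubernetes cluster automatically.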

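Once the Kubernetes Master accepts the new Node's registration, it automatically brings the Node into the cluster's scheduling scope, and containers created afterwards can be scheduled onto it. This is how Kubernetes scales out the Nodes in a cluster.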

Example 1: adding a Node to a Kubernetes cluster deployed with kubeadm.

[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8snode04

[root@localhost study]# vi k8sinit.sh    # node initialization

# Modify Author: xhy
# Modify Date: 2019-06-23 22:19
# Version:
#***************************************************************#
# Initialize the machine. This needs to be executed on every machine.

# Add host domain name.
cat >> /etc/hosts << EOF
172.24.8.71 k8smaster01
172.24.8.72 k8smaster02
172.24.8.73 k8smaster03
172.24.8.74 k8snode01
172.24.8.75 k8snode02
172.24.8.76 k8snode03
172.24.8.41 k8snode04
EOF

# Add docker user
useradd -m docker

# Disable the SELinux.
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Turn off and disable the firewalld.
systemctl stop firewalld
systemctl disable firewalld

# Modify related kernel parameters & Disable the swap.
cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.tcp_tw_recycle = 0
vm.swappiness = 0
vm.overcommit_memory = 1
vm.panic_on_oom = 0
net.ipv6.conf.all.disable_ipv6 = 1
EOF
sysctl -p /etc/sysctl.d/k8s.conf >&/dev/null
swapoff -a
# Comment out every swap entry in /etc/fstab so swap stays off after reboot.
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
modprobe br_netfilter

# Add ipvs modules
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules

# Install rpm
yum install -y conntrack git ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget gcc gcc-c++ make openssl-devel

# Install Docker Compose
sudo curl -L "https://get.daocloud.io/docker/compose/releases/download/1.25.0/docker-compose-`uname -s`-`uname -m`" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose

# Update kernel
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm
yum --disablerepo="*" --enablerepo="elrepo-kernel" install -y kernel-ml-5.4.1-1.el7.elrepo
sed -i 's/^GRUB_DEFAULT=.*/GRUB_DEFAULT=0/' /etc/default/grub
grub2-mkconfig -o /boot/grub2/grub.cfg
yum update -y

# Reboot the machine.
reboot

[root@localhost study]# vi dockerinit.sh    # Docker initialization and installation

# Modify Author: xhy
# Modify Date: 2019-06-23 22:19
# Version:
#***************************************************************#
yum -y install yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum -y install docker-ce-18.09.9-3.el7.x86_64
mkdir /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://dbzucv6w.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF
systemctl restart docker
systemctl enable docker

[root@localhost study]# vi kubeinit.sh    # kubeadm, kubelet, and kubectl installation

# Modify Author: xhy
# Modify Date: 2019-06-23 22:19
# Version:
#***************************************************************#
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubeadm-1.15.6-0.x86_64 kubelet-1.15.6-0.x86_64 kubectl-1.15.6-0.x86_64 --disableexcludes=kubernetes
systemctl enable kubelet

[root@k8smaster01 study]# kubeadm token create    # create a token

dzqqnn.ar4w7xcz9byenf7i

[root@k8smaster01 study]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9

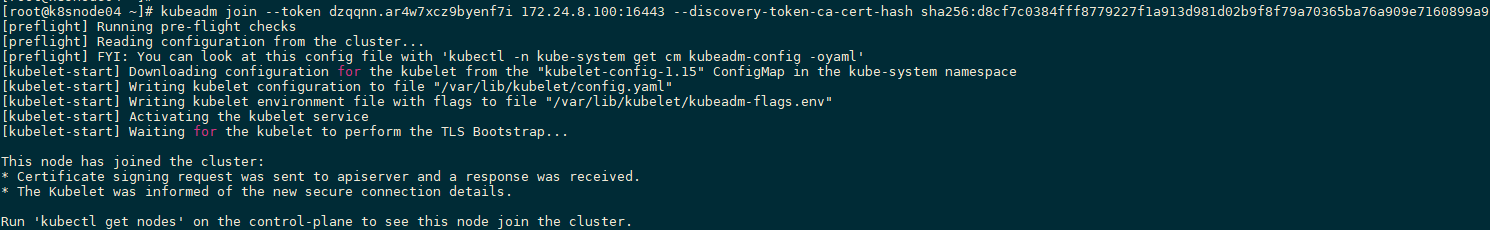

[root@k8snode04 study]# kubeadm join --token dzqqnn.ar4w7xcz9byenf7i 172.24.8.100:16443 --discovery-token-ca-cert-hash sha256:d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9
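The join command is assembled from three values: the API server endpoint, the bootstrap token, and the SHA-256 hash of the CA public key. As a minimal sketch, the hypothetical helper build_join_cmd below checks the shape of the token and hash before printing the command, which catches copy-and-paste mistakes early. kubeadm can also print a ready-made command itself with kubeadm token create --print-join-command.

```shell
#!/bin/sh
# Hypothetical helper: assemble a kubeadm join command from its parts.
# Arguments: <endpoint host:port> <bootstrap token> <CA cert hash, hex only>
build_join_cmd() {
  endpoint="$1"
  token="$2"
  ca_hash="$3"
  # Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
  if ! printf '%s' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "token must look like abcdef.0123456789abcdef" >&2
    return 1
  fi
  # A SHA-256 digest is 64 hexadecimal characters.
  if ! printf '%s' "$ca_hash" | grep -Eq '^[0-9a-f]{64}$'; then
    echo "CA hash must be 64 hex characters" >&2
    return 1
  fi
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$endpoint" "$token" "$ca_hash"
}

build_join_cmd 172.24.8.100:16443 dzqqnn.ar4w7xcz9byenf7i \
  d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9
```

The printed command is meant to be run on the new Node (k8snode04 in this example), not on the Master.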

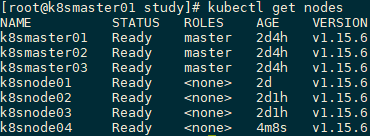

[root@k8smaster01 study]# kubectl get nodes

2 Updating Labels

2.1 Managing Resource Labels

[root@k8smaster01 study]# kubectl label pod kubernetes-dashboard-66cb8889-6ssqh role=mydashboard -n kube-system    # add a label

[root@k8smaster01 study]# kubectl get pods -L role -n kube-system    # view the label

[root@k8smaster01 study]# kubectl label pod kubernetes-dashboard-66cb8889-6ssqh role=yourdashboard --overwrite -n kube-system    # change the label

[root@k8smaster01 study]# kubectl get pods -L role -n kube-system    # view the label

[root@k8smaster01 study]# kubectl label pod kubernetes-dashboard-66cb8889-6ssqh role- -n kube-system    # delete the label

[root@k8smaster01 study]# kubectl get pods -L role -n kube-system    # view the label
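Label values are subject to syntax rules: a value may be empty, otherwise it must be at most 63 characters, start and end with an alphanumeric character, and contain only alphanumerics, dashes, underscores, and dots in between. As a sketch, the hypothetical helper valid_label_value below checks a value before it is passed to kubectl label.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if the argument is a syntactically valid
# Kubernetes label value, 1 otherwise.
valid_label_value() {
  value="$1"
  # An empty value is allowed.
  if [ -z "$value" ]; then
    return 0
  fi
  # At most 63 characters.
  if [ "${#value}" -gt 63 ]; then
    return 1
  fi
  # Alphanumeric at both ends; '-', '_' and '.' allowed in between.
  printf '%s' "$value" | grep -Eq '^[A-Za-z0-9]([-A-Za-z0-9_.]*[A-Za-z0-9])?$'
}

for v in mydashboard yourdashboard -bad; do
  if valid_label_value "$v"; then
    echo "$v: ok"
  else
    echo "$v: invalid"
  fi
done
```

The two values used in this section pass the check, while a value with a leading dash is rejected.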

3 Namespace Management

3.1 Creating Namespaces

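Kubernetes uses namespaces and Contexts to separate different working groups, so that they can share the services of one Kubernetes cluster without interfering with each other.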

[root@k8smaster01 study]# vi namespace-dev.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: dev

[root@k8smaster01 study]# vi namespace-pro.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: pro

[root@k8smaster01 study]# kubectl create -f namespace-dev.yaml

[root@k8smaster01 study]# kubectl create -f namespace-pro.yaml    # create the two namespaces above

[root@k8smaster01 study]# kubectl get namespaces    # view the namespaces
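The two manifest files differ only in the name field, so they can also be generated. The sketch below uses a hypothetical function namespace_manifest that prints a Namespace manifest for a given name; it is an alternative to editing the files by hand, not something the steps above require.

```shell
#!/bin/sh
# Hypothetical helper: print a Namespace manifest for the given name.
namespace_manifest() {
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: $1
EOF
}

# Print both manifests as one multi-document YAML stream.
for ns in dev pro; do
  namespace_manifest "$ns"
  echo "---"
done
```

A single manifest can be piped to the cluster without a file, for example: namespace_manifest dev | kubectl apply -f -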

4 Context Management

4.1 Defining Contexts

Define a Context, that is, a runtime environment, for each of the two working groups. Each runtime environment belongs to one specific namespace.

[root@k8smaster01 study]# kubectl config set-cluster kubernetes --server=https://172.24.8.100:16443

[root@k8smaster01 study]# kubectl config set-context ctx-dev --namespace=dev --cluster=kubernetes --user=devuser

[root@k8smaster01 study]# kubectl config set-context ctx-prod --namespace=pro --cluster=kubernetes --user=produser

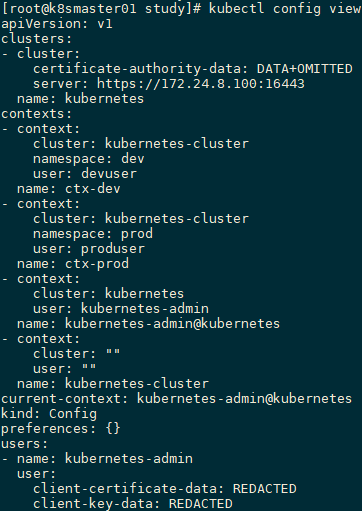

[root@k8smaster01 study]# kubectl config view    # view the defined Contexts

4.2 Setting the Working-Group Environment

Use the kubectl config use-context <context_name> command to set the current runtime environment.

[root@k8smaster01 ~]# kubectl config use-context ctx-dev    # set the current runtime environment to ctx-dev

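Note: with the setting above, the current runtime environment is the one the development group needs. All subsequent operations are performed in the namespace named dev.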
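Before creating resources it can help to confirm which namespace the active Context points to. kubectl config view --minify limits the output to the current Context, and the namespace can be read from it. The sketch below uses a hypothetical filter current_namespace that reads that YAML on standard input; it assumes the field appears on a line of the form "namespace: <name>" and falls back to default when no namespace is set.

```shell
#!/bin/sh
# Hypothetical filter: extract the namespace from the YAML printed by
# `kubectl config view --minify`; prints "default" if none is set.
current_namespace() {
  awk '$1 == "namespace:" { print $2; found = 1; exit }
       END { if (!found) print "default" }'
}

# Sample input shaped like the --minify output for ctx-dev.
current_namespace <<EOF
contexts:
- context:
    cluster: kubernetes
    namespace: dev
    user: devuser
  name: ctx-dev
current-context: ctx-dev
EOF
```

Against a live cluster the same filter would be used as: kubectl config view --minify | current_namespace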

4.3 Creating Resources

[root@k8smaster01 ~]# vi redis-slave-controller.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: redis-slave
  labels:
    name: redis-slave
spec:
  replicas: 2
  selector:
    name: redis-slave
  template:
    metadata:
      labels:
        name: redis-slave
    spec:
      containers:
      - name: slave
        image: kubeguide/guestbook-redis-slave
        ports:
        - containerPort: 6379

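[root@k8smaster01 ~]# kubectl create -f redis-slave-controller.yaml    # create the application in the ctx-dev environment

[root@k8smaster01 ~]# kubectl get pods    # view the Pods

[root@k8smaster01 ~]# kubectl config use-context ctx-prod    # switch to the ctx-prod working group

[root@k8smaster01 ~]# kubectl get pods    # view again; the Pods created under ctx-dev are not listed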

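Conclusion: each of the two working groups has its own runtime environment. Once the current runtime environment is set, the groups' work does not interfere with each other, and both can work in the same Kubernetes cluster at the same time.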