HashSet&HashMap浅析

HashSet特性

1、不能保证元素是有序的

Hashset内部采用hash值进行存储索引,而hash值不保证有序

2、不保存重复元素

由于HashSet底层是将要插入的元素当作map的key进行存储(底层采用HashMap作为数据存储结构),所以不保存相同的数据。

HashSet的构造方法

内部由HashMap支持,当没有指定参数的时候, loadFactor = 0.75 不初始化threshold

public HashSet() {

map = new HashMap<>();//由hashmap支持,内部存储是一个hashmap

}

使用Collection对象初始化HashSet时,threshold初始值为:max(c.size/0.75+1, 16)

javapublic HashSet(Collection<? extends E> c) {

map = new HashMap<>(Math.max((int) (c.size()/.75f) + 1, 16));

addAll(c);

}

可以自定义threshold和loadFactor的初始大小

public HashSet(int initialCapacity, float loadFactor) {

map = new HashMap<>(initialCapacity, loadFactor);

}

单独设置threshold的初始化大小

public HashSet(int initialCapacity) {

map = new HashMap<>(initialCapacity);

}

Jdk1.8会调用tableSizeFor来对initialCapacity进行处理。此方法计算出接近initialCapacity参数的2^n来作为初始化容量

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

this.threshold = tableSizeFor(initialCapacity);

}

static final int tableSizeFor(int cap) {

int n = cap - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}

HashSet添加元素

public boolean add(E e) {

return map.put(e, PRESENT)==null;

}

把添加的元素作为内部维护存储数据的map的key

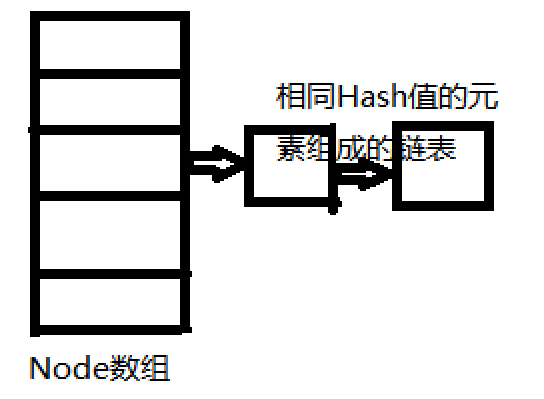

可以看出有个hash的属性,HashMap的底层就是一个Node数组,通过对key的hash值化,在根据这个i = (n - 1) & hash公式获得插入元素的应该存储位置。因此,由于这样的设计,使得HashMap的元素获取为O(1)复杂度。如果发生Hash碰撞,可以看到在Node类中有一个Node的实例Node<K,V> next;,显而易见,会链表的形势来进行存储。数据结构如下

并且,链表中有这样一个优化机制:当链表长度>=8是,链表会进化成红黑树结构

static class Node<K,V> implements Map.Entry<K,V> {

final int hash;

final K key;

V value;

Node<K,V> next;

Node(int hash, K key, V value, Node<K,V> next) {

this.hash = hash;

this.key = key;

this.value = value;

this.next = next;

}

}

HashMap的put()方法

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

// 如果table为初始化或长度为0,进行table的初始化

// table是一个HashMap$Node内部类数组

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

// 如果 通过下标“i =(n-1)&hash”不为空的,则创建一个Node对象添加进tab[i]

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

else {

Node<K,V> e; K k;

// 如果hash值相同且key与Node的key相同,则不做处理

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

// 如果p 是treeNode类型,才用树形结构去进行存储

else if (p instanceof TreeNode)

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

else {

// 插入在p的链表(p.next)尾部

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {

p.next = newNode(hash, key, value, null);

// 如果p的链表长度>=7时,将转换为TreeNode结构存储

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

break;

}

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

break;

p = e;

}

}

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

}

++modCount;

if (++size > threshold)

resize();

afterNodeInsertion(evict);

return null;

}

HashMap扩容机制

final Node<K,V>[] resize() {

Node<K,V>[] oldTab = table;

int oldCap = (oldTab == null) ? 0 : oldTab.length;

int oldThr = threshold;

int newCap, newThr = 0;

//oldTab!=null,则oldCap>0

if (oldCap > 0) {

if (oldCap >= MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return oldTab;

}

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

//当oldCap<16时,是不进行threshold*2的

//如果能进来证明此map是扩容而不是初始化

newThr = oldThr << 1; // double threshold

}

else if (oldThr > 0) // initial capacity was placed in threshold

//进入这个if代表map构造时采用的有参构造

newCap = oldThr;

else { // zero initial threshold signifies using defaults

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

if (newThr == 0) {//当threshold<16,threshold没有扩容,newThr = 0时: threshold扩容为newCap的loadFactor倍

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];

table = newTab;

//如果“oldTab != null”说明是扩容,否则直接返回newTab

if (oldTab != null) {

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

else if (e instanceof TreeNode)

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}