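Keepalived High Availability

Environment preparation:

Prepare three RHEL 7 virtual machines. Two serve as web servers, with Keepalived deployed to make the web service highly available behind the floating VIP 192.168.4.80. The third serves as the client (proxy).

Configure the hostnames, IP addresses, and yum repositories: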

- proxy (eth0:192.168.4.5)

- web1 (eth0:192.168.4.100)

- web2 (eth0:192.168.4.200)

Deploy a default web page on web1 and web2

(web1 is shown as the example)

[root@web1 ~]# yum -y install httpd

[root@web1 ~]# echo "192.168.4.100" > /var/www/html/index.html # Note: on web2, write different page content, "192.168.4.200"

[root@web1 ~]# systemctl restart httpd

[root@web1 ~]# systemctl enable httpd

Install Keepalived on web1 and web2

[root@web1 ~]# yum -y install keepalived

[root@web2 ~]# yum -y install keepalived

Annotated excerpt of the configuration file /etc/keepalived/keepalived.conf:

# Global configuration

global_defs {

notification_email { # Recipient addresses for alert email

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL # ID used to identify this host

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

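# Floating IP configuration

vrrp_instance VI_1 {

state MASTER # Initial state, MASTER or BACKUP. It only sets the starting role; the actual role is decided by priority

interface eth0 # Network interface name

virtual_router_id 51 # Group ID. Nodes with the same number form one group; master and backup must use the same ID (priorities are compared within a group)

priority 100 # Server priority; the node with the highest priority takes the VIP

advert_int 1 # Advertisement interval: VRRP advertisements, which carry the priority, are sent every 1 second

authentication { # Password authentication (prevents an attacker from using the same group ID with a higher priority to become the master)

auth_type PASS

auth_pass 1111 # The password must be identical on master and backup

}

virtual_ipaddress { # Whichever node is the master holds these VIPs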

192.168.200.16

192.168.200.17

192.168.200.18

}

}

......
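
The comments above say that `state` only sets the initial role and that priority decides which node actually becomes master. A minimal shell sketch of that rule follows, assuming each node is written as a hypothetical `name:priority` pair. `elect_master` is an illustrative helper, not part of Keepalived, and it ignores details of real VRRP such as tie-breaking and preemption settings. The example priorities (100 and 50) are the ones this lab assigns to web1 and web2.

```shell
# Illustrative only: pick the node with the highest priority.
# Arguments are hypothetical "name:priority" pairs.
elect_master() {
    printf '%s\n' "$@" | sort -t: -k2,2nr | head -n 1 | cut -d: -f1
}

elect_master web1:100 web2:50   # both nodes alive: prints web1
elect_master web2:50            # web1 is down: prints web2
```

The second call mirrors a failover: once the higher-priority node stops advertising, the remaining node wins the election and takes the VIP.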

Edit the Keepalived configuration file on web1 and web2

Note: in this lab, delete all of the cluster-related configuration after line 32 of the file

[root@web1 ~]# vim /etc/keepalived/keepalived.conf

......

router_id web1

......

virtual_ipaddress {

192.168.4.80 # Floating IP

}

[root@web2 ~]# vim /etc/keepalived/keepalived.conf

......

router_id web2

......

state BACKUP # web2 is the backup server

......

priority 50 # Set a priority lower than that of web1 (100)

......

virtual_ipaddress {

192.168.4.80

}
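
The edits for web1 and web2 differ only in `router_id`, `state`, and `priority`. The sketch below is one way to generate a trimmed configuration for either node so the differences are easy to see. `render_vrrp_conf` is a hypothetical helper, and leaving out the mail and `vrrp_*` lines of the stock file is an assumption of this sketch, not a step of the lab. It prints to standard output and does not touch /etc/keepalived/keepalived.conf.

```shell
# Hypothetical helper: print a trimmed keepalived.conf for one node.
# $1 = router_id, $2 = state (MASTER or BACKUP), $3 = priority, $4 = VIP
render_vrrp_conf() {
    cat <<EOF
global_defs {
    router_id $1
}

vrrp_instance VI_1 {
    state $2
    interface eth0
    virtual_router_id 51
    priority $3
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $4
    }
}
EOF
}

# Example (values taken from this lab):
#   render_vrrp_conf web1 MASTER 100 192.168.4.80
#   render_vrrp_conf web2 BACKUP 50  192.168.4.80
```

Comparing the two outputs with `diff` should show exactly the three lines that the lab changes by hand.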

Restart the keepalived service

[root@web1 ~]# systemctl restart keepalived

[root@web2 ~]# systemctl restart keepalived

Check the VIP:

[root@web1 ~]# ip a s eth0 # Use the ip addr show command to see the VIP; ifconfig does not display it

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:20:21:7b brd ff:ff:ff:ff:ff:ff

inet 192.168.4.100/24 brd 192.168.4.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.4.80/32 scope global eth0 # The floating IP is on web1

valid_lft forever preferred_lft forever

inet6 fe80::34ae:6e89:a7f:f845/64 scope link

valid_lft forever preferred_lft forever

[root@web2 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:4e:86:ab brd ff:ff:ff:ff:ff:ff

inet 192.168.4.200/24 brd 192.168.4.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::1142:46d2:cc3b:ea58/64 scope link

valid_lft forever preferred_lft forever
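
Reading the `ip a s eth0` output by eye works for two hosts. For scripted checks, a small filter can report whether a host currently holds the VIP. The sketch below assumes the one-line output format of `ip -4 -o addr show`; `holds_vip` is a hypothetical helper name, not an existing tool.

```shell
# Hypothetical helper: succeed if the address list on stdin contains the VIP.
# $1 = VIP; stdin = output of `ip -4 -o addr show dev <interface>`
holds_vip() {
    grep -qF "inet $1/"
}

# Example, run on web1 or web2:
#   ip -4 -o addr show dev eth0 | holds_vip 192.168.4.80 && echo "VIP is on this host"
```

Matching on `inet <address>/` with a fixed string avoids treating the dots as wildcards, so 192.168.4.80 does not match a different address by accident.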

Configure the firewall and SELinux

Note: every time Keepalived starts, it automatically adds a DROP firewall rule, which must be flushed!

[root@web1 ~]# iptables -F

[root@web1 ~]# setenforce 0

[root@web2 ~]# iptables -F

[root@web2 ~]# setenforce 0

[root@proxy ~]# ping 192.168.4.80 # The VIP responds to ping

Test

Simulate a failure of web1:

[root@web1 ~]# poweroff # Shut down web1

[root@web2 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:4e:86:ab brd ff:ff:ff:ff:ff:ff

inet 192.168.4.200/24 brd 192.168.4.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.4.80/32 scope global eth0 # The floating IP has moved to web2 automatically

valid_lft forever preferred_lft forever

inet6 fe80::1142:46d2:cc3b:ea58/64 scope link

valid_lft forever preferred_lft forever

Simulate the recovery of web1:

[root@room9pc01 ~]# virsh start web1 # Power web1 back on to simulate recovery

[root@web1 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:20:21:7b brd ff:ff:ff:ff:ff:ff

inet 192.168.4.100/24 brd 192.168.4.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::34ae:6e89:a7f:f845/64 scope link

valid_lft forever preferred_lft forever

[root@web1 ~]# systemctl start keepalived.service # Start the keepalived service again

[root@web1 ~]# iptables -F

[root@web1 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:20:21:7b brd ff:ff:ff:ff:ff:ff

inet 192.168.4.100/24 brd 192.168.4.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.4.80/32 scope global eth0 # The floating IP has moved back to web1

valid_lft forever preferred_lft forever

inet6 fe80::34ae:6e89:a7f:f845/64 scope link

valid_lft forever preferred_lft forever

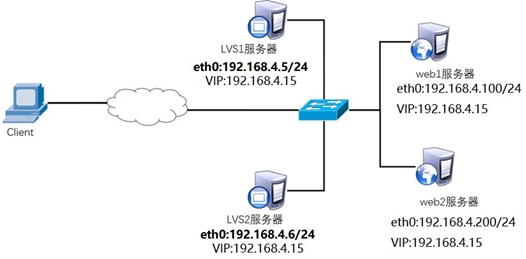

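Keepalived + LVS Director High Availability

Use Keepalived to make the LVS director highly available and remove it as a single point of failure:

- LVS1 director (proxy1): IP 192.168.4.5

- LVS2 director (proxy2): IP 192.168.4.6

- Virtual server VIP: 192.168.4.15

- Real web server IPs: 192.168.4.100 and 192.168.4.200

- Use the weighted round-robin scheduling algorithm, with different weights for the real web servers

Environment preparation

Use five RHEL virtual machines, all with yum configured: one as the client, two as LVS directors, and two as real servers (web servers). The topology is as follows:

Configure the following IP addresses: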

| Hostname | IP |
| --- | --- |
| client | eth0: 192.168.4.10/24 |
| proxy1 | eth0: 192.168.4.5/24 |
| proxy2 | eth0: 192.168.4.6/24 |
| web1 | eth0: 192.168.4.100/24 |
| web2 | eth0: 192.168.4.200/24 |

That is, building on the previous lab, add one more machine, proxy2 (192.168.4.6), and stop the keepalived service on web1 and web2.

[root@web1 ~]# systemctl stop keepalived

[root@web2 ~]# systemctl stop keepalived

[root@proxy ~]# hostnamectl set-hostname proxy1

[root@room9pc01 ~]# virsh start --console proxy2

[root@localhost ~]# hostnamectl set-hostname proxy2

[root@localhost ~]# nmcli con modify eth0 ipv4.method manual ipv4.addresses 192.168.4.6/24 connection.autoconnect yes

[root@localhost ~]# nmcli con up eth0

[root@proxy2 ~]# scp 192.168.4.5:/etc/yum.repos.d/my.repo /etc/yum.repos.d/

[root@proxy2 ~]# yum repolist

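Configure the VIP on web1 and web2 and prevent IP conflicts

(Note: the VIP is not configured by hand on proxy1 and proxy2; Keepalived configures it automatically.)

Configuration on web1 (do the same on web2):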

[root@web1 ~]# cd /etc/sysconfig/network-scripts/

[root@web1 network-scripts]# cp ifcfg-lo{,:0}

Note: the netmask here must be /32 (255.255.255.255), and the network address and the broadcast address must both be the same as the IP address.

[root@web1 network-scripts]# vim ifcfg-lo:0

DEVICE=lo:0

IPADDR=192.168.4.15

NETMASK=255.255.255.255

NETWORK=192.168.4.15

BROADCAST=192.168.4.15

ONBOOT=yes

NAME=lo:0

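Note: because web1 is configured with the same VIP as the directors, an address conflict would occur by default. The four lines added to /etc/sysctl.conf here ensure that only the director responds to requests for 192.168.4.15, while the other hosts do not respond at all.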

[root@web1 ~]# vim /etc/sysctl.conf

Append these 4 lines at the end of the file:

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

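# When an ARP broadcast asks who has 192.168.4.15, this host ignores it and does not reply. This host also does not announce to the network that its loopback (lo) address is 192.168.4.15.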
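
Once the settings are loaded (for example with `sysctl -p`, which reads /etc/sysctl.conf), it is worth confirming that all four values are active before testing the cluster. The sketch below is a hypothetical checker, `check_arp_sysctl`, which assumes the `key = value` format that `sysctl` prints and the values used in this lab (arp_ignore 1, arp_announce 2).

```shell
# Hypothetical helper: verify the four ARP settings from "key = value" lines on stdin.
# Fails if any arp_ignore is not 1, any arp_announce is not 2,
# or fewer than four lines were supplied.
check_arp_sysctl() {
    awk -F' *= *' '
        $1 ~ /\.arp_ignore$/   && $2 != 1 { bad = 1; print "unexpected: " $0 }
        $1 ~ /\.arp_announce$/ && $2 != 2 { bad = 1; print "unexpected: " $0 }
        { seen++ }
        END { exit (bad || seen < 4) ? 1 : 0 }'
}

# Example, run on web1 or web2:
#   sysctl net.ipv4.conf.all.arp_ignore net.ipv4.conf.lo.arp_ignore \
#          net.ipv4.conf.lo.arp_announce net.ipv4.conf.all.arp_announce | check_arp_sysctl
```

A non-zero exit status means at least one value differs from what the lab expects, and the offending line is printed.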

Restart the network service and configure the firewall and SELinux

[root@web1 ~]# systemctl restart network

[root@web1 ~]# systemctl stop firewalld

[root@web1 ~]# setenforce 0

Install Keepalived and ipvsadm on both LVS directors

Note: perform the same steps on both LVS directors

[root@proxy1 ~]# yum -y install keepalived ipvsadm

[root@proxy1 ~]# systemctl enable keepalived # Start keepalived at boot

[root@proxy1 ~]# ipvsadm -C # Clear all rules

[root@proxy1 ~]# ipvsadm -Ln # List the rules

Configure Keepalived to make the LVS (DR mode) director highly available

Configure Keepalived on the LVS1 director (proxy1):

[root@proxy1 ~]# vim /etc/keepalived/keepalived.conf

......

router_id LVS1

......

virtual_ipaddress {

192.168.4.15

}

# Virtual server (VIP) rules, in place of rules set by hand with ipvsadm

virtual_server 192.168.4.15 80 {

delay_loop 6

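lb_algo wrr # LVS scheduling algorithm: wrr, weighted round-robin

lb_kind DR # LVS mode: DR, direct routing

#persistence_timeout 50 # Persistence: a client keeps reaching the same real server for a period of time. Commented out here so the scheduling is visible in the lab.

protocol TCP

# Back-end real server web1 (RIP)

real_server 192.168.4.100 80 {

weight 1 # Weight

TCP_CHECK { # Health check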

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

# Back-end real server web2 (RIP)

real_server 192.168.4.200 80 {

weight 2

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
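
To see what weights 1 and 2 mean for request distribution, the sketch below simulates weighted round-robin in user space. It follows the classic gcd-based description of the wrr scheduler and is an illustration only: `wrr_sequence` and its `server:weight` argument format are hypothetical, the real scheduling happens in the kernel, and the exact order of picks there may differ from this simulation. What should hold either way is the ratio, one request to 192.168.4.100 for every two to 192.168.4.200.

```shell
# Illustrative simulation of weighted round-robin (not the kernel implementation).
# $1 = number of requests; remaining arguments = hypothetical "server:weight" pairs
wrr_sequence() {
    n=$1
    shift
    printf '%s\n' "$@" | awk -F: -v n="$n" '
        function gcd(a, b,   t) { while (b) { t = a % b; a = b; b = t } return a }
        {
            wt = $2 + 0                      # force a numeric weight
            srv[NR - 1] = $1; w[NR - 1] = wt
            if (wt > max) max = wt           # largest weight
            g = (NR == 1) ? wt : gcd(g, wt)  # gcd of all weights
        }
        END {
            if (NR == 0) exit
            i = -1; cw = 0
            while (picked < n) {
                i = (i + 1) % NR
                if (i == 0) {                # new pass: lower the threshold
                    cw -= g
                    if (cw <= 0) cw = max
                }
                if (w[i] >= cw) { print srv[i]; picked++ }
            }
        }'
}

# Six simulated requests with the weights from the configuration above
wrr_sequence 6 192.168.4.100:1 192.168.4.200:2
```

Raising a server's weight raises its share of requests proportionally, which is why web2 (weight 2) is expected to answer twice as often as web1 (weight 1).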

Configure Keepalived on the LVS2 director (proxy2):

[root@proxy1 ~]# scp /etc/keepalived/keepalived.conf 192.168.4.6:/etc/keepalived/keepalived.conf

[root@proxy2 ~]# vim /etc/keepalived/keepalived.conf

......

router_id LVS2

......

state BACKUP

......

priority 50

......

Start keepalived on proxy1 and proxy2

[root@proxy1 ~]# systemctl restart keepalived

[root@proxy1 ~]# iptables -F

[root@proxy2 ~]# systemctl restart keepalived

[root@proxy2 ~]# iptables -F

Test

# Weighted round-robin scheduling is working:

[root@client ~]# curl 192.168.4.15

192.168.4.100

[root@client ~]# curl 192.168.4.15

192.168.4.200

[root@client ~]# curl 192.168.4.15

192.168.4.200

[root@client ~]# curl 192.168.4.15

192.168.4.100

[root@client ~]# curl 192.168.4.15

192.168.4.200

[root@client ~]# curl 192.168.4.15

192.168.4.200
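
Counting the responses is a quick way to confirm the 1:2 ratio without reading each line. The sketch below assumes each response body is a single line holding the server's IP, as set up in index.html earlier; `tally_backends` is a hypothetical helper name.

```shell
# Hypothetical helper: count how many responses came from each back end.
# stdin = one response body per line; output = "<body> <count>", sorted
tally_backends() {
    awk '{ c[$0]++ } END { for (k in c) print k, c[k] }' | sort
}

# Example, run on the client:
#   for i in 1 2 3 4 5 6; do curl -s 192.168.4.15; done | tally_backends
```

For the six responses shown above, the tally would be 2 for 192.168.4.100 and 4 for 192.168.4.200.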

# The LVS director is highly available:

[root@proxy2 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:af:04:25 brd ff:ff:ff:ff:ff:ff

inet 192.168.4.6/24 brd 192.168.4.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::dd7a:452c:ef80:98b3/64 scope link

valid_lft forever preferred_lft forever

[root@proxy1 ~]# poweroff # Shut down proxy1

[root@proxy2 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:af:04:25 brd ff:ff:ff:ff:ff:ff

inet 192.168.4.6/24 brd 192.168.4.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.4.15/32 scope global eth0 # The VIP has moved to proxy2 automatically

valid_lft forever preferred_lft forever

inet6 fe80::dd7a:452c:ef80:98b3/64 scope link

valid_lft forever preferred_lft forever

# The client can still reach the service normally

[root@client ~]# curl 192.168.4.15

192.168.4.100

[root@client ~]# curl 192.168.4.15

192.168.4.200

[root@client ~]# curl 192.168.4.15

192.168.4.200

[root@client ~]# curl 192.168.4.15

192.168.4.100

[root@client ~]# curl 192.168.4.15

192.168.4.200

[root@client ~]# curl 192.168.4.15

192.168.4.200