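The previous post gave a first introduction to Linux user-space device drivers; this post presents a typical case study. Again, if you are interested in developing Linux user-space device drivers, read on; otherwise feel free to skip it.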

Device Drivers in User Space: A Case for Network Device Driver

Hemant Agrawal and Ravi Malhotra, Member, IACSIT

Abstract -- Traditionally, device drivers, especially network drivers, have been implemented and used in the Linux kernel for various reasons. A recent trend, however, is that many network stack vendors are moving towards user-space drivers. Open-source licensing, specifically the GPL, is one strong reason for this move. In the absence of generic guidelines, there are various options for implementing device drivers in user space, each with its own advantages and disadvantages. In this paper, we first cover several issues with user-space device drivers and then give more insight into implementing a network device driver in user space.

Index Terms -- Network drivers, user space, zero copy.

I. INTRODUCTION

Traditionally, device drivers have been implemented in kernel space. In recent times, however, there has been a shift towards running data path applications in the user-space context. Linux user space offers applications several advantages: more robust and flexible process management, a standardized system call interface, simpler resource management, and a large number of libraries for tasks such as XML handling and regular expression parsing. It also makes applications easier to debug by providing memory isolation and independent restart. At the same time, while kernel-space applications need to conform to GPL guidelines, user-space applications are not bound by such restrictions.

User-space data path processing comes with its own overheads. Since network drivers run in kernel context and use kernel-space memory for packet storage, packet data has to be copied from user-space to kernel-space memory and vice versa. In addition, transitions between user mode and kernel mode usually impose a considerable performance overhead, which violates the low-latency and high-throughput requirements of data path applications.

In the rest of this paper, we explore an alternative approach that reduces these overheads for user-space data path applications.

II. MAPPING MEMORY TO USER-SPACE

As an alternative to the traditional I/O model, the Linux kernel gives a user-space application the means to map memory available to the kernel directly into a user-space address range. In the context of device drivers, this can give user-space applications direct access to device memory, including configuration registers and I/O descriptors. Every access by the application to the assigned address range ends up directly accessing the device memory.

There are several Linux system calls which allow this kind of memory mapping, the simplest being the mmap() call. The mmap() call allows the user application to map a physical device address range one page at a time, or a contiguous range of physical memory in multiples of the page size.
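The page-granular mapping described above can be sketched in Python with the standard `mmap` module. This is only an illustration under stated assumptions: a two-page temporary file stands in for a device node, and the helper names `map_region` and `demo` are hypothetical, not part of the paper.

```python
import mmap
import os
import tempfile

PAGE = mmap.PAGESIZE  # mappings are made in whole pages


def map_region(path, offset, length):
    """Map `length` bytes of `path`, starting at `offset`, read/write and shared."""
    if offset % mmap.ALLOCATIONGRANULARITY or length % PAGE:
        raise ValueError("offset and length must be page-aligned")
    fd = os.open(path, os.O_RDWR)
    try:
        # MAP_SHARED: stores reach the underlying object, not a private copy.
        return mmap.mmap(fd, length, flags=mmap.MAP_SHARED,
                         prot=mmap.PROT_READ | mmap.PROT_WRITE, offset=offset)
    finally:
        os.close(fd)  # the mapping remains valid after the descriptor is closed


def demo():
    # Assumption: a two-page temporary file stands in for a device node.
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(b"\x00" * (2 * PAGE))
        path = f.name
    try:
        region = map_region(path, PAGE, PAGE)  # map only the second page
        region[0:4] = b"\xde\xad\xbe\xef"      # an ordinary store into the mapping
        region.flush()
        region.close()
        with open(path, "rb") as f:
            f.seek(PAGE)
            return f.read(4)                   # the store is visible in the object
    finally:
        os.unlink(path)
```

With a real device, the same call would be made on the device node instead of a temporary file, and the stores would land in device memory rather than in a file.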

Other Linux system calls for mapping memory include splice() and vmsplice(), which allow an arbitrary kernel buffer to be read from or written to from user space, while tee() allows a copy between two kernel-space buffers without any access from user space [1].

The mapping between physical memory and user-space memory is typically handled through Translation Look-aside Buffer (TLB) entries. The number of TLB entries in a given processor is limited, so the Linux kernel uses them as a cache. The size of the memory region mapped by each entry is typically restricted to the minimum page size supported by the processor, which is 4 KB.

Linux maps kernel memory using a small set of TLB entries which are fixed at initialization time. For user-space applications, however, the number of TLB entries is limited, and each TLB miss can result in a performance hit. To avoid such penalties, Linux provides the concept of a Huge-TLB, which allows user-space applications to map pages larger than the default minimum page size of 4 KB. This mapping can be used not only for application data but for the text segment as well.
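A short calculation shows why larger pages relieve TLB pressure. The numbers are assumptions chosen for illustration: a hypothetical 64 MiB packet-buffer pool and a 2 MiB huge page size (the sizes actually available depend on the processor).

```python
def pages_needed(region_bytes, page_bytes):
    """Number of pages, and so of TLB entries, needed to cover a region."""
    return -(-region_bytes // page_bytes)  # ceiling division


POOL = 64 * 1024 * 1024   # hypothetical 64 MiB packet-buffer pool
SMALL_PAGE = 4 * 1024     # default minimum page size from the text
HUGE_PAGE = 2 * 1024 * 1024  # assumed huge page size

small_entries = pages_needed(POOL, SMALL_PAGE)  # 16384 mappings
huge_entries = pages_needed(POOL, HUGE_PAGE)    # 32 mappings
```

Covering the same pool takes 512 times fewer mappings with the assumed huge pages, so far fewer translations compete for the limited TLB entries.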

Several efficient mechanisms have been developed in Linux to support zero copy between user space and kernel space, based on memory mapping and other techniques [2]-[4]. Data path applications can use these while continuing to leverage the existing kernel-space network driver implementation. However, they still consume precious CPU cycles, and the per-packet processing cost remains moderately high. Direct access to the hardware from user space eliminates the need for any mechanism to transfer packets back and forth between user space and kernel space, and can thus reduce the per-packet processing cost to a minimum.

III. UIO DRIVERS

Linux provides a standard Userspace I/O (UIO) framework [4] for developing user-space device drivers. The UIO framework defines a small kernel-space component which performs two key tasks:

- Indicate device memory regions to user space.

- Register for device interrupts and provide interrupt indication to user space.

The kernel-space UIO component then exposes the device through a device node such as /dev/uioX, together with a set of sysfs entries under /sys/class/uio/. The user-space component searches for these entries, reads the device address ranges and maps them to user-space memory. The user-space component can then perform all device management tasks, including I/O from the device. For interrupts, however, it needs to perform a blocking read() on the device entry: the kernel component puts the user-space application to sleep and wakes it up once an interrupt is received.
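The discovery and interrupt-wait steps can be sketched as follows. The sketch relies on two properties of the UIO framework: each memory region is described by `name`, `addr` and `size` files under `maps/mapN` in the device's sysfs directory, and a blocking 4-byte read on the device node returns a 32-bit interrupt count. Everything else is an assumption for illustration: the helper names are hypothetical, the address `0xffe24000` is made up, and `demo` fakes the sysfs tree with a temporary directory and the device node with a pipe so that it runs without hardware.

```python
import os
import struct
import tempfile


def _read_attr(path):
    with open(path) as f:
        return f.read().strip()


def read_uio_maps(sysfs_dir):
    """Return [(name, addr, size)] parsed from <sysfs_dir>/maps/mapN/."""
    maps_dir = os.path.join(sysfs_dir, "maps")
    regions = []
    for entry in sorted(os.listdir(maps_dir)):
        base = os.path.join(maps_dir, entry)
        regions.append((_read_attr(os.path.join(base, "name")),
                        int(_read_attr(os.path.join(base, "addr")), 16),
                        int(_read_attr(os.path.join(base, "size")), 16)))
    return regions


def wait_for_interrupt(fd):
    """Block until the next interrupt and return the running interrupt count."""
    data = os.read(fd, 4)  # the caller sleeps here until the device signals
    if len(data) != 4:
        raise OSError("short read from UIO device")
    return struct.unpack("=I", data)[0]


def demo():
    # Assumption: a temporary directory mimics /sys/class/uio/uio0.
    with tempfile.TemporaryDirectory() as root:
        map0 = os.path.join(root, "maps", "map0")
        os.makedirs(map0)
        for name, value in (("name", "regs"), ("addr", "0xffe24000"),
                            ("size", "0x1000")):
            with open(os.path.join(map0, name), "w") as f:
                f.write(value + "\n")
        regions = read_uio_maps(root)
    # Assumption: a pipe mimics /dev/uio0 delivering interrupt count 7.
    r, w = os.pipe()
    os.write(w, struct.pack("=I", 7))
    count = wait_for_interrupt(r)
    os.close(r)
    os.close(w)
    return regions, count
```

On a real system the regions found this way would then be mapped with mmap() on the device node, passing N times the page size as the offset to select map N.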

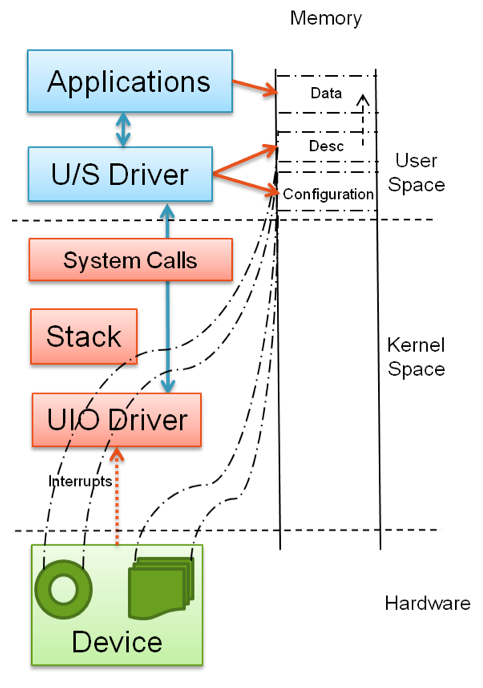

IV. USER SPACE NETWORK DRIVERS

The memory required by a network device driver can be of three types:

- Configuration space: the common configuration registers of the device.

- I/O descriptor space: the descriptors used by the device to access the I/O data.

- I/O data space: the actual I/O data accessed from the device.

For a typical Ethernet device, these correspond to the common device configuration (including MAC configuration), the buffer-descriptor rings, and the packet data buffers, respectively.

In the case of kernel-space network drivers, all three regions are mapped to kernel space. Any access to them from user space is typically abstracted through either ioctl() calls or read()/write() calls, which provide the user-space application with a copy of the data.

User-space network drivers, on the other hand, map all three regions directly to user-space memory. This allows the user-space application to drive the buffer descriptor rings directly from user space. Data buffers can be managed and accessed directly by the application without the overhead of a copy.
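To make "driving the buffer descriptor rings from user space" concrete, here is a small simulation. It is a sketch under loud assumptions: the descriptor layout (16-bit status, 16-bit length, 32-bit buffer offset), the `EMPTY` flag value, and the names `RxRing` and `fake_device_receive` are all hypothetical, and anonymous memory stands in for the mapped descriptor and data regions of a real device.

```python
import mmap
import struct

BD = struct.Struct(">HHI")  # hypothetical descriptor: status, length, buffer offset
EMPTY = 0x8000              # hypothetical flag: descriptor holds no data yet
RING_SIZE = 4
BUF_SIZE = 2048


class RxRing:
    """Receive ring whose descriptors and buffers live in mapped memory."""

    def __init__(self):
        # Assumption: anonymous mappings stand in for the device regions.
        self.bds = mmap.mmap(-1, mmap.PAGESIZE)
        self.data = mmap.mmap(-1, RING_SIZE * BUF_SIZE)
        self.next = 0  # index of the next descriptor the driver inspects
        for i in range(RING_SIZE):
            BD.pack_into(self.bds, i * BD.size, EMPTY, 0, i * BUF_SIZE)

    def poll(self):
        """Return one received frame, or None if the next descriptor is empty."""
        status, length, off = BD.unpack_from(self.bds, self.next * BD.size)
        if status & EMPTY:
            return None
        frame = bytes(self.data[off:off + length])
        # Hand the descriptor back to the device and advance around the ring.
        BD.pack_into(self.bds, self.next * BD.size, EMPTY, 0, off)
        self.next = (self.next + 1) % RING_SIZE
        return frame


def fake_device_receive(ring, slot, payload):
    """Simulate the hardware filling descriptor `slot` on packet arrival."""
    _, _, off = BD.unpack_from(ring.bds, slot * BD.size)
    ring.data[off:off + len(payload)] = payload
    BD.pack_into(ring.bds, slot * BD.size, 0, len(payload), off)
```

For example, after `ring = RxRing()` and `fake_device_receive(ring, 0, b"hello")`, a call to `ring.poll()` returns the frame without any system call. The `bytes(...)` copy is only for convenience in the example; an application could equally process the frame in place.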

Consider the specific example of a user-space network driver for the eTSEC Ethernet controller on a Freescale QorIQ P1020 platform. The configuration space is a single region of 4 KB, aligned on a page boundary. It contains all the device-specific registers, including controller settings, MAC settings and interrupts. In addition, the MDIO region needs to be mapped to allow configuration of the Ethernet PHY devices. The eTSEC provides up to 8 individual buffer descriptor rings, each of which is mapped onto a separate memory region to allow simultaneous access by multiple applications. The data buffers referenced by the descriptor rings are allocated from a single contiguous memory block, which is allocated and mapped to user space at initialization time.

V. CONSTRAINTS OF USER SPACE DRIVERS

Direct access to network devices brings its own set of complications for user-space applications, which were previously hidden by several layers of kernel stack and system calls:

- Sharing a single network device across multiple applications.

- Blocking access to network data.

- Lack of network stack services like TCP/IP.

- Memory management for packet buffers.

- Resource management across application restarts.

- Lack of a standardized driver interface for applications.

Figure 1: Kernel space network driver

Figure 2: User space network driver

A. Sharing Devices

Unlike the Linux socket layer, which allows multiple applications to open sockets (TCP, UDP or raw IP), user-space network drivers allow only a single application to access the data from an interface. However, most network interfaces nowadays provide multiple buffer descriptor rings in both the receive (rx) and transmit (tx) directions. These interfaces also provide some kind of hardware classification mechanism to divert incoming traffic to the different rings. Such a mechanism can be used to map individual buffer descriptor rings to different applications. This in turn limits the number of applications on a single interface to the number of rings supported by the hardware device. An alternative is to develop a dispatcher framework on top of the user-space driver to deal with multiple applications.
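The idea of steering traffic to per-application rings can be modeled in software. The sketch below is not the classification logic of any particular controller: it assumes a hash over the flow 5-tuple, uses `zlib.crc32` only because it is deterministic, and the name `select_ring` and the ring count of 8 are illustrative choices.

```python
import zlib

NUM_RINGS = 8  # assumed ring count; real hardware dictates this limit


def select_ring(src_ip, dst_ip, src_port, dst_port, proto, num_rings=NUM_RINGS):
    """Pick a ring for a flow so every packet of that flow reaches one consumer."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    return zlib.crc32(key) % num_rings


# Each ring is owned by exactly one (hypothetical) application.
ring_owner = {ring: f"app-{ring}" for ring in range(NUM_RINGS)}


def dispatch(flow):
    """Return the application that should receive packets of `flow`."""
    return ring_owner[select_ring(*flow)]
```

Because the mapping depends only on the flow, `dispatch(("10.0.0.1", "10.0.0.2", 1234, 80, "tcp"))` names the same application every time. The hard limit is visible too: at most `NUM_RINGS` applications can be served, which is why the text suggests a software dispatcher as the alternative.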

B. Blocking Access to Data

Traditional socket-based access allows user-space applications to block until data is available on the socket, or to call select()/poll() to wait on multiple inputs. With a user-space driver, in contrast, the application has to constantly poll the buffer descriptor ring for an indication of incoming data. This can be addressed with a blocking read() call on the UIO device entry, which lets the user-space application block on receive interrupts from the Ethernet device. It also gives the application the freedom to decide when it wants to be notified of interrupts: instead of being interrupted for each packet, it can implement a polling mechanism that consumes a certain number of buffer descriptor entries before returning to other processing tasks. When all buffer descriptor entries have been consumed, the application can again perform a read() to block until further data arrives.
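The combination of blocking and budgeted polling described above might look like the following sketch. The assumptions are stated plainly: a `deque` stands in for the receive ring, a pipe stands in for the UIO device entry, and `service` and `BUDGET` are hypothetical names.

```python
import os
import struct
from collections import deque

BUDGET = 4  # hypothetical number of frames handled per call


def service(irq_fd, ring, handle, budget=BUDGET):
    """Handle up to `budget` frames; sleep on the device only when idle."""
    if not ring:
        os.read(irq_fd, 4)  # nothing pending: block until the next interrupt
    handled = 0
    while ring and handled < budget:
        handle(ring.popleft())
        handled += 1
    return handled          # the caller may now do other work


def demo():
    r, w = os.pipe()                   # assumption: stands in for /dev/uioX
    os.write(w, struct.pack("=I", 1))  # one pending interrupt notification
    ring = deque(b"frame%d" % i for i in range(6))
    seen = []
    counts = [service(r, ring, seen.append) for _ in range(3)]
    os.close(r)
    os.close(w)
    return counts, seen
```

In `demo`, the first two calls drain the six queued frames in batches of four and two without sleeping. Only the third call, which finds the ring empty, performs the blocking read.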

C. Lack of Network Stack Services

The Linux network stack and socket interface also abstract basic networking services, such as route lookup and ARP (address resolution), away from applications. In the absence of such services, the application has to either run its own equivalent of a network stack or maintain a local copy of the kernel's routing and neighbor databases.

D. Memory Management for Buffers

The user-space application also needs to manage the buffers provided to the network device for storing and retrieving data. Besides allocating and freeing these buffers, it needs to translate each user-space virtual address to a physical address before handing the buffer to the device. Doing this translation for each buffer at runtime can be very costly. Moreover, since the number of TLB entries in the processor may be limited, performance may suffer. The alternative is to use Huge-TLB to allocate a single large chunk of memory and carve the data buffers out of this chunk.
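When all buffers are carved from one physically contiguous chunk, address translation reduces to adding an offset. The sketch below illustrates only that arithmetic; the class name `BufferPool` and the base addresses in the usage note are hypothetical, and obtaining the real physical base of a chunk is platform-specific and not shown.

```python
class BufferPool:
    """Fixed-size buffers carved from one contiguous, pre-mapped chunk."""

    def __init__(self, virt_base, phys_base, chunk_size, buf_size):
        self.virt_base = virt_base
        self.phys_base = phys_base
        self.chunk_size = chunk_size
        # Free list of virtual addresses, one per buffer in the chunk.
        self.free = [virt_base + i * buf_size
                     for i in range(chunk_size // buf_size)]

    def alloc(self):
        """Take a buffer from the free list (raises IndexError when exhausted)."""
        return self.free.pop()

    def release(self, virt):
        """Return a buffer to the free list."""
        self.free.append(virt)

    def virt_to_phys(self, virt):
        """Translate by offset: valid only because the chunk is contiguous."""
        offset = virt - self.virt_base
        if not 0 <= offset < self.chunk_size:
            raise ValueError("address is outside the pool")
        return self.phys_base + offset
```

For instance, `BufferPool(0x7F0000000000, 0x10000000, 2 * 1024 * 1024, 2048)` yields 1024 buffers, and `virt_to_phys` costs one subtraction and one addition per buffer instead of a page-table lookup.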

E. Application Restart

The application is responsible for allocating and managing device resources and the current state of the device. If the application crashes, or is restarted without being given the chance to perform cleanup, the device may be left in an inconsistent state. One way to resolve this is to have the kernel-space UIO component keep track of the application's process state and, on restart, reset the device and any memory mappings created by the application.

F. Standardized User Interface

The current generation of user-space network drivers provides a set of low-level APIs which are often very specific to the device implementation, rather than conforming to standard system call APIs such as open()/close(), read()/write() or send()/recv(). This implies that the application needs to be ported for each specific network device it uses.

VI. CONCLUSION

While the UIO framework gives user-space applications the freedom of direct access to network devices, it brings its own share of limitations in terms of sharing across applications and of resource and memory management. The current generation of user-space network drivers works well in the constrained use case of a single application tightly coupled to a network device. Further work on such drivers, however, must address some of these limitations.

REFERENCES

[1] M. Welsh et al., “Memory Management for User-Level Network Interfaces,” IEEE Micro, pp. 77-82, Mar.-Apr. 1998.

[2] D. Stancevic, “Zero Copy I: User-Mode Perspective,” Linux Journal, p. 105, Jan. 2003.

[3] N. M. Thadani et al., “An Efficient Zero-Copy I/O Framework for UNIX,” Sun Microsystems Inc., May 1995.

[4] H. Koch, The Userspace I/O HOWTO. [Online]. Available: http://www.kernel.org/doc/htmldocs/uio-howto.html