1. Install wkhtmltopdf

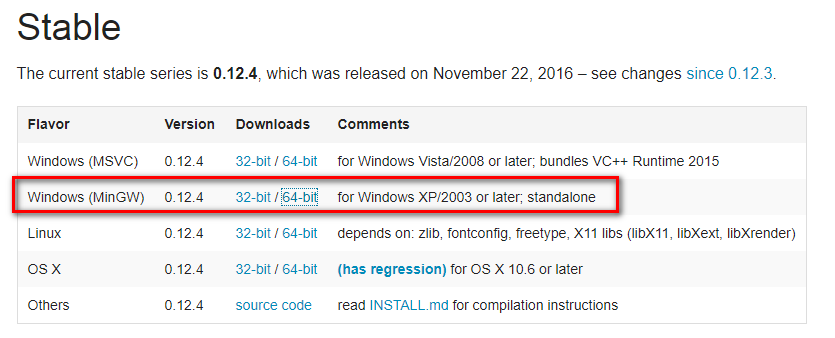

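On Windows, download the stable release of wkhtmltopdf from http://wkhtmltopdf.org/downloads.html and install it. After installation, add the directory containing the executable to the system PATH environment variable. Otherwise pdfkit cannot find wkhtmltopdf and fails with the error "No wkhtmltopdf executable found".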
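If you would rather not edit PATH, pdfkit can also be pointed at the executable explicitly through `pdfkit.configuration(wkhtmltopdf=...)`. The sketch below first looks the program up on PATH and then falls back to a list of candidate locations. The default Windows install path it checks is an assumption, so adjust it to wherever the installer actually placed the file. The helper names `find_wkhtmltopdf` and `make_config` are hypothetical.

```python
import os
import shutil

# Assumed default location used by the Windows installer (adjust for your machine).
DEFAULT_WINDOWS_PATH = r"C:\Program Files\wkhtmltopdf\bin\wkhtmltopdf.exe"


def find_wkhtmltopdf(candidates=(DEFAULT_WINDOWS_PATH,)):
    """Return the path to wkhtmltopdf, or None if it cannot be found."""
    # First search PATH, the same way a shell would resolve the command.
    found = shutil.which("wkhtmltopdf")
    if found:
        return found
    # Fall back to explicitly listed candidate locations.
    for path in candidates:
        if os.path.isfile(path):
            return path
    return None


def make_config(path):
    """Build a pdfkit configuration that points at an explicit wkhtmltopdf binary."""
    import pdfkit  # imported lazily so find_wkhtmltopdf works without pdfkit installed
    return pdfkit.configuration(wkhtmltopdf=path)
```

Usage would look like `pdfkit.from_file('test.html', 'out.pdf', configuration=make_config(find_wkhtmltopdf()))`. Check first that `find_wkhtmltopdf()` did not return `None`.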

2. Install pdfkit

Install it directly with `pip install pdfkit`.

pdfkit is a Python wrapper around wkhtmltopdf.

```python
import pdfkit

# There are three ways to generate a PDF:

# 1. From a URL
pdfkit.from_url('http://google.com', 'out.pdf')

# 2. From a local HTML file
pdfkit.from_file('test.html', 'out.pdf')

# 3. From an HTML string
pdfkit.from_string('Hello!', 'out.pdf')
```
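As a wrapper, pdfkit essentially turns an options dictionary into a wkhtmltopdf command line and runs it. The sketch below illustrates that idea with only the standard library. It is a simplified illustration and not pdfkit's actual code. The helper names are hypothetical, and it assumes `wkhtmltopdf` is on PATH.

```python
import subprocess


def build_wkhtmltopdf_command(source, output, options=None, executable="wkhtmltopdf"):
    """Build a wkhtmltopdf argument list from an options dict (simplified sketch)."""
    cmd = [executable]
    for key, value in (options or {}).items():
        cmd.append("--" + key)    # e.g. 'page-size' -> '--page-size'
        if value is not None:     # None marks a flag that takes no value
            cmd.append(str(value))
    # wkhtmltopdf expects the input (file or URL) followed by the output file
    cmd += [source, output]
    return cmd


def html_to_pdf(source, output, options=None):
    """Run wkhtmltopdf directly; raises CalledProcessError on a non-zero exit code."""
    subprocess.run(build_wkhtmltopdf_command(source, output, options), check=True)
```

For example, `html_to_pdf('test.html', 'out.pdf', {'page-size': 'Letter', 'encoding': 'UTF-8'})` corresponds roughly to `pdfkit.from_file('test.html', 'out.pdf', options={...})`.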

3. Merge PDFs with PyPDF2

Install it directly with `pip install PyPDF2`.

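```python
# PyPDF2 3.x removed the old PdfFileMerger name; use PdfMerger instead
from PyPDF2 import PdfMerger

merger = PdfMerger()
# Append the input PDFs in the order they should appear (file paths are accepted)
merger.append("1.pdf")
merger.append("2.pdf")
# Write everything to the output PDF document, then release the input files
merger.write("hql_all.pdf")
merger.close()
```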
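The merged document follows the order of the `append` calls. If you collect numbered files such as `chapter2.pdf` and `chapter10.pdf` from a directory, a plain `sorted()` puts `10` before `2`. The sketch below sorts names by their numeric parts before merging. `natural_key` and `merge_pdfs` are hypothetical helpers, and the merge step assumes a PyPDF2 release that provides `PdfMerger`.

```python
import re


def natural_key(name):
    """Split a file name into text and integer chunks so '10.pdf' sorts after '2.pdf'."""
    # re.split with a capturing group alternates text, digits, text, ...
    # so strings are only ever compared with strings and ints with ints.
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r"(\d+)", name)]


def merge_pdfs(paths, output):
    """Merge PDFs in natural file-name order into a single output file."""
    from PyPDF2 import PdfMerger  # imported lazily; requires PyPDF2 to be installed
    merger = PdfMerger()
    for path in sorted(paths, key=natural_key):
        merger.append(path)
    merger.write(output)
    merger.close()
```

For example, `merge_pdfs(glob.glob("liaoxuefeng_Python3_tutorial*.pdf"), "all.pdf")` would merge the per-chapter files in chapter order.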

4. Complete example:

```python
import os
import re
import time
import logging

import pdfkit
import requests
from bs4 import BeautifulSoup
from PyPDF2 import PdfMerger  # named PdfFileMerger in older PyPDF2 releases

BASE_URL = "http://www.liaoxuefeng.com"

html_template = """
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
</head>
<body>
{content}
</body>
</html>
"""


def parse_url_to_html(url, name):
    """
    Parse a URL and save its article content as an HTML file.
    :param url: the URL to parse
    :param name: file name for the saved HTML
    :return: the file name, or None if parsing failed
    """
    try:
        response = requests.get(url)
        soup = BeautifulSoup(response.content, 'html.parser')
        # Article body (CSS class used by liaoxuefeng.com's page layout)
        body = soup.find_all(class_="x-wiki-content")[0]
        # Title
        title = soup.find('h4').get_text()

        # Put the title at the very top of the body, centered
        center_tag = soup.new_tag("center")
        title_tag = soup.new_tag('h1')
        title_tag.string = title
        center_tag.append(title_tag)
        body.insert(0, center_tag)
        html = str(body)

        # Turn relative src paths of <img> tags in the body into absolute URLs
        pattern = r'(<img .*?src=")(.*?)(")'

        def func(m):
            # group(2) is the src value itself; leave absolute URLs unchanged
            if not m.group(2).startswith("http"):
                return m.group(1) + BASE_URL + m.group(2) + m.group(3)
            return m.group(0)

        html = re.compile(pattern).sub(func, html)
        html = html_template.format(content=html)
        with open(name, 'wb') as f:
            f.write(html.encode("utf-8"))
        return name

    except Exception:
        logging.error("Parse error: %s", url, exc_info=True)
        return None


def get_url_list():
    """
    Collect the URLs of all chapters from the table of contents.
    :return: list of chapter URLs
    """
    response = requests.get("http://www.liaoxuefeng.com/wiki/0014316089557264a6b348958f449949df42a6d3a2e542c000")
    soup = BeautifulSoup(response.content, "html.parser")
    menu_tag = soup.find_all(class_="uk-nav uk-nav-side")[1]
    urls = []
    for li in menu_tag.find_all("li"):
        url = BASE_URL + li.a.get('href')
        urls.append(url)
    return urls


def save_pdf(htmls, file_name):
    """
    Convert HTML file(s) to a PDF file.
    :param htmls: an HTML file name or a list of HTML file names
    :param file_name: PDF file name
    """
    options = {
        'page-size': 'Letter',
        'margin-top': '0.75in',
        'margin-right': '0.75in',
        'margin-bottom': '0.75in',
        'margin-left': '0.75in',
        'encoding': "UTF-8",
        'custom-header': [
            ('Accept-Encoding', 'gzip')
        ],
        # Placeholder cookies; replace them or remove this entry
        'cookie': [
            ('cookie-name1', 'cookie-value1'),
            ('cookie-name2', 'cookie-value2'),
        ],
        'outline-depth': 10,
    }
    pdfkit.from_file(htmls, file_name, options=options)


def main():
    start = time.time()
    file_name = "liaoxuefeng_Python3_tutorial"
    urls = get_url_list()
    htmls = []
    pdfs = []
    # Convert every chapter (instead of a hard-coded count) to its own PDF
    for index, url in enumerate(urls):
        html = parse_url_to_html(url, str(index) + ".html")
        if html is None:
            continue  # skip chapters that failed to parse
        pdf = file_name + str(index) + ".pdf"
        save_pdf(html, pdf)
        htmls.append(html)
        pdfs.append(pdf)
        print("Converted HTML #" + str(index))

    merger = PdfMerger()
    for pdf in pdfs:
        merger.append(pdf)
        print("Merged PDF " + pdf)
    merger.write("廖雪峰Python_all.pdf")
    merger.close()
    print("PDF written successfully!")

    # Remove the temporary per-chapter HTML and PDF files
    for path in htmls + pdfs:
        os.remove(path)
        print("Removed temporary file " + path)

    total_time = time.time() - start
    print("Total time: %f seconds" % total_time)


if __name__ == '__main__':
    main()
```
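The `<img>` rewrite in `parse_url_to_html` prepends the site root by string concatenation. That only works for root-relative paths such as `/files/a.png`. The sketch below swaps in `urllib.parse.urljoin`, which resolves any relative `src` against the page URL and leaves absolute URLs untouched. The function name `absolutize_img_src` is hypothetical, and like the original it assumes double-quoted `src` attributes.

```python
import re
from urllib.parse import urljoin

# Match <img ... src="..."> with double quotes; \s before src skips attributes like data-src
IMG_SRC = re.compile(r'(<img\b[^>]*?\ssrc=")([^"]*)(")')


def absolutize_img_src(html, page_url):
    """Resolve every <img src="..."> in html against page_url."""
    def repl(m):
        # urljoin keeps absolute URLs as-is and resolves relative ones
        return m.group(1) + urljoin(page_url, m.group(2)) + m.group(3)
    return IMG_SRC.sub(repl, html)
```

Inside `parse_url_to_html`, this would replace the `pattern`/`func` pair with `html = absolutize_img_src(html, url)`.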