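Jsoup is an HTML parser for Java that can parse a page directly from a URL or from a string of HTML text. It offers a very convenient API for extracting and manipulating data through DOM traversal, CSS selectors, and jQuery-like methods.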

For the basics, see the Chinese-language documentation: http://www.open-open.com/jsoup/

Here is a concrete example.

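Suppose we want to scrape http://datacenter.mep.gov.cn/report/air_daily/air_dairy.jsp for the daily air-quality data (AQI, quality level, primary pollutant) of every city over a given period and save it as a CSV file.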

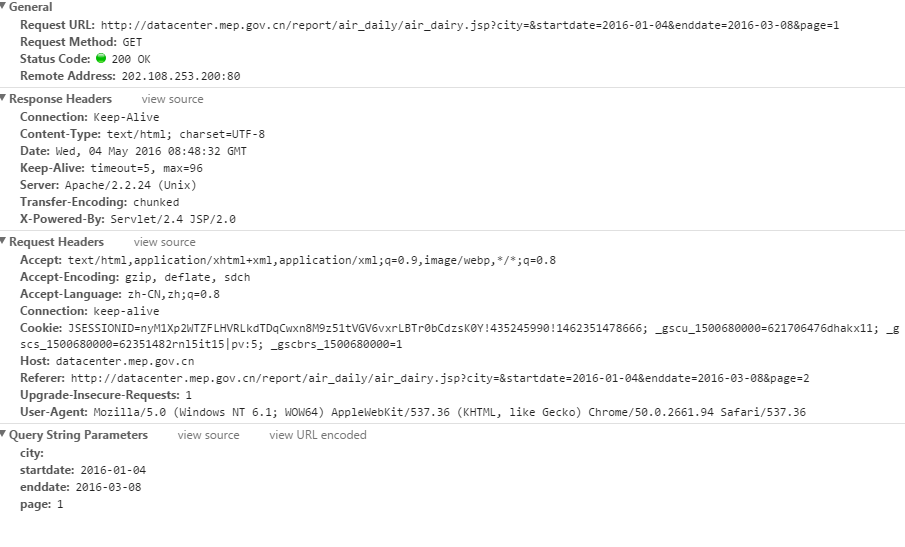

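First load the page once in Chrome and inspect the request it sends (for example in the Network panel of DevTools).

This gives the request method, the URL, and the parameter list.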
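To make "request method, URL, parameter list" concrete: for a GET request, the parameter names and values end up URL-encoded in the query string. The sketch below builds that string with the JDK only, using the parameter names and values that appear later in Test.java. `QueryString.build` is a hypothetical helper, not part of Jsoup; it encodes as UTF-8 (the `Charset` overload of `URLEncoder.encode` needs Java 10+), and whether this server expects UTF-8 or GBK for Chinese city names is an assumption.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class QueryString {
    // Hypothetical helper: joins parallel name/value arrays (the same pairing War uses)
    // into "name1=value1&name2=value2", URL-encoding every part as UTF-8
    static String build(String[] names, String[] values) {
        if (names.length != values.length) {
            throw new IllegalArgumentException("names and values must have the same length");
        }
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < names.length; i++) {
            if (i > 0) {
                sb.append('&');
            }
            sb.append(URLEncoder.encode(names[i], StandardCharsets.UTF_8))
              .append('=')
              .append(URLEncoder.encode(values[i], StandardCharsets.UTF_8));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String url = "http://datacenter.mep.gov.cn/report/air_daily/air_dairy.jsp";
        String[] params = { "city", "startdate", "enddate", "page" };
        String[] values = { "", "2016-05-01", "2016-05-01", "2" };
        // prints .../air_dairy.jsp?city=&startdate=2016-05-01&enddate=2016-05-01&page=2
        System.out.println(url + "?" + build(params, values));
    }
}
```

Comparing this string with the request URL shown in DevTools is a quick way to check that the parameter list was copied correctly.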

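Then write the code following the Jsoup documentation.

The project is split into four packages, `bean`, `io`, `service`, and `test`, containing the following classes: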

CityWeather.java:

```java
package bean;

/** One row of the daily air-quality report. */
public class CityWeather
{
    // Number of table cells that make up one record on the report page
    public final static int ITEM_NUMBER = 6;
    private String id;   // row number
    private String city; // city name
    private String date; // report date
    private String aqi;  // air quality index (AQI)
    private String aql;  // air quality level
    private String pp;   // primary pollutant

    public CityWeather(){}

    public CityWeather(String id, String city, String date,
                       String aqi, String aql, String pp)
    {
        this.id = id;
        this.city = city;
        this.date = date;
        this.aqi = aqi;
        this.aql = aql;
        this.pp = pp;
    }

    public String getId()
    {
        return id;
    }

    public void setId(String id)
    {
        this.id = id;
    }

    public String getCity()
    {
        return city;
    }

    public void setCity(String city)
    {
        this.city = city;
    }

    public String getDate()
    {
        return date;
    }

    public void setDate(String date)
    {
        this.date = date;
    }

    public String getAqi()
    {
        return aqi;
    }

    public void setAqi(String aqi)
    {
        this.aqi = aqi;
    }

    public String getAql()
    {
        return aql;
    }

    public void setAql(String aql)
    {
        this.aql = aql;
    }

    public String getPp()
    {
        return pp;
    }

    public void setPp(String pp)
    {
        this.pp = pp;
    }

    /** One CSV record, ending in a line break so every record lands on its own line. */
    @Override
    public String toString()
    {
        return id + "," + city + "," + date + "," + aqi +
               "," + aql + "," + pp + "\n";
    }
}
```

War.java:

```java
package bean;

/** Describes one request: which page to fetch, with which parameters, and what to extract. */
public class War
{
    // Request-method constants
    public final static int GET = 0;
    public final static int POST = 1;
    // URL of the page to fetch
    private String url;
    // Parameter names
    private String[] params;
    // Parameter values, matched to params by index (both arrays must have the same length)
    private String[] values;
    // Request method: War.GET or War.POST
    private int requestMethod;
    // CSS selector for the elements to extract
    private String selector;

    public War(String url, String[] params, String[] values,
               int requestMethod, String selector)
    {
        this.url = url;
        this.params = params;
        this.values = values;
        this.requestMethod = requestMethod;
        this.selector = selector;
    }

    public String getUrl()
    {
        return url;
    }

    public void setUrl(String url)
    {
        this.url = url;
    }

    public String[] getParams()
    {
        return params;
    }

    public void setParams(String[] params)
    {
        this.params = params;
    }

    public String[] getValues()
    {
        return values;
    }

    public void setValues(String[] values)
    {
        this.values = values;
    }

    public int getRequestMethod()
    {
        return requestMethod;
    }

    public void setRequestMethod(int requestMethod)
    {
        this.requestMethod = requestMethod;
    }

    public String getSelector()
    {
        return selector;
    }

    public void setSelector(String selector)
    {
        this.selector = selector;
    }
}
```

Op.java:

```java
package io;

import bean.CityWeather;
import java.io.BufferedWriter;
import java.io.FileWriter;
import java.io.IOException;
import java.util.List;

public class Op
{
    /** Appends the string form of every record in list to file. */
    public static void List2File(List<CityWeather> list, String file) throws IOException
    {
        // true = append mode, so several pages can be collected in the same file.
        // try-with-resources closes the BufferedWriter (and the FileWriter it wraps)
        // even if a write fails.
        try (BufferedWriter buffWriter = new BufferedWriter(new FileWriter(file, true)))
        {
            for (CityWeather cw : list)
            {
                buffWriter.write(cw.toString());
            }
        }
    }
}
```
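Op writes each record's `toString()` as one CSV line, with the fields joined by commas and never quoted; that is why Crawler swaps commas in the pollutant column for `|`. An alternative is standard CSV quoting as described in RFC 4180: wrap a field in double quotes when it contains a comma, a quote, or a line break, and double any embedded quotes. `Csv.field` and `Csv.line` are hypothetical helpers sketching that rule; they are not part of the project.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class Csv {
    // Quote a field only when it contains a comma, double quote, CR or LF;
    // embedded double quotes are escaped by doubling them (RFC 4180)
    static String field(String s) {
        if (s == null) {
            return "";
        }
        boolean needsQuotes = s.indexOf(',') >= 0 || s.indexOf('"') >= 0
                || s.indexOf('\n') >= 0 || s.indexOf('\r') >= 0;
        if (!needsQuotes) {
            return s;
        }
        return "\"" + s.replace("\"", "\"\"") + "\"";
    }

    // Join already-extracted fields into one CSV record (no trailing line break)
    static String line(List<String> fields) {
        return fields.stream().map(Csv::field).collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // Made-up sample row; prints 1,SomeCity,2016-05-01,"PM2.5,PM10"
        System.out.println(line(Arrays.asList("1", "SomeCity", "2016-05-01", "PM2.5,PM10")));
    }
}
```

Quoting keeps the original pollutant text intact, and spreadsheet programs that follow RFC 4180 should read the quoted value back as a single column.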

Crawler.java:

```java
package service;

import bean.CityWeather;
import bean.War;
import org.jsoup.Connection;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import java.io.IOException;
import java.util.ArrayList;

public class Crawler
{
    /**
     * Fetches the page described by war and turns the selected table cells into
     * CityWeather records: every ITEM_NUMBER consecutive cells form one record.
     */
    public static ArrayList<CityWeather> getResults(War war) throws IOException
    {
        Connection conn = Jsoup.connect(war.getUrl()).timeout(10000); // 10 s timeout
        // Attach the request parameters; names and values are matched by index
        if (war.getParams() != null)
        {
            for (int i = 0; i < war.getParams().length; i++)
            {
                conn.data(war.getParams()[i], war.getValues()[i]);
            }
        }
        // get() and post() throw an IOException on failure rather than returning null
        Document doc = (war.getRequestMethod() == War.GET) ? conn.get() : conn.post();

        Elements results = doc.select(war.getSelector());
        ArrayList<CityWeather> list = new ArrayList<CityWeather>();
        int i = 0;
        CityWeather cw = null;
        for (Element e : results)
        {
            switch (i % CityWeather.ITEM_NUMBER)
            {
                case 0: // first cell of a new row: row number
                    cw = new CityWeather();
                    cw.setId(e.text());
                    break;
                case 1:
                    cw.setCity(e.text());
                    break;
                case 2:
                    cw.setDate(e.text());
                    break;
                case 3:
                    cw.setAqi(e.text());
                    break;
                case 4:
                    cw.setAql(e.text());
                    break;
                default: // last cell: primary pollutant; commas replaced so the CSV columns stay aligned
                    cw.setPp(e.text().replace(',', '|'));
                    list.add(cw);
            }
            i++;
        }
        return list;
    }
}
```
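The switch in `getResults` relies on the selected `td` cells arriving row by row, `ITEM_NUMBER` (6) per record, and a record is only added once its last cell has been read. The same grouping can be written as a small helper that splits any flat list into fixed-size rows and drops an incomplete trailing row. `Rows.chunk` is hypothetical and works on plain strings instead of Jsoup `Element`s so that it runs without the library; in the crawler the input would be the `e.text()` values.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class Rows {
    // Split a flat list of cells into rows of `width` cells each;
    // a trailing incomplete row is dropped, as in getResults
    static List<List<String>> chunk(List<String> cells, int width) {
        if (width <= 0) {
            throw new IllegalArgumentException("width must be positive");
        }
        List<List<String>> rows = new ArrayList<>();
        for (int start = 0; start + width <= cells.size(); start += width) {
            rows.add(new ArrayList<>(cells.subList(start, start + width)));
        }
        return rows;
    }

    public static void main(String[] args) {
        List<String> cells = Arrays.asList("a1", "a2", "a3", "b1", "b2", "b3", "c1");
        System.out.println(chunk(cells, 3)); // [[a1, a2, a3], [b1, b2, b3]]
    }
}
```

Each inner list could then be mapped onto the six-argument `CityWeather` constructor, which avoids tracking a running counter.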

Test.java:

```java
package test;

import bean.CityWeather;
import bean.War;
import io.Op;
import service.Crawler;
import java.text.DateFormat;
import java.util.ArrayList;
import java.util.Date;

public class Test
{
    public static void main(String[] args) throws Exception
    {
        System.out.println(DateFormat.getDateTimeInstance().format(new Date()) + " started");
        String url = "http://datacenter.mep.gov.cn/report/air_daily/air_dairy.jsp";
        // Parameters as seen in Chrome; city is left empty (no city filter)
        String[] params = { "city", "startdate", "enddate", "page" };
        String[] values = { "", "2016-05-01", "2016-05-01", "2" };
        int requestMethod = War.GET;
        // Table cells with class report1_6 hold the report data
        String selector = "td.report1_6";
        War war = new War(url, params, values, requestMethod, selector);
        ArrayList<CityWeather> list = Crawler.getResults(war);
        // Appends to data/file.csv; the data directory must already exist
        Op.List2File(list, "data/file.csv");
        System.out.println(DateFormat.getDateTimeInstance().format(new Date()) + " finished");
    }
}
```
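Test.java fetches a single day (`startdate` = `enddate` = 2016-05-01) and a single page. To cover a longer period, one option is to loop over the days and build the `values` array for each one. The sketch below only generates the dates with `java.time`; querying one day at a time is an assumption (the form also accepts a `startdate`/`enddate` range), and stepping through the `page` parameter for each day is left out.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;

class DateRange {
    // Every day from start to end (inclusive) as yyyy-MM-dd;
    // LocalDate.toString() produces exactly this ISO-8601 form, e.g. "2016-05-01"
    static List<String> days(LocalDate start, LocalDate end) {
        List<String> out = new ArrayList<>();
        for (LocalDate d = start; !d.isAfter(end); d = d.plusDays(1)) {
            out.add(d.toString());
        }
        return out;
    }

    public static void main(String[] args) {
        for (String day : days(LocalDate.of(2016, 5, 1), LocalDate.of(2016, 5, 3))) {
            // Hypothetical: one request per day with startdate = enddate = day;
            // the page parameter would still need to be stepped for each day
            String[] values = { "", day, day, "1" };
            System.out.println(String.join(",", values));
        }
    }
}
```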

In testing, crawling the data for 2014 through 2016 was very slow: it ran for roughly a whole day.

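Requests also fail now and then, so `ArrayList<CityWeather> list = Crawler.getResults(war);` has to be called in a loop until `list.size()` is nonzero, which signals success, before moving on to the next page.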

There is no exception handling yet either...
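The "call again until the list is non-empty" workaround and basic exception handling can be folded into one helper. This is a JDK-only sketch assuming a fixed number of attempts and a fixed pause between them; `Retry.untilNonEmpty` is hypothetical, not part of the project.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.Callable;

class Retry {
    // Call task up to maxAttempts times and return the first non-empty result.
    // An exception (e.g. an IOException from a timeout) or an empty/null result
    // counts as a failed attempt; after the last failure an empty list is returned.
    static <T> List<T> untilNonEmpty(Callable<List<T>> task, int maxAttempts, long pauseMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                List<T> result = task.call();
                if (result != null && !result.isEmpty()) {
                    return result;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt flag and give up
                break;
            } catch (Exception e) {
                System.err.println("attempt " + attempt + " failed: " + e);
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(pauseMillis); // pause before the next try
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break;
                }
            }
        }
        return Collections.emptyList();
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated page: empty on the first two calls, one row on the third
        List<String> rows = untilNonEmpty(() -> {
            calls[0]++;
            return calls[0] < 3 ? new ArrayList<String>() : Collections.singletonList("row");
        }, 5, 100);
        System.out.println(rows + " after " + calls[0] + " calls"); // [row] after 3 calls
    }
}
```

In the crawler this might look like `List<CityWeather> list = Retry.untilNonEmpty(() -> Crawler.getResults(war), 5, 2000);`, where 5 attempts and 2000 ms are arbitrary choices. Capping the attempts means a page that is genuinely empty cannot stall the whole run.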

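I'm wondering whether several threads could each fetch a page at the same time, with the results merged at the end. That should be much faster.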
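That idea can be sketched with a fixed-size thread pool: submit one task per page, then collect the results in submission order so the merged list keeps the page order regardless of which page finishes first. The page-fetching function is passed in; in this project it would call `Crawler.getResults` with a different `page` value. `ParallelPages.fetchAll` is hypothetical, the fetch function must handle its own `IOException`s (anything that escapes surfaces as a `CompletionException` from `join()`), and the pool size is up to the caller.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.IntFunction;

class ParallelPages {
    // Fetch pages 1..pageCount on a fixed pool of `threads` workers and merge the results
    static <T> List<T> fetchAll(int pageCount, int threads, IntFunction<List<T>> fetchPage) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<CompletableFuture<List<T>>> futures = new ArrayList<>();
            for (int page = 1; page <= pageCount; page++) {
                final int p = page;
                futures.add(CompletableFuture.supplyAsync(() -> fetchPage.apply(p), pool));
            }
            // join() waits for each page in submission order, so the merged list
            // keeps page order even though pages may finish in any order
            List<T> merged = new ArrayList<>();
            for (CompletableFuture<List<T>> f : futures) {
                merged.addAll(f.join());
            }
            return merged;
        } finally {
            pool.shutdown(); // accept no new tasks; workers exit once idle
        }
    }

    public static void main(String[] args) {
        // Stand-in for Crawler.getResults with page = p
        List<String> all = fetchAll(5, 2, p -> Arrays.asList("row from page " + p));
        System.out.println(all);
    }
}
```

The pool size is what bounds how many requests hit the server at once.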

I'll implement it when I have time.

I tried it today: with 1000 threads, the site temporarily stopped working properly...

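So the number of concurrent requests needs to be kept in check... Multithreading, distributed crawling, and the like clearly deserve some time for careful study.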
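One way to keep it in check, sketched with JDK classes only: a small fixed thread pool plus a shared throttle that spaces out request start times, so the site sees a steady trickle instead of a burst. `Throttle` is a hypothetical helper; the 200 ms interval and 4 workers are arbitrary assumptions, not values tested against this site.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical helper: allows at most one request to start per interval,
// however many threads call acquire()
class Throttle {
    private final long intervalNanos;
    private long nextAllowed = System.nanoTime(); // earliest start time of the next request

    Throttle(long intervalMillis) {
        this.intervalNanos = TimeUnit.MILLISECONDS.toNanos(intervalMillis);
    }

    // Reserve the next free start slot under the lock, then sleep outside it
    void acquire() {
        long wait;
        synchronized (this) {
            long now = System.nanoTime();
            long slot = Math.max(now, nextAllowed);
            nextAllowed = slot + intervalNanos;
            wait = slot - now;
        }
        if (wait > 0) {
            try {
                TimeUnit.NANOSECONDS.sleep(wait);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // let the caller see the interrupt
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Throttle throttle = new Throttle(200);                  // assumed: ~5 request starts per second
        ExecutorService pool = Executors.newFixedThreadPool(4); // a few workers, not 1000
        long t0 = System.nanoTime();
        for (int page = 1; page <= 6; page++) {
            final int p = page;
            pool.submit(() -> {
                throttle.acquire();
                // Hypothetical: fetch page p here (e.g. Crawler.getResults with page = p)
                System.out.printf("page %d started at %d ms%n", p, (System.nanoTime() - t0) / 1_000_000);
            });
        }
        pool.shutdown();
        pool.awaitTermination(1, TimeUnit.MINUTES);
    }
}
```

With this design the thread count limits how many requests are in flight, while the interval limits how fast new ones start; both knobs are needed because a slow server would otherwise let a large pool pile up requests.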

Code download: https://github.com/SplayHuo/JavaProject