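I Resource objects: introduction to the k8s API

1 Viewing the API endpoints

1 Create an administrator account, authorize it, and obtain its token. The steps are described in "2 CoreDNS deployment and etcd data backup and restore".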

kubectl get secret -n kubernetes-dashboard

kubectl describe secret admin-user-token-ckw5q -n kubernetes-dashboard # view the contents of the admin secret

2 Then use the token to query the API endpoints, as in the following command:

curl --cacert /etc/kubernetes/ssl/ca.pem -H "Authorization: Bearer eyJhbGciOiJSUzI1NiIsImtpZCI6IlZpRzhrSjFJMmlHdnlmcnRPb1dEMGExaEZGZXdIdmFzbXFGMTQ5VV9tTXMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTZtcjhwIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJlNTA5NmVmZi1hNzkxLTRhOGUtOTFkYi1lNmY1N2QxZTQ3NGEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.GC4aGvry48UkN3u6o3IMtNE5yf6p3BDjD6u97ws75nNP48nwzm2U03XQLwNeWVt3nyj7fWoEyQhTZ-omHkq1_ZHQiwr1zZRPolJ1H7f_RKChIJiuX7WuCrwlfzlNX3AcVo54EmwfIEvQdK91nzVuO0f_u9RYLXTMOJJYN4D0UMzkEcxNB1Ke-Hp5sF-uknV8zHVhbK2rMkVtlE5utPHjz34qNBtOT3SUSzW0D9DY4ZRBcd_0WpW3DSaVjcywS0F0XZ5IV-oepDjn6f9IGnIY37EdbJM3NvsYJ07lEpHJ-YrLnmXVLG7NCCaTi64C36lm0HniyU5k62Y2E-38TgJ-Qg" https://172.31.7.100:6443
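The administrator account from step 1 is described in another document and is not reproduced here. As a rough sketch only, an account of that kind is often declared as below. The names admin-user and kubernetes-dashboard are taken from the commands above; binding to the built-in cluster-admin ClusterRole is an assumption about how the account was authorized.

```yaml
# Sketch under stated assumptions, not necessarily the setup used in this article.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin        # assumption: full cluster administrator rights
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
```

A token with this much privilege should be treated like a root password and not pasted into shared documents.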

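II Controllers: Kubernetes Pod, Job, and CronJob, with examples

2.1 Pod

1 A Pod is the smallest deployable unit in k8s.

2 A Pod can run a single container or several containers.

3 When a Pod runs several containers, they are scheduled together.

4 A Pod's lifecycle is short, and a Pod does not heal itself.

5 Pods are normally created and managed through a controller.

The Pod YAML file is as follows: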

kubectl apply -f pod.yaml

apiVersion: v1
kind: Pod # the kind is Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.20.2-alpine
    ports:
    - containerPort: 80
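Point 2 above says a Pod may run several containers. A minimal sketch of that case follows: two containers in one Pod share an emptyDir volume, so the page written by one is served by the other. The Pod name, the writer container, and the busybox image tag are illustrative assumptions and do not come from the original notes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-with-sidecar          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.20.2-alpine
    ports:
    - containerPort: 80
    volumeMounts:
    - name: shared
      mountPath: /usr/share/nginx/html
  - name: writer                    # hypothetical helper container
    image: busybox:1.36             # assumed image tag
    command: ["/bin/sh", "-c", "while true; do date > /data/index.html; sleep 5; done"]
    volumeMounts:
    - name: shared
      mountPath: /data
  volumes:
  - name: shared
    emptyDir: {}                    # lives as long as the Pod, shared by both containers
```

Both containers land on the same node and share the Pod's network namespace, which is why they are scheduled as one unit.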

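2.2 Job and CronJob

A Job runs a task once to completion; it suits one-off work such as initializing an environment.

A CronJob runs a task repeatedly on a schedule.

2.2.1 The Job YAML file is as follows: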

kubectl apply -f job.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: job-mysql-init
  namespace: linux66
spec:
  template:
    spec:
      containers:
      - name: job-mysql-init-container
        image: centos:7.9.2009
        command: ["/bin/sh"]
        args: ["-c", "echo data init job at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        hostPath:
          path: /tmp/jobdata
      restartPolicy: Never

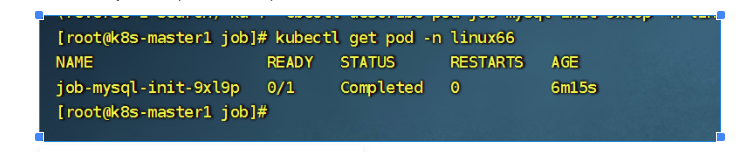

运行完毕的job任务的pod状态是complate,是无法exec登录进去的

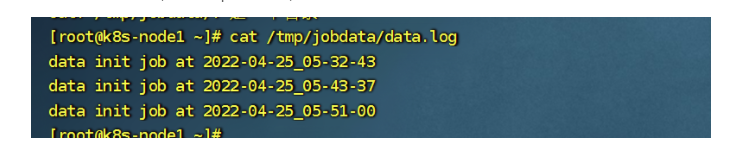

然后我们去node节点,查看/tmp/data目录,是否有日志生成

2.2.2 cronjob

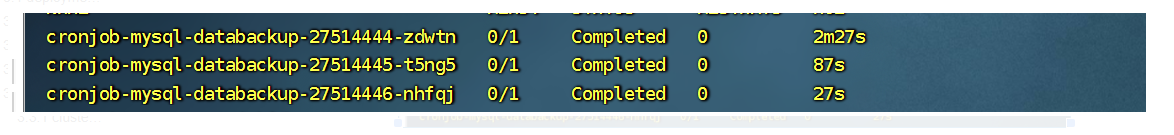

cronjob 最多保留最近三次的执行记录,

cronjob实例:

apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob-mysql-databackup
spec:
  #schedule: "30 2 * * *"
  schedule: "* * * * *" # run once every minute
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: cronjob-mysql-databackup-pod
            image: centos:7.9.2009
            #imagePullPolicy: IfNotPresent
            command: ["/bin/sh"]
            args: ["-c", "echo mysql databackup cronjob at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]
            volumeMounts:
            - mountPath: /cache
              name: cache-volume
          volumes:
          - name: cache-volume
            hostPath:
              path: /tmp/cronjobdata
          restartPolicy: OnFailure
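How many finished runs a CronJob keeps is controlled by two fields in its spec. The sketch below sets them explicitly to their default values (3 successful, 1 failed) and also forbids overlapping runs. The resource name and the trivial command are illustrative assumptions.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob-history-demo        # hypothetical name
spec:
  schedule: "30 2 * * *"            # every day at 02:30
  concurrencyPolicy: Forbid         # skip a run if the previous one is still active
  successfulJobsHistoryLimit: 3     # keep the last 3 successful Jobs (the default)
  failedJobsHistoryLimit: 1         # keep the last failed Job (the default)
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: demo
            image: centos:7.9.2009
            command: ["/bin/sh", "-c", "date"]
          restartPolicy: OnFailure
```

Raising the limits helps when debugging a job that fails intermittently, at the cost of more finished pods left in the namespace.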

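III Controllers: Kubernetes Deployment, StatefulSet, DaemonSet, and Service, with examples

3.1 Deployment: the replica controller

Before Deployment there were two controllers, ReplicationController and ReplicaSet, the first- and second-generation controllers. Deployment is the third generation: on top of everything a ReplicaSet does, it adds higher-level features such as rolling updates and rollbacks.

Deployment example: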

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    #app: ng-deploy-80 #rc
    matchLabels: #rs or deployment
      app: ng-deploy-80
    #matchExpressions:
    #  - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.16.0
        ports:
        - containerPort: 80

The controller counts pods by their labels to decide whether the desired number of replicas has been reached.
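Section 3.1 mentions rolling updates and rollbacks. As a hedged sketch, the same Deployment can state its update behaviour explicitly. The maxSurge and maxUnavailable values are illustrative choices, not settings taken from the original notes.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  revisionHistoryLimit: 10          # old ReplicaSets kept for rollback (10 is the default)
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                   # illustrative: at most 1 extra pod during an update
      maxUnavailable: 0             # illustrative: never drop below the desired count
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.16.0
        ports:
        - containerPort: 80
```

Changing the image tag and re-applying the file triggers a rolling update; `kubectl rollout undo deployment/nginx-deployment` returns to the previous revision.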

3.2 StatefullSet

为了解决有状态服务的集群部署,集群之间数据同步问题(mysql主从等)

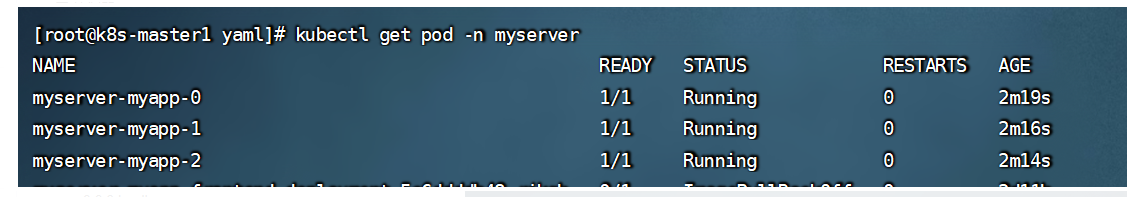

statefullset 所管理的pod拥有唯一且固定的名称

stafefullset 是按照顺序对pod进行启动,伸缩和停止的

headless services (无头服务,请求直接解析到Pod ip)

下面以nginx为例

[root@k8s-master1 yaml]# cat 1-Statefulset.yaml

---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: myserver-myapp
  namespace: myserver
spec:
  replicas: 3
  serviceName: "myserver-myapp-service"
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      containers:
      - name: myserver-myapp-frontend
        image: nginx:1.20.2-alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-service
  namespace: myserver
spec:
  clusterIP: None
  ports:
  - name: http
    port: 80
  selector:
    app: myserver-myapp-frontend

运行之后,效果如下所示:

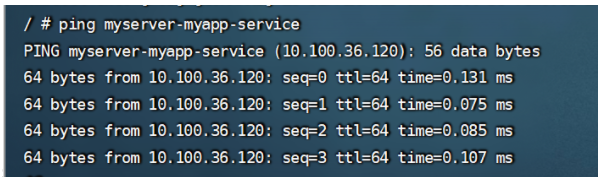

查看colusterip 发现没有Ip,然后进去一个Pod里,去直接ping 这个service地址,返回的地址是其中一个pod的地址

3.3 DaemonSet

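A DaemonSet runs one copy of the same pod on every node in the cluster; when a new node joins, the same pod is started on it as well.

Example 1: run one nginx pod on every node

hostNetwork: true # the pod uses the host's network, so it is reachable directly at each node's address with no extra forwarding hop, which avoids overhead

hostPID: true # share the host's PID namespace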

[root@k8s-master1 daemonset]# cat 1-DaemonSet-webserverp.yaml

---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: myserver-myapp
  namespace: myserver
spec:
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      hostNetwork: true
      hostPID: true
      containers:
      - name: myserver-myapp-frontend
        image: nginx:1.20.2-alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend
  namespace: myserver
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30018
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-frontend

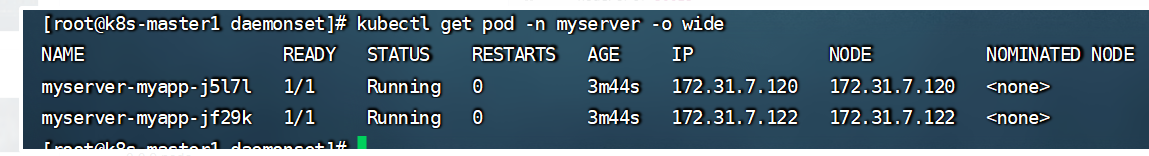

Result: each pod's IP is the same as its node's IP, because hostNetwork: true is set.

Example 2: run a log-collection agent on every node

[root@k8s-master1 daemonset]# cat 2-DaemonSet-fluentd.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: fluentd-elasticsearch
        image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers

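3.4 Service: introduction and types

3.4.1 The three kube-proxy proxy modes

Because a pod gets a new IP when it is recreated, pods cannot reliably talk to each other by IP. A Service decouples clients from the application: it uses label selectors to match its backend pods dynamically.

kube-proxy watches the kube-apiserver; whenever a Service resource changes, kube-proxy updates the corresponding load-balancing rules, so the rules always reflect the latest state of the Service.

kube-proxy has three proxy modes:

userspace: used before k8s 1.1

iptables: the default mode since k8s 1.2

ipvs: generally available since k8s 1.11; if IPVS is not enabled on the node, kube-proxy falls back to iptables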
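The proxy mode is chosen in the kube-proxy configuration. A minimal sketch of such a configuration fragment follows; how the file reaches kube-proxy (a ConfigMap, a --config flag, or an installer variable) depends on how the cluster was deployed and is not covered by the original notes. The scheduler value is an illustrative choice.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"            # "iptables" selects the iptables mode instead
ipvs:
  scheduler: "rr"       # illustrative: round-robin across backend pods
```

On a node running in IPVS mode, `ipvsadm -Ln` lists one virtual server per Service port together with its backend pod addresses.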

3.4.2 Service type ClusterIP: for in-cluster services, accessed by Service name

Example:

1 Create the Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-cluster # name of the Deployment
spec:
  replicas: 1
  selector:
    #matchLabels: #rs or deployment
    #  app: ng-deploy3-80
    matchExpressions:
    - {key: app, operator: In, values: [ng-clusterip]} # pod label matched by the Deployment
  template:
    metadata:
      labels:
        app: ng-clusterip # pod label
    spec:
      containers:
      - name: ng-clusterip # container name
        image: nginx:1.17.5
        ports:
        - containerPort: 80

2 Then create a ClusterIP Service that selects the pod created above

apiVersion: v1
kind: Service
metadata:
  name: ng-clusterip # name of the Service
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
  type: ClusterIP
  selector:
    app: ng-clusterip # must match the pod's label for the Service to select the pod

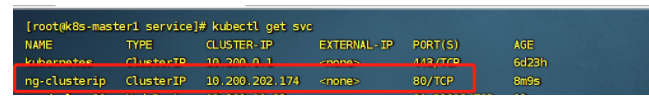

查看svc

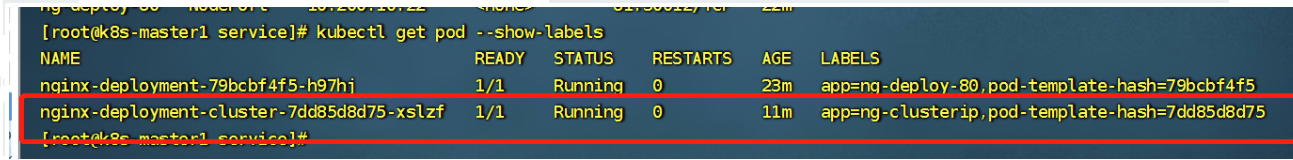

查看Pod的标签

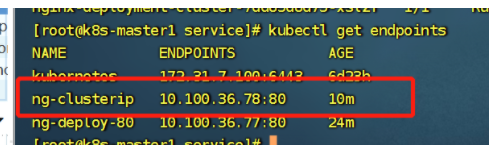

查看svc后端绑定的pod

3.4.3 nodeport: 用于k8s集群以外的服务,访问k8s内部的服务

1 创建一个deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    #matchLabels: #rs or deployment
    #  app: ng-deploy3-80
    matchExpressions:
    - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80 # pod label
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.17.5
        ports:
        - containerPort: 80

2 Create a NodePort Service

apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30012
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80

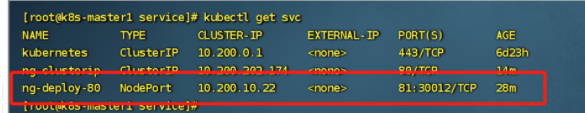

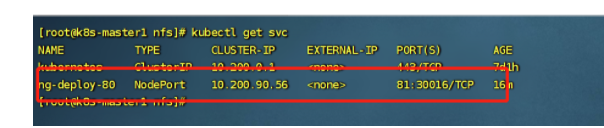

查看svc

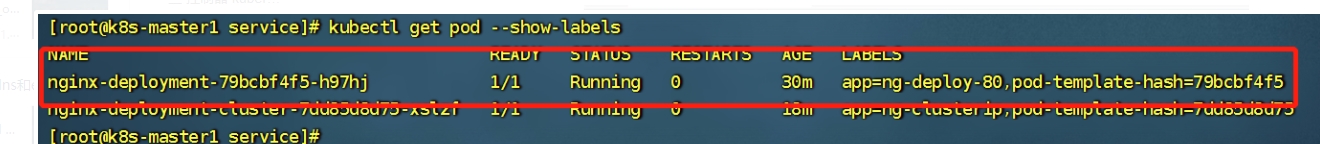

查看Pod标签

3.4.4 loadbalancer :用于公有云环境的服务暴露
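The notes give no manifest for this type. As a sketch only, a LoadBalancer Service for the ng-deploy-80 pods from 3.4.3 could look like the following. The Service name is hypothetical, and an external address is only assigned when the cluster runs with a cloud provider (or another load-balancer implementation) that fulfils the request.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80-lb             # hypothetical name
spec:
  type: LoadBalancer
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
  selector:
    app: ng-deploy-80
```

Without such an implementation the Service still works as a NodePort, but its EXTERNAL-IP stays pending.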

3.4.5 ExternalName: maps a service outside the k8s cluster into the cluster, so that pods inside can reach the external service through a fixed Service name
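A minimal sketch of such a mapping follows. The Service name and the external host name are placeholders invented for illustration; they do not come from the original notes.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: external-db                 # hypothetical Service name used by pods
  namespace: myserver
spec:
  type: ExternalName
  externalName: db.example.com      # placeholder for the real external host name
```

Inside the cluster, a lookup of external-db.myserver.svc.cluster.local (assuming the default cluster domain) returns a CNAME record for db.example.com; no proxying or port mapping is involved.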

IV Storage: Kubernetes volumes, with emptyDir, hostPath, and NFS examples

4.1 emptyDir: a node-local temporary volume; its data is lost once the pod is deleted

Example

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        emptyDir: {}

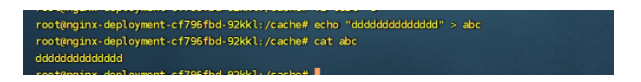

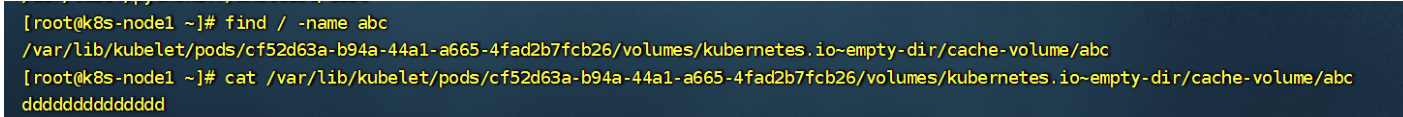

创建完这个deployment之后,进去pod,去/cache创建一个文件,然后去node节点上去查找这个文件

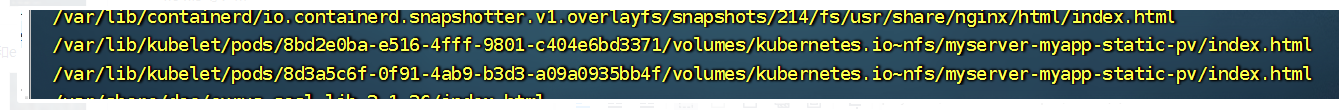

node节点文件所在目录:

然后我们把这个pod删除,然后在看看node节点数据是否还在,结果已经没了

kubectl delete -f deploy_emptyDir.yml # 删除pod

4.2 hostpath:本地存储卷,容器删除完后,数据会保存在宿主机上

实例yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /cache # directory inside the container
          name: cache-volume
      volumes:
      - name: cache-volume
        hostPath:
          path: /tmp/linux66 # directory on the host

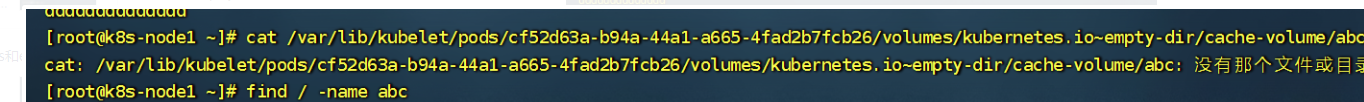

然后我们apply 创建一下,然后再去cache目录里写入东西,然后我们在删除deployment,删除完之后,容器内的数据已经没了,但是宿主机/tmp/linux66 这个目录还存有数据。

4.3 nfs 等:网络存储卷,可以实现容器间数据共享和持久化

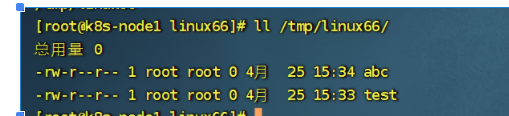

1 先准备一个nfs服务器

yum install -y nfs-utils rpcbind

[root@k8s-etcd01 ~]# cat /etc/exports

/tmp/data 172.31.7.0/24(rw,sync,no_root_squash,fsid=0)

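Then restart the NFS service

2 Client installation

On the client, showmount can be used to list the server's exports; on CentOS it ships in the nfs-utils package.

If nfs-common is not installed (on Ubuntu), mounts may be slow to access.

yum install nfs-utils rpcbind

Client test:

3 NFS example: mounting one directory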

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite # directory inside the pod
          name: my-nfs-volume
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 172.31.7.101 # NFS server address
          path: /tmp/data
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30016
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80

创建后,查看svc

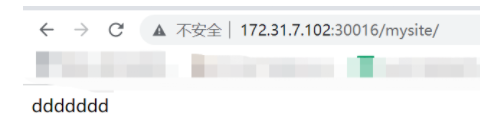

进去/usr/share/nginx/html/mysite 这个目录或者nfs的挂载目录/tmp/data/ 去里面自定义一个页面,效果如下:

4 同时挂载两个目录实例:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-site2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-81
  template:
    metadata:
      labels:
        app: ng-deploy-81
    spec:
      containers:
      - name: ng-deploy-81
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
        - mountPath: /usr/share/nginx/html/magedu
          name: magedu-statics-volume
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 172.31.7.101
          path: /tmp/data
      - name: magedu-statics-volume
        nfs:
          server: 172.31.7.101
          path: /tmp/magedu
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-81
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30017
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-81

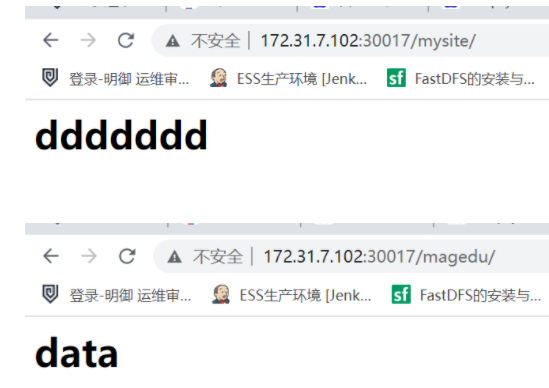

创建完之后,如下效果

五 存储-PV、PVC简介、静态PV/PVC创建及使用案例,动态PV/PVC创建及使用案例

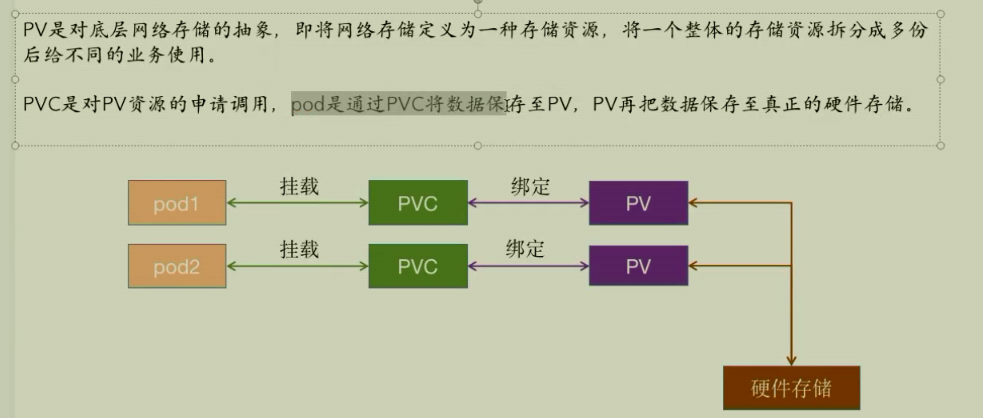

5.1 pv

是集群中存储资源的一个集群资源,不隶属于任何的namespace,pv的数据最终存储在硬件存储。pod不能直接挂载pv,pv需要绑定给pvc,才能由pod挂载pvc使用。

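PV access modes:

ReadWriteOnce (RWO): the PV can be mounted read-write by a single node

ReadOnlyMany (ROX): the PV can be mounted read-only by many nodes

ReadWriteMany (RWX): the PV can be mounted read-write by many nodes

PV reclaim policies:

Retain: manual reclamation

Recycle: basic scrub ( rm -rf /thevolume/* )

Delete: the associated storage asset (for example an AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume) is deleted

Currently, only NFS and HostPath support the Recycle policy. AWS EBS, GCE PD, Azure Disk, and Cinder volumes support the Delete policy.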
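The access mode and reclaim policy described above are both fields of the PV spec. A minimal sketch follows, reusing the NFS export from section 4.3; the PV name and the 5Gi size are illustrative assumptions.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-nfs-pv                    # hypothetical name
spec:
  capacity:
    storage: 5Gi                          # illustrative size
  accessModes:
  - ReadWriteMany                         # RWX: read-write from many nodes
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is deleted
  nfs:
    server: 172.31.7.101
    path: /tmp/data
```

With Retain, the PV moves to the Released state once its PVC is deleted, and an administrator has to clean it up or re-create it before it can be claimed again.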

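5.2 PVC

A pod mounts a PVC and stores its data through it. A PVC is namespaced, so the pod and the PVC must be in the same namespace. A PVC can request a specific capacity and access mode. Deleting the pod does not affect the data behind the PVC.

5.3 Static PV example

5.3.1 Create the PV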

[root@k8s-master1 static_pv]# cat 1-myapp-persistentvolume.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: myserver-myapp-static-pv # name of the PV
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    path: /data/k8sdata/myserver/myappdata
    server: 172.31.7.101

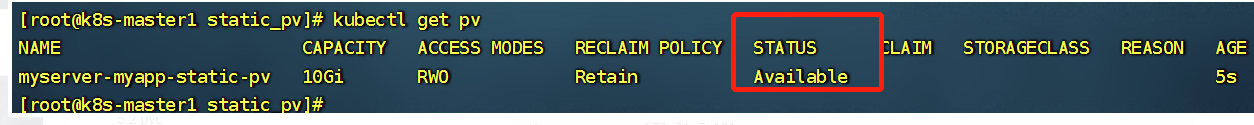

Status of the newly created PV

5.3.2 Create the PVC

[root@k8s-master1 static_pv]# cat 2-myapp-persistentvolumeclaim.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myserver-myapp-static-pvc # name of the PVC
  namespace: myserver
spec:
  volumeName: myserver-myapp-static-pv # name of the PV to bind to
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi

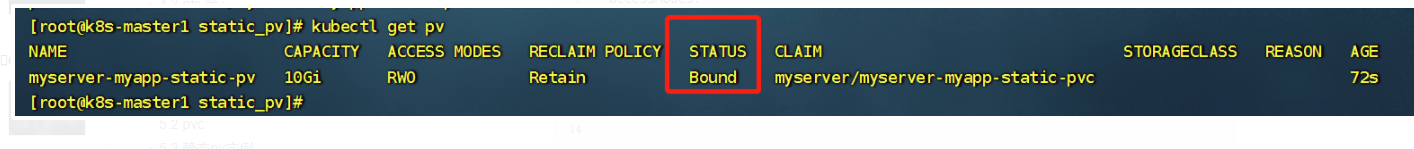

pvc创建之后pv的状态

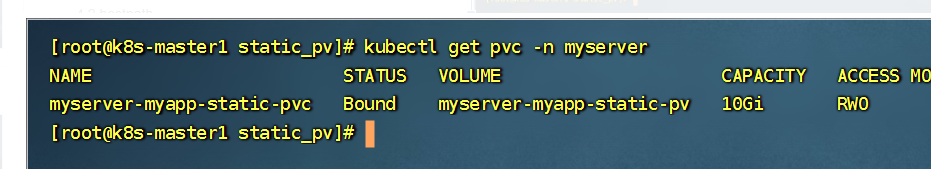

查看pvc

5.3.3 创建deployment去挂载pvc

[root@k8s-master1 static_pv]# cat 3-myapp-webserver.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: myserver-myapp
  name: myserver-myapp-deployment-name
  namespace: myserver
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      containers:
      - name: myserver-myapp-container
        image: nginx:1.20.0
        #imagePullPolicy: Always
        volumeMounts:
        - mountPath: "/usr/share/nginx/html/statics"
          name: statics-datadir
      volumes:
      - name: statics-datadir
        persistentVolumeClaim:
          claimName: myserver-myapp-static-pvc # name of the PVC
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: myserver-myapp-service
  name: myserver-myapp-service-name
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30080
  selector:
    app: myserver-myapp-frontend

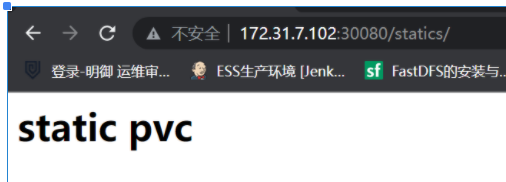

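Finally, go into /usr/share/nginx/html/statics in the pod, create an index.html file, and access it as follows:

On the node, the data is stored in the following two directories

After the Deployment is deleted the data is not lost; recreate the Deployment with the same PVC and the data is back.

5.4 Dynamic PVC

Dynamic volumes suit stateful service clusters, such as MySQL with one primary and several replicas, or a ZooKeeper cluster

5.4.1 Create the account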

kubectl apply -f 1-rbac.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: nfs
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

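5.4.2 Create the StorageClass

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage # this name must match the storageClassName used in step 4 (5.4.4)
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'
reclaimPolicy: Retain # PV reclaim policy; the default is Delete, which removes the data on the NFS server as soon as the PV is deleted
parameters:
  archiveOnDelete: "true" # keep (archive) the data on deletion; with "false" the data is not kept

5.4.3 Deploy the NFS provisioner, which creates PVs automatically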

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs
spec:
  replicas: 1
  strategy: # deployment strategy
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: docker.io/shuaigege/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 172.31.7.122
            - name: NFS_PATH
              value: /tmp/data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.31.7.122
            path: /tmp/data

5.4.4 Create the PVC

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: myserver-myapp-dynamic-pvc
  namespace: myserver
spec:
  storageClassName: managed-nfs-storage # name of the StorageClass to use
  accessModes:
    - ReadWriteMany # access mode
  resources:
    requests:
      storage: 500Mi # requested capacity

5.4.5 Create the pod

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: myserver-myapp
  name: myserver-myapp-deployment-name
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      containers:
      - name: myserver-myapp-container
        image: nginx:1.20.0
        #imagePullPolicy: Always
        volumeMounts:
        - mountPath: "/usr/share/nginx/html/statics"
          name: statics-datadir
      volumes:
      - name: statics-datadir
        persistentVolumeClaim:
          claimName: myserver-myapp-dynamic-pvc
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: myserver-myapp-service
  name: myserver-myapp-service-name
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30080
  selector:
    app: myserver-myapp-frontend

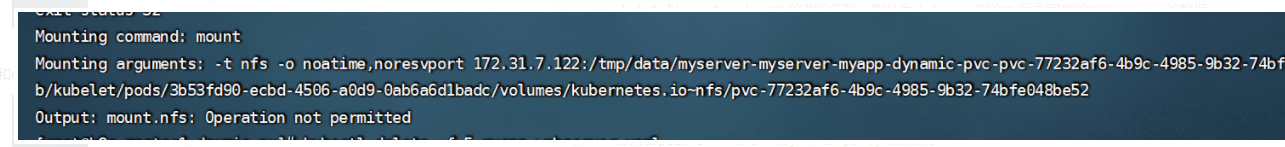

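Note: at step 5 the pod at one point could not mount the PVC. This usually happens when the volume is mounted with NFSv4; it did not work on my system and produced the error shown below. My system is CentOS 7, so I changed the StorageClass. The original StorageClass is shown below; it should work without errors on Ubuntu.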

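apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'
reclaimPolicy: Retain # PV reclaim policy; the default is Delete, which removes the data on the NFS server as soon as the PV is deleted
mountOptions:
  - noresvport # tells the NFS client to use a new TCP source port when it re-establishes a network connection
  - noatime # do not update the inode access timestamp when a file is read; can improve performance under high concurrency
parameters:
  mountOptions: "vers=4.1,noresvport,noatime"
  archiveOnDelete: "true" # keep (archive) the data on deletion; with "false" the data is not kept

The error is as follows: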

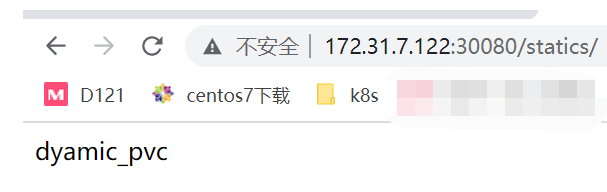

5.4.6 Test the mounted data

My pod's volume is backed by the /tmp/data/ directory and the external port is 30080. To test, I create an index.html directly under /tmp/data/ and access it.
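Section 5.4 notes that dynamic volumes suit stateful clusters. As a hedged sketch of that use, a StatefulSet can request one dynamically provisioned PVC per replica through volumeClaimTemplates, using the managed-nfs-storage StorageClass from 5.4.2. The StatefulSet name, its labels, and the headless Service name web-dynamic are illustrative assumptions; the headless Service is assumed to exist already.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web-dynamic                 # hypothetical name
  namespace: myserver
spec:
  serviceName: web-dynamic          # hypothetical headless Service, assumed to exist
  replicas: 2
  selector:
    matchLabels:
      app: web-dynamic
  template:
    metadata:
      labels:
        app: web-dynamic
    spec:
      containers:
      - name: nginx
        image: nginx:1.20.2-alpine
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:             # one PVC per pod: data-web-dynamic-0, data-web-dynamic-1
  - metadata:
      name: data
    spec:
      storageClassName: managed-nfs-storage
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 500Mi
```

Each replica keeps its own claim across restarts and rescheduling, which is what a primary/replica database or a ZooKeeper member needs.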

六 .存储-ConfigMap、Secret简介及示例

6.1 configmap

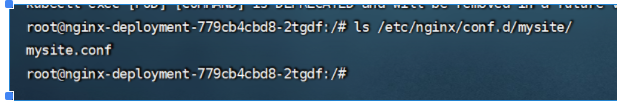

实例一:演示一个将nginx的配置文件,挂载到pod指定目录

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default: |
    server {
       listen       80;
       server_name  www.mysite.com;
       index        index.html index.php index.htm;
       location / {
           root /data/nginx/html;
           if (!-e $request_filename) {
               rewrite ^/(.*) /index.html last;
           }
       }
    }
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.20.0
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-config
          mountPath: /etc/nginx/conf.d/mysite
      volumes:
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
          - key: default # the ConfigMap key is "default"
            path: mysite.conf # file name created under the mount path
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30019
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80

进入到pod里,查看mysite.conf文件,然后去Pod /data/nginx/html 这个目录创建测试文件,进行访问

域名访问这个需要借助反向代理,端口为30019

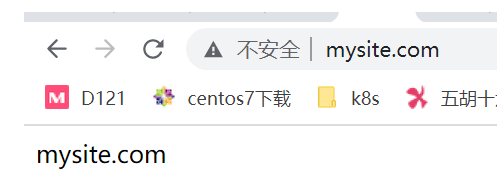

实例二 传递变量到Pod

传递username和password到pod里面

kubectl apply -f 2-deploy_configmap_env.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  username: "user1"
  password: "12345678"
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        env:
        - name: magedu
          value: linux66
        - name: MY_USERNAME
          valueFrom:
            configMapKeyRef:
              name: nginx-config
              key: username # read the username key
        - name: MY_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: nginx-config
              key: password # read the password key
        ports:
        - containerPort: 80

To see the result, enter the pod and run env
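Example 2 maps each key to an environment variable by hand. When every key of the ConfigMap should be exposed, envFrom is a shorter alternative. The sketch below assumes the nginx-config ConfigMap from Example 2 exists in the same namespace; the Pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: configmap-envfrom-demo      # hypothetical name
spec:
  containers:
  - name: demo
    image: nginx
    envFrom:
    - configMapRef:
        name: nginx-config          # every key becomes an environment variable
```

With envFrom the variable names are the ConfigMap keys themselves (here username and password), so this form gives no chance to rename them as MY_USERNAME and MY_PASSWORD.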

6.2 secret

secret 是一种包含少量敏感信息如密码,密钥,secret的名称必须是合法的dns子域名,每个secret 的大小最多为1Mb

secret的类型和使用场景

opaque 用户定义的任意数据

service-account-token serviceaccount 令牌

dockercfg dockercfg文件的序列化形式

dockerconfigjson docker/config.json文件序列化形式

basic-auth 用于基本身份认证的凭据

ssh-auth 用于ssh身份认证的凭据

tls 保存 crt证书和key证书

token 启动引导令牌数据

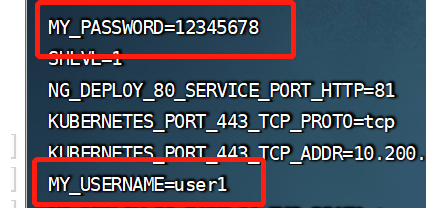

6.2.1 opaque -data类型

挂载一个加密的用户名和密码

[root@k8s-master1 secret]# cat 1-secret-Opaque-data.yaml

apiVersion: v1
kind: Secret
metadata:
  name: mysecret-data
  namespace: myserver
type: Opaque
data:
  user: YWRtaW4K
  password: MTIzNDU2Cg==

kubectl get secrets -n myserver mysecret-data -o yaml # view the stored data

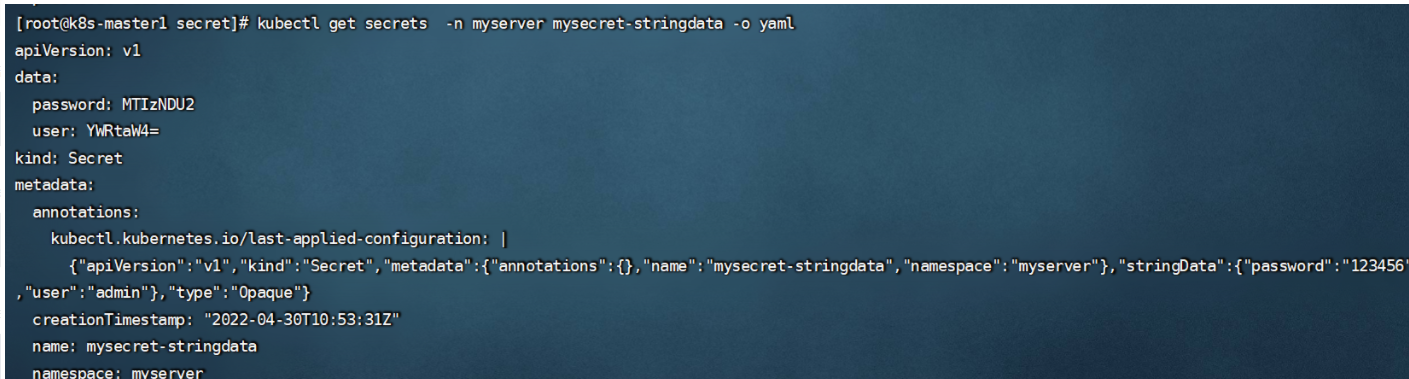
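The two values in 6.2.1 decode to admin and 123456, each followed by a newline character, which is what `echo` without `-n` produces when piped into `base64`. An application that compares the value exactly may reject it. A sketch of the same Secret without the trailing newlines follows; the Secret name is hypothetical, chosen so that it does not replace the one used in the rest of this section.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-data-nonewline     # hypothetical name
  namespace: myserver
type: Opaque
data:
  user: YWRtaW4=                    # base64 of "admin" without a trailing newline
  password: MTIzNDU2                # base64 of "123456" without a trailing newline
```

Values like these can be generated with `echo -n admin | base64` or `printf '%s' admin | base64`.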

6.2.2 Opaque with the stringData field

[root@k8s-master1 secret]# cat 2-secret-Opaque-stringData.yaml

apiVersion: v1
kind: Secret
metadata:
  name: mysecret-stringdata
  namespace: myserver
type: Opaque
stringData:
  user: 'admin'
  password: '123456'

查看里面的数据

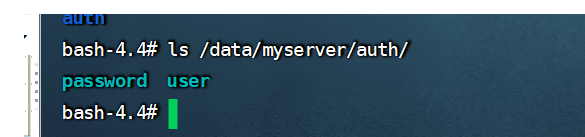

6.2.3 pod如何挂载secret

这里演示6.2.1里生成的secret文件,名字为mysecret-data

[root@k8s-master1 secret]# cat 3-secret-Opaque-mount.yaml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-app1-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-app1
  template:
    metadata:
      labels:
        app: myserver-myapp-app1
    spec:
      containers:
      - name: myserver-myapp-app1
        image: tomcat:7.0.94-alpine
        ports:
        - containerPort: 8080
        volumeMounts:
        - mountPath: /data/myserver/auth # mount path inside the container
          name: myserver-auth-secret
      volumes:
      - name: myserver-auth-secret
        secret:
          secretName: mysecret-data # name of the Secret to mount
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-app1
  namespace: myserver
spec:
  ports:
  - name: http
    port: 8080
    targetPort: 8080
    nodePort: 30018
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-app1

Then enter the pod and check whether the mount succeeded

kubectl exec -it myserver-myapp-app1-deployment-9c46fdb4d-z9d7c -n myserver -- /bin/bash

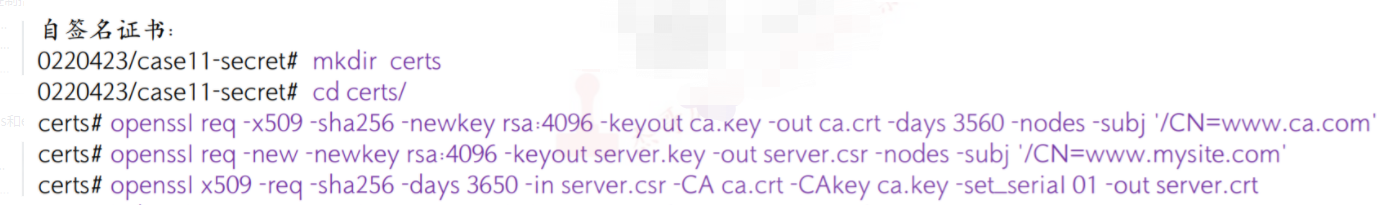
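Besides mounting a Secret as files, its keys can be injected as environment variables. A minimal sketch, assuming the mysecret-data Secret from 6.2.1 exists in the myserver namespace; the Pod name, container name, and variable names are illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: secret-env-demo             # hypothetical name
  namespace: myserver
spec:
  containers:
  - name: demo
    image: nginx:1.20.2-alpine
    env:
    - name: MY_USER                 # illustrative variable name
      valueFrom:
        secretKeyRef:
          name: mysecret-data
          key: user
    - name: MY_PASSWORD             # illustrative variable name
      valueFrom:
        secretKeyRef:
          name: mysecret-data
          key: password
```

The container sees the decoded values, not the base64 text. Unlike a mounted volume, environment variables are not refreshed when the Secret changes; the pod has to be restarted.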

6.2.4 Secret of type TLS: example

The goal is to serve HTTPS with a certificate

First, issue a self-signed certificate

Create the TLS Secret from the certificate just issued:

[root@k8s-master1 certs]# kubectl create secret tls myserver-tls-key --cert=./server.crt --key=./server.key -n myserver

secret/myserver-tls-key created

[root@k8s-master1 certs]# kubectl get secret -n myserver

NAME TYPE DATA AGE

default-token-6flnm kubernetes.io/service-account-token 3 3h47m

myserver-tls-key kubernetes.io/tls

Then create a pod that mounts the Secret

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
  namespace: myserver
data:
  default: |
    server {
       listen       80;
       server_name  www.mysite.com;
       listen 443 ssl;
       ssl_certificate /etc/nginx/conf.d/certs/tls.crt;
       ssl_certificate_key /etc/nginx/conf.d/certs/tls.key;
       location / {
           root /usr/share/nginx/html;
           index index.html;
           if ($scheme = http ){ # without this condition the redirect would loop forever
              rewrite / https://www.mysite.com permanent;
           }
           if (!-e $request_filename) {
               rewrite ^/(.*) /index.html last;
           }
       }
    }
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-frontend-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      containers:
      - name: myserver-myapp-frontend
        image: nginx:1.20.2-alpine
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-config
          mountPath: /etc/nginx/conf.d/myserver
        - name: myserver-tls-key
          mountPath: /etc/nginx/conf.d/certs
      volumes:
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
          - key: default
            path: mysite.conf
      - name: myserver-tls-key
        secret:
          secretName: myserver-tls-key # name of the Secret to mount
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30018
    protocol: TCP
  - name: https
    port: 443
    targetPort: 443
    nodePort: 30019
    protocol: TCP
  selector:
    app: myserver-myapp-frontend

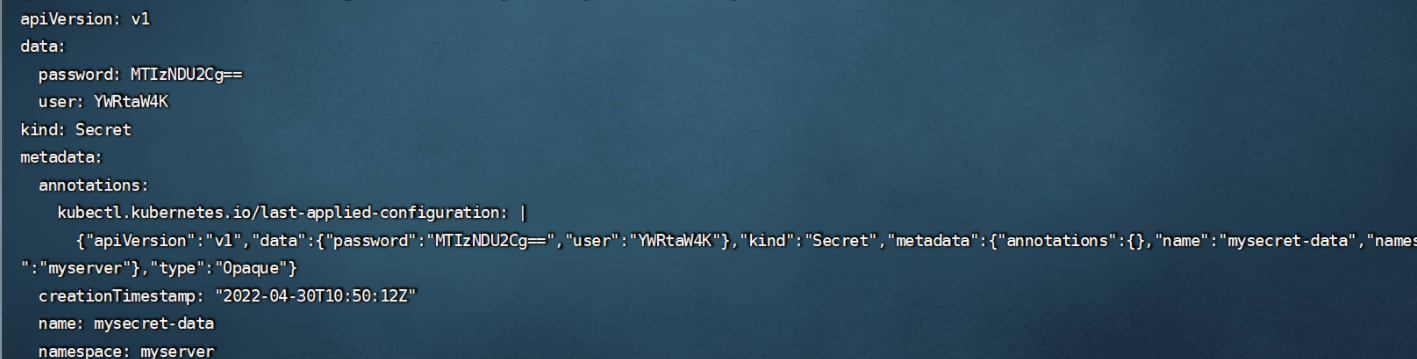

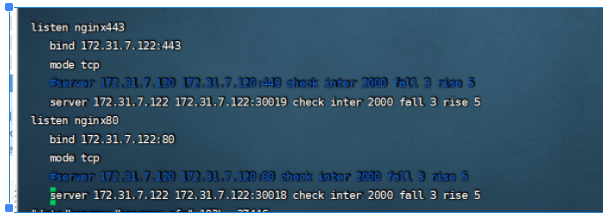

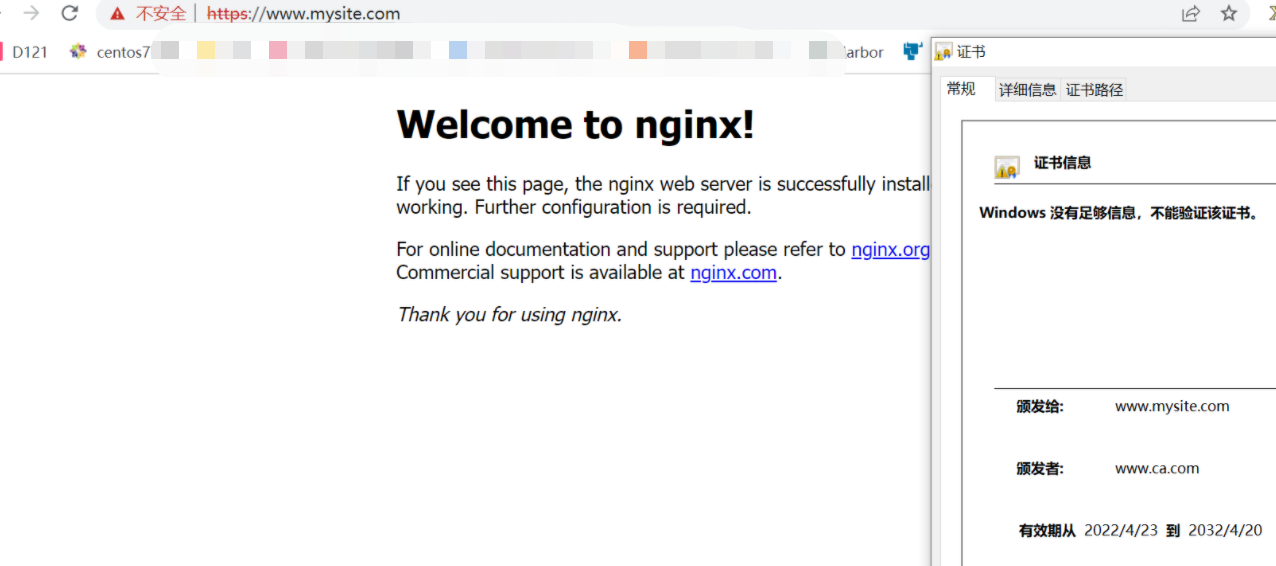

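Then access www.mysite.com to see the result

It has to be accessed through a proxy

Then point the hosts entry at 7.122

6.2.5 dockerconfigjson type

When images are stored in a private registry, every node would otherwise have to log in to the registry in advance. With a dockerconfigjson Secret, the nodes no longer need to be logged in one by one before pulling images.

I tested with an Alibaba Cloud registry: create a repository, set it to private, and log in manually once, which generates the config.json file.

1 Create the docker Secret

kubectl create secret generic aliyun-registry-image-pull-key --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson -n myserver

The Secret is named aliyun-registry-image-pull-key

/root/.docker/config.json is the file that holds the docker login credentials

2 Create a Deployment that pulls the image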

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-frontend-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      containers:
      - name: myserver-myapp-frontend
        image: registry.cn-qingdao.aliyuncs.com/zhangshijie/nginx:1.16.1-alpine-perl
        ports:
        - containerPort: 80
      imagePullSecrets:
      - name: aliyun-registry-image-pull-key
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend
  namespace: myserver
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30018
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-frontend
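Listing imagePullSecrets in every workload can be avoided by attaching the Secret to a ServiceAccount instead. This is a sketch only and not something the original notes do: it assumes the pods run under the namespace's default ServiceAccount and that the aliyun-registry-image-pull-key Secret from step 1 exists in myserver.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: myserver
imagePullSecrets:
- name: aliyun-registry-image-pull-key   # Secret created in step 1
```

Pods created afterwards under this ServiceAccount receive the pull secret automatically; pods that already exist are not changed.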