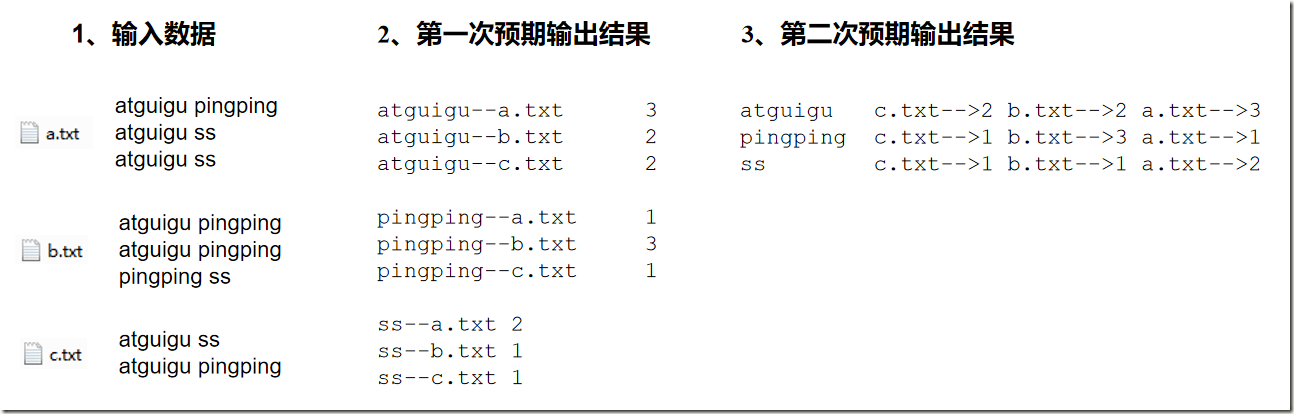

1. Inverted index example (chaining multiple jobs)

1.1 Requirements and analysis

1.2 Code

1.2.1 First pass

1) First pass: write the OneIndexMapper class

    package com.dianchou.mr.index;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;

    import java.io.IOException;

    public class OneIndexMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        private final Text k = new Text();
        private final IntWritable v = new IntWritable(1);
        private String name;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            // Look up the name of the file this split belongs to
            FileSplit split = (FileSplit) context.getInputSplit();
            name = split.getPath().getName();
        }

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            String[] words = line.split(" ");
            for (String word : words) {
                // Emit <word---fileName, 1>
                k.set(word + "---" + name);
                context.write(k, v);
            }
        }
    }

2) First pass: write the OneIndexReducer class

    package com.dianchou.mr.index;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class OneIndexReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        private final IntWritable v = new IntWritable();

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Sum the counts for one key, e.g. atguigu---a.txt 3
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            v.set(sum);
            context.write(key, v);
        }
    }

3) First pass: write the OneIndexDriver class

    package com.dianchou.mr.index;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class OneIndexDriver {

        public static void main(String[] args) throws Exception {
            // Set the input and output paths to match your own machine
            // (backslashes must be escaped inside a Java string literal)
            args = new String[]{"D:\\hadoop\\index-input", "D:\\hadoop\\index-output"};

            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);
            job.setJarByClass(OneIndexDriver.class);

            job.setMapperClass(OneIndexMapper.class);
            job.setReducerClass(OneIndexReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

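4) Check the output of the first pass (the key uses the same `---` separator that OneIndexMapper writes)

    atguigu---a.txt 3
    atguigu---b.txt 2
    atguigu---c.txt 2
    pingping---a.txt 1
    pingping---b.txt 3
    pingping---c.txt 1
    ss---a.txt 2
    ss---b.txt 1
    ss---c.txt 1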

1.2.2 Second pass

1) Second pass: write the TwoIndexMapper class

    package com.dianchou.mr.index;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class TwoIndexMapper extends Mapper<LongWritable, Text, Text, Text> {

        private final Text k = new Text();
        private final Text v = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // atguigu---a.txt 3  ==>  key: atguigu, value: a.txt 3
            String line = value.toString();
            String[] fields = line.split("---");
            k.set(fields[0]);
            v.set(fields[1]);
            context.write(k, v);
        }
    }

2) Second pass: write the TwoIndexReducer class

    package com.dianchou.mr.index;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class TwoIndexReducer extends Reducer<Text, Text, Text, Text> {

        private final Text v = new Text();

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Input:   atguigu  a.txt 3
            //          atguigu  b.txt 2
            //          atguigu  c.txt 2
            // Output:  atguigu  c.txt-->2 b.txt-->2 a.txt-->3
            StringBuilder sb = new StringBuilder();
            for (Text value : values) {
                // The first job's output separates file name and count with a tab
                sb.append(value.toString().replace("\t", "-->")).append("\t");
            }
            v.set(sb.toString());
            context.write(key, v);
        }
    }

3) Second pass: write the TwoIndexDriver class

    package com.dianchou.mr.index;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class TwoIndexDriver {

        public static void main(String[] args) throws Exception {
            // Set the paths to match your own machine; the input here is the
            // output directory of the first job
            args = new String[]{"D:\\hadoop\\index-output", "D:\\hadoop\\index-output2"};

            Configuration config = new Configuration();
            Job job = Job.getInstance(config);
            job.setJarByClass(TwoIndexDriver.class);

            job.setMapperClass(TwoIndexMapper.class);
            job.setReducerClass(TwoIndexReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

4) Check the final result of the second pass

    atguigu c.txt-->2 b.txt-->2 a.txt-->3
    pingping c.txt-->1 b.txt-->3 a.txt-->1
    ss c.txt-->1 b.txt-->1 a.txt-->2
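The two passes can also be traced without a cluster. The following is a minimal plain-Java sketch of the same idea, not the Hadoop jobs themselves: pass 1 counts each `word---file` composite key, and pass 2 splits the key and groups the `file-->count` postings by word. The class name `InvertedIndexSketch`, its method names, and the sample lines are hypothetical, chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class InvertedIndexSketch {

    // Pass 1: count occurrences of each "word---file" composite key.
    static Map<String, Integer> countPerFile(Map<String, List<String>> linesByFile) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted by key, like reducer input
        for (Map.Entry<String, List<String>> file : linesByFile.entrySet()) {
            for (String line : file.getValue()) {
                for (String word : line.split(" ")) {
                    if (word.isEmpty()) {
                        continue;
                    }
                    counts.merge(word + "---" + file.getKey(), 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    // Pass 2: split the composite key and collect "file-->count" per word.
    static Map<String, String> groupByWord(Map<String, Integer> counts) {
        Map<String, List<String>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            String[] fields = e.getKey().split("---");
            grouped.computeIfAbsent(fields[0], w -> new ArrayList<>())
                   .add(fields[1] + "-->" + e.getValue());
        }
        Map<String, String> index = new LinkedHashMap<>();
        grouped.forEach((word, postings) -> index.put(word, String.join("\t", postings)));
        return index;
    }

    public static void main(String[] args) {
        // Hypothetical sample data, not the tutorial's actual input files
        Map<String, List<String>> input = new LinkedHashMap<>();
        input.put("a.txt", Arrays.asList("atguigu pingping", "atguigu ss", "atguigu ss"));
        input.put("b.txt", Arrays.asList("atguigu pingping", "atguigu pingping", "pingping ss"));
        groupByWord(countPerFile(input))
                .forEach((word, postings) -> System.out.println(word + "\t" + postings));
    }
}
```

Because the sketch keeps everything in sorted maps, the postings for a word come out in file-name order. In the real job the order of values inside one reduce call is not guaranteed, which is why the result above lists c.txt before a.txt.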

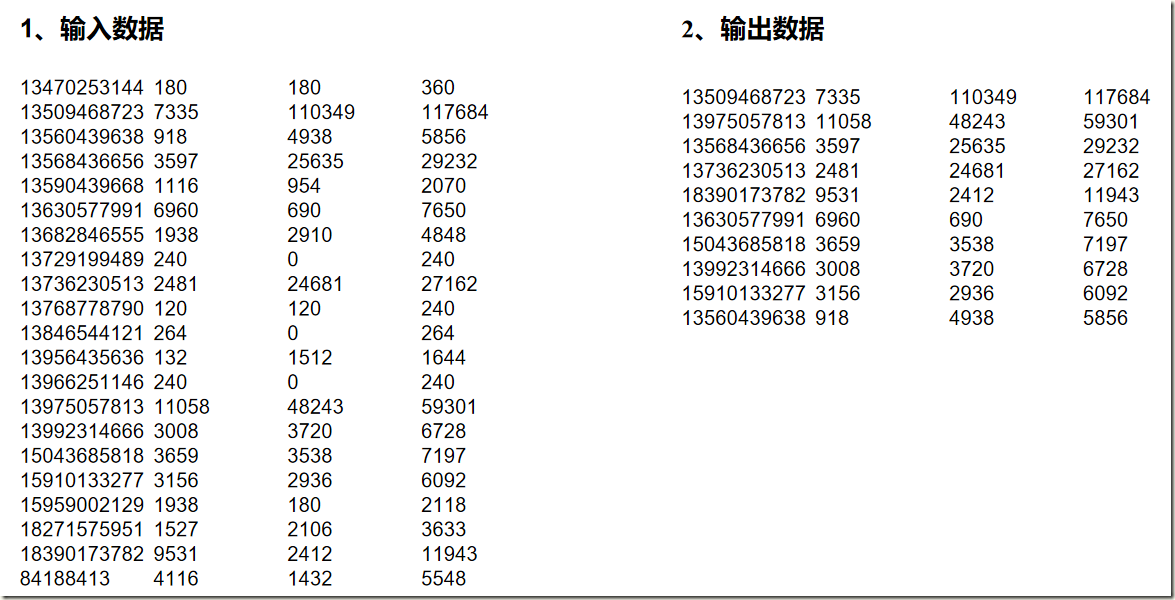

2. TopN example

2.1 Requirements and analysis

2.2 Implementation

1) Write the FlowBean class

    package com.dianchou.mr.top;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    import org.apache.hadoop.io.WritableComparable;

    public class FlowBean implements WritableComparable<FlowBean> {

        private long upFlow;
        private long downFlow;
        private long sumFlow;

        // Hadoop needs a no-argument constructor to deserialize the bean
        public FlowBean() {
            super();
        }

        public FlowBean(long upFlow, long downFlow) {
            super();
            this.upFlow = upFlow;
            this.downFlow = downFlow;
            this.sumFlow = upFlow + downFlow;
        }

        // Serialization: the field order must match readFields
        @Override
        public void write(DataOutput out) throws IOException {
            out.writeLong(upFlow);
            out.writeLong(downFlow);
            out.writeLong(sumFlow);
        }

        // Deserialization: read the fields in the order they were written
        @Override
        public void readFields(DataInput in) throws IOException {
            upFlow = in.readLong();
            downFlow = in.readLong();
            sumFlow = in.readLong();
        }

        public long getUpFlow() {
            return upFlow;
        }

        public void setUpFlow(long upFlow) {
            this.upFlow = upFlow;
        }

        public long getDownFlow() {
            return downFlow;
        }

        public void setDownFlow(long downFlow) {
            this.downFlow = downFlow;
        }

        public long getSumFlow() {
            return sumFlow;
        }

        public void setSumFlow(long sumFlow) {
            this.sumFlow = sumFlow;
        }

        @Override
        public String toString() {
            return upFlow + " " + downFlow + " " + sumFlow;
        }

        // Note the parameter order: downFlow first, then upFlow
        public void set(long downFlow2, long upFlow2) {
            downFlow = downFlow2;
            upFlow = upFlow2;
            sumFlow = downFlow2 + upFlow2;
        }

        // Sort by total flow in descending order
        @Override
        public int compareTo(FlowBean bean) {
            return Long.compare(bean.getSumFlow(), this.sumFlow);
        }
    }
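FlowBean's `write` and `readFields` only round-trip correctly when both handle the fields in the same order. The sketch below checks that with nothing but `java.io` streams. It is a simplified stand-in, not the Hadoop class: `FlowRecordSketch` and `roundTrip` are hypothetical names, and the class implements plain `Comparable` rather than `WritableComparable`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

final class FlowRecordSketch implements Comparable<FlowRecordSketch> {
    long upFlow;
    long downFlow;
    long sumFlow;

    FlowRecordSketch() {
    }

    FlowRecordSketch(long upFlow, long downFlow) {
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        this.sumFlow = upFlow + downFlow;
    }

    // Same field order as FlowBean.write
    void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    // Must read in exactly the order used by write
    void readFields(DataInput in) throws IOException {
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

    // Descending by total flow, like FlowBean.compareTo
    @Override
    public int compareTo(FlowRecordSketch other) {
        return Long.compare(other.sumFlow, this.sumFlow);
    }

    // Serialize to a byte array and read it back into a fresh object.
    static FlowRecordSketch roundTrip(FlowRecordSketch record) {
        try {
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            try (DataOutputStream out = new DataOutputStream(buffer)) {
                record.write(out);
            }
            FlowRecordSketch copy = new FlowRecordSketch();
            try (DataInputStream in =
                         new DataInputStream(new ByteArrayInputStream(buffer.toByteArray()))) {
                copy.readFields(in);
            }
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        FlowRecordSketch copy = roundTrip(new FlowRecordSketch(10, 20));
        System.out.println(copy.upFlow + " " + copy.downFlow + " " + copy.sumFlow);
    }
}
```

Swapping two of the `readLong` calls in `readFields` would silently exchange the field values, which is the kind of mistake this round trip makes visible.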

2) Write the TopNMapper class

    package com.dianchou.mr.top;

    import java.io.IOException;
    import java.util.Map;
    import java.util.TreeMap;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class TopNMapper extends Mapper<LongWritable, Text, FlowBean, Text> {

        // A TreeMap keeps its entries sorted by key. Keys that compare as equal
        // (same sumFlow) overwrite each other.
        private final TreeMap<FlowBean, Text> flowMap = new TreeMap<FlowBean, Text>();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            FlowBean kBean = new FlowBean();
            Text v = new Text();

            // 1 Read one line
            String line = value.toString();

            // 2 Split it on whitespace (adjust if your input uses another delimiter)
            String[] fields = line.split("\\s+");

            // 3 Populate the bean
            String phoneNum = fields[0];
            long upFlow = Long.parseLong(fields[1]);
            long downFlow = Long.parseLong(fields[2]);
            long sumFlow = Long.parseLong(fields[3]);

            kBean.setDownFlow(downFlow);
            kBean.setUpFlow(upFlow);
            kBean.setSumFlow(sumFlow);
            v.set(phoneNum);

            // 4 Add the record to the TreeMap
            flowMap.put(kBean, v);

            // 5 Keep at most 10 entries: drop the one with the smallest total flow
            if (flowMap.size() > 10) {
                flowMap.remove(flowMap.lastKey());
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            // 6 Emit this mapper's top 10 once all input has been seen
            for (Map.Entry<FlowBean, Text> entry : flowMap.entrySet()) {
                context.write(entry.getKey(), entry.getValue());
            }
        }
    }

3) Write the TopNReducer class

    package com.dianchou.mr.top;

    import java.io.IOException;
    import java.util.Map;
    import java.util.TreeMap;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class TopNReducer extends Reducer<FlowBean, Text, Text, FlowBean> {

        // A TreeMap keeps its entries sorted by key
        private final TreeMap<FlowBean, Text> flowMap = new TreeMap<FlowBean, Text>();

        @Override
        protected void reduce(FlowBean key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            for (Text value : values) {
                // Hadoop reuses the key and value objects, so copy them before storing
                FlowBean bean = new FlowBean();
                bean.set(key.getDownFlow(), key.getUpFlow());

                // 1 Add the record to the TreeMap
                flowMap.put(bean, new Text(value));

                // 2 Keep at most 10 entries: drop the one with the smallest total flow
                if (flowMap.size() > 10) {
                    flowMap.remove(flowMap.lastKey());
                }
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            // 3 Emit the overall top 10 as <phone number, flow bean>
            for (Map.Entry<FlowBean, Text> entry : flowMap.entrySet()) {
                context.write(new Text(entry.getValue()), entry.getKey());
            }
        }
    }

4) Write the TopNDriver class

    package com.dianchou.mr.top;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class TopNDriver {

        public static void main(String[] args) throws Exception {
            // Set the input and output paths to match your own machine
            args = new String[]{"D:\\hadoop\\top-input", "D:\\hadoop\\top-output"};

            // 1 Get the configuration and create the job instance
            Configuration configuration = new Configuration();
            Job job = Job.getInstance(configuration);

            // 2 Specify the jar that contains this program
            job.setJarByClass(TopNDriver.class);

            // 3 Specify the Mapper and Reducer classes for this job
            job.setMapperClass(TopNMapper.class);
            job.setReducerClass(TopNReducer.class);

            // 4 Specify the key/value types of the mapper output
            job.setMapOutputKeyClass(FlowBean.class);
            job.setMapOutputValueClass(Text.class);

            // 5 Specify the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(FlowBean.class);

            // 6 Specify the input directory and the output directory
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7 Submit the job (its configuration and the jar with its classes) and wait
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }
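Both the mapper and the reducer rely on the same trick: a `TreeMap` sorted in descending order that is trimmed back to N entries after every insert. A minimal plain-Java sketch of just that trick follows, assuming totals keyed by a phone identifier. `TopNSketch`, `topN`, and the sample identifiers are hypothetical and stand in for the FlowBean-keyed map used in the job.

```java
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TreeMap;

final class TopNSketch {

    // Keep only the n largest totals. The map is sorted in descending order,
    // so lastKey() is always the smallest total currently held.
    static TreeMap<Long, String> topN(Map<String, Long> totalsByPhone, int n) {
        TreeMap<Long, String> top = new TreeMap<>(Comparator.reverseOrder());
        for (Map.Entry<String, Long> e : totalsByPhone.entrySet()) {
            // Caveat: two phones with the same total share one key,
            // so the later one replaces the earlier one.
            top.put(e.getValue(), e.getKey());
            if (top.size() > n) {
                top.remove(top.lastKey());
            }
        }
        return top;
    }

    public static void main(String[] args) {
        // Hypothetical sample data
        Map<String, Long> totals = new LinkedHashMap<>();
        totals.put("phone-1", 100L);
        totals.put("phone-2", 300L);
        totals.put("phone-3", 200L);
        totals.put("phone-4", 50L);
        topN(totals, 2).forEach((total, phone) -> System.out.println(phone + "\t" + total));
    }
}
```

The same caveat applies to the job: because `FlowBean.compareTo` looks only at `sumFlow`, two records with equal totals count as the same key. If ties must be kept, one option is to extend the comparison with a tie-breaker such as the phone number.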

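3. Common friends example

3.1 Requirements and analysis

The input is a friend list: the part before the colon is a user, and the part after it lists all of that user's friends (friendships in this data are one-directional). Find every pair of users who have friends in common, and list who those common friends are.

Input data:

    A:B,C,D,F,E,O
    B:A,C,E,K
    C:F,A,D,I
    D:A,E,F,L
    E:B,C,D,M,L
    F:A,B,C,D,E,O,M
    G:A,C,D,E,F
    H:A,C,D,E,O
    I:A,O
    J:B,O
    K:A,C,D
    L:D,E,F
    M:E,F,G
    O:A,H,I,J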

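Analysis:

First work out, for each of A, B, C, ..., whose friend they are. Then, in a second pass, pair up the users in each of those lists two by two: every such pair has that friend in common.

Output of the first pass:

    A I,K,C,B,G,F,H,O,D,
    B A,F,J,E,
    C A,E,B,H,F,G,K,
    D G,C,K,A,L,F,E,H,
    E G,M,L,H,A,F,B,D,
    F L,M,D,C,G,A,
    G M,
    H O,
    I O,C,
    J O,
    K B,
    L D,E,
    M E,F,
    O A,H,I,J,F,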

Output of the second pass:

    A-B E C
    A-C D F
    A-D E F
    A-E D B C
    A-F O B C D E
    A-G F E C D
    A-H E C D O
    A-I O
    A-J O B
    A-K D C
    A-L F E D
    A-M E F
    B-C A
    B-D A E
    B-E C
    B-F E A C
    B-G C E A
    B-H A E C
    B-I A
    B-K C A
    B-L E
    B-M E
    B-O A
    C-D A F
    C-E D
    C-F D A
    C-G D F A
    C-H D A
    C-I A
    C-K A D
    C-L D F
    C-M F
    C-O I A
    D-E L
    D-F A E
    D-G E A F
    D-H A E
    D-I A
    D-K A
    D-L E F
    D-M F E
    D-O A
    E-F D M C B
    E-G C D
    E-H C D
    E-J B
    E-K C D
    E-L D
    F-G D C A E
    F-H A D O E C
    F-I O A
    F-J B O
    F-K D C A
    F-L E D
    F-M E
    F-O A
    G-H D C E A
    G-I A
    G-K D A C
    G-L D F E
    G-M E F
    G-O A
    H-I O A
    H-J O
    H-K A C D
    H-L D E
    H-M E
    H-O A
    I-J O
    I-K A
    I-O A
    K-L D
    K-O A
    L-M E F
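The two passes described in the analysis can be sketched in plain Java before turning to the MapReduce classes. This is an in-memory illustration under simplifying assumptions, not the jobs themselves: `CommonFriendsSketch`, `invert`, and `pairUp` are hypothetical names, and only the first three lines of the input data are used in `main`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class CommonFriendsSketch {

    // Pass 1: turn "person:friend,friend,..." into friend -> persons who list that friend.
    static Map<String, List<String>> invert(List<String> lines) {
        Map<String, List<String>> personsByFriend = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split(":");
            if (fields.length < 2) {
                continue; // skip empty or malformed lines
            }
            for (String friend : fields[1].split(",")) {
                personsByFriend.computeIfAbsent(friend, f -> new ArrayList<>()).add(fields[0]);
            }
        }
        return personsByFriend;
    }

    // Pass 2: every pair of persons who list the same friend has that friend in common.
    static Map<String, List<String>> pairUp(Map<String, List<String>> personsByFriend) {
        Map<String, List<String>> commonByPair = new TreeMap<>();
        for (Map.Entry<String, List<String>> e : personsByFriend.entrySet()) {
            List<String> persons = new ArrayList<>(e.getValue());
            Collections.sort(persons); // sorting makes "A-B" and "B-A" the same key
            for (int i = 0; i < persons.size() - 1; i++) {
                for (int j = i + 1; j < persons.size(); j++) {
                    String pair = persons.get(i) + "-" + persons.get(j);
                    commonByPair.computeIfAbsent(pair, p -> new ArrayList<>()).add(e.getKey());
                }
            }
        }
        return commonByPair;
    }

    public static void main(String[] args) {
        // First three lines of the input data
        List<String> lines = Arrays.asList("A:B,C,D,F,E,O", "B:A,C,E,K", "C:F,A,D,I");
        pairUp(invert(lines)).forEach(
                (pair, friends) -> System.out.println(pair + "\t" + String.join(" ", friends)));
    }
}
```

Sorting the persons before pairing is the step that matters most: without it the same two users could be emitted as both `A-B` and `B-A` and would end up in different groups.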

3.2 Code

1) First-pass Mapper class

    package com.dianchou.mr.friends;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class OneShareFriendsMapper extends Mapper<LongWritable, Text, Text, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // 1 Read one line, e.g. A:B,C,D,F,E,O
            String line = value.toString();

            // Skip empty lines (for example at the end of the file)
            if ("".equals(line)) {
                return;
            }

            // 2 Split into person and friend list
            String[] fields = line.split(":");
            if (fields.length < 2) {
                return; // no friends listed on this line
            }

            // 3 Get the person and the friends
            String person = fields[0];
            String[] friends = fields[1].split(",");

            // 4 Emit <friend, person> for every friend
            for (String friend : friends) {
                context.write(new Text(friend), new Text(person));
            }
        }
    }

2) First-pass Reducer class

    package com.dianchou.mr.friends;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class OneShareFriendsReducer extends Reducer<Text, Text, Text, Text> {

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            StringBuilder sb = new StringBuilder();

            // 1 Join all persons who have this friend, separated by commas
            for (Text person : values) {
                sb.append(person).append(",");
            }

            // 2 Emit <friend, person,person,...>
            context.write(key, new Text(sb.toString()));
        }
    }

3) First-pass Driver class

    package com.dianchou.mr.friends;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class OneShareFriendsDriver {

        public static void main(String[] args) throws Exception {
            // Set the input and output paths to match your own machine
            args = new String[]{"D:\\hadoop\\friends-input", "D:\\hadoop\\friends-output"};

            // 1 Create the job
            Configuration configuration = new Configuration();
            Job job = Job.getInstance(configuration);

            // 2 Specify the jar that contains this program
            job.setJarByClass(OneShareFriendsDriver.class);

            // 3 Specify the Mapper and Reducer classes
            job.setMapperClass(OneShareFriendsMapper.class);
            job.setReducerClass(OneShareFriendsReducer.class);

            // 4 Specify the key/value types of the mapper output
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);

            // 5 Specify the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            // 6 Specify the input directory and the output directory
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7 Submit the job and wait for it to finish
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

4) Second-pass Mapper class

    package com.dianchou.mr.friends;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;
    import java.util.Arrays;

    public class TwoShareFriendsMapper extends Mapper<LongWritable, Text, Text, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Input line:  A  I,K,C,B,G,F,H,O,D,
            // Layout:      friend  person,person,person
            // The first job's output separates key and value with a tab
            String line = value.toString();
            String[] friendPersons = line.split("\t");

            String friend = friendPersons[0];
            String[] persons = friendPersons[1].split(",");

            // Sort so that a pair is always written the same way (A-B, never B-A)
            Arrays.sort(persons);

            for (int i = 0; i < persons.length - 1; i++) {
                for (int j = i + 1; j < persons.length; j++) {
                    // Emit <person-person, friend> so that all friends shared by
                    // the same pair arrive at the same reduce call
                    context.write(new Text(persons[i] + "-" + persons[j]), new Text(friend));
                }
            }
        }
    }

5) Second-pass Reducer class

    package com.dianchou.mr.friends;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class TwoShareFriendsReducer extends Reducer<Text, Text, Text, Text> {

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Join all common friends of this pair, separated by spaces
            StringBuilder sb = new StringBuilder();
            for (Text friend : values) {
                sb.append(friend).append(" ");
            }
            context.write(key, new Text(sb.toString()));
        }
    }

6) Second-pass Driver class

    package com.dianchou.mr.friends;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class TwoShareFriendsDriver {

        public static void main(String[] args) throws Exception {
            // Set the paths to match your own machine; the input here is the
            // output directory of the first job
            args = new String[]{"D:\\hadoop\\friends-output", "D:\\hadoop\\friends-output2"};

            // 1 Create the job
            Configuration configuration = new Configuration();
            Job job = Job.getInstance(configuration);

            // 2 Specify the jar that contains this program
            job.setJarByClass(TwoShareFriendsDriver.class);

            // 3 Specify the Mapper and Reducer classes
            job.setMapperClass(TwoShareFriendsMapper.class);
            job.setReducerClass(TwoShareFriendsReducer.class);

            // 4 Specify the key/value types of the mapper output
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);

            // 5 Specify the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            // 6 Specify the input directory and the output directory
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7 Submit the job and wait for it to finish
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }