I. System Initialization

1) Environment preparation

| Node | IP |
| --- | --- |
| master01 | 10.0.0.11 |
| node01 | 10.0.0.20 |
| node02 | 10.0.0.21 |
| harbor | 10.0.0.12 |

2) Set the hostname and hosts resolution

#Set the hostname

[root@k8s-master ~]# hostnamectl set-hostname k8s-master01

#Configure hosts resolution

[root@k8s-master01 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.11 k8s-master01
10.0.0.20 k8s-node01
10.0.0.21 k8s-node02
10.0.0.12 harbor

#Copy the hosts file to the other servers

[root@k8s-master01 ~]# scp /etc/hosts root@10.0.0.20:/etc/hosts

[root@k8s-master01 ~]# scp /etc/hosts root@10.0.0.21:/etc/hosts

[root@k8s-master01 ~]# scp /etc/hosts root@10.0.0.12:/etc/hosts
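The hosts entries and the copy commands can also be generated from a single node list rather than typed by hand. The following is a minimal sketch, assuming the four machines from the table in step 1; the names `NODES`, `render_hosts` and `print_copy_commands` are illustrative and not part of the original setup. It only prints the commands and does not run them.

```shell
# Hypothetical helper names (NODES, render_hosts, print_copy_commands) are illustrative.
# One "IP hostname" pair per line, taken from the table in step 1.
NODES='10.0.0.11 k8s-master01
10.0.0.20 k8s-node01
10.0.0.21 k8s-node02
10.0.0.12 harbor'

# Print the /etc/hosts lines for all machines.
render_hosts() {
  printf '%s\n' "$NODES"
}

# Print (without running) the scp commands that push /etc/hosts
# from the master to every other machine.
print_copy_commands() {
  printf '%s\n' "$NODES" | while read -r ip name; do
    if [ "$ip" != "10.0.0.11" ]; then
      printf 'scp /etc/hosts root@%s:/etc/hosts\n' "$ip"
    fi
  done
}

render_hosts
print_copy_commands
```

On the master, `render_hosts >> /etc/hosts` would append the entries, and piping `print_copy_commands` into `sh` would perform the copies, provided root SSH access to the other machines is available.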

3) Install the required packages

[root@k8s-master01 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master01 ~]# yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

4) Switch the firewall to iptables and flush its rules

[root@k8s-master01 ~]# systemctl stop firewalld && systemctl disable firewalld

[root@k8s-master01 ~]# systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@k8s-master01 ~]# yum install -y iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

5) Disable swap and SELinux

During kubeadm init, Kubernetes checks whether swap is disabled. If swap is enabled, pods may end up running out of swap space, which greatly reduces performance.

#Disable swap (the sed command comments out the swap line in /etc/fstab)

[root@k8s-master01 ~]# swapoff -a && sed -r -i '/swap/s@(.*)@#\1@g' /etc/fstab

#Disable SELinux

[root@k8s-master01 ~]# setenforce 0 && sed -i 's#^SELINUX=.*#SELINUX=disabled#g' /etc/selinux/config
setenforce: SELinux is disabled

[root@k8s-master01 ~]# getenforce
Disabled
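Because the sed command edits /etc/fstab in place, it may be worth trying the expression on a throwaway copy first and then confirming that no swap device is still active. The following is a minimal sketch, assuming GNU sed as shipped with CentOS 7; the function names `comment_swap_lines` and `swap_is_off` are illustrative. The expression uses the `\1` back-reference so that the original line is kept after the `#`.

```shell
# Comment out every line that mentions swap in the given fstab-style file.
# Assumes GNU sed (-r and -i), as shipped with CentOS 7.
comment_swap_lines() {
  sed -r -i '/swap/s@(.*)@#\1@g' "$1"
}

# Succeed when the given /proc/swaps-style file lists no active swap device.
# The first line of /proc/swaps is a header, so only the lines after it count.
swap_is_off() {
  [ "$(tail -n +2 "$1" | wc -l)" -eq 0 ]
}

# Demonstration on a throwaway file instead of the real /etc/fstab.
demo=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
comment_swap_lines "$demo"
cat "$demo"
rm -f "$demo"
```

After `swapoff -a`, calling `swap_is_off /proc/swaps` on the real machine should succeed.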

6) Upgrade the kernel to 4.4

#After the installation, check that the menuentry for the new kernel in /boot/grub2/grub.cfg contains an initrd16 line; if it does not, install again

[root@k8s-master01 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

[root@k8s-master01 ~]# yum --enablerepo=elrepo-kernel install -y kernel-lt

#Boot from the new kernel by default (the menu entry title must match the kernel-lt version that was actually installed)

[root@k8s-master01 ~]# grub2-set-default "CentOS Linux (4.4.182-1.el7.elrepo.x86_64) 7 (Core)"

#Reboot

[root@k8s-master01 ~]# reboot

[root@k8s-master01 ~]# uname -r
4.4.212-1.el7.elrepo.x86_64

7) Tune the kernel parameters

cat > /etc/sysctl.d/kubernetes.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
#Avoid using swap; allow it only when the system would otherwise run out of memory
vm.swappiness=0
#Do not check whether enough physical memory is available
vm.overcommit_memory=1
#Do not panic on OOM; let the OOM killer handle it
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF

#Apply the configuration

[root@k8s-master01 ~]# sysctl -p /etc/sysctl.d/kubernetes.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
net.ipv4.tcp_tw_recycle = 0
vm.swappiness = 0
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_instances = 8192
fs.inotify.max_user_watches = 1048576
fs.file-max = 52706963
fs.nr_open = 52706963
net.ipv6.conf.all.disable_ipv6 = 1
sysctl: cannot stat /proc/sys/net/netfilter/nf_conntrack_max: No such file or directory

#The final error appears because the nf_conntrack module is not loaded yet, so that key does not exist; run the command again after the modules in section II have been loaded
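To see which settings actually took effect, the file can be compared against the live values under /proc/sys. The following is a minimal sketch; the function names `list_sysctl_settings` and `check_sysctl_file` are illustrative, and it assumes one `key=value` setting per line with comments on their own lines.

```shell
# Print "key=value" for every setting in a sysctl.d-style file,
# skipping blank lines and comment lines (those starting with # or ;).
list_sysctl_settings() {
  sed -e 's/[[:space:]]*=[[:space:]]*/=/' \
      -e '/^[[:space:]]*$/d' \
      -e '/^[[:space:]]*[#;]/d' "$1"
}

# Compare each setting in the file with the live value under /proc/sys
# and report keys that are missing or whose value differs.
check_sysctl_file() {
  list_sysctl_settings "$1" | while IFS='=' read -r key want; do
    path="/proc/sys/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$path" ]; then
      printf 'MISSING %s\n' "$key"
    elif [ "$(cat "$path")" != "$want" ]; then
      printf 'DIFFERS %s (want %s, have %s)\n' "$key" "$want" "$(cat "$path")"
    fi
  done
}

# Check the file from this step, if it exists on this machine.
conf=/etc/sysctl.d/kubernetes.conf
if [ -f "$conf" ]; then
  check_sysctl_file "$conf"
fi
```

A key such as net.netfilter.nf_conntrack_max would be reported as MISSING for as long as the nf_conntrack module is not loaded.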

8) Set the system time zone

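#Set the system time zone to Asia/Shanghai

[root@k8s-master01 ~]# timedatectl set-timezone Asia/Shanghai

#Write the current UTC time to the hardware clock (keep the RTC in UTC)

[root@k8s-master01 ~]# timedatectl set-local-rtc 0

#Restart the services that depend on the system time

[root@k8s-master01 ~]# systemctl restart rsyslog && systemctl restart crond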

9) Stop services that are not needed

[root@k8s-master01 ~]# systemctl stop postfix && systemctl disable postfix

10) Configure rsyslogd and systemd journald

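Starting with CentOS 7, the init system is systemd, so two logging systems (rsyslogd and journald) run side by side. The steps below keep only one of them, journald.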

#Directory for persistent log storage

[root@k8s-master01 ~]# mkdir /var/log/journal

[root@k8s-master01 ~]# mkdir /etc/systemd/journald.conf.d

#Configuration file

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
[Journal]
#Persist logs to disk
Storage=persistent
#Compress historical logs
Compress=yes
SyncIntervalSec=5m
RateLimitInterval=30s
RateLimitBurst=1000
#Use at most 10G of disk space
SystemMaxUse=10G
#Limit a single log file to 200M
SystemMaxFileSize=200M
#Keep logs for 2 weeks
MaxRetentionSec=2week
#Do not forward logs to syslog
ForwardToSyslog=no
EOF

#Restart journald to apply the configuration

[root@k8s-master01 ~]# systemctl restart systemd-journald

II. Prerequisites for enabling IPVS in kube-proxy

#Load the br_netfilter module

[root@k8s-master01 ~]# modprobe br_netfilter

#Add the module-loading script

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

#Make the script executable, run it, and check the loaded modules

[root@k8s-master01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
nf_conntrack_ipv4 20480 0
nf_defrag_ipv4 16384 1 nf_conntrack_ipv4
ip_vs_sh 16384 0
ip_vs_wrr 16384 0
ip_vs_rr 16384 0
ip_vs 147456 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 114688 2 ip_vs,nf_conntrack_ipv4
libcrc32c 16384 2 xfs,ip_vs
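After a reboot it can be useful to confirm that every required module is still loaded before kube-proxy is expected to run in IPVS mode. The following is a minimal sketch that reads a /proc/modules-style file; the function name `missing_modules` is illustrative. It assumes the 4.4 kernel used in this article, where nf_conntrack_ipv4 is a separate module, and it cannot see drivers that are built into the kernel rather than loaded as modules.

```shell
# Print every module from the argument list that is absent from the
# given /proc/modules-style file (the first column is the module name).
missing_modules() {
  modules_file=$1
  shift
  for mod in "$@"; do
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      printf '%s\n' "$mod"
    fi
  done
}

# Report which of the modules from this section are not loaded on this machine.
if [ -r /proc/modules ]; then
  missing_modules /proc/modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
fi
```

An empty result means all listed modules are present; any name that is printed still needs a `modprobe`.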

III. Installing Docker

#Docker dependencies

[root@k8s-master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

#Add the Aliyun docker-ce repository

[root@k8s-master01 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#Update the system and install docker-ce

[root@k8s-master01 ~]# yum update -y && yum install -y docker-ce

#Configuration files

[root@k8s-master01 ~]# mkdir /etc/docker -p

[root@k8s-master01 ~]# mkdir -p /etc/systemd/system/docker.service.d

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

}

}

EOF

#Start Docker

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl start docker && systemctl enable docker

IV. Installing kubeadm

#Add the Aliyun Kubernetes YUM repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

#On every node, install kubeadm (the bootstrap tool), kubectl (the command-line client) and kubelet (which talks to the container runtime through the CRI to create containers)

[root@k8s-master01 ~]# yum -y install kubeadm-1.15.1 kubectl-1.15.1 kubelet-1.15.1

#Enable kubelet at boot, but do not start it yet

[root@k8s-master01 ~]# systemctl enable kubelet.service

V. Initializing the master node

Link: https://pan.baidu.com/s/1bTSYZ0tflYbJ8DQKgbfq0w

Extraction code: 7kry

------------------------------------------------------

[root@k8s-master01 ~]# cd k8s/

[root@k8s-master01 k8s]# ls

kubeadm-basic.images.tar.gz

[root@k8s-master01 k8s]# tar xf kubeadm-basic.images.tar.gz

[root@k8s-master01 k8s]# ls

kubeadm-basic.images kubeadm-basic.images.tar.gz

#Create the image import script

[root@k8s-master01 k8s]# cat load-images.sh

#!/bin/bash

ls /root/k8s/kubeadm-basic.images > /tmp/images-list.txt

cd /root/k8s/kubeadm-basic.images

for i in `cat /tmp/images-list.txt`

do

docker load -i $i

done

rm -f /tmp/images-list.txt

#Make the script executable and run it

[root@k8s-master01 k8s]# chmod +x load-images.sh

[root@k8s-master01 k8s]# ./load-images.sh
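The import script above goes through a temporary list file and an unquoted loop variable. A slightly more defensive variant is sketched below under the same assumption that every regular file in the directory is an image archive; the function name `load_images` and its optional second argument (the command to run, which allows a dry run with `echo`) are illustrative.

```shell
# Load every regular file found in a directory as a Docker image archive.
# Usage: load_images DIR [COMMAND]
# COMMAND defaults to docker; passing echo gives a dry run that only
# prints the arguments that would be passed.
load_images() {
  dir=$1
  cmd=${2:-docker}
  for archive in "$dir"/*; do
    [ -f "$archive" ] || continue
    "$cmd" load -i "$archive" || return 1
  done
}

# Dry run against the directory used in this article, if it exists.
if [ -d /root/k8s/kubeadm-basic.images ]; then
  load_images /root/k8s/kubeadm-basic.images echo
fi
```

Calling `load_images /root/k8s/kubeadm-basic.images` without the second argument performs the real import and stops at the first archive that fails to load.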

#Check the loaded images

[root@k8s-master01 k8s]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-controller-manager v1.15.1 d75082f1d121 6 months ago 159MB

k8s.gcr.io/kube-proxy v1.15.1 89a062da739d 6 months ago 82.4MB

k8s.gcr.io/kube-scheduler v1.15.1 b0b3c4c404da 6 months ago 81.1MB

k8s.gcr.io/kube-apiserver v1.15.1 68c3eb07bfc3 6 months ago 207MB

k8s.gcr.io/coredns 1.3.1 eb516548c180 12 months ago 40.3MB

k8s.gcr.io/etcd 3.3.10 2c4adeb21b4f 14 months ago 258MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 2 years ago 742kB

#Initialize the node

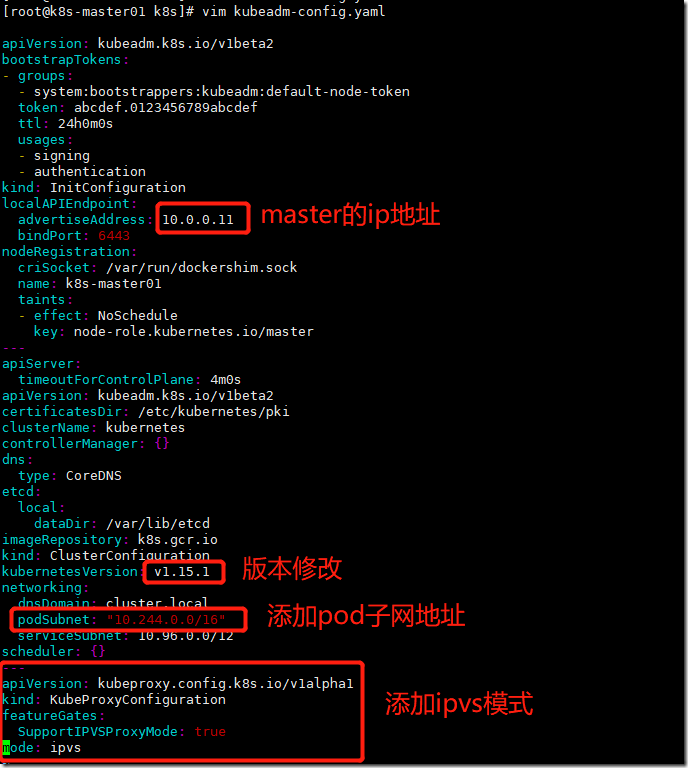

[root@k8s-master01 k8s]# kubeadm config print init-defaults > kubeadm-config.yaml

[root@k8s-master01 k8s]# vim kubeadm-config.yaml

[root@k8s-master01 k8s]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.11
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.15.1
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

#Initialize using the configuration file

[root@k8s-master01 k8s]# kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

Flag --experimental-upload-certs has been deprecated, use --upload-certs instead

[init] Using Kubernetes version: v1.15.1

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.5. Latest validated version: 18.09

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

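#The first attempt stopped at the NumCPU preflight check (kubeadm requires at least 2 CPUs); once the host has 2 or more CPUs, run the same command again

[root@k8s-master01 k8s]# kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log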

Flag --experimental-upload-certs has been deprecated, use --upload-certs instead

[init] Using Kubernetes version: v1.15.1

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.5. Latest validated version: 18.09

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.11]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master01 localhost] and IPs [10.0.0.11 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master01 localhost] and IPs [10.0.0.11 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 22.502653 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

58b7cf30f439297cf587447e6c41a5783c967365ec11df8e975d7117ed8c81a6

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.11:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:11fe8136105caff3d0029fee0111e05aee5ac34d0322828fd634c2a104475d6e

[root@k8s-master01 k8s]#

#Run on the master

[root@k8s-master01 k8s]# mkdir -p $HOME/.kube

[root@k8s-master01 k8s]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 k8s]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

#Check the node status

[root@k8s-master01 k8s]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady master 7m v1.15.1

#The node is still NotReady because the flannel network has not been deployed yet

VI. Installing the flannel plugin

Install flannel on the master node:

[root@k8s-master01 k8s]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8s-master01 k8s]# kubectl create -f kube-flannel.yml

[root@k8s-master01 k8s]# kubectl get pod -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-5c98db65d4-6vgp6 1/1 Running 0 35m
coredns-5c98db65d4-8zbqt 1/1 Running 0 35m
etcd-k8s-master01 1/1 Running 1 34m
kube-apiserver-k8s-master01 1/1 Running 1 35m
kube-controller-manager-k8s-master01 1/1 Running 1 35m
kube-flannel-ds-amd64-z76v7 1/1 Running 0 3m12s #the flannel pod
kube-proxy-qd4xm 1/1 Running 1 35m
kube-scheduler-k8s-master01 1/1 Running 1 34m

[root@k8s-master01 k8s]# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master01 Ready master 37m v1.15.1 #the master is now Ready

VII. Joining the worker nodes to the cluster

#Copy the image archive and the import script to the worker nodes

[root@k8s-master01 k8s]# scp -rp kubeadm-basic.images.tar.gz load-images.sh root@10.0.0.20:~/k8s

[root@k8s-master01 k8s]# scp -rp kubeadm-basic.images.tar.gz load-images.sh root@10.0.0.21:~/k8s

#Import the images (unpack the archive first, as on the master)

[root@k8s-node01 k8s]# ./load-images.sh

#Join the node to the cluster

[root@k8s-node01 ~]# kubeadm join 10.0.0.11:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:11fe8136105caff3d0029fee0111e05aee5ac34d0322828fd634c2a104475d6e
[preflight] Running pre-flight checks
[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.5. Latest validated version: 18.09
[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.
Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

#Run the same commands on node02

--------------------------------------------------------------------------------------------------

#Check the status from the master

[root@k8s-master01 k8s]# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master01 Ready master 65m v1.15.1
k8s-node01 Ready <none> 21m v1.15.1
k8s-node02 Ready <none> 20m v1.15.1

[root@k8s-master01 k8s]# kubectl get pod -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-5c98db65d4-6vgp6 1/1 Running 0 65m
coredns-5c98db65d4-8zbqt 1/1 Running 0 65m
etcd-k8s-master01 1/1 Running 1 64m
kube-apiserver-k8s-master01 1/1 Running 1 64m
kube-controller-manager-k8s-master01 1/1 Running 1 64m
kube-flannel-ds-amd64-m769r 1/1 Running 0 21m
kube-flannel-ds-amd64-sjwph 1/1 Running 0 20m
kube-flannel-ds-amd64-z76v7 1/1 Running 0 32m
kube-proxy-4g57j 1/1 Running 0 21m
kube-proxy-qd4xm 1/1 Running 1 65m
kube-proxy-x66cd 1/1 Running 0 20m
kube-scheduler-k8s-master01 1/1 Running 1 64m
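The bootstrap token in kubeadm-config.yaml has a ttl of 24h0m0s, so a node added later needs a fresh token; on the master, `kubeadm token create --print-join-command` prints a complete new join command. The value passed to --discovery-token-ca-cert-hash can also be recomputed from the cluster CA certificate. The following is a minimal sketch based on the openssl pipeline from the kubeadm documentation, assuming an RSA CA key (the kubeadm default); the function name `ca_cert_hash` is illustrative.

```shell
# Compute the value expected by --discovery-token-ca-cert-hash:
# the SHA-256 digest of the CA certificate's public key in DER form.
# Assumes an RSA key; ca_cert_hash is an illustrative name.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On the master, the CA certificate lives in certificatesDir (/etc/kubernetes/pki).
if [ -r /etc/kubernetes/pki/ca.crt ]; then
  printf 'sha256:%s\n' "$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
fi
```

Run on the master, the printed value should match the sha256 hash shown in the join command from kubeadm init.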

VIII. Installing Harbor

1) Install Docker

[root@harbor ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@harbor ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@harbor ~]# yum update -y && yum install -y docker-ce

[root@harbor ~]# mkdir /etc/docker -p

[root@harbor ~]# mkdir -p /etc/systemd/system/docker.service.d

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"insecure-registries": ["https://hub.dianchou.com"]

}

EOF

[root@harbor ~]# systemctl start docker && systemctl enable docker

#Note: the other nodes must also configure /etc/docker/daemon.json (with the same insecure-registries entry) and restart Docker

2) Install Harbor with docker-compose

#Upload docker-compose and the Harbor offline installer

[root@harbor ~]# ls
anaconda-ks.cfg docker-compose harbor-offline-installer-v1.2.0.tgz

[root@harbor ~]# mv docker-compose /usr/local/bin

[root@harbor ~]# chmod +x /usr/local/bin/docker-compose

[root@harbor ~]# tar xf harbor-offline-installer-v1.2.0.tgz -C /usr/local/

[root@harbor ~]# cd /usr/local/harbor/

[root@harbor harbor]# ls
common docker-compose.notary.yml harbor_1_1_0_template harbor.v1.2.0.tar.gz LICENSE prepare
docker-compose.clair.yml docker-compose.yml harbor.cfg install.sh NOTICE upgrade

[root@harbor harbor]# ll
total 485012
drwxr-xr-x 3 root root 23 Feb 2 16:42 common
-rw-r--r-- 1 root root 1163 Sep 11 2017 docker-compose.clair.yml
-rw-r--r-- 1 root root 1988 Sep 11 2017 docker-compose.notary.yml
-rw-r--r-- 1 root root 3191 Sep 11 2017 docker-compose.yml
-rw-r--r-- 1 root root 4304 Sep 11 2017 harbor_1_1_0_template
-rw-r--r-- 1 root root 4345 Sep 11 2017 harbor.cfg
-rw-r--r-- 1 root root 496209164 Sep 11 2017 harbor.v1.2.0.tar.gz
-rwxr-xr-x 1 root root 5332 Sep 11 2017 install.sh
-rw-r--r-- 1 root root 371640 Sep 11 2017 LICENSE
-rw-r--r-- 1 root root 482 Sep 11 2017 NOTICE
-rwxr-xr-x 1 root root 17592 Sep 11 2017 prepare
-rwxr-xr-x 1 root root 4550 Sep 11 2017 upgrade

#Edit the Harbor configuration file

[root@harbor harbor]# vim harbor.cfg
hostname = hub.dianchou.com
ui_url_protocol = https
#The password for the root user of mysql db, change this before any production use.
db_password = root123
#Maximum number of job workers in job service
max_job_workers = 3
#Determine whether or not to generate certificate for the registry's token.
#If the value is on, the prepare script creates new root cert and private key
#for generating token to access the registry. If the value is off the default key/cert will be used.
#This flag also controls the creation of the notary signer's cert.
customize_crt = on
#The path of cert and key files for nginx, they are applied only the protocol is set to https
ssl_cert = /data/cert/server.crt
ssl_cert_key = /data/cert/server.key
....

#Create the certificate

[root@harbor harbor]# mkdir -p /data/cert

[root@harbor harbor]# cd /data/cert

[root@harbor cert]# openssl genrsa -des3 -out server.key 2048
Generating RSA private key, 2048 bit long modulus
.........................................................+++
.....+++
e is 65537 (0x10001)
Enter pass phrase for server.key:
Verifying - Enter pass phrase for server.key:

[root@harbor cert]# openssl req -new -key server.key -out server.csr
Enter pass phrase for server.key:
You are about to be asked to enter information that will be incorporated
into your certificate request.
What you are about to enter is what is called a Distinguished Name or a DN.
There are quite a few fields but you can leave some blank
For some fields there will be a default value,
If you enter '.', the field will be left blank.
-----
Country Name (2 letter code) [XX]:CN
State or Province Name (full name) []:BJ
Locality Name (eg, city) [Default City]:BJ
Organization Name (eg, company) [Default Company Ltd]:dianchou
Organizational Unit Name (eg, section) []:
Common Name (eg, your name or your server's hostname) []:hub.dianchou.com
Email Address []:352972405@qq.com
Please enter the following 'extra' attributes
to be sent with your certificate request
A challenge password []:123456
An optional company name []:123456

#Remove the passphrase from the private key

[root@harbor cert]# cp server.key server.key.org

[root@harbor cert]# openssl rsa -in server.key.org -out server.key
Enter pass phrase for server.key.org:
writing RSA key

#Self-sign the certificate, valid for 365 days

[root@harbor cert]# openssl x509 -req -days 365 -in server.csr -signkey server.key -out server.crt
Signature ok
subject=/C=CN/ST=BJ/L=BJ/O=dianchou/CN=hub.dianchou.com/emailAddress=352972405@qq.com
Getting Private key

[root@harbor cert]# chmod -R 777 /data/cert

[root@harbor cert]# ls
server.crt server.csr server.key server.key.org

#Run the install script

[root@harbor data]# cd /usr/local/harbor/

[root@harbor harbor]# ./install.sh
...
✔ ----Harbor has been installed and started successfully.----
Now you should be able to visit the admin portal at https://hub.dianchou.com.
For more details, please visit https://github.com/vmware/harbor .
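The interactive certificate steps above (encrypted key, CSR, passphrase removal, self-signing) can be collapsed into a single non-interactive openssl call, which is convenient when the setup is scripted. The following is a minimal sketch, not the procedure used in this article: the function name `make_self_signed` is illustrative, the subject fields are copied from the prompts above, and the demonstration writes to a temporary directory rather than /data/cert.

```shell
# Create an unencrypted 2048-bit RSA key and a self-signed certificate
# valid for 365 days in one step. make_self_signed is an illustrative name.
# Usage: make_self_signed DIR COMMON_NAME
make_self_signed() {
  dir=$1
  cn=$2
  mkdir -p "$dir" &&
  openssl req -x509 -newkey rsa:2048 -nodes \
    -keyout "$dir/server.key" -out "$dir/server.crt" \
    -days 365 -subj "/C=CN/ST=BJ/L=BJ/O=dianchou/CN=$cn" 2>/dev/null
}

# Demonstration in a temporary directory rather than /data/cert.
demo_dir=$(mktemp -d)
make_self_signed "$demo_dir" hub.dianchou.com
openssl x509 -in "$demo_dir/server.crt" -noout -subject -enddate
rm -rf "$demo_dir"
```

Calling `make_self_signed /data/cert hub.dianchou.com` would produce the server.key and server.crt files that harbor.cfg points to.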

Add a hosts entry on the Windows client: 10.0.0.12 hub.dianchou.com

Access test: https://hub.dianchou.com/ (user admin, password Harbor12345)

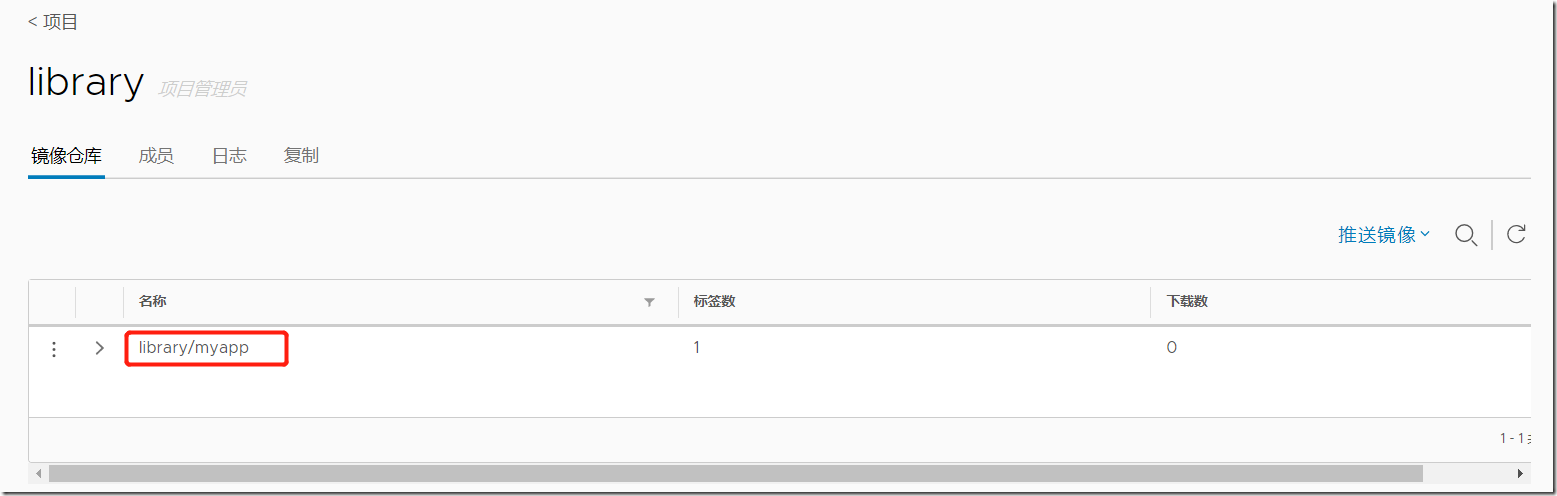

3) Client test

#Add the hosts entry on the node

[root@k8s-node01 ~]# echo "10.0.0.12 hub.dianchou.com" >> /etc/hosts

[root@k8s-node01 ~]# docker login https://hub.dianchou.com
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store
Login Succeeded

#Push an image as a test

[root@k8s-node01 ~]# docker pull wangyanglinux/myapp:v1

[root@k8s-node01 ~]# docker tag wangyanglinux/myapp:v1 hub.dianchou.com/library/myapp:v1

[root@k8s-node01 ~]# docker push hub.dianchou.com/library/myapp:v1

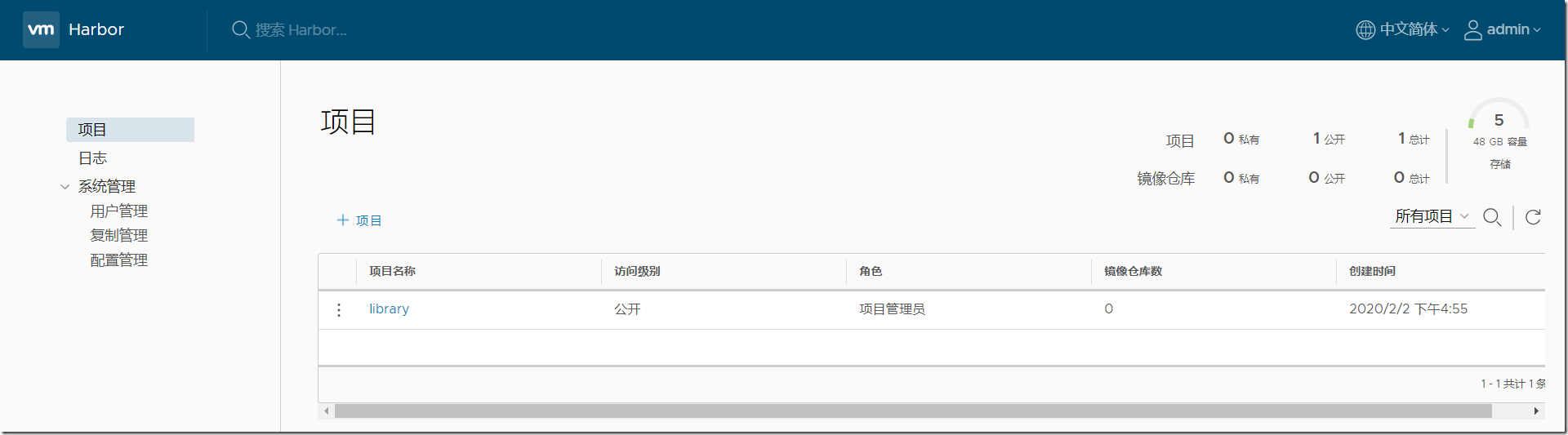

View the image in the browser:

IX. Testing Kubernetes

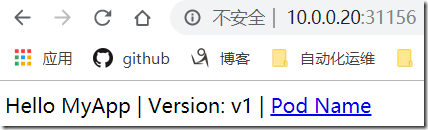

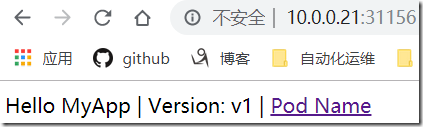

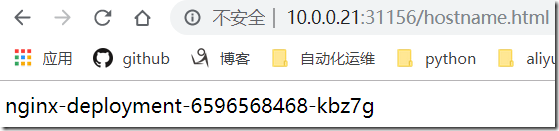

[root@k8s-master01 ~]# kubectl run nginx-deployment --image=hub.dianchou.com/library/myapp:v1 --port=80 --replicas=1
kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.
deployment.apps/nginx-deployment created

[root@k8s-master01 ~]# kubectl get deployment
NAME READY UP-TO-DATE AVAILABLE AGE
nginx-deployment 1/1 1 1 25s

[root@k8s-master01 ~]# kubectl get rs
NAME DESIRED CURRENT READY AGE
nginx-deployment-6596568468 1 1 1 74s

[root@k8s-master01 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-deployment-6596568468-xjg8w 1/1 Running 0 94s

[root@k8s-master01 ~]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-deployment-6596568468-xjg8w 1/1 Running 0 100s 10.244.2.2 k8s-node02 <none> <none>

#Check on node02

[root@k8s-node02 ~]# docker ps -a|grep nginx
2ea7e8d31311 hub.dianchou.com/library/myapp "nginx -g 'daemon of…" 4 minutes ago Up 4 minutes k8s_nginx-deployment_nginx-deployment-6596568468-xjg8w_default_5f34696d-f9c4-467b-b5b5-a98878b1297e_0
c48f1decaa76 k8s.gcr.io/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_nginx-deployment-6596568468-xjg8w_default_5f34696d-f9c4-467b-b5b5-a98878b1297e_0

#Access test

[root@k8s-master01 ~]# curl 10.244.2.2
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@k8s-master01 ~]# curl 10.244.2.2/hostname.html
nginx-deployment-6596568468-xjg8w

#Delete the pod; a new pod is created to replace it

[root@k8s-master01 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-deployment-6596568468-xjg8w 1/1 Running 0 7m49s

[root@k8s-master01 ~]# kubectl delete pod nginx-deployment-6596568468-xjg8w
pod "nginx-deployment-6596568468-xjg8w" deleted

[root@k8s-master01 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-deployment-6596568468-lgk9r 1/1 Running 0 27s

#Scale out the pods

[root@k8s-master01 ~]# kubectl scale --replicas=3 deployment/nginx-deployment
deployment.extensions/nginx-deployment scaled

[root@k8s-master01 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-deployment-6596568468-kbz7g 0/1 ContainerCreating 0 3s
nginx-deployment-6596568468-lbtsb 0/1 ContainerCreating 0 3s
nginx-deployment-6596568468-lgk9r 1/1 Running 0 83s

[root@k8s-master01 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-deployment-6596568468-kbz7g 1/1 Running 0 6s
nginx-deployment-6596568468-lbtsb 1/1 Running 0 6s
nginx-deployment-6596568468-lgk9r 1/1 Running 0 86s

[root@k8s-master01 ~]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-deployment-6596568468-kbz7g 1/1 Running 0 27s 10.244.2.4 k8s-node02 <none> <none>
nginx-deployment-6596568468-lbtsb 1/1 Running 0 27s 10.244.2.3 k8s-node02 <none> <none>
nginx-deployment-6596568468-lgk9r 1/1 Running 0 107s 10.244.1.2 k8s-node01 <none> <none>

Expose a port for external access:

[root@k8s-master01 ~]# kubectl expose --help

[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h14m

[root@k8s-master01 ~]# kubectl get deployment
NAME READY UP-TO-DATE AVAILABLE AGE
nginx-deployment 3/3 3 3 15m

[root@k8s-master01 ~]# kubectl expose deployment nginx-deployment --port=30000 --target-port=80
service/nginx-deployment exposed

[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h15m
nginx-deployment ClusterIP 10.98.45.91 <none> 30000/TCP 28s

#The service listens on port 30000 (--port), so a request to the default port 80 is refused

[root@k8s-master01 ~]# curl 10.98.45.91
curl: (7) Failed connect to 10.98.45.91:80; Connection refused

[root@k8s-master01 ~]# curl 10.98.45.91:30000
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

#Round-robin across the pods

[root@k8s-master01 ~]# curl 10.98.45.91:30000/hostname.html
nginx-deployment-6596568468-lbtsb

[root@k8s-master01 ~]# curl 10.98.45.91:30000/hostname.html
nginx-deployment-6596568468-lgk9r

[root@k8s-master01 ~]# curl 10.98.45.91:30000/hostname.html
nginx-deployment-6596568468-kbz7g

#Check the IPVS (LVS) rules

[root@k8s-master01 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
-> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.96.0.1:443 rr
-> 10.0.0.11:6443 Masq 1 3 0
TCP 10.96.0.10:53 rr
-> 10.244.0.6:53 Masq 1 0 0
-> 10.244.0.7:53 Masq 1 0 0
TCP 10.96.0.10:9153 rr
-> 10.244.0.6:9153 Masq 1 0 0
-> 10.244.0.7:9153 Masq 1 0 0
TCP 10.98.45.91:30000 rr #round-robin
-> 10.244.1.2:80 Masq 1 0 0
-> 10.244.2.3:80 Masq 1 0 0
-> 10.244.2.4:80 Masq 1 0 0
UDP 10.96.0.10:53 rr
-> 10.244.0.6:53 Masq 1 0 0
-> 10.244.0.7:53 Masq 1 0 0

#At this point the service cannot be reached from an external browser; change the service type from ClusterIP to NodePort (type: ClusterIP ==> type: NodePort)

[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h22m
nginx-deployment ClusterIP 10.98.45.91 <none> 30000/TCP 6m41s

[root@k8s-master01 ~]# kubectl edit svc nginx-deployment
service/nginx-deployment edited

[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h25m
nginx-deployment NodePort 10.98.45.91 <none> 30000:31156/TCP 9m38s

#Note: port 31156 is now exposed on every node
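Since the NodePort is opened on every node, the service should answer on each node IP at port 31156. The following is a minimal sketch that lists the resulting URLs; the function name `nodeport_urls` is illustrative, the node IPs come from the table in section I, and the comments show a non-interactive alternative to `kubectl edit` that would have to be run on the master.

```shell
# Non-interactive alternative to "kubectl edit" (run on the master):
#   kubectl patch svc nginx-deployment -p '{"spec":{"type":"NodePort"}}'
#   kubectl get svc nginx-deployment -o jsonpath='{.spec.ports[0].nodePort}'

# Build the URLs under which a NodePort service is reachable.
# Usage: nodeport_urls PORT IP...   (nodeport_urls is an illustrative name)
nodeport_urls() {
  port=$1
  shift
  for ip in "$@"; do
    printf 'http://%s:%s/\n' "$ip" "$port"
  done
}

# Node IPs from the table in section I, port from the kubectl output above.
nodeport_urls 31156 10.0.0.11 10.0.0.20 10.0.0.21
```

Each printed URL can be opened in a browser or passed to curl from a machine that can reach the 10.0.0.0 network.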