我的博客均是原创,转载请注名出处

前言

zookeeper 在分布式系统中主要提供了consensus support, 即一致性协议实现。提起一致性协议,首先想到底是大名顶顶的paxos(E.g. google's chubby), 更新的是raft(e.g. etcd), zookeeper是在paxos 之后,raft之前,它的协议名为ZAB(zookeeper atomic broadcast),笔者觉得它是汲取了paxos之精华。当然17年好像出了几篇更新的paper,因为笔者还没读,不敢妄加揣测consensus protocol的future trend,不过zookeeper因为在hadoop eco-system里的广泛应用,作为一个经典的存在,还是值得聊一聊

安装

wget http://www.gtlib.gatech.edu/pub/apache/zookeeper/stable/zookeeper-3.4.10.tar.gz

export ZK_HOME=$YOUR_FAV_DIR

cp conf/zoo_sample.cfg conf/zoo.cfg

修改一下dataDir即可,tickTime is in milliseconds, minimum session timeout is 2 * tickTime, client port 默认2181

make sure java installed and JAVA_HOME env variable exists. following command could use to find java home:

$(dirname $(dirname $(readlink -f $(which javac))))

运行

zkServer.sh start

(output looks like Using config: .../zoo.cfg Starting zookeeper ... STARTED)

zookeeper pid could be got from following command:

ps -ef | grep zookeeper | grep -v grep | awk '{print $2}'

Or run:

jps

listed as QuorumPeerMain

用java-based shell 连接:

zkCli.sh -server localhost:2181

停止运行

zkServer.sh stop

设置多点cluster, called zookeeper ensemble, the minimum recommended number is 3, 5 is most common in production env.

server.1=zoo1:3000:4000 server.2=zoo2:3000:4000 server.3=zoo3:3000:4000

为了测试的需要,也可以单机上设置多点(好多分布式数据库都是这么玩的,不然你让开发者怎么搞):

server.1=localhost:2345:3345 server.2=localhost:2346:3346 server.3=localhost:2347:3347

当然单机上略微麻烦点,需要搞3个cfg, 比如zoo1.cfg, zoo2.cfg, zoo3.cfg, 同时3个myid, zoo1/myid, zoo2/myid, zoo3/myid,在每个cfg中dataDir都指向相应的zoo1, zoo2, zoo3,同时clientPort为2181,2182,2183, 启动的时候:

zkCli.sh -server localhost:2181, localhost:2182, localhost:2183

Zookeeper 总揽

Detail: http://zookeeper.apache.org/doc/r3.4.10/zookeeperOver.html

Clients connect to a single ZooKeeper server. The client maintains a TCP connection through which it sends requests, gets responses, gets watch events, and sends heart beats. If the TCP connection to the server breaks, the client will connect to a different server.

Zookeeper 数据模型

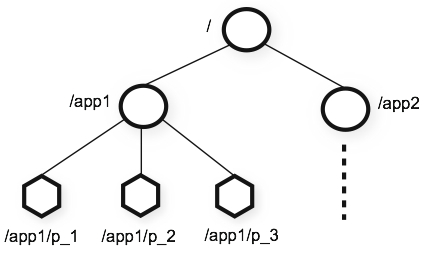

如果把zookeeper的数据模型看作是文件系统,那么zode就是其中的一个节点,有四种znode:

- persistent

- ephemeral

- persistent_sequential

- ephemeral_sequential

persistent znode 的lifetime是知道它们被显示delete, 任何有权限删除的client都可以删除,它主要适用于存储highly available and accessible数据,比如configuration data

ephemeral zonde visible by all clients(受ACL限制), 一旦客户session退出,就会被删除, 当然delete也可以删除,它可以适用于membership service,节点加入,删除,一目了然。

Zookeeper watch

zookeeper client could choose to watch a znode and get notification when there is change, E.g. clientA sets watch on /group, now if clientA does getChildren on /group, [clientA]. Now if clientB joins /group at this time, then clientA will be notified, and client A could getChildren again, now [clientA, clientB] will be returned.

- the watch is ordered in FIFO and dispatched in order

- watch notification delivered to client before other changes happen, although once client received, there could be other things happen there

- order of the watch events is ordered in order seen by zookeeper

另外session timeout是有zookeeper服务器端检测的,所以一个当一个client和一台服务器断开时,watch会被trigger,但是client如果重新加入了另一台同cluster的服务器,由于session ID,所以client的信息会重新被找回(只要不超过expire time)

Kazoo

zookeeper 支持java,C,python(社区支持)还有其它语言(社区支持),这里以Kazoo这个python client binding举例:

watcher:

import signal from kazoo.client import KazooClient from kazoo.recipe.watchers import ChildrenWatch zoo_path = '/MyPath' zk = KazooClient(hosts='localhost:2181') zk.start() zk.ensure_path(zoo_path) @zk.ChildrenWatch(zoo_path) def child_watch_func(children): print "List of Children %s" % children while True: signal.pause()

output looks like

/usr/bin/python child_watch.py List of Children [] List of Children [u'child1'] List of Children [u'child1', u'child2'] List of Children [u'child1', u'child3', u'child2'] List of Children [u'child1', u'child2'] List of Children [u'child1'] List of Children []

On another terminal: WatchedEvent state:SyncConnected type:None path:null [zk: localhost:2181(CONNECTED) 0] create /MyPath/child1 "" Created /MyPath/child1 [zk: localhost:2181(CONNECTED) 1] create /MyPath/child2 "" Created /MyPath/child2 [zk: localhost:2181(CONNECTED) 2] create /MyPath/child3 "" Created /MyPath/child3 [zk: localhost:2181(CONNECTED) 3] delete /MyPath/child3 [zk: localhost:2181(CONNECTED) 4] delete /MyPath/child2 [zk: localhost:2181(CONNECTED) 5] delete /MyPath/child1

Leader election:

from kazoo.client import KazooClient

import time

import uuid

import logging

logging.basicConfig()

my_id = uuid.uuid4()

def leader_func():

print "I am leader : %s" % my_id

while True:

print "%s is still working" % my_id

time.sleep(3)

zk = KazooClient(hosts='127.0.0.1:2181')

zk.start()

election = zk.Election("/electionpath")

election.run(leader_func)

zk.stop()

output looks like:

$ /usr/bin/python leader_election.py I am leader : 961165dc-c6f7-4858-a315-0be40fca1a47 961165dc-c6f7-4858-a315-0be40fca1a47 is still working 961165dc-c6f7-4858-a315-0be40fca1a47 is still working 961165dc-c6f7-4858-a315-0be40fca1a47 is still working 961165dc-c6f7-4858-a315-0be40fca1a47 is still working 961165dc-c6f7-4858-a315-0be40fca1a47 is still working ctrl-C Then another work will become leader

Zookeeper 应用

Barrier

single barrier: to start with, a znode named say "/zk_barrier" is created, clients all watch it, once it not exists, clients do something.

double barrier: block until N number of clients join, then do something, block until N number of clients leave, then do something

Queue

A znode say "queue-znode" is designated to hold a queue, producer adds item to the queue by creating child znode under queue-znode with sequential flag, consumer retrieve the item by getting and deleting a child from queue-znode

Lock

distributed lock, assuming there is a "/lock_node", then each client try creat EPHEMERAL_SEQUENTIAL node, and call get children, if the node someone created is the lowest number, then it's the owner of lock, the next number 需要watch 它之前紧挨着的number,如果那个number突然消失,get_all,重复这个过程

Leader election

和Lock道理差不多,最小的当leader

Group membership

/membership node, getChildren("/membership", true), get with watch, client 刚来时会注册一个ephemeral znode,这样大家都会得到通知,同时getChildren可以告诉当前的membership情况。

2 Phase commit

Service Discovery

比如hosts在/services下面注册服务通过创立ephemeral node,如果是caching可以是/services/caching,如果是storage可以是/services/storage,当clients访问系统的时候,register watch在/services path上,这样新的host加入或离开就会被client知道