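Preface

Data lakes are becoming increasingly popular, and I have started exploring how to adopt one at my company. These notes record my first steps with a data lake and the pitfalls I ran into, so that others can get started more easily later.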

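I. Environment for the Iceberg data lake walkthrough

1. Hadoop version: community edition 2.7.2

2. Hive version: 2.3.6

3. Flink version: 1.11.6. Flink 1.14.2 has already been released, but I chose Flink 1.11 first because the official Iceberg documentation recommends it, which avoids the pitfalls of running on other versions.

From the official documentation: Step.1 Downloading the flink 1.11.x binary package from the apache flink download page. We now use scala 2.12 to archive the apache iceberg-flink-runtime jar, so it’s recommended to use flink 1.11 bundled with scala 2.12.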

II. Starting the Flink SQL client

1. Start a Flink standalone cluster

https://iceberg.apache.org/#flink/#preparation-when-using-flink-sql-client

The commands are as follows (example):

# HADOOP_HOME is your hadoop root directory after unpack the binary package.

export HADOOP_CLASSPATH=`$HADOOP_HOME/bin/hadoop classpath`

# Start the flink standalone cluster

./bin/start-cluster.sh

2. Download the Flink Iceberg runtime jar and start the Flink SQL client

The commands are as follows (example):

Download URL: https://repo.maven.apache.org/maven2/org/apache/iceberg/iceberg-flink-runtime/

iceberg-flink-runtime-xxx.jar

I used iceberg-flink-runtime-0.11.1.jar.
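The jar name and its download location follow the usual Maven repository layout, so both can be derived from the version number. The sketch below only prints the download command. The destination directory /opt/software is the one used later in this post, and the use of curl is an assumption (any downloader works).

```shell
# Derive the runtime jar name and URL from the Iceberg version.
ICEBERG_VERSION="0.11.1"
ARTIFACT="iceberg-flink-runtime"
BASE_URL="https://repo.maven.apache.org/maven2/org/apache/iceberg/$ARTIFACT"

JAR_NAME="$ARTIFACT-$ICEBERG_VERSION.jar"
JAR_URL="$BASE_URL/$ICEBERG_VERSION/$JAR_NAME"
DEST_DIR="/opt/software"   # directory used in this post; adjust as needed

# Dry run: print the command instead of downloading.
echo "curl -fL -o $DEST_DIR/$JAR_NAME $JAR_URL"

# Uncomment to actually download (assumes curl is installed):
# curl -fL -o "$DEST_DIR/$JAR_NAME" "$JAR_URL"
```

Changing ICEBERG_VERSION is enough to target a different release, provided that release is published under the same artifact name.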

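Start the Flink SQL client with the Iceberg jar:

bin/sql-client.sh embedded -j /opt/software/iceberg-flink-runtime-0.11.1.jar shell

3. Create a Hadoop-based catalog

The creation script is shown below. The warehouse path is created automatically.

In the HDFS path, ns is the nameservice; when addressing a NameNode directly, use its ip:port instead.

Run the script in the Flink SQL client: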

CREATE CATALOG hadoop_catalog WITH (

'type'='iceberg',

'catalog-type'='hadoop',

'warehouse'='hdfs://ns/user/hive/warehouse/iceberg_hadoop_catalog',

'property-version'='1'

);

The path /user/hive/warehouse/iceberg_hadoop_catalog/default is created automatically, and it is empty:

[root@hadoop101 ~]# hadoop fs -ls /user/hive/warehouse/iceberg_hadoop_catalog/default
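Since the warehouse URI can name either an HA nameservice or a NameNode address, it can help to render the CREATE CATALOG statement from parameters. This is a minimal sketch: render_catalog_sql is a hypothetical helper, and hadoop101:8020 is an assumed NameNode address (the host name appears in this post, the port is a placeholder).

```shell
# render_catalog_sql NAME AUTHORITY PATH
# Prints a CREATE CATALOG statement for a Hadoop-based Iceberg catalog.
render_catalog_sql() {
  cat <<EOF
CREATE CATALOG $1 WITH (
  'type'='iceberg',
  'catalog-type'='hadoop',
  'warehouse'='hdfs://$2$3',
  'property-version'='1'
);
EOF
}

WAREHOUSE_PATH="/user/hive/warehouse/iceberg_hadoop_catalog"

# Variant 1: HA nameservice, as used in this post.
SQL_NS="$(render_catalog_sql hadoop_catalog ns "$WAREHOUSE_PATH")"

# Variant 2: direct NameNode address (host and port are assumptions).
SQL_NN="$(render_catalog_sql hadoop_catalog hadoop101:8020 "$WAREHOUSE_PATH")"

printf '%s\n\n%s\n' "$SQL_NS" "$SQL_NN"
```

The printed statement can then be pasted into the Flink SQL client.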

Flink SQL> show catalogs;

default_catalog

hadoop_catalog

Create a database:

Flink SQL> create database iceberg_db;

[INFO] Database has been created.

Flink SQL> show databases;

default_database

iceberg_db

Create a table:

Flink SQL> CREATE TABLE `hadoop_catalog`.`default`.`sample` (

> id BIGINT COMMENT 'unique id',

> data STRING

> );

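[INFO] Table has been created.

List the tables. The table does not show up in either database of the current catalog (default_catalog), because it was created in hadoop_catalog: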

Flink SQL> use default_database;

Flink SQL> show tables;

[INFO] Result was empty.

Flink SQL> use iceberg_db;

Flink SQL> show tables;

[INFO] Result was empty.

Looking at the HDFS path instead, the table directory and its metadata have been generated.
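With a Hadoop catalog the table location follows from the warehouse path, the database name, and the table name. The sketch below derives the expected directories for the sample table. The data subdirectory is expected only after rows have been written, and the listing runs only if a hadoop command is on the PATH (an assumption about the machine running it).

```shell
# Expected layout of a Hadoop-catalog table: <warehouse>/<database>/<table>
WAREHOUSE="hdfs://ns/user/hive/warehouse/iceberg_hadoop_catalog"
DB="default"
TABLE="sample"

TABLE_DIR="$WAREHOUSE/$DB/$TABLE"
METADATA_DIR="$TABLE_DIR/metadata"
DATA_DIR="$TABLE_DIR/data"   # appears once data has been written

echo "table dir:    $TABLE_DIR"
echo "metadata dir: $METADATA_DIR"
echo "data dir:     $DATA_DIR"

# List the table directory recursively when hadoop is available.
if command -v hadoop >/dev/null 2>&1; then
  hadoop fs -ls -R "$TABLE_DIR" || true
fi
```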

4. Write and read test (limitations of the Hadoop catalog)

A Hadoop catalog registered in one client can only be used from that client.

Open another SQL client and write data:

bin/sql-client.sh embedded -j /opt/software/iceberg-flink-runtime-0.11.1.jar shell

Flink SQL> INSERT INTO `hadoop_catalog`.`default`.`sample` VALUES (1, 'a');

[INFO] Submitting SQL update statement to the cluster...

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.table.api.TableException: Sink `hadoop_catalog`.`default`.`sample` does not exists

Flink SQL> show databases;

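default_database

The database and table created in the first client are both missing. My first assumption was that this is a limitation of the Hadoop catalog.

Step 1: exit all SQL clients, then start the SQL client again.

Step 2: check whether the table under hadoop_catalog still exists on Hadoop. It does.

Finding 1: the database created earlier is gone, and hadoop_catalog is no longer registered.

Conclusion: after the client exits, the catalog's data on Hadoop is still there, but the client has to create the catalog again. The tables under the catalog do not need to be re-created.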

Flink SQL> show catalogs;

default_catalog

Re-create the catalog:

Flink SQL> CREATE CATALOG hadoop_catalog WITH (

> 'type'='iceberg',

> 'catalog-type'='hadoop',

> 'warehouse'='hdfs://ns/user/hive/warehouse/iceberg_hadoop_catalog',

> 'property-version'='1'

> );

[INFO] Catalog has been created.

Flink SQL> use hadoop_catalog;

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.table.catalog.exceptions.CatalogException: A database with name [hadoop_catalog] does not exist in the catalog: [default_catalog].

Flink SQL> use catalog hadoop_catalog;

Flink SQL> show tables;

sample

sample_like

Flink SQL> show databases;

default

Flink SQL> show catalogs;

default_catalog

hadoop_catalog

Insert two rows, then query them:

Flink SQL> INSERT INTO `hadoop_catalog`.`default`.`sample` VALUES (1, 'a');

[INFO] Submitting SQL update statement to the cluster...

[INFO] Table update statement has been successfully submitted to the cluster:

Job ID: a7008acfe1389133c1ae6a5c00e4d611

Flink SQL> INSERT INTO `hadoop_catalog`.`default`.`sample` VALUES (2, 'b');

[INFO] Submitting SQL update statement to the cluster...

[INFO] Table update statement has been successfully submitted to the cluster:

Job ID: f642dd21e493d630824cb9b30098de3c

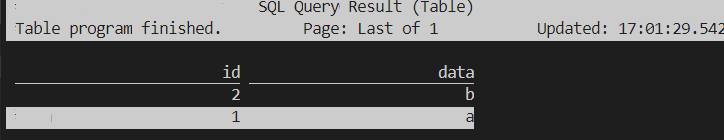

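Flink SQL> select * from sample;

Query result:

Now look at the files on HDFS.

The data directory contains 2 data files.

The metadata directory contains quite a few metadata files.

I did not record the complete metadata after the first run, so I need to rerun this flow and record the full set of changes.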
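One way to capture those changes on the rerun is to save a listing of the metadata directory after every step. This is a minimal sketch: record_metadata is a hypothetical helper, the output directory ./metadata-listings is an arbitrary choice, and when no hadoop command is available it only writes a placeholder line.

```shell
# Save a listing of the table's metadata directory under a step label.
META_DIR="/user/hive/warehouse/iceberg_hadoop_catalog/default/sample/metadata"
OUT_DIR="./metadata-listings"   # arbitrary local directory for the records

record_metadata() {
  label="$1"
  mkdir -p "$OUT_DIR"
  out="$OUT_DIR/$label.txt"
  if command -v hadoop >/dev/null 2>&1; then
    # Keep stderr too, so a failed listing is visible in the record.
    hadoop fs -ls "$META_DIR" > "$out" 2>&1
  else
    echo "hadoop not on PATH; would list $META_DIR" > "$out"
  fi
  echo "$out"
}

# Call once after each step of the walkthrough, for example:
record_metadata after-create
```

Calling it again with labels such as after-insert-1 and after-insert-2 gives files that can be compared with diff to see which metadata files each commit added.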

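5. Create a Hive-based catalog

5.1 Creating the Hive catalog fails

Creation fails. As the output below shows, the cause is a missing Hive dependency.

Syntax for creating the Hive catalog: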

CREATE CATALOG hive_catalog WITH (

'type'='iceberg',

'catalog-type'='hive',

'uri'='thrift://hadoop101:9083',

'clients'='5',

'property-version'='1',

'warehouse'='/user/hive/warehouse'

);

Flink SQL> CREATE CATALOG hive_catalog WITH (

> 'type'='iceberg',

> 'catalog-type'='hive',

> 'uri'='thrift://hadoop101:9083',

> 'clients'='5',

> 'property-version'='1',

> 'warehouse'='/user/hive/warehouse'

> );

>

Exception in thread "main" org.apache.flink.table.client.SqlClientException: Unexpected exception. This is a bug. Please consider filing an issue.

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:222)

Caused by: java.lang.NoClassDefFoundError: org/apache/hadoop/hive/metastore/api/NoSuchObjectException

at org.apache.iceberg.flink.CatalogLoader$HiveCatalogLoader.loadCatalog(CatalogLoader.java:112)

at org.apache.iceberg.flink.FlinkCatalog.<init>(FlinkCatalog.java:111)

at org.apache.iceberg.flink.FlinkCatalogFactory.createCatalog(FlinkCatalogFactory.java:127)

at org.apache.iceberg.flink.FlinkCatalogFactory.createCatalog(FlinkCatalogFactory.java:117)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1110)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeOperation(TableEnvironmentImpl.java:1043)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeSql(TableEnvironmentImpl.java:693)

at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeSql$7(LocalExecutor.java:366)

at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:254)

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeSql(LocalExecutor.java:366)

at org.apache.flink.table.client.cli.CliClient.callDdl(CliClient.java:651)

at org.apache.flink.table.client.cli.CliClient.callDdl(CliClient.java:646)

at org.apache.flink.table.client.cli.CliClient.callCommand(CliClient.java:362)

at java.util.Optional.ifPresent(Optional.java:159)

at org.apache.flink.table.client.cli.CliClient.open(CliClient.java:210)

at org.apache.flink.table.client.SqlClient.openCli(SqlClient.java:147)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:115)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:208)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.metastore.api.NoSuchObjectException

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at org.apache.flink.util.FlinkUserCodeClassLoader.loadClassWithoutExceptionHandling(FlinkUserCodeClassLoader.java:62)

at org.apache.flink.util.ChildFirstClassLoader.loadClassWithoutExceptionHandling(ChildFirstClassLoader.java:65)

at org.apache.flink.util.FlinkUserCodeClassLoader.loadClass(FlinkUserCodeClassLoader.java:47)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 18 more

Shutting down the session...

done.

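Attempted fix: try adding Hive to the classpath. I could not find instructions for this on the official site...

One idea: append the jars under hive/lib directly to the Hadoop classpath. Shouldn't that be enough?

export HADOOP_CLASSPATH=`$HADOOP_HOME/bin/hadoop classpath`:/opt/module/hive/lib/*.jar

After the change I reran it, with no effect.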
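A possible contributing factor: the JVM only expands a classpath wildcard written as a bare `*` (for example `lib/*`). If an entry ending in `*.jar` reaches the JVM unexpanded, it is treated as a literal path that matches nothing. The sketch below builds the variable with the bare-wildcard form. Whether this alone would have resolved the NoClassDefFoundError was not tested in this walkthrough, and the fallback for a missing HADOOP_HOME is only there so the snippet runs anywhere.

```shell
# Build HADOOP_CLASSPATH with a JVM-style directory wildcard for the Hive jars.
HIVE_LIB="/opt/module/hive/lib"   # Hive lib directory used in this post

# Use `hadoop classpath` when Hadoop is installed, otherwise start empty.
if [ -n "${HADOOP_HOME:-}" ] && [ -x "$HADOOP_HOME/bin/hadoop" ]; then
  BASE_CP="$("$HADOOP_HOME/bin/hadoop" classpath)"
else
  BASE_CP=""
fi

# Quoted so the shell passes the '*' through for the JVM to expand.
HIVE_CP="$HIVE_LIB/*"

if [ -n "$BASE_CP" ]; then
  HADOOP_CLASSPATH="$BASE_CP:$HIVE_CP"
else
  HADOOP_CLASSPATH="$HIVE_CP"
fi
export HADOOP_CLASSPATH

echo "$HADOOP_CLASSPATH"
```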

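Keep trying.

Troubleshooting approach:

Analysis: according to the error message, a class could not be found, and the error is raised from this line: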

org.apache.iceberg.flink.CatalogLoader$HiveCatalogLoader.loadCatalog(CatalogLoader.java:112)

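I downloaded the Iceberg source for the 0.11 branch and found that it targets Hive 2.3.7, which is not a major version change from the Hive 2.3.6 I am using.

Next step: the Hive version is ruled out and the Hive classpath has been added, so I went back to the official documentation.

Final fix: add flink-sql-connector-hive-2.3.6_2.11-1.11.0.jar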
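Each extra jar is passed to the SQL client with its own -j flag. The sketch below assembles that command from a list of jars and warns about any jar that is missing. It only prints the command (a dry run). The jar paths are the ones used in this post, and the Flink home /opt/module/flink-1.11.6 is taken from the log output in this post.

```shell
# Assemble the sql-client command with one -j flag per jar (dry run).
FLINK_HOME="/opt/module/flink-1.11.6"
JARS="/opt/software/iceberg-flink-runtime-0.11.1.jar /opt/software/flink-sql-connector-hive-2.3.6_2.11-1.11.0.jar"

CMD="$FLINK_HOME/bin/sql-client.sh embedded"
# Word splitting on $JARS is intentional; the paths contain no spaces.
for jar in $JARS; do
  [ -f "$jar" ] || echo "warning: $jar not found" >&2
  CMD="$CMD -j $jar"
done
CMD="$CMD shell"

# Print the command; run it by hand once the warnings are resolved.
echo "$CMD"
```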

[root@hadoop101 software]# bin/sql-client.sh embedded -j /opt/software/iceberg-flink-runtime-0.11.1.jar -j /opt/software/flink-sql-connector-hive-2.3.6_2.11-1.11.0.jar shell

5.2 Catalog created successfully

[root@hadoop101 software]# sql-client.sh embedded -j /opt/software/iceberg-flink-runtime-0.11.1.jar -j /opt/software/flink-sql-connector-hive-2.3.6_2.11-1.11.0.jar shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/flink-1.11.6/lib/log4j-slf4j-impl-2.16.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

No default environment specified.

Searching for '/opt/module/flink-1.11.6/conf/sql-client-defaults.yaml'...found.

Reading default environment from: file:/opt/module/flink-1.11.6/conf/sql-client-defaults.yaml

No session environment specified.

Command history file path: /root/.flink-sql-history

Welcome! Enter 'HELP;' to list all available commands. 'QUIT;' to exit.

Flink SQL> CREATE CATALOG hive_catalog WITH (

> 'type'='iceberg',

> 'catalog-type'='hive',

> 'uri'='thrift://hadoop101:9083',

> 'clients'='5',

> 'property-version'='1',

> 'hive-conf-dir'='/opt/module/hive/conf'

> );

2022-01-13 10:58:27,528 INFO org.apache.hadoop.hive.conf.HiveConf [] - Found configuration file null

2022-01-13 10:58:27,741 WARN org.apache.hadoop.hive.conf.HiveConf [] - HiveConf of name hive.metastore.event.db.notification.api.auth does not exist

[INFO] Catalog has been created.

5.3 Test multi-client support of the Hive-based catalog

Check in this client:

Flink SQL> show catalogs;

default_catalog

hive_catalog

Open a new client (without exiting the first one):

Flink SQL> show catalogs;

default_catalog

Flink SQL> show databases;

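default_database

Conclusion: hive_catalog is not visible, which shows that for both Hadoop and Hive catalogs, a catalog registered in one client is not shared with other clients.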
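Because the registration lives in the client session, every new session has to declare the catalogs again. The Iceberg Flink documentation also describes registering catalogs in sql-client-defaults.yaml before starting the client. The sketch below writes such a catalogs section to a separate file for manual merging. The file name is arbitrary, the property values are copied from the statements in this post, and this variant was not run as part of the walkthrough.

```shell
# Write a catalogs section that could be merged into
# $FLINK_HOME/conf/sql-client-defaults.yaml (file name here is arbitrary).
SNIPPET="./iceberg-catalogs.yaml"

cat > "$SNIPPET" <<'EOF'
catalogs:
  - name: hadoop_catalog
    type: iceberg
    catalog-type: hadoop
    warehouse: hdfs://ns/user/hive/warehouse/iceberg_hadoop_catalog
  - name: hive_catalog
    type: iceberg
    catalog-type: hive
    uri: thrift://hadoop101:9083
    clients: 5
    warehouse: /user/hive/warehouse
EOF

cat "$SNIPPET"
```

The defaults file normally already contains a catalogs entry, so the section should be merged by hand rather than appended blindly.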

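Summary

I followed the official guide: [link](https://iceberg.apache.org/#flink/).

1. Iceberg supports 3 catalog storage mechanisms.

2. So far I have tried the first one, storing on Hadoop. With this approach, multiple clients cannot share the catalog, so it cannot go to production.

3. A Hive-based catalog is needed.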