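After crawling the overall data last time, this time I used MapReduce to derive the URL of each letter from that data, so that more detailed data can be crawled from each letter's page.

By inspecting the site, I found that each letter type has its own detail-page URL. The correspondence is as follows: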

Consultation (咨询): com.web.consult.consultDetail.flow

Suggestion (建议): com.web.suggest.suggesDetail.flow

Complaint (投诉): com.web.complain.complainDetail.flow
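
The correspondence above can be sketched in plain Java, independent of Hadoop. This is only an illustration: the class name `LetterUrls`, the method `buildUrl`, and the id `12345` are made up for the example, while the base path and the `originalId` query parameter are the ones used in the job in this post.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical helper, not part of the original job.
final class LetterUrls {
    // Base path as used in the MapReduce job in this post.
    static final String BASE = "http://www.beijing.gov.cn/hudong/hdjl/";

    // Letter type (first CSV column) -> detail page name.
    static final Map<String, String> PAGES = new LinkedHashMap<>();
    static {
        PAGES.put("咨询", "com.web.consult.consultDetail.flow");   // consultation
        PAGES.put("建议", "com.web.suggest.suggesDetail.flow");    // suggestion
        PAGES.put("投诉", "com.web.complain.complainDetail.flow"); // complaint
    }

    // Returns the detail URL, or empty if the type is not one of the three known types.
    static Optional<String> buildUrl(String type, String originalId) {
        String page = PAGES.get(type);
        if (page == null) {
            return Optional.empty();
        }
        return Optional.of(BASE + page + "?originalId=" + originalId);
    }

    public static void main(String[] args) {
        // "12345" is a made-up id used only for illustration.
        System.out.println(buildUrl("咨询", "12345").orElse("unknown type"));
    }
}
```

Keeping the mapping in a table rather than an if/else chain makes it easier to add a fourth letter type later, should the site introduce one.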

So we generate the corresponding URL for each record based on its letter type.

package xinjian;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class Url {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        conf.set("mapred.textoutputformat.ignoreseparator", "true");
        // Separate key and value in the output with a comma
        conf.set("mapred.textoutputformat.separator", ",");
        Job job = Job.getInstance(conf);

        // Number of reduce tasks (left at the default)
        // job.setNumReduceTasks(92);

        // Locate the job jar through this class
        job.setJarByClass(Url.class);

        // Mapper and reducer classes used by this job (the nested classes of Url)
        job.setMapperClass(doMapper.class);
        job.setReducerClass(doReducer.class);

        // The types are already given as generic parameters, but they are set
        // explicitly as well, in case the framework uses a different type.
        // Key/value types of the mapper output
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);

        // Key/value types of the final output
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);

        // Input file and output directory on HDFS
        Path in = new Path("hdfs://192.168.43.42:9000/user/hadoop/myapp/xinjian/data.csv");
        Path out = new Path("hdfs://192.168.43.42:9000/user/hadoop/myapp/xinjian/data2");
        FileInputFormat.addInputPath(job, in);
        FileOutputFormat.setOutputPath(job, out);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }

    public static class doMapper extends Mapper<LongWritable, Text, Text, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Each input line is expected to start with: letter type, original id
            String[] values = value.toString().split(",");
            if (values.length < 2) {
                return; // skip lines that lack a type or an id
            }
            String url = "http://www.beijing.gov.cn/hudong/hdjl/";
            if (values[0].equals("咨询")) { // consultation
                url += "com.web.consult.consultDetail.flow?originalId=" + values[1];
            } else if (values[0].equals("建议")) { // suggestion
                url += "com.web.suggest.suggesDetail.flow?originalId=" + values[1];
            } else if (values[0].equals("投诉")) { // complaint
                url += "com.web.complain.complainDetail.flow?originalId=" + values[1];
            } else {
                url = "url"; // placeholder for an unrecognized letter type
            }
            // Emit (original id, detail URL)
            context.write(new Text(values[1]), new Text(url));
        }
    }

    public static class doReducer extends Reducer<Text, Text, Text, Text> {

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            // Pass every (id, url) pair through unchanged
            for (Text value : values) {
                context.write(key, value);
            }
        }
    }

}

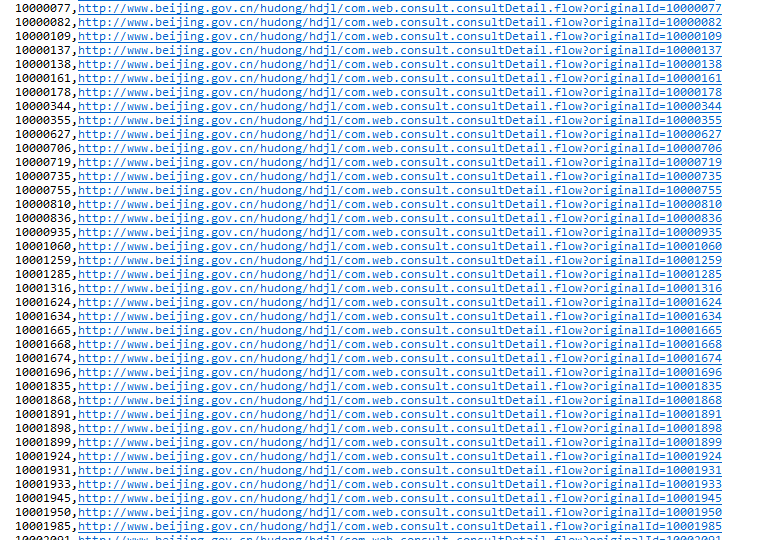

Running the job produces the URL of each letter, which is then used for the next round of crawling.
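
As a rough sketch of how the next step might consume this output, the snippet below parses lines of the form `id,url`, which is what the comma separator configured in the job suggests the output looks like. The class name `JobOutputParser` and the sample lines are hypothetical. Lines whose value is not a real URL, such as the `url` placeholder the mapper writes for unrecognized letter types, are skipped.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for reading the job output; not part of the original code.
final class JobOutputParser {

    // Turns "id,url" lines into {id, url} pairs, skipping malformed lines
    // and values that are not HTTP URLs.
    static List<String[]> parse(List<String> lines) {
        List<String[]> result = new ArrayList<>();
        for (String line : lines) {
            int comma = line.indexOf(',');
            if (comma <= 0) {
                continue; // no separator, or empty id
            }
            String id = line.substring(0, comma).trim();
            String url = line.substring(comma + 1).trim();
            if (!url.startsWith("http")) {
                continue; // e.g. the "url" placeholder
            }
            result.add(new String[] {id, url});
        }
        return result;
    }

    public static void main(String[] args) {
        // Made-up sample lines, only for illustration.
        List<String> lines = Arrays.asList(
            "12345,http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=12345",
            "67890,url");
        for (String[] pair : parse(lines)) {
            System.out.println(pair[0] + " -> " + pair[1]);
        }
    }
}
```

Splitting on the first comma only keeps the URL intact even if it happens to contain a comma itself.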