# Official GitHub example:

https://github.com/kubernetes/examples/tree/master/volumes/cephfs/

# Kubernetes documentation:

https://kubernetes.io/docs/concepts/storage/volumes/#cephfs

# Reference

https://blog.51cto.com/tryingstuff/2386821

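1. Steps to connect Kubernetes to Ceph

1.1 Install the Ceph client on the Kubernetes hosts (every node that needs to access Ceph)

Install ceph-common on all nodes.

Add the Ceph yum repository (for example as a file under /etc/yum.repos.d/):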

[Ceph]
name=Ceph packages for $basearch
baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/$basearch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[Ceph-noarch]
name=Ceph noarch packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

Install ceph-common:

yum install ceph-common -y

If the installation fails with dependency errors, enable EPEL first and then retry:

yum install -y yum-utils && \
yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/ && \
yum install --nogpgcheck -y epel-release && \
rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7 && \
rm -f /etc/yum.repos.d/dl.fedoraproject.org*

yum -y install ceph-common

1.2 Copy the Ceph configuration (optional)

Copy the Ceph configuration directory to every Kubernetes node:

[root@node1 ~]# scp -r /etc/ceph k8s-node:/etc/

1.3 Create the pool, user, and image (on the Ceph cluster)

# 1. Create a pool

[root@node1 ~]# ceph osd pool create kubernetes 64 64
pool 'kubernetes' created

# 2. Create a user named client.k8s that may only use the kubernetes pool

[root@node1 ~]# ceph auth get-or-create client.k8s mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=kubernetes'

[client.k8s]

key = AQD+pg9gjreqMRAAtwq4dQnwX0kX4Vx6TueAJQ==

# 3. List the users

[root@node1 ~]# ceph auth ls

client.k8s

key: AQD+pg9gjreqMRAAtwq4dQnwX0kX4Vx6TueAJQ==

caps: [mon] allow r

caps: [osd] allow class-read object_prefix rbd_children,allow rwx pool=kubernetes

# 4. Base64-encode the user's key (this is encoding, not encryption); the Secret below needs it

[root@node1 ~]# echo AQD+pg9gjreqMRAAtwq4dQnwX0kX4Vx6TueAJQ== | base64

QVFEK3BnOWdqcmVxTVJBQXR3cTRkUW53WDBrWDRWeDZUdWVBSlE9PQo=
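`echo` appends a newline, so the string above also encodes that `\n`. That is why it ends in `Qo=`. The following minimal Python sketch reproduces the encoding with and without the newline. `echo -n KEY | base64` gives the newline-free variant. The helper name `secret_value` is hypothetical.

```python
import base64

# cephx key of client.k8s as printed by `ceph auth get-or-create` above
CEPHX_KEY = "AQD+pg9gjreqMRAAtwq4dQnwX0kX4Vx6TueAJQ=="


def secret_value(key: str, trailing_newline: bool = False) -> str:
    """Return the base64 string to place under a Secret's `data:` field.

    trailing_newline=True mimics `echo KEY | base64` (the newline is encoded too);
    trailing_newline=False mimics `echo -n KEY | base64`.
    """
    raw = key + ("\n" if trailing_newline else "")
    return base64.b64encode(raw.encode("ascii")).decode("ascii")


if __name__ == "__main__":
    print(secret_value(CEPHX_KEY, trailing_newline=True))  # matches the output above
    print(secret_value(CEPHX_KEY))                          # newline-free variant
    # Kubernetes base64-decodes `data` values, so the plugin receives the key text itself
    assert base64.b64decode(secret_value(CEPHX_KEY)).decode("ascii") == CEPHX_KEY
```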

# 5. Create an RBD image

[root@node1 ~]# rbd create -p kubernetes --image rbd.img --size 3G
[root@node1 ~]# rbd -p kubernetes ls
rbd.img

# 6. Disable image features that the kernel RBD client on older kernels (such as CentOS 7) does not support; otherwise mapping the image fails

[root@node1 ~]# rbd feature disable kubernetes/rbd.img deep-flatten
[root@node1 ~]# rbd feature disable kubernetes/rbd.img fast-diff
[root@node1 ~]# rbd feature disable kubernetes/rbd.img object-map
[root@node1 ~]# rbd feature disable kubernetes/rbd.img exclusive-lock
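The four commands in step 6 must run in this order because RBD image features depend on one another. object-map requires exclusive-lock, fast-diff requires object-map, and journaling requires exclusive-lock. A feature can only be disabled once nothing still enabled depends on it. Below is a small sketch that derives a valid order from those dependencies. The table and function names are illustrative, not part of the rbd CLI.

```python
# Dependencies between RBD image features: key requires every feature in its value set.
RBD_FEATURE_DEPS = {
    "layering": set(),
    "exclusive-lock": set(),
    "object-map": {"exclusive-lock"},
    "fast-diff": {"object-map"},
    "deep-flatten": set(),
    "journaling": {"exclusive-lock"},
}


def disable_order(to_disable):
    """Order features so each one is disabled before the features it depends on."""
    remaining = set(to_disable)
    order = []
    while remaining:
        # a feature is ready once no other remaining feature depends on it
        ready = sorted(
            f for f in remaining
            if not any(f in RBD_FEATURE_DEPS[g] for g in remaining if g != f)
        )
        if not ready:
            raise ValueError("cyclic feature dependencies")
        order.extend(ready)
        remaining -= set(ready)
    return order


def is_valid_order(order):
    """True if no feature is disabled while a still-enabled one (from the list) needs it."""
    still_enabled = set(order)
    for feature in order:
        still_enabled.discard(feature)
        if any(feature in RBD_FEATURE_DEPS[g] for g in still_enabled):
            return False
    return True


if __name__ == "__main__":
    print(disable_order(["exclusive-lock", "object-map", "fast-diff", "deep-flatten"]))
```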

# 7. Look up the monitor addresses

[root@node1 ~]# ceph mon dump

dumped monmap epoch 3

epoch 3

fsid 081dc49f-2525-4aaa-a56d-89d641cef302

last_changed 2021-01-24 02:09:24.404424

created 2021-01-24 02:00:36.999143

min_mon_release 14 (nautilus)

0: [v2:192.168.1.129:3300/0,v1:192.168.1.129:6789/0] mon.node1

1: [v2:192.168.1.130:3300/0,v1:192.168.1.130:6789/0] mon.node2

2: [v2:192.168.1.131:3300/0,v1:192.168.1.131:6789/0] mon.node3
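Two values from this output are used later: the fsid, and the msgr v1 endpoints on port 6789. The fsid serves as the ceph-csi clusterID, and the v1 endpoints go into the `monitors` lists. Here is a minimal sketch that pulls both out of the plain-text dump. `parse_mon_dump` is a hypothetical helper; `ceph mon dump -f json` is a sturdier source if you script this for real.

```python
import re

MON_DUMP = """\
fsid 081dc49f-2525-4aaa-a56d-89d641cef302
0: [v2:192.168.1.129:3300/0,v1:192.168.1.129:6789/0] mon.node1
1: [v2:192.168.1.130:3300/0,v1:192.168.1.130:6789/0] mon.node2
2: [v2:192.168.1.131:3300/0,v1:192.168.1.131:6789/0] mon.node3
"""


def parse_mon_dump(text):
    """Return (fsid, [v1 monitor addresses]) from plain `ceph mon dump` output."""
    fsid = None
    monitors = []
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("fsid "):
            fsid = line.split()[1]
        # v1 (legacy messenger) endpoints look like v1:IP:PORT/NONCE
        match = re.search(r"v1:([0-9.]+:\d+)/\d+", line)
        if match:
            monitors.append(match.group(1))
    return fsid, monitors


if __name__ == "__main__":
    print(parse_mon_dump(MON_DUMP))
```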

2. Using Ceph in a Pod volume

The steps above created everything we need on the Ceph cluster; now use it from the Kubernetes cluster.

# 1. Create a working directory

[root@master1 ~]# mkdir -p /app/ceph_test; cd /app/ceph_test

# 2. Write the Secret manifest

[root@master1 ceph_test]# cat ceph-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
type: "kubernetes.io/rbd"
data:
  key: QVFEK3BnOWdqcmVxTVJBQXR3cTRkUW53WDBrWDRWeDZUdWVBSlE9PQo= # the base64-encoded string of the already-base64-encoded key `ceph auth get-key` outputs

# 3. Create the Secret

[root@master1 ceph_test]# kubectl apply -f ceph-secret.yaml

secret/ceph-secret created

[root@master1 ceph_test]# kubectl get secret

NAME TYPE DATA AGE

ceph-secret kubernetes.io/rbd 1 4m1s

# 4. Write the Pod manifest

[root@master1 ceph_test]# cat cephfs.yaml
apiVersion: v1
kind: Pod
metadata:
  name: rbd-demo
spec:
  containers:
  - name: rbd-demo-nginx
    image: nginx
    volumeMounts:
    - mountPath: "/data"
      name: rbd-demo
  volumes:
  - name: rbd-demo
    rbd:
      monitors:            # Ceph monitor addresses
      - 192.168.1.129:6789
      - 192.168.1.130:6789
      - 192.168.1.131:6789
      pool: kubernetes     # pool name
      image: rbd.img       # RBD image name
      user: k8s            # the Ceph user created above (client.k8s)
      secretRef:
        name: ceph-secret

# 5. Create the Pod

[root@master1 ceph_test]# kubectl apply -f cephfs.yaml
pod/rbd-demo created

# 6. Verify that the rbd.img image is mounted in the container

[root@master1 ceph_test]# kubectl exec -it rbd-demo -- bash

root@rbd-demo:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 47G 4.1G 43G 9% /

tmpfs 64M 0 64M 0% /dev

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/rbd0 2.9G 9.0M 2.9G 1% /data   # the RBD image is mounted here

/dev/mapper/centos-root 47G 4.1G 43G 9% /etc/hosts

shm 64M 0 64M 0% /dev/shm

tmpfs 2.0G 12K 2.0G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 2.0G 0 2.0G 0% /proc/acpi

tmpfs 2.0G 0 2.0G 0% /proc/scsi

tmpfs 2.0G 0 2.0G 0% /sys/firmware

# Then write a test file

root@rbd-demo:/# cd /data/

root@rbd-demo:/data# echo aaa > test

# 7. The Pod runs on the Kubernetes node node01; the mapped device is also visible on that host

[root@node1 ~]# df -h | grep rbd

/dev/rbd0 2.9G 9.1M 2.9G 1% /var/lib/kubelet/plugins/kubernetes.io/rbd/mounts/kubernetes-image-rbd.img

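3. Integrating Ceph with PV and PVC

Prerequisite: create the pool, image, and user as in step 1.3, and the Secret as in section 2. The PV below uses its own image, rbd1.img, so create it (and disable its features) the same way.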

3.1 Create the PV

# 1. PV manifest

[root@master1 ceph_test]# cat ceph-rbd-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-rbd-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  rbd:
    monitors:              # Ceph monitor addresses
    - 192.168.1.129:6789
    - 192.168.1.130:6789
    - 192.168.1.131:6789
    pool: kubernetes       # pool name
    image: rbd1.img        # RBD image name
    user: k8s              # the Ceph user created above (client.k8s)
    secretRef:
      name: ceph-secret
    fsType: ext4
    readOnly: false
  # Kubernetes only implements Recycle for NFS and hostPath; Retain is the safer choice for RBD
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: rbd

# 2. Create the PV

[root@master1 ceph_test]# kubectl apply -f ceph-rbd-pv.yaml
persistentvolume/ceph-rbd-pv created

# 3. Check the PV

[root@master1 ceph_test]# kubectl get pv ceph-rbd-pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

ceph-rbd-pv 1Gi RWO Recycle Available rbd 51s

3.2 Create the PVC

# 1. PVC manifest

[root@master1 ceph_test]# cat ceph-rbd-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-rbd-pv-claim
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: ceph-rbd-pv
  resources:
    requests:
      storage: 1Gi
  storageClassName: rbd

# 2. Apply the PVC

[root@master1 ceph_test]# kubectl apply -f ceph-rbd-pvc.yaml
persistentvolumeclaim/ceph-rbd-pv-claim created

# 3. Check the PVC

[root@master1 ceph_test]# kubectl get pvc ceph-rbd-pv-claim

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ceph-rbd-pv-claim Bound ceph-rbd-pv 1Gi RWO rbd 30s
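The claim shows `Bound` because it lines up with the PV on several points. `volumeName` pins it to ceph-rbd-pv, both use storageClassName `rbd`, RWO is among the PV's access modes, and 1Gi fits the PV's capacity. The following is a simplified model of those checks, not the actual PV controller code. The class and function names are hypothetical, and only the binary suffixes Ki through Ti are handled.

```python
from dataclasses import dataclass

_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a binary-suffix quantity such as '1Gi' to bytes (sketch: Ki..Ti only)."""
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


@dataclass
class PV:
    name: str
    capacity: str
    access_modes: frozenset
    storage_class: str


@dataclass
class PVC:
    name: str
    request: str
    access_modes: frozenset
    storage_class: str
    volume_name: str = ""


def can_bind(pv: PV, pvc: PVC) -> bool:
    """Simplified subset of the checks made before a PVC is bound to a PV."""
    if pvc.volume_name and pvc.volume_name != pv.name:
        return False
    return (
        pv.storage_class == pvc.storage_class
        and pvc.access_modes <= pv.access_modes
        and to_bytes(pv.capacity) >= to_bytes(pvc.request)
    )
```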

3.3 Use the PVC in a Pod

# 1. Pod manifest

[root@master1 ceph_test]# cat pod-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: rbd-nginx
spec:
  containers:
  - image: nginx
    name: rbd-rw
    ports:
    - name: www
      protocol: TCP
      containerPort: 80
    volumeMounts:
    - name: rbd-pvc
      mountPath: /mnt
  volumes:
  - name: rbd-pvc
    persistentVolumeClaim:
      claimName: ceph-rbd-pv-claim

# 2. Apply the Pod

[root@master1 ceph_test]# kubectl apply -f pod-demo.yaml
pod/rbd-nginx created

# 3. Check the Pod

[root@master1 ceph_test]# kubectl get pod rbd-nginx

NAME READY STATUS RESTARTS AGE

rbd-nginx 1/1 Running 0 22s

四、StorageClass集成Ceph(最终的方案)

CEPH官网:

https://docs.ceph.com/en/latest/rbd/rbd-kubernetes/?highlight=CSI

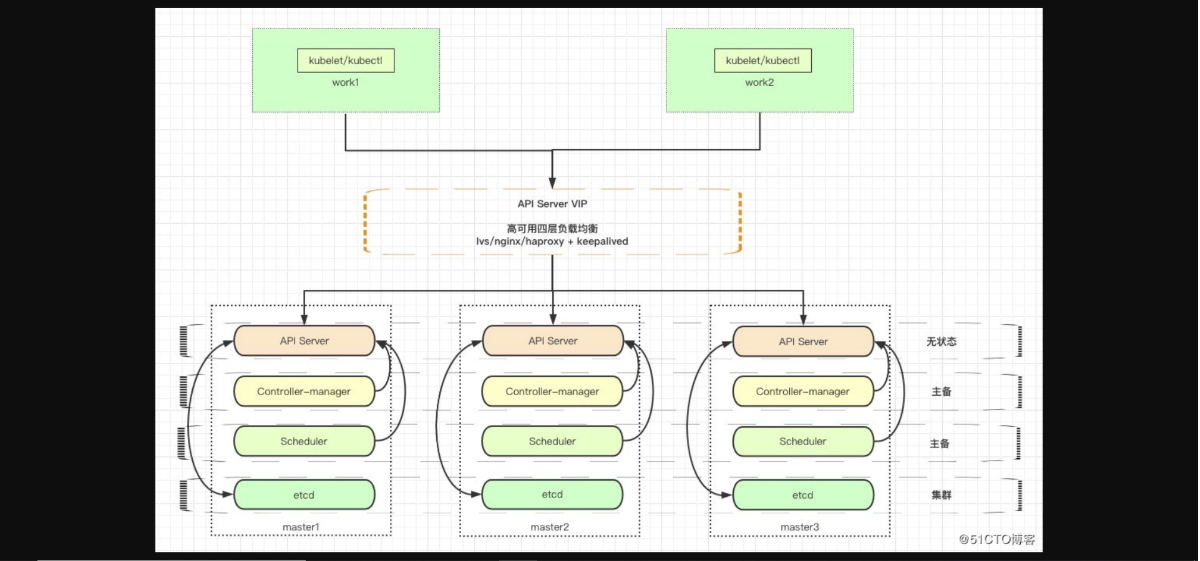

CSI架构图:

4.1、集成步骤(ceph集群上)

# 1、创建池

# 默认情况下,Ceph块设备使用该rbd池。为Kubernetes卷存储创建一个池。确保您的Ceph集群正在运行,然后创建池。

[root@node1 ~]# ceph osd pool create kubernetes

[root@node1 ~]# rbd pool init kubernetes

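# 2. Set up Ceph client authentication (ceph-csi needs the "profile rbd" caps, including on mgr)
# If client.k8s already exists from step 1.3 with different caps, get-or-create refuses to run;
# in that case update the existing user's caps instead:
#   ceph auth caps client.k8s mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'

[root@node1 ~]# ceph auth get-or-create client.k8s mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'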

[client.k8s]

key = AQD+pg9gjreqMRAAtwq4dQnwX0kX4Vx6TueAJQ==

# 3. Collect the values for the ceph-csi ConfigMap (the cluster fsid and the monitor addresses)

[root@node1 ~]# ceph mon dump

dumped monmap epoch 3

epoch 3

fsid 081dc49f-2525-4aaa-a56d-89d641cef302

last_changed 2021-01-24 02:09:24.404424

created 2021-01-24 02:00:36.999143

min_mon_release 14 (nautilus)

0: [v2:192.168.1.129:3300/0,v1:192.168.1.129:6789/0] mon.node1

1: [v2:192.168.1.130:3300/0,v1:192.168.1.130:6789/0] mon.node2

2: [v2:192.168.1.131:3300/0,v1:192.168.1.131:6789/0] mon.node3

4.2 On the Kubernetes nodes

# 1. Create the ConfigMap

[root@master1 ~]# mkdir -p /app/csi; cd /app/csi

[root@master1 csi]# cat csi-config-map.yaml
apiVersion: v1
kind: ConfigMap
data:
  # clusterID is the fsid shown by `ceph mon dump`; keep comments out of the JSON below
  config.json: |-
    [
      {
        "clusterID": "081dc49f-2525-4aaa-a56d-89d641cef302",
        "monitors": [
          "192.168.1.129:6789",
          "192.168.1.130:6789",
          "192.168.1.131:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config

# 2. Apply and check the ConfigMap

[root@master1 csi]# kubectl apply -f csi-config-map.yaml

configmap/ceph-csi-config created

[root@master1 csi]# kubectl get cm ceph-csi-config

NAME DATA AGE

ceph-csi-config 1 19s
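`config.json` is parsed as strict JSON, and JSON has no comment syntax. A shell-style `# ...` note inside the block scalar therefore makes the whole config unreadable. The following sketch renders the value with `json.dumps` and shows that a `#` comment breaks parsing. `build_csi_config` and `is_valid_json` are hypothetical helpers.

```python
import json

CLUSTER_ID = "081dc49f-2525-4aaa-a56d-89d641cef302"  # fsid from `ceph mon dump`
MONITORS = ["192.168.1.129:6789", "192.168.1.130:6789", "192.168.1.131:6789"]


def build_csi_config(cluster_id, monitors):
    """Render the config.json value for the ceph-csi-config ConfigMap."""
    return json.dumps([{"clusterID": cluster_id, "monitors": monitors}], indent=2)


def is_valid_json(text):
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


if __name__ == "__main__":
    config = build_csi_config(CLUSTER_ID, MONITORS)
    print(config)
    # the same text with a shell-style comment appended after the clusterID value
    commented = config.replace(f'"{CLUSTER_ID}",', f'"{CLUSTER_ID}", # from ceph mon dump', 1)
    print(is_valid_json(config), is_valid_json(commented))
```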

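# 3. Create the ceph-csi cephx Secret

[root@master1 csi]# cat csi-rbd-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  # Ceph user name without the "client." prefix. Note from the original setup:
  # using admin here avoided errors in the later steps; a non-admin user such as k8s
  # needs the "profile rbd" caps from step 4.1.
  userID: k8s
  # Plain cephx key: values under stringData must NOT be base64-encoded.
  userKey: AQD+pg9gjreqMRAAtwq4dQnwX0kX4Vx6TueAJQ==

# 4. Create and check the Secret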

[root@master1 csi]# kubectl apply -f csi-rbd-secret.yaml

secret/csi-rbd-secret created

[root@master1 csi]# kubectl get secret csi-rbd-secret

NAME TYPE DATA AGE

csi-rbd-secret Opaque 2 14s
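This Secret uses `stringData`, so the key is written in plain text. Kubernetes base64-encodes it into `data` when storing it, which is why `DATA` shows 2 entries. Putting an already-encoded key under `stringData` would encode it twice. The sketch below builds the Secret from this section and converts it to the equivalent `data` form. Both function names are hypothetical.

```python
import base64

USER_ID = "k8s"
USER_KEY = "AQD+pg9gjreqMRAAtwq4dQnwX0kX4Vx6TueAJQ=="  # plain cephx key


def secret_with_string_data(name, user_id, user_key):
    """The Secret as written in csi-rbd-secret.yaml: plain values under stringData."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": "default"},
        "stringData": {"userID": user_id, "userKey": user_key},
    }


def to_data_form(secret):
    """Equivalent Secret that uses `data`, where every value must be base64-encoded."""
    converted = {k: v for k, v in secret.items() if k != "stringData"}
    converted["data"] = {
        k: base64.b64encode(v.encode("ascii")).decode("ascii")
        for k, v in secret["stringData"].items()
    }
    return converted


if __name__ == "__main__":
    print(to_data_form(secret_with_string_data("csi-rbd-secret", USER_ID, USER_KEY))["data"])
```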

4.2.0 Create one more ConfigMap (the KMS encryption settings; the ceph-csi Pods below mount it, so it has to exist even if encryption is not used)

[root@master1 csi]# cat kms-config.yaml
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    {
      "vault-test": {
        "encryptionKMSType": "vault",
        "vaultAddress": "http://vault.default.svc.cluster.local:8200",
        "vaultAuthPath": "/v1/auth/kubernetes/login",
        "vaultRole": "csi-kubernetes",
        "vaultPassphraseRoot": "/v1/secret",
        "vaultPassphrasePath": "ceph-csi/",
        "vaultCAVerify": "false"
      }
    }
metadata:
  name: ceph-csi-encryption-kms-config

# Create and check

[root@master1 csi]# kubectl apply -f kms-config.yaml

configmap/ceph-csi-encryption-kms-config created

[root@master1 csi]# kubectl get cm

NAME DATA AGE

ceph-csi-config 1 59m

ceph-csi-encryption-kms-config 1 15s

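4.2.1 Configure the ceph-csi plugin (on the Kubernetes cluster)

Create the required ServiceAccount and RBAC ClusterRole/ClusterRoleBinding objects. These objects usually need no customization for your Kubernetes environment, so they can be used as-is from the ceph-csi deployment YAMLs: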

$ kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-provisioner-rbac.yaml

$ kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml

# 1. File contents

[root@master1 csi]# cat csi-nodeplugin-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-csi-nodeplugin
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get"]
  # allow to read Vault Token and connection options from the Tenants namespace
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
subjects:
  - kind: ServiceAccount
    name: rbd-csi-nodeplugin
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-csi-nodeplugin
  apiGroup: rbac.authorization.k8s.io

-------------------------------------------------------------------------

[root@master1 csi]# cat csi-provisioner-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-csi-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-external-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims/status"]
    verbs: ["update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshots"]
    verbs: ["get", "list"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents"]
    verbs: ["create", "get", "list", "watch", "update", "delete"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments"]
    verbs: ["get", "list", "watch", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments/status"]
    verbs: ["patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["csinodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents/status"]
    verbs: ["update"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-provisioner-role
subjects:
  - kind: ServiceAccount
    name: rbd-csi-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-external-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # replace with non-default namespace name
  namespace: default
  name: rbd-external-provisioner-cfg
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "list", "watch", "create", "update", "delete"]
  - apiGroups: ["coordination.k8s.io"]
    resources: ["leases"]
    verbs: ["get", "watch", "list", "delete", "update", "create"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-provisioner-role-cfg
  # replace with non-default namespace name
  namespace: default
subjects:
  - kind: ServiceAccount
    name: rbd-csi-provisioner
    # replace with non-default namespace name
    namespace: default
roleRef:
  kind: Role
  name: rbd-external-provisioner-cfg
  apiGroup: rbac.authorization.k8s.io

# 2. Apply both files

[root@master1 csi]# kubectl apply -f csi-nodeplugin-rbac.yaml -f csi-provisioner-rbac.yaml

serviceaccount/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

serviceaccount/rbd-csi-provisioner created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

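4.2.2 Create the ceph-csi provisioner and node plugin

Finally, create the ceph-csi provisioner and node plugin. Apart from possibly the ceph-csi container release version, these objects usually need no customization for your Kubernetes environment, so they can be used as-is from the ceph-csi deployment YAMLs: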

$ wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml

$ kubectl apply -f csi-rbdplugin-provisioner.yaml

$ wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin.yaml

$ kubectl apply -f csi-rbdplugin.yaml

# 1. File contents

[root@master1 csi]# cat csi-rbdplugin.yaml
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: csi-rbdplugin
spec:
  selector:
    matchLabels:
      app: csi-rbdplugin
  template:
    metadata:
      labels:
        app: csi-rbdplugin
    spec:
      serviceAccount: rbd-csi-nodeplugin
      hostNetwork: true
      hostPID: true
      # to use e.g. Rook orchestrated cluster, and mons' FQDN is
      # resolved through k8s service, set dns policy to cluster first
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: driver-registrar
          # This is necessary only for systems with SELinux, where
          # non-privileged sidecar containers cannot access unix domain socket
          # created by privileged CSI driver container.
          securityContext:
            privileged: true
          image: k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1
          args:
            - "--v=5"
            - "--csi-address=/csi/csi.sock"
            - "--kubelet-registration-path=/var/lib/kubelet/plugins/rbd.csi.ceph.com/csi.sock"
          env:
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        - name: csi-rbdplugin
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
            allowPrivilegeEscalation: true
          # for stable functionality replace canary with latest release version
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=rbd"
            - "--nodeserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
            # If topology based provisioning is desired, configure required
            # node labels representing the nodes topology domain
            # and pass the label names below, for CSI to consume and advertise
            # its equivalent topology domain
            # - "--domainlabels=failure-domain/region,failure-domain/zone"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # - name: POD_NAMESPACE
            #   valueFrom:
            #     fieldRef:
            #       fieldPath: spec.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - mountPath: /dev
              name: host-dev
            - mountPath: /sys
              name: host-sys
            - mountPath: /run/mount
              name: host-mount
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: ceph-csi-encryption-kms-config
              mountPath: /etc/ceph-csi-encryption-kms-config/
            - name: plugin-dir
              mountPath: /var/lib/kubelet/plugins
              mountPropagation: "Bidirectional"
            - name: mountpoint-dir
              mountPath: /var/lib/kubelet/pods
              mountPropagation: "Bidirectional"
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
        - name: liveness-prometheus
          securityContext:
            privileged: true
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8680"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: socket-dir
          hostPath:
            path: /var/lib/kubelet/plugins/rbd.csi.ceph.com
            type: DirectoryOrCreate
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins
            type: Directory
        - name: mountpoint-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry/
            type: Directory
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-sys
          hostPath:
            path: /sys
        - name: host-mount
          hostPath:
            path: /run/mount
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: ceph-csi-encryption-kms-config
          configMap:
            name: ceph-csi-encryption-kms-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }
---
# This is a service to expose the liveness metrics
apiVersion: v1
kind: Service
metadata:
  name: csi-metrics-rbdplugin
  labels:
    app: csi-metrics
spec:
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8680
  selector:
    app: csi-rbdplugin

---------------------------------------------------------------------------------------------

[root@master1 csi]# cat csi-rbdplugin-provisioner.yaml
kind: Service
apiVersion: v1
metadata:
  name: csi-rbdplugin-provisioner
  labels:
    app: csi-metrics
spec:
  selector:
    app: csi-rbdplugin-provisioner
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8680
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: csi-rbdplugin-provisioner
spec:
  replicas: 3
  selector:
    matchLabels:
      app: csi-rbdplugin-provisioner
  template:
    metadata:
      labels:
        app: csi-rbdplugin-provisioner
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - csi-rbdplugin-provisioner
              topologyKey: "kubernetes.io/hostname"
      serviceAccount: rbd-csi-provisioner
      containers:
        - name: csi-provisioner
          image: k8s.gcr.io/sig-storage/csi-provisioner:v2.0.4
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
            - "--timeout=150s"
            - "--retry-interval-start=500ms"
            - "--leader-election=true"
            # set it to true to use topology based provisioning
            - "--feature-gates=Topology=false"
            # if fstype is not specified in storageclass, ext4 is default
            - "--default-fstype=ext4"
            - "--extra-create-metadata=true"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-snapshotter
          image: k8s.gcr.io/sig-storage/csi-snapshotter:v3.0.2
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
            - "--timeout=150s"
            - "--leader-election=true"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          securityContext:
            privileged: true
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-attacher
          image: k8s.gcr.io/sig-storage/csi-attacher:v3.0.2
          args:
            - "--v=5"
            - "--csi-address=$(ADDRESS)"
            - "--leader-election=true"
            - "--retry-interval-start=500ms"
          env:
            - name: ADDRESS
              value: /csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-resizer
          image: k8s.gcr.io/sig-storage/csi-resizer:v1.0.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
            - "--timeout=150s"
            - "--leader-election"
            - "--retry-interval-start=500ms"
            - "--handle-volume-inuse-error=false"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-rbdplugin
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
          # for stable functionality replace canary with latest release version
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=rbd"
            - "--controllerserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
            - "--pidlimit=-1"
            - "--rbdhardmaxclonedepth=8"
            - "--rbdsoftmaxclonedepth=4"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # - name: POD_NAMESPACE
            #   valueFrom:
            #     fieldRef:
            #       fieldPath: spec.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - mountPath: /dev
              name: host-dev
            - mountPath: /sys
              name: host-sys
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: ceph-csi-encryption-kms-config
              mountPath: /etc/ceph-csi-encryption-kms-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
        - name: csi-rbdplugin-controller
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
          # for stable functionality replace canary with latest release version
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--type=controller"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
            - "--drivernamespace=$(DRIVER_NAMESPACE)"
          env:
            - name: DRIVER_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
        - name: liveness-prometheus
          image: quay.io/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8680"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-sys
          hostPath:
            path: /sys
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: socket-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: ceph-csi-encryption-kms-config
          configMap:
            name: ceph-csi-encryption-kms-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }

# 2. Apply both files

[root@master1 csi]# kubectl apply -f csi-rbdplugin-provisioner.yaml -f csi-rbdplugin.yaml

service/csi-rbdplugin-provisioner created

deployment.apps/csi-rbdplugin-provisioner created

daemonset.apps/csi-rbdplugin created

service/csi-metrics-rbdplugin created
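The provisioner Deployment asks for `replicas: 3`. It also has a required pod anti-affinity on `kubernetes.io/hostname`, so no two provisioner Pods may share a node. On a cluster with fewer than three schedulable nodes, some replicas therefore stay `Pending`. Nodes tainted against ordinary workloads, such as a kubeadm control-plane node, do not count. Lowering `replicas` avoids this. The following is a deliberately simplified placement sketch; `place_replicas` is hypothetical and ignores resources and other constraints.

```python
def place_replicas(replicas, nodes):
    """Place replicas under a required anti-affinity on kubernetes.io/hostname.

    nodes: mapping of node name -> True if ordinary Pods may be scheduled there.
    Returns (nodes that get one replica each, number of replicas left Pending).
    """
    eligible = sorted(name for name, schedulable in nodes.items() if schedulable)
    running = eligible[:replicas]  # at most one replica per node
    return running, replicas - len(running)


if __name__ == "__main__":
    print(place_replicas(3, {"master1": False, "node1": True, "node2": True}))
```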

4.2.3 Using Ceph block devices

# 1. The StorageClass will use this Secret

[root@master1 ~]# kubectl get secret
NAME TYPE DATA AGE
csi-rbd-secret Opaque 2 99m

# 2. StorageClass manifest

[root@master1 csi]# cat csi-rbd-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com   # CSI driver name
parameters:
  # must match a clusterID in the ceph-csi-config ConfigMap (the fsid shown by `ceph mon dump` or `ceph -s`)
  clusterID: 081dc49f-2525-4aaa-a56d-89d641cef302
  pool: kubernetes              # pool in which images are created
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret    # the Secret created in 4.2
  csi.storage.k8s.io/provisioner-secret-namespace: default      # namespace of that Secret
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
mountOptions:
  - discard

# 3. Create and check

[root@master1 csi]# kubectl apply -f csi-rbd-sc.yaml

storageclass.storage.k8s.io/csi-rbd-sc created

[root@master1 csi]# kubectl get storageclass.storage.k8s.io/csi-rbd-sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

csi-rbd-sc rbd.csi.ceph.com Delete Immediate false 17s
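ceph-csi does not store monitor addresses in the StorageClass. It looks up the StorageClass's `clusterID` in the `ceph-csi-config` ConfigMap. If the two IDs differ, provisioning fails because no monitors can be found. The following is a simplified model of that lookup. `monitors_for` and `cluster_known` are hypothetical helpers, not ceph-csi functions.

```python
import json

# config.json as stored in the ceph-csi-config ConfigMap
CSI_CONFIG_JSON = """[
  {"clusterID": "081dc49f-2525-4aaa-a56d-89d641cef302",
   "monitors": ["192.168.1.129:6789", "192.168.1.130:6789", "192.168.1.131:6789"]}
]"""

# parameters of the csi-rbd-sc StorageClass
SC_PARAMETERS = {"clusterID": "081dc49f-2525-4aaa-a56d-89d641cef302", "pool": "kubernetes"}


def monitors_for(sc_parameters, csi_config_json):
    """Return the monitor list for the StorageClass's clusterID, or raise LookupError."""
    for cluster in json.loads(csi_config_json):
        if cluster["clusterID"] == sc_parameters["clusterID"]:
            return cluster["monitors"]
    raise LookupError(f"clusterID {sc_parameters['clusterID']} not found in ceph-csi-config")


def cluster_known(sc_parameters, csi_config_json):
    try:
        monitors_for(sc_parameters, csi_config_json)
        return True
    except LookupError:
        return False


if __name__ == "__main__":
    print(monitors_for(SC_PARAMETERS, CSI_CONFIG_JSON))
```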

Create a PersistentVolumeClaim (from now on, creating a PVC provisions the PV automatically)

For example, to create a block-based PersistentVolumeClaim that uses the ceph-csi-based StorageClass created above, the following YAML requests raw block storage from the csi-rbd-sc StorageClass:

$ cat <<EOF > raw-block-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

$ kubectl apply -f raw-block-pvc.yaml

The following example binds the PersistentVolumeClaim above to a Pod as a raw block device:

$ cat <<EOF > raw-block-pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-raw-block-volume
spec:
  containers:
    - name: fc-container
      image: fedora:26
      command: ["/bin/sh", "-c"]
      args: ["tail -f /dev/null"]
      volumeDevices:
        - name: data
          devicePath: /dev/xvda
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: raw-block-pvc
EOF

$ kubectl apply -f raw-block-pod.yaml

To create a filesystem-based PersistentVolumeClaim that uses the same ceph-csi-based StorageClass, the following YAML requests a mounted filesystem (backed by an RBD image) from the csi-rbd-sc StorageClass:

$ cat <<EOF > pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

$ kubectl apply -f pvc.yaml

The following example binds that PersistentVolumeClaim to a Pod as a mounted filesystem:

$ cat <<EOF > pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
EOF

$ kubectl apply -f pod.yaml
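The two Pods differ in how they consume their claims. A `volumeMode: Block` claim is handed to the container through `volumeDevices`/`devicePath`, while a `Filesystem` claim goes through `volumeMounts`/`mountPath`. The following is a simplified check of that pairing, applied to the two containers above. `consumes_correctly` is a hypothetical helper, not a Kubernetes API.

```python
def consumes_correctly(volume_mode, container, volume_name):
    """Block claims must appear under volumeDevices, Filesystem claims under volumeMounts."""
    devices = {d["name"] for d in container.get("volumeDevices", [])}
    mounts = {m["name"] for m in container.get("volumeMounts", [])}
    if volume_mode == "Block":
        return volume_name in devices and volume_name not in mounts
    if volume_mode == "Filesystem":
        return volume_name in mounts and volume_name not in devices
    raise ValueError(f"unknown volumeMode: {volume_mode}")


RAW_BLOCK_CONTAINER = {"name": "fc-container",
                       "volumeDevices": [{"name": "data", "devicePath": "/dev/xvda"}]}
WEB_CONTAINER = {"name": "web-server",
                 "volumeMounts": [{"name": "mypvc", "mountPath": "/var/lib/www/html"}]}

if __name__ == "__main__":
    print(consumes_correctly("Block", RAW_BLOCK_CONTAINER, "data"))
    print(consumes_correctly("Filesystem", WEB_CONTAINER, "mypvc"))
```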

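5. Using a StorageClass (final approach)

What a StorageClass does

In short, a StorageClass holds what is needed to reach Ceph RBD (monitor IP/port, user name, keyring, pool, and so on), so images no longer have to be created in advance. When a user creates a PVC, Kubernetes looks for the StorageClass that the PVC requests and, if it exists, performs these steps in order:

- Create an image on the Ceph cluster

- Create a PV named pvc-xx-xxx-xxx whose size is the storage requested by the PVC

- Bind that PV to the PVC, then format it and mount it into the container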
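The sequence above can be modeled as a toy function. Look up the StorageClass, create an image in its pool, create a PV named `pvc-<claim UID>` with the requested size, then bind the two. This only illustrates the flow under simplifying assumptions, not the provisioner's code. `provision` and the image name format are hypothetical, and the UID is random here.

```python
import uuid


def provision(pvc, storage_classes, images):
    """Toy model of dynamic provisioning for a PVC that names a StorageClass."""
    sc = storage_classes.get(pvc["storageClassName"])
    if sc is None:
        # without a matching StorageClass nothing is provisioned and the PVC stays Pending
        raise LookupError("no such StorageClass")
    uid = pvc.setdefault("uid", str(uuid.uuid4()))
    pv_name = f"pvc-{uid}"
    # 1. create the RBD image in the class's pool (image name format is illustrative)
    images.append((sc["pool"], f"image-for-{pv_name}"))
    # 2. create a PV sized as the claim requested
    pv = {"name": pv_name, "capacity": pvc["request"],
          "storageClassName": pvc["storageClassName"], "claimRef": pvc["name"]}
    # 3. bind: the claim now points at the new PV
    pvc["volumeName"] = pv_name
    pvc["phase"] = "Bound"
    return pv


if __name__ == "__main__":
    created_images = []
    claim = {"name": "rbd-pvc-pod-pvc", "storageClassName": "fast", "request": "1Gi"}
    print(provision(claim, {"fast": {"pool": "k8s"}}, created_images), created_images)
```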

This way the administrator only has to create the StorageClass, and users can take care of the rest themselves. To keep storage from being exhausted, set a ResourceQuota.
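A ResourceQuota can cap storage per StorageClass through the `<class>.storageclass.storage.k8s.io/requests.storage` and `<class>.storageclass.storage.k8s.io/persistentvolumeclaims` resources. The following sketch builds such a quota for the `fast` class as a JSON manifest, which `kubectl apply -f` also accepts. The quota name and the 20Gi / 10-claim limits are made-up example values.

```python
import json


def storage_quota(namespace, storage_class, max_storage, max_claims):
    """ResourceQuota limiting the PVCs of one StorageClass in a namespace."""
    prefix = f"{storage_class}.storageclass.storage.k8s.io"
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{storage_class}-storage-quota", "namespace": namespace},
        "spec": {"hard": {
            f"{prefix}/requests.storage": max_storage,          # total requested size
            f"{prefix}/persistentvolumeclaims": str(max_claims),  # number of claims
        }},
    }


if __name__ == "__main__":
    # e.g. python quota.py > quota.json && kubectl apply -f quota.json
    print(json.dumps(storage_quota("default", "fast", "20Gi", 10), indent=2))
```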

When a Pod needs a volume, it simply declares one through a PVC, and a matching persistent volume is created on demand.

Create the StorageClass

# cat storageclass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast
provisioner: kubernetes.io/rbd
parameters:
  monitors: 192.168.20.41:6789
  adminId: admin
  adminSecretName: ceph-secret
  pool: k8s
  userId: admin
  userSecretName: ceph-secret
  fsType: xfs
  imageFormat: "2"            # image format 2, required for imageFeatures
  imageFeatures: "layering"   # only layering is enabled

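Create the PVC

RBD volumes support only ReadWriteOnce and ReadOnlyMany, not ReadWriteMany. The difference lies in whether the volume can be mounted on several nodes at the same time; on a single node, even a ReadWriteOnce volume can be mounted into two containers at once.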
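The rule above boils down to counting distinct nodes. Under ReadWriteOnce, any number of Pods may use the volume as long as they all run on one node. The `...Many` modes allow several nodes. The following is a minimal sketch of that rule, with hypothetical names, that ignores every other scheduling detail.

```python
# Access modes the in-tree RBD volume supports (no ReadWriteMany)
RBD_ACCESS_MODES = {"ReadWriteOnce", "ReadOnlyMany"}


def can_attach(access_mode, pod_nodes):
    """Can Pods running on these nodes all use one volume with this access mode at once?"""
    if access_mode == "ReadWriteOnce":
        return len(set(pod_nodes)) <= 1  # many Pods allowed, but only on a single node
    if access_mode in ("ReadOnlyMany", "ReadWriteMany"):
        return True
    raise ValueError(f"unknown access mode: {access_mode}")


if __name__ == "__main__":
    print(can_attach("ReadWriteOnce", ["node1", "node1"]))
    print(can_attach("ReadWriteOnce", ["node1", "node2"]))
```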

When deploying an application, create both the PVC and the Pod: the PVC sets storageClassName to the name of the StorageClass created above (fast), and the Pod refers to the PVC through claimName.

# cat pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: rbd-pvc-pod-pvc
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: fast

Create the Pod

# cat pod.yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: rbd-pvc-pod
  name: ceph-rbd-sc-pod1
spec:
  containers:
  - name: ceph-rbd-sc-nginx
    image: nginx
    volumeMounts:
    - name: ceph-rbd-vol1
      mountPath: /mnt
      readOnly: false
  volumes:
  - name: ceph-rbd-vol1
    persistentVolumeClaim:
      claimName: rbd-pvc-pod-pvc

Addendum

With a StorageClass, persistent volumes can be declared not only through a PVC but also through volumeClaimTemplates (the storage settings of a StatefulSet). When several replicas are involved, use a StatefulSet:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "fast"
      resources:
        requests:
          storage: 1Gi

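Do not use a Deployment for this, though. With a single replica a Deployment behaves like the Pod above, but with more than one replica only one Pod starts and the others stay in ContainerCreating; after a while `kubectl describe pod` shows they have been waiting a long time for the volume. All replicas share the same ReadWriteOnce claim, so replicas scheduled on other nodes cannot attach the RBD image.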
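A StatefulSet avoids this because every replica gets its own claim, and so its own RBD image. Claim names follow the pattern `<template name>-<StatefulSet name>-<ordinal>`. A Deployment's replicas, by contrast, all reference a single `claimName`. The following small sketch lists the claims the StatefulSet above would create. `statefulset_claim_names` is a hypothetical helper.

```python
def statefulset_claim_names(template_name, statefulset, replicas):
    """PVC names created from volumeClaimTemplates: one per replica ordinal."""
    return [f"{template_name}-{statefulset}-{i}" for i in range(replicas)]


if __name__ == "__main__":
    # volumeClaimTemplates name "www", StatefulSet "nginx", replicas: 3
    print(statefulset_claim_names("www", "nginx", 3))
```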
