CentOS 7 base installation:

https://www.cnblogs.com/hongfeng2019/p/11353249.html

Install the JDK:

https://www.cnblogs.com/hongfeng2019/p/11270113.html

scp -r /opt/cloudera xxx:/opt

vim /etc/profile

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:/opt/cloudera/parcels/CDH/bin

source /etc/profile

cd /opt/cloudera/parcels/CDH/lib/hadoop/etc/hadoop

In hadoop-env.sh, explicitly declare JAVA_HOME again:

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

Download hadoop-3.0.0:

wget https://archive.apache.org/dist/hadoop/common/hadoop-3.0.0/hadoop-3.0.0.tar.gz

tar -zxf hadoop-3.0.0.tar.gz -C /hongfeng/software/

vim /etc/profile

export HADOOP_HOME=/hongfeng/software/hadoop-3.0.0

PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile
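
Because /etc/profile is edited and re-sourced several times in these notes, the same directories can end up on PATH more than once. A minimal sketch of a guard against that, assuming a POSIX shell; `path_append` is a hypothetical helper name, not something shipped with CentOS or Hadoop:

```shell
# Append a directory to PATH only if it is not already listed.
# path_append is a hypothetical helper, named here for illustration only.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                 # already present: do nothing
        *) PATH="$PATH:$1" ;;        # otherwise append at the end
    esac
}

# Same settings as the Hadoop lines above, but safe to source repeatedly.
export HADOOP_HOME=/hongfeng/software/hadoop-3.0.0
path_append "$HADOOP_HOME/bin"
path_append "$HADOOP_HOME/sbin"
export PATH
```

Sourcing the file a second time leaves PATH unchanged, since both directories are already found by the `case` match.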

Copy the cluster's client configuration files:

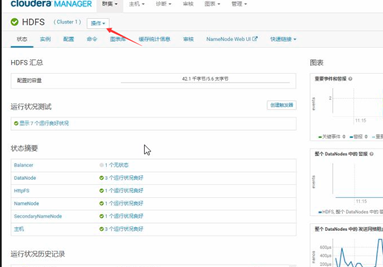

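Select HDFS, click into one of the nodes, and choose "Download Client Configuration" from the Actions menu.

Copy the downloaded files into the following directory, overwriting the existing ones: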

/hongfeng/software/hadoop-3.0.0/etc/hadoop
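
Overwriting the stock configuration is hard to undo, so it may be worth keeping a copy first. A rough sketch, assuming the downloaded client configuration has already been unpacked into a directory; `install_client_conf` is a hypothetical helper and the backup naming is only one possible convention:

```shell
# install_client_conf SRC_DIR DEST_DIR  (hypothetical helper)
# Back up DEST_DIR next to itself, then copy every file from SRC_DIR over it.
install_client_conf() {
    src=$1
    dest=$2
    [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
    if [ -d "$dest" ]; then
        # Keep the existing configuration so the overwrite can be undone.
        cp -a "$dest" "$dest.bak.$(date +%Y%m%d%H%M%S)" || return 1
    fi
    mkdir -p "$dest" || return 1
    # "$src"/. copies the directory contents, including dotfiles.
    cp -a "$src"/. "$dest"/
}
```

It could be called as `install_client_conf ./hadoop-conf /hongfeng/software/hadoop-3.0.0/etc/hadoop`, where `./hadoop-conf` stands for wherever the downloaded files were unpacked (an assumed location).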

Also copy /etc/hosts from the cluster node to this machine.
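
Rather than replacing the local /etc/hosts outright, the cluster entries can be merged into it. The following is only a sketch under that assumption; `merge_hosts` is a hypothetical helper, and it deliberately skips loopback lines because those belong to the local machine:

```shell
# merge_hosts SRC DEST  (hypothetical helper)
# Append each host entry of SRC to DEST unless DEST already has that exact line.
merge_hosts() {
    while IFS= read -r line || [ -n "$line" ]; do
        case "$line" in
            ''|'#'*) continue ;;       # skip blank lines and comments
            127.*|::1*) continue ;;    # skip loopback entries
        esac
        grep -qxF -- "$line" "$2" || printf '%s\n' "$line" >> "$2"
    done < "$1"
}
```

For example, `merge_hosts ./hosts.cluster /etc/hosts` run as root, where `./hosts.cluster` is an assumed name for the copy taken from the node. Running it twice adds nothing the second time.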

Download Hive:

wget https://archive.apache.org/dist/hive/hive-2.1.1/apache-hive-2.1.1-bin.tar.gz

tar -xzvf apache-hive-2.1.1-bin.tar.gz -C /hongfeng/software

vim /etc/profile

export HIVE_HOME=/hongfeng/software/apache-hive-2.1.1-bin

export PATH=$PATH:$HIVE_HOME/bin

# Copy the Hive client configuration files from CDH

In hive-env.sh:

export HADOOP_HOME=${HADOOP_HOME}

scp /usr/share/java/mysql-connector-java.jar 10.52.61.177:/hongfeng/software/apache-hive-2.1.1-bin/lib

Hive fails at startup with this error:

Unrecognized Hadoop major version number: 3.0.0

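Solution:

Apache Hive 2.1.1 does not recognize Hadoop 3.x as a supported major version. Download the CDH builds of Hadoop, Hive, and HBase that match the cluster's CDH version instead of the Apache releases.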
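
A quick way to see which builds are actually on PATH is to look at the version strings the tools report. This is a sketch only: it assumes the first output line has the form `Hadoop <version>` or `Hive <version>` and that CDH builds carry a `-cdh` suffix (for example `3.0.0-cdh6.3.2`, used here purely as an illustration); `version_of` and `is_cdh_build` are hypothetical helpers.

```shell
# version_of OUTPUT: print the second word of the first line,
# e.g. "Hadoop 3.0.0-cdh6.3.2" -> "3.0.0-cdh6.3.2".
version_of() {
    printf '%s\n' "$1" | awk 'NR == 1 { print $2 }'
}

# is_cdh_build VERSION: succeed if the version string contains "-cdh".
is_cdh_build() {
    case "$1" in
        *-cdh*) return 0 ;;
        *)      return 1 ;;
    esac
}

# Report each tool that is installed; tools not on PATH are skipped.
for tool in hadoop hive; do
    command -v "$tool" >/dev/null 2>&1 || continue
    case "$tool" in
        hadoop) out=$(hadoop version 2>/dev/null) ;;
        hive)   out=$(hive --version 2>/dev/null) ;;
    esac
    v=$(version_of "$out")
    if is_cdh_build "$v"; then
        echo "$tool $v: CDH build"
    else
        echo "$tool $v: not a CDH build"
    fi
done
```

If Hive cannot start at all, its version string may come back empty, in which case the script reports it as not a CDH build.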