Config files all live under /etc/<service>. To confirm, look at Hadoop's classpath:

hadoop classpath | tr ':' '\n' | grep conf

/etc/hadoop/conf
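Since /etc/<service>/conf on a CDH node may be a symlink rather than a real directory, a small helper that prints where each one finally resolves can save some guessing. This is a minimal sketch; `resolve_conf_dirs` and the `ETC_ROOT` override are hypothetical names added only so it can be tried outside a real node.

```shell
#!/usr/bin/env bash
# Print where /etc/<service>/conf really points, following every symlink.
# ETC_ROOT (hypothetical) defaults to /etc; override it to test elsewhere.
resolve_conf_dirs() {
  local root="${ETC_ROOT:-/etc}" svc conf
  for svc in "$@"; do
    conf="$root/$svc/conf"
    if [ -e "$conf" ]; then
      printf '%s -> %s\n' "$conf" "$(readlink -f "$conf")"
    else
      # Report missing services on stderr so stdout stays clean.
      printf '%s (missing)\n' "$conf" >&2
    fi
  done
}
```

Usage: `resolve_conf_dirs hadoop hive spark`.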

Logs all live under /var/log/<service>.

To check the SCM (Cloudera Manager Server) log for errors:

tail -n 1000 /var/log/cloudera-scm-server/cloudera-scm-server.log | grep -i error
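The same check generalizes to every log of a service, following the /var/log/<service> convention. A minimal sketch, assuming the logs end in `.log`; `service_errors` and the `LOG_ROOT` override are hypothetical names.

```shell
#!/usr/bin/env bash
# For each *.log under LOG_ROOT/<service>, print lines among the last N that
# mention "error" in any letter case, prefixed with the file name.
# LOG_ROOT (hypothetical) defaults to /var/log; N defaults to 1000.
service_errors() {
  local svc="$1" lines="${2:-1000}" root="${LOG_ROOT:-/var/log}" f line
  for f in "$root/$svc"/*.log; do
    [ -f "$f" ] || continue   # skip the literal pattern when nothing matches
    tail -n "$lines" "$f" | grep -i 'error' | while IFS= read -r line; do
      printf '%s: %s\n' "$f" "$line"
    done
  done
}
```

Usage: `service_errors cloudera-scm-server`. The `-i` matters because log levels are usually written in upper case (`ERROR`).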

hive:

/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive as HIVE_HOME

hadoop:

/opt/cloudera/parcels/CDH/lib/hadoop as HADOOP_HOME

In CDH 6.2 this can be checked in /etc/spark/conf/spark-env.sh:

HADOOP_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop
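To read that value from a script without sourcing spark-env.sh (sourcing would also run everything else in the file), something like the following sketch works, assuming a plain `HADOOP_HOME=...` or `export HADOOP_HOME=...` line. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print the last HADOOP_HOME assignment in a spark-env.sh file, with one pair
# of surrounding double quotes stripped. Values built from other variables
# (e.g. ${SOMETHING}/hadoop) are printed unexpanded.
hadoop_home_from_spark_env() {
  local env_file="${1:-/etc/spark/conf/spark-env.sh}"
  sed -n -E 's/^[[:space:]]*(export[[:space:]]+)?HADOOP_HOME=//p' "$env_file" \
    | tail -n 1 \
    | sed -E 's/^"(.*)"$/\1/'
}
```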

spark:

/opt/cloudera/parcels/CDH/lib/spark

Parcels directories:

/opt/cloudera/parcels

/opt/cloudera/parcels/CDH/jars

# Jar directories: look under /opt/cloudera/parcels/CDH/lib (i.e. wherever CDH is installed), in the lib directory of the relevant component, for example:

/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive/lib

ls /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive/lib | grep uhadoop

uhadoop-1.0-SNAPSHOT.jar

ls /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop/lib | grep uhadoop

uhadoop-1.0-SNAPSHOT.jar

ls /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop/lib | grep ufile

ufilesdk-1.0-SNAPSHOT.jar
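Rather than running `ls <component>/lib | grep ...` once per component, one `find` over the whole lib tree shows every component that ships a given jar. A sketch, assuming the default path from these notes; `find_jar` and `CDH_LIB` are hypothetical names.

```shell
#!/usr/bin/env bash
# List every file under the CDH lib tree whose name matches a glob pattern.
# -L follows symlinks (the CDH -> CDH-<version> parcel link and linked jars);
# loop warnings and permission errors go to /dev/null.
find_jar() {
  local pattern="$1" root="${CDH_LIB:-/opt/cloudera/parcels/CDH/lib}"
  find -L "$root" -type f -name "$pattern" 2>/dev/null | sort
}
```

Usage: `find_jar 'uhadoop*.jar'` or `find_jar 'ufilesdk*.jar'`. Quote the pattern so the shell does not expand it against the current directory.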

CDH component directories:

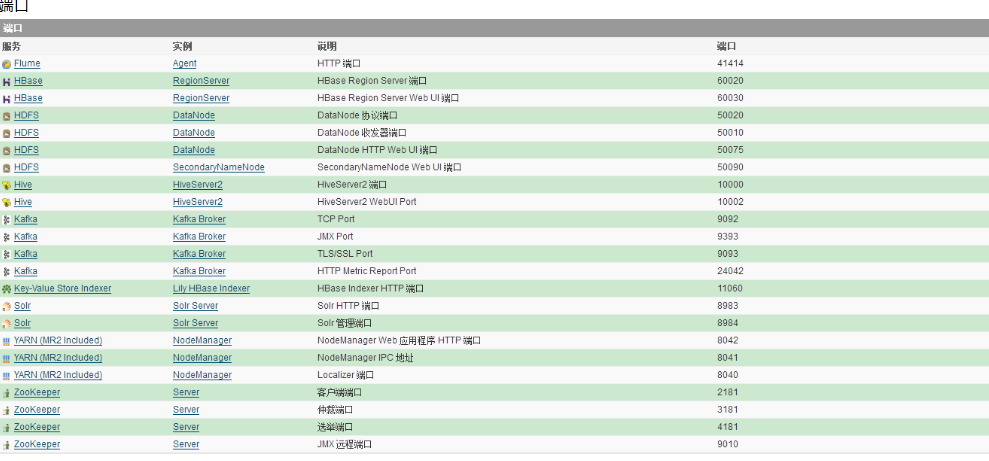

NameNode RPC port: 8020

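1. The software Cloudera Manager installs lives under /opt/cloudera/parcels/CDH-5.7.1-1.cdh5.7.1.p0.11/lib; the remaining configuration and commands all actually come from, and take effect from, this location.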

2. Cloudera Manager's configuration deployment simply pushes the new configuration to /etc/alternatives/hadoop and /etc/hadoop, and each service then uses that configuration.

Running cd /etc/alternatives shows that the entries are all symlinks:

[root@node1 alternatives]# ll

total 0

lrwxrwxrwx 1 root root 67 Apr 16 2019 avro-tools -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/avro-tools

lrwxrwxrwx 1 root root 64 Apr 16 2019 beeline -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/beeline

lrwxrwxrwx 1 root root 79 Apr 16 2019 bigtop-detect-javahome -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/bigtop-detect-javahome

lrwxrwxrwx 1 root root 65 Apr 16 2019 catalogd -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/catalogd

lrwxrwxrwx 1 root root 39 Apr 16 2019 cifs-idmap-plugin -> /usr/lib64/cifs-utils/cifs_idmap_sss.so

lrwxrwxrwx 1 root root 63 Apr 16 2019 cli_mt -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/cli_mt

lrwxrwxrwx 1 root root 63 Apr 16 2019 cli_st -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/cli_st

lrwxrwxrwx 1 root root 65 Apr 16 2019 flume-ng -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/flume-ng

lrwxrwxrwx 1 root root 76 Apr 16 2019 flume-ng-conf -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/etc/flume-ng/conf.empty

lrwxrwxrwx 1 root root 63 Apr 16 2019 hadoop -> /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/bin/hadoop
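To pick out only the alternatives that end up in the parcels tree (as opposed to entries like cifs-idmap-plugin), a loop with `readlink -f` is enough. A minimal sketch; `parcel_alternatives` and the `ALT_DIR`/`PARCEL_ROOT` overrides are hypothetical, and the defaults are the paths from the listing in these notes.

```shell
#!/usr/bin/env bash
# Print "<name> -> <final target>" for each symlink in the alternatives
# directory whose fully resolved target lies inside the parcels directory.
parcel_alternatives() {
  local alt_dir="${ALT_DIR:-/etc/alternatives}"
  local parcel_root="${PARCEL_ROOT:-/opt/cloudera/parcels}"
  local link target
  for link in "$alt_dir"/*; do
    [ -L "$link" ] || continue
    target="$(readlink -f "$link")"
    case "$target" in
      "$parcel_root"/*) printf '%s -> %s\n' "$(basename "$link")" "$target" ;;
    esac
  done
}
```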

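3. The commands for each Cloudera Manager service come from that service's directory under /opt/cloudera/parcels/CDH-5.7.1-1.cdh5.7.1.p0.11/lib. Some of them are also published under /usr, but those are just symlinks to the commands in each service's bin directory: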

cd /usr/bin

lrwxrwxrwx 1 root root 34 Apr 16 2019 zookeeper-client -> /etc/alternatives/zookeeper-client

lrwxrwxrwx 1 root root 46 Apr 16 2019 zookeeper-security-migration -> /etc/alternatives/zookeeper-security-migration

lrwxrwxrwx 1 root root 34 Apr 16 2019 zookeeper-server -> /etc/alternatives/zookeeper-server

lrwxrwxrwx 1 root root 42 Apr 16 2019 zookeeper-server-cleanup -> /etc/alternatives/zookeeper-server-cleanup

lrwxrwxrwx 1 root root 45 Apr 16 2019 zookeeper-server-initialize -> /etc/alternatives/zookeeper-server-initialize

lrwxrwxrwx. 1 root root 6 Nov 7 2018 zsoelim -> soelim
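Because /usr/bin/zookeeper-client points to /etc/alternatives/zookeeper-client, which in turn points into the parcel, it can help to print each hop of the chain rather than only the final target. A sketch with a hypothetical `follow_links` function; util-linux's `namei` prints a similar trace if it is installed.

```shell
#!/usr/bin/env bash
# Print a path and then every symlink hop after it. Relative targets are
# joined to the directory of the link that contains them. At most 40 hops
# are followed so a cyclic chain cannot loop forever.
follow_links() {
  local path="$1" next hops=0
  printf '%s\n' "$path"
  while [ -L "$path" ] && [ "$hops" -lt 40 ]; do
    next="$(readlink "$path")"
    case "$next" in
      /*) ;;                                  # absolute target: use as-is
      *) next="$(dirname "$path")/$next" ;;   # relative target
    esac
    printf '  -> %s\n' "$next"
    path="$next"
    hops=$((hops + 1))
  done
}
```

Usage: `follow_links /usr/bin/zookeeper-client`.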

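4. The Spark service installed successfully through Cloudera Manager, but starting spark-shell failed with: SparkDeploySchedulerBackend: Application has been killed. Reason: Master removed our application: FAILED. The cause: if the Spark service has been added to the cluster more than once, Spark's directories must be cleaned out first. I had not cleaned /var/run/spark/work; an earlier failed attempt had probably left this directory owned by a user other than spark, so Spark could not write to it. The fix is to delete this directory first.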
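The check and cleanup described above can be scripted as a sketch: look at the owner of the work directory and delete it only when it is not the expected user, which is the fix the note describes. `clean_stale_work_dir` is a hypothetical name; it assumes GNU `stat` and would presumably be run as root on the affected host.

```shell
#!/usr/bin/env bash
# Delete a leftover Spark work directory if its owner is not the expected user.
# Defaults: /var/run/spark/work and user "spark", as in the note above.
clean_stale_work_dir() {
  local dir="${1:-/var/run/spark/work}" want="${2:-spark}" owner
  [ -d "$dir" ] || { echo "$dir does not exist"; return 0; }
  owner="$(stat -c %U "$dir")"   # GNU stat: owner user name
  if [ "$owner" = "$want" ]; then
    echo "$dir is owned by $want; leaving it alone"
  else
    echo "$dir is owned by $owner, not $want; removing it"
    rm -rf -- "$dir"
  fi
}
```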

5. After installing the services, tune the memory settings of each service role; the defaults Cloudera Manager picks are not very good.