1. Use the requests and BeautifulSoup libraries to scrape the title, link, and body text of each news item on the campus news front page.

import requests
from bs4 import BeautifulSoup

# Fetch the campus news index page and parse it.
res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res.encoding = 'utf-8'
soup = BeautifulSoup(res.text, 'html.parser')

for news in soup.select('li'):
    # Only <li> elements that contain a title are news entries.
    if len(news.select('.news-list-title')) > 0:
        d = news.select('.news-list-info')[0].contents[0].text  # date shown in the list
        t = news.select('.news-list-title')[0].text  # title
        ti = news.select('a')[0].attrs['href']  # link to the article
        # Fetch the article page to read its body text.
        resf = requests.get(ti)
        resf.encoding = 'utf-8'
        soupd = BeautifulSoup(resf.text, 'html.parser')
        s = soupd.select('.show-info')[0].text  # publication info line
        print(t, ti)
        print(soupd.select('#content')[0].text)

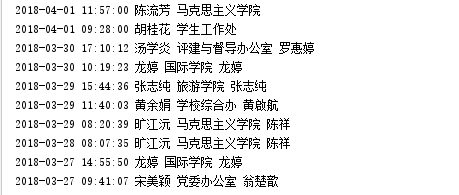
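
The loop above passes each `href` straight to `requests.get`, which only works when the link is an absolute URL. As a minimal sketch, assuming the list page might also contain relative links, each one can be resolved against the index URL with the standard library's `urljoin`. The helper name `absolute_link` and the sample hrefs are made up for illustration and are not taken from the real site.

```python
from urllib.parse import urljoin

# Index page URL used in the scraping code.
BASE_URL = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'


def absolute_link(href, base=BASE_URL):
    """Return href as an absolute URL, resolving relative links against base."""
    return urljoin(base, href)


# Hypothetical hrefs for illustration only.
samples = [
    'http://news.gzcc.cn/html/2018/example_0401/1.html',  # already absolute
    '/html/2018/example_0401/2.html',                      # root-relative
    '3.html',                                              # relative to the index page
]
for href in samples:
    print(absolute_link(href))
```

An absolute link passes through unchanged, so the helper could wrap every `href` before it reaches `requests.get`.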

2. Parse the info string to get each article's publication time, author, source, photographer, and other details.

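# ti is the article URL left over from the loop in step 1.
res2 = requests.get(ti)
res2.encoding = 'utf-8'
soup2 = BeautifulSoup(res2.text, 'html.parser')
info = soup2.select('.show-info')[0].text
# lstrip/rstrip remove any of the listed characters, not a literal prefix or suffix.
# This works here because the timestamp that follows starts with a digit.
info = info.lstrip('发布时间:').rstrip('点击:次')
# print(info)
time = info[:info.find('作者')]  # publication time
author = info[info.find('作者:')+3:info.find('审核')]  # author
check = info[info.find('审核:')+3:info.find('来源')]  # reviewer
photo_pos = info.find('摄影:')
# With no photographer field, find() returns -1 and the slice would drop the last character.
source_end = photo_pos if photo_pos > 0 else len(info)
source = info[info.find('来源:')+3:source_end]  # source
print(time, author, check, source, end="")
if photo_pos > 0:
    photogra = info[photo_pos+3:]  # photographer
    print(photogra)
else:
    print()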

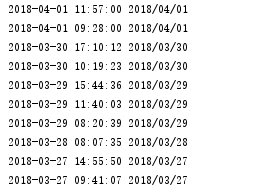
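
The slicing in step 2 depends on the fields appearing in a fixed order. As an alternative sketch, not the method used above, a regular expression per label can pick out whichever fields are present. It assumes that fields are separated by whitespace, that values contain no whitespace, and that the colon may be either ASCII or full-width. The function name `parse_info`, the English dictionary keys, and the sample string are all hypothetical.

```python
import re

# Labels as they appear in the slicing code; the English keys are illustrative names.
LABELS = {'作者': 'author', '审核': 'reviewer', '来源': 'source', '摄影': 'photographer'}


def parse_info(info):
    """Split a .show-info string into a dict of the fields that are present."""
    fields = {}
    # Timestamp in the form YYYY-MM-DD HH:MM:SS.
    m = re.search(r'\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}', info)
    if m:
        fields['time'] = m.group(0)
    # Each value runs from just after the colon up to the next whitespace.
    for label, key in LABELS.items():
        m = re.search(label + r'[::](\S+)', info)
        if m:
            fields[key] = m.group(1)
    return fields


# Made-up sample that follows the assumed layout of the info line.
sample = '发布时间:2018-04-01 11:57:00 作者:张三 审核:李四 来源:新闻中心 点击:次'
print(parse_info(sample))
```

A missing field such as the photographer is simply absent from the result, so no special case is needed for it.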

3. Convert the publication time from str to datetime.

from datetime import datetime

res3 = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res3.encoding = 'utf-8'
soup3 = BeautifulSoup(res3.text, 'html.parser')

for news in soup3.select('li'):
    if len(news.select('.news-list-title')) > 0:
        a = news.a.attrs['href']  # link to the article
        resq = requests.get(a)
        resq.encoding = 'utf-8'
        soupq = BeautifulSoup(resq.text, 'html.parser')
        info = soupq.select('.show-info')[0].text
        # A 'YYYY-MM-DD HH:MM:SS' timestamp is 19 characters long.
        dt = info.lstrip('发布时间:')[:19]
        dati = datetime.strptime(dt, '%Y-%m-%d %H:%M:%S')  # str -> datetime
        print(dati, dati.strftime('%Y/%m/%d'))
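
The conversion can be tried without any network access. The sketch below assumes the info string contains a timestamp in the form `YYYY-MM-DD HH:MM:SS` and finds it with a regular expression instead of `lstrip` and a fixed slice. The function name `extract_datetime` and the sample string are hypothetical.

```python
import re
from datetime import datetime


def extract_datetime(info):
    """Return the first 'YYYY-MM-DD HH:MM:SS' timestamp in info as a datetime, or None."""
    m = re.search(r'\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}', info)
    if m is None:
        return None
    return datetime.strptime(m.group(0), '%Y-%m-%d %H:%M:%S')


# Made-up sample that follows the assumed layout of the info line.
sample = '发布时间:2018-04-01 11:57:00 作者:张三'
dati = extract_datetime(sample)
print(type(dati).__name__, dati)      # a datetime object, not a str
print(dati.strftime('%Y/%m/%d'))      # formatted back into a string
print(dati.year, dati.month, dati.day)
```

Once the value is a `datetime`, it can be compared, sorted, or reformatted with `strftime`, none of which is reliable on the raw string.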

4. Post the complete code and screenshots of the results in the assignment.

import requests
from bs4 import BeautifulSoup
from datetime import datetime

# Step 1: titles, links, and body text from the index page.
res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res.encoding = 'utf-8'
soup = BeautifulSoup(res.text, 'html.parser')
for news in soup.select('li'):
    if len(news.select('.news-list-title')) > 0:
        d = news.select('.news-list-info')[0].contents[0].text  # date shown in the list
        t = news.select('.news-list-title')[0].text  # title
        ti = news.select('a')[0].attrs['href']  # link to the article
        resf = requests.get(ti)
        resf.encoding = 'utf-8'
        soupd = BeautifulSoup(resf.text, 'html.parser')
        s = soupd.select('.show-info')[0].text  # publication info line
        print(t, ti)
        print(soupd.select('#content')[0].text)

# Step 2: publication details of the last article visited above.
res2 = requests.get(ti)
res2.encoding = 'utf-8'
soup2 = BeautifulSoup(res2.text, 'html.parser')
info = soup2.select('.show-info')[0].text
# lstrip/rstrip remove any of the listed characters, not a literal prefix or suffix.
info = info.lstrip('发布时间:').rstrip('点击:次')
time = info[:info.find('作者')]  # publication time
author = info[info.find('作者:')+3:info.find('审核')]  # author
check = info[info.find('审核:')+3:info.find('来源')]  # reviewer
photo_pos = info.find('摄影:')
# With no photographer field, find() returns -1 and the slice would drop the last character.
source_end = photo_pos if photo_pos > 0 else len(info)
source = info[info.find('来源:')+3:source_end]  # source
print(time, author, check, source, end="")
if photo_pos > 0:
    photogra = info[photo_pos+3:]  # photographer
    print(photogra)
else:
    print()

# Step 3: convert each article's publication time from str to datetime.
res3 = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res3.encoding = 'utf-8'
soup3 = BeautifulSoup(res3.text, 'html.parser')
for news in soup3.select('li'):
    if len(news.select('.news-list-title')) > 0:
        a = news.a.attrs['href']
        resq = requests.get(a)
        resq.encoding = 'utf-8'
        soupq = BeautifulSoup(resq.text, 'html.parser')
        info = soupq.select('.show-info')[0].text
        # A 'YYYY-MM-DD HH:MM:SS' timestamp is 19 characters long.
        dt = info.lstrip('发布时间:')[:19]
        dati = datetime.strptime(dt, '%Y-%m-%d %H:%M:%S')
        print(dati, dati.strftime('%Y/%m/%d'))