I. Building the CPU topology

1. On Arm64, the CPU topology is stored in the global array struct cpu_topology cpu_topology[NR_CPUS].

2. Definition of struct cpu_topology:

```c
// include/asm/topology.h
struct cpu_topology {
	int thread_id;
	int core_id;
	int cluster_id;
	cpumask_t thread_sibling;
	cpumask_t core_sibling;
};

extern struct cpu_topology cpu_topology[NR_CPUS];

#define topology_physical_package_id(cpu)	(cpu_topology[cpu].cluster_id)
#define topology_core_id(cpu)		(cpu_topology[cpu].core_id)
#define topology_core_cpumask(cpu)	(&cpu_topology[cpu].core_sibling)
#define topology_sibling_cpumask(cpu)	(&cpu_topology[cpu].thread_sibling)
```

3. The cpu_topology[] array is populated from the "cpu-map" node under the "/cpus" node of the device's DTS file. The functions that fill it in are:

```c
// kernel_init() --> kernel_init_freeable() --> smp_prepare_cpus() --> init_cpu_topology() --> parse_dt_topology() --> parse_cluster() --> parse_core()
static int __init parse_core(struct device_node *core, int cluster_id, int core_id)
{
	...
	cpu = get_cpu_for_node(core);
	if (cpu >= 0) {
		cpu_topology[cpu].cluster_id = cluster_id;
		cpu_topology[cpu].core_id = core_id;
	}
	...
}

// kernel_init() --> kernel_init_freeable() --> smp_prepare_cpus() --> store_cpu_topology() --> update_siblings_masks()
static void update_siblings_masks(unsigned int cpuid)
{
	struct cpu_topology *cpu_topo, *cpuid_topo = &cpu_topology[cpuid];
	int cpu;

	/* update core and thread sibling masks */
	for_each_possible_cpu(cpu) {
		cpu_topo = &cpu_topology[cpu];

		if (cpuid_topo->cluster_id != cpu_topo->cluster_id)
			continue;

		cpumask_set_cpu(cpuid, &cpu_topo->core_sibling);
		if (cpu != cpuid)
			cpumask_set_cpu(cpu, &cpuid_topo->core_sibling); // set in both directions, but only for CPUs in the same cluster!

		if (cpuid_topo->core_id != cpu_topo->core_id)
			continue;

		cpumask_set_cpu(cpuid, &cpu_topo->thread_sibling);
		if (cpu != cpuid)
			cpumask_set_cpu(cpu, &cpuid_topo->thread_sibling);
	}
}
```

4. The attached debug output shows the value of each member of the parsed CPU topology:

```
---------------------debug_printf_cpu_topology----------------------
thread_id = -1, core_id = 0, cluster_id = 0, thread_sibling = 0, core_sibling = 0-3
thread_id = -1, core_id = 1, cluster_id = 0, thread_sibling = 1, core_sibling = 0-3
thread_id = -1, core_id = 2, cluster_id = 0, thread_sibling = 2, core_sibling = 0-3
thread_id = -1, core_id = 3, cluster_id = 0, thread_sibling = 3, core_sibling = 0-3
thread_id = -1, core_id = 0, cluster_id = 1, thread_sibling = 4, core_sibling = 4-6
thread_id = -1, core_id = 1, cluster_id = 1, thread_sibling = 5, core_sibling = 4-6
thread_id = -1, core_id = 2, cluster_id = 1, thread_sibling = 6, core_sibling = 4-6
thread_id = -1, core_id = 0, cluster_id = 2, thread_sibling = 7, core_sibling = 7
-------------------------------------------------
```
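The sibling masks above are printed with the kernel's %*pbl format, which renders a cpumask as a comma-separated list of CPU ranges. As a reading aid, here is a minimal user-space sketch of that rendering. The function name mask_to_range_list and the use of a single uint64_t (at most 64 CPUs) are assumptions made for illustration; this is not a kernel API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/*
 * Hypothetical helper (not a kernel API): render a CPU bitmask as a range
 * list in the style of the kernel's "%*pbl" format, e.g. 0x0f -> "0-3".
 * Assumes at most 64 CPUs so that one uint64_t holds the whole mask.
 */
static void mask_to_range_list(uint64_t mask, char *buf, size_t len)
{
	size_t pos = 0;
	int cpu = 0;

	assert(buf != NULL && len > 0);
	buf[0] = '\0';

	while (cpu < 64) {
		if (!(mask & (UINT64_C(1) << cpu))) {
			cpu++;
			continue;
		}

		/* Extend the run while the next bit is also set. */
		int start = cpu;
		while (cpu + 1 < 64 && (mask & (UINT64_C(1) << (cpu + 1))))
			cpu++;

		const char *sep = pos ? "," : "";
		int n = (start == cpu)
			? snprintf(buf + pos, len - pos, "%s%d", sep, start)
			: snprintf(buf + pos, len - pos, "%s%d-%d", sep, start, cpu);
		if (n < 0 || (size_t)n >= len - pos)
			return; /* buffer too small: output is truncated */
		pos += (size_t)n;
		cpu++;
	}
}
```

With the masks from the output above, 0x0f renders as "0-3", 0x70 as "4-6" and 0x80 as "7"; a sparse mask such as 0x15 renders as "0,2,4".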

Question: update_siblings_masks() only updates the CPUs of one cluster, so why do the thread_sibling/core_sibling masks of every CPU end up updated?
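A likely answer, worth checking against your own tree: store_cpu_topology() is not called only once. On arm64 kernels of this era, smp_prepare_cpus() calls it for the boot CPU, and each secondary CPU calls it for itself from secondary_start_kernel() as it comes online. Each call only touches the CPUs in the caller's cluster, but once every CPU has made its call, all clusters are covered. The user-space model below (not kernel code; the names cpu_topology_model, update_siblings_masks_model and build_topology_model are made up) replays the same loop on plain bitmasks for the 4+3+1 layout from the debug output.

```c
#include <assert.h>
#include <stdint.h>

#define NR_CPUS 8

/*
 * User-space model of struct cpu_topology: cpumask_t is replaced by a
 * uint32_t bitmask (assumes NR_CPUS <= 32); thread_id is omitted.
 */
struct cpu_topology_model {
	int core_id;
	int cluster_id;
	uint32_t thread_sibling;
	uint32_t core_sibling;
};

static struct cpu_topology_model topo[NR_CPUS];

/* Same logic as update_siblings_masks(), operating on plain bitmasks. */
static void update_siblings_masks_model(int cpuid)
{
	struct cpu_topology_model *cpuid_topo = &topo[cpuid];

	for (int cpu = 0; cpu < NR_CPUS; cpu++) {
		struct cpu_topology_model *cpu_topo = &topo[cpu];

		if (cpuid_topo->cluster_id != cpu_topo->cluster_id)
			continue;
		cpu_topo->core_sibling |= UINT32_C(1) << cpuid;
		if (cpu != cpuid)
			cpuid_topo->core_sibling |= UINT32_C(1) << cpu;

		if (cpuid_topo->core_id != cpu_topo->core_id)
			continue;
		cpu_topo->thread_sibling |= UINT32_C(1) << cpuid;
		if (cpu != cpuid)
			cpuid_topo->thread_sibling |= UINT32_C(1) << cpu;
	}
}

/*
 * Load the 4+3+1 layout seen in the debug output (what parse_core() would
 * fill in), clear the masks, then let the first nr_stored CPUs "come
 * online", each running the update once for itself.
 */
static void build_topology_model(int nr_stored)
{
	static const int cluster[NR_CPUS] = { 0, 0, 0, 0, 1, 1, 1, 2 };
	static const int core[NR_CPUS]    = { 0, 1, 2, 3, 0, 1, 2, 0 };

	assert(nr_stored >= 0 && nr_stored <= NR_CPUS);
	for (int cpu = 0; cpu < NR_CPUS; cpu++) {
		topo[cpu].cluster_id = cluster[cpu];
		topo[cpu].core_id = core[cpu];
		topo[cpu].thread_sibling = 0;
		topo[cpu].core_sibling = 0;
	}
	for (int cpu = 0; cpu < nr_stored; cpu++)
		update_siblings_masks_model(cpu);
}
```

After build_topology_model(1) only cluster 0 is touched (CPU5's core_sibling is still 0, and CPU1 only has bit 0 set); after build_topology_model(NR_CPUS) the masks match the output above: 0-3, 4-6 and 7.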

II. Building the scheduling domains

1. Related structures

```c
/*
 * On this Arm big.LITTLE system there are only two domain levels, MC and DIE.
 * SMT is for simultaneous multithreading (hyper-threading), which is not
 * enabled on this platform.
 */
static struct sched_domain_topology_level default_topology[] = {
#ifdef CONFIG_SCHED_SMT
	{ cpu_smt_mask, cpu_smt_flags, SD_INIT_NAME(SMT) },	// &cpu_topology[cpu].thread_sibling; not enabled here
#endif
#ifdef CONFIG_SCHED_MC
	{ cpu_coregroup_mask, cpu_core_flags, SD_INIT_NAME(MC) },	// &cpu_topology[cpu].core_sibling; also sets the sd_flags function pointer
#endif
	{ cpu_cpu_mask, SD_INIT_NAME(DIE) },	// returns node_to_cpumask_map[per_cpu(numa_node, cpu)]
	{ NULL, },
};

/* Domain construction uses sched_domain_topology, which points to default_topology */
static struct sched_domain_topology_level *sched_domain_topology =
	default_topology;

#define for_each_sd_topology(tl)			\
	for (tl = sched_domain_topology; tl->mask; tl++)
```
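The table above is walked with a NULL-mask sentinel, and each level's mask function yields that level's span for a given CPU. A simplified user-space model (all names here are hypothetical; masks are plain uint32_t values instead of struct cpumask pointers, and CONFIG_SCHED_SMT is assumed off as in the text) shows which spans the MC and DIE levels produce on the 4+3+1 layout:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-in for sched_domain_topology_level. */
typedef uint32_t (*topo_mask_fn)(int cpu);

struct topo_level_model {
	topo_mask_fn mask;
	const char *name;
};

/* MC level: the CPU's cluster (4+3+1 layout: 0-3, 4-6, 7). */
static uint32_t coregroup_mask_model(int cpu)
{
	if (cpu <= 3)
		return 0x0f;
	if (cpu <= 6)
		return 0x70;
	return 0x80;
}

/* DIE level: a single NUMA node, so every CPU. */
static uint32_t cpu_mask_model(int cpu)
{
	(void)cpu;
	return 0xff;
}

static struct topo_level_model default_topology_model[] = {
	{ coregroup_mask_model, "MC" },
	{ cpu_mask_model, "DIE" },
	{ NULL, NULL },		/* sentinel: mask == NULL ends the walk */
};

#define for_each_level_model(tl) \
	for (tl = default_topology_model; tl->mask; tl++)

/* Collect each level's span for one CPU; returns the number of levels. */
static int spans_for_cpu(int cpu, uint32_t *spans, int max)
{
	struct topo_level_model *tl;
	int n = 0;

	assert(cpu >= 0 && cpu < 8);
	for_each_level_model(tl) {
		if (n < max)
			spans[n] = tl->mask(cpu);
		n++;
	}
	return n;
}
```

For CPU5 this yields two levels with spans 0x70 (MC) and 0xff (DIE). Note that CPU7's MC span contains only CPU7 itself.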

2. The domain-building function

```c
// sched_init_smp --> init_sched_domains --> build_sched_domains
static int
build_sched_domains(const struct cpumask *cpu_map, struct sched_domain_attr *attr)
{
	enum s_alloc alloc_state;
	struct sched_domain *sd;
	struct s_data d;
	int i, ret = -ENOMEM;

	/*
	 * Allocate the per-CPU members of tl->data for every level of the
	 * global default_topology, and allocate s_data.rd.
	 */
	alloc_state = __visit_domain_allocation_hell(&d, cpu_map); /* at boot, cpu_map is cpu_active_mask */
	if (alloc_state != sa_rootdomain)
		goto error;

	/* Set up domains for CPUs specified by the cpu_map */
	for_each_cpu(i, cpu_map) {
		struct sched_domain_topology_level *tl;

		sd = NULL;
		/* only MC and DIE here */
		for_each_sd_topology(tl) {
			/* build a domain for every level of every CPU */
			sd = build_sched_domain(tl, cpu_map, attr, sd, i);
			/* true only for the first level, so d.sd points to each CPU's MC-level sd */
			if (tl == sched_domain_topology)
				*per_cpu_ptr(d.sd, i) = sd;
			/* no default_topology entry sets tl->flags, so this is never taken */
			if (tl->flags & SDTL_OVERLAP)
				sd->flags |= SD_OVERLAP;
		}
	}

	/* Build the groups for the domains */
	for_each_cpu(i, cpu_map) {
		/* d.sd points to the MC domains; children are now linked to parents, so every level is reachable */
		for (sd = *per_cpu_ptr(d.sd, i); sd; sd = sd->parent) {
			sd->span_weight = cpumask_weight(sched_domain_span(sd)); /* i.e. sd->span */
			if (sd->flags & SD_OVERLAP) { /* false here */
				if (build_overlap_sched_groups(sd, i))
					goto error;
			} else {
				/* the sched_groups within sd->span form a circular singly linked list */
				if (build_sched_groups(sd, i))
					goto error;
			}
		}
	}

	/* Calculate CPU capacity for physical packages and nodes */
	for (i = nr_cpumask_bits-1; i >= 0; i--) {
		struct sched_domain_topology_level *tl = sched_domain_topology;

		if (!cpumask_test_cpu(i, cpu_map))
			continue;

		/* every CPU has one sd per level */
		for (sd = *per_cpu_ptr(d.sd, i); sd; sd = sd->parent, tl++) {
			init_sched_groups_energy(i, sd, tl->energy); /* the third argument is always NULL here */
			claim_allocations(i, sd);
			/* sg->sgc->capacity ends up as the sum of the capacities of the CPUs in the group */
			init_sched_groups_capacity(i, sd);
		}
	}

	/* Attach the domains: attach a domain to every rq */
	rcu_read_lock();
	for_each_cpu(i, cpu_map) {
		/* both start out as -1 */
		int max_cpu = READ_ONCE(d.rd->max_cap_orig_cpu);
		int min_cpu = READ_ONCE(d.rd->min_cap_orig_cpu);

		sd = *per_cpu_ptr(d.sd, i);

		/* find the CPUs with the largest and smallest original capacity; the values come from the rq */
		if ((max_cpu < 0) || (cpu_rq(i)->cpu_capacity_orig >
		    cpu_rq(max_cpu)->cpu_capacity_orig))
			WRITE_ONCE(d.rd->max_cap_orig_cpu, i);

		if ((min_cpu < 0) || (cpu_rq(i)->cpu_capacity_orig <
		    cpu_rq(min_cpu)->cpu_capacity_orig))
			WRITE_ONCE(d.rd->min_cap_orig_cpu, i);

		/* sd = *per_cpu_ptr(d.sd, i); d.rd is the system's single root_domain; i is the CPU id */
		cpu_attach_domain(sd, d.rd, i);
	}
	rcu_read_unlock();

	if (!cpumask_empty(cpu_map))
		update_asym_cpucapacity(cpumask_first(cpu_map));

	ret = 0;
error:
	/*
	 * Apart from root_domain, whose refcount is checked instead of being
	 * freed, everything allocated and initialized above is freed here.
	 * Debug prints confirm it really is freed, yet the CPUs' domain
	 * topology still exists. Why?
	 */
	__free_domain_allocs(&d, alloc_state, cpu_map);
	return ret;
}
```
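The question in the comment above has a fairly direct explanation in the allocation code, assuming this tree behaves like mainline 4.x here: claim_allocations() stores NULL into *per_cpu_ptr(sdd->sd, cpu) for every sd it hands to the domain hierarchy, and does the same for the sds, sg and sgc objects whose reference count is non-zero. __free_domain_allocs() then reaches __sdt_free(), which only kfree()s whatever is still left in those per-CPU slots and finally releases the per-CPU arrays themselves (setting sdd->sd and friends to NULL). The objects in use therefore survive, reachable through rq->sd, while tl->data is emptied. A user-space model of that ownership hand-off, with made-up names (scratch stands in for sdd->sd, attached for rq->sd):

```c
#include <assert.h>
#include <stdlib.h>

#define NR_CPUS 8

/* Stand-in for a sched_domain; only records which CPU it was built for. */
struct sd_model {
	int cpu;
};

/* Stand-in for one level's sd_data.sd: a per-CPU array of pointers. */
static struct sd_model *scratch[NR_CPUS];
/* Stand-in for rq->sd: what survives once the build has finished. */
static struct sd_model *attached[NR_CPUS];
/* How many objects the cleanup actually released. */
static int nr_freed;

static void alloc_scratch(void)
{
	for (int cpu = 0; cpu < NR_CPUS; cpu++) {
		scratch[cpu] = malloc(sizeof(*scratch[cpu]));
		assert(scratch[cpu] != NULL);
		scratch[cpu]->cpu = cpu;
	}
}

/*
 * Like claim_allocations(): the object now belongs to the domain tree, so
 * the scratch slot is cleared and the cleanup below will not free it.
 */
static void claim(int cpu)
{
	attached[cpu] = scratch[cpu];
	scratch[cpu] = NULL;
}

/*
 * Like __sdt_free(): free whatever is still left in the scratch array
 * (free(NULL) is a no-op), leaving every slot NULL.
 */
static void free_scratch(void)
{
	for (int cpu = 0; cpu < NR_CPUS; cpu++) {
		if (scratch[cpu])
			nr_freed++;
		free(scratch[cpu]);
		scratch[cpu] = NULL;
	}
}
```

If six of the eight objects are claimed before free_scratch() runs, only the two unclaimed ones are released, every scratch slot reads NULL afterwards, and the claimed objects remain valid through attached[].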

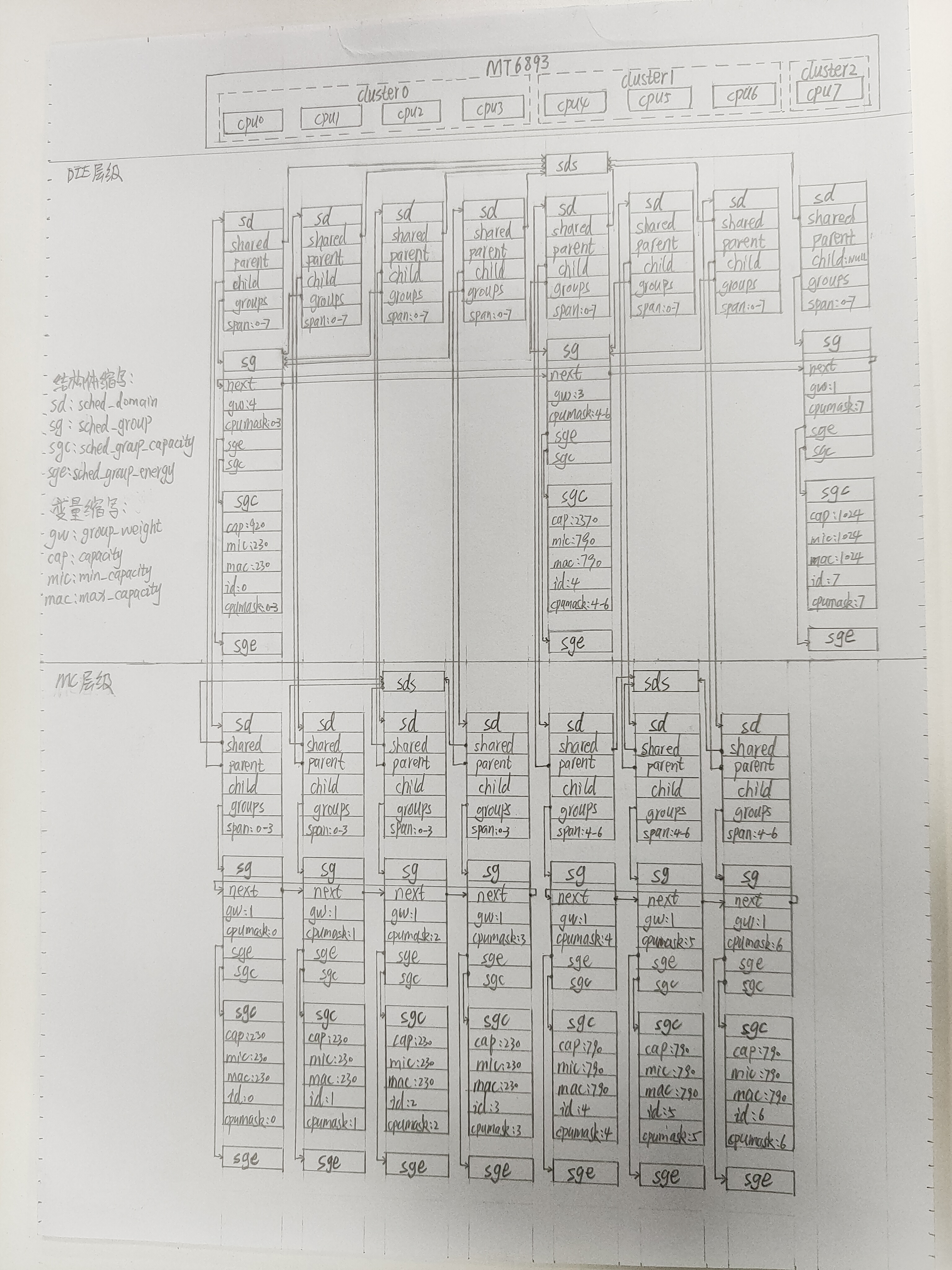

3. For a SoC with a 4+3+1 CPU layout, the topology diagram drawn from the debug output is as follows:

Note: sds is short for struct sched_domain_shared *shared.

The experimental results show that:

(1). For the little and middle cores, cpu_rq(cpu)->sd points to their MC-level sched_domain. The big core has no MC-level sched_domain, so its cpu_rq(cpu)->sd points to its DIE-level sched_domain.

(2). The cpu_rq(cpu)->rd of every CPU points to the same global root_domain.

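(3). The DIE-level sched_group_capacity.capacity is the sum of the capacities of all CPUs in the corresponding MC level, so for an N-core cluster of identical cores it is N times a single core's capacity (in the debug output, 920 = 4 × 230 for CPUs 0-3, where 230 is each core's cpu_capacity).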

Addendum: the private member of each level's sched_domain points to that level's sched_domain_topology_level.data, i.e. default_topology[i].data. However, the per-CPU pointers inside data are all freed once the build completes.
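Point (3) can be checked with a little arithmetic. In mainline 4.x, update_group_capacity() sums the sgc->capacity of the child domain's groups; at the MC level each group is a single CPU, so a DIE group's capacity is the sum of its cluster's cpu_capacity values. The sketch below is a simplified model under that assumption (the name die_group_capacity is hypothetical), using the cpu_capacity snapshot from the debug output; only the 920 of CPUs 0-3 is visible in the output, the other two sums are what the model predicts.

```c
#include <assert.h>

#define NR_CPUS 8

/* rq->cpu_capacity values copied from the debug output (a snapshot). */
static const unsigned long cpu_capacity[NR_CPUS] = {
	230, 230, 230, 230, 790, 790, 790, 906
};

/*
 * Simplified model: a DIE-level group covers one cluster, its child is the
 * MC domain whose groups are single CPUs, so the group capacity is the sum
 * of the capacities of the CPUs in the group mask.
 */
static unsigned long die_group_capacity(unsigned int group_mask)
{
	unsigned long sum = 0;

	assert(group_mask < (1u << NR_CPUS));
	for (int cpu = 0; cpu < NR_CPUS; cpu++)
		if (group_mask & (1u << cpu))
			sum += cpu_capacity[cpu];
	return sum;
}
```

die_group_capacity(0x0f) gives 920, matching the output; the model predicts 2370 for the 4-6 group and 906 for CPU7 alone.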

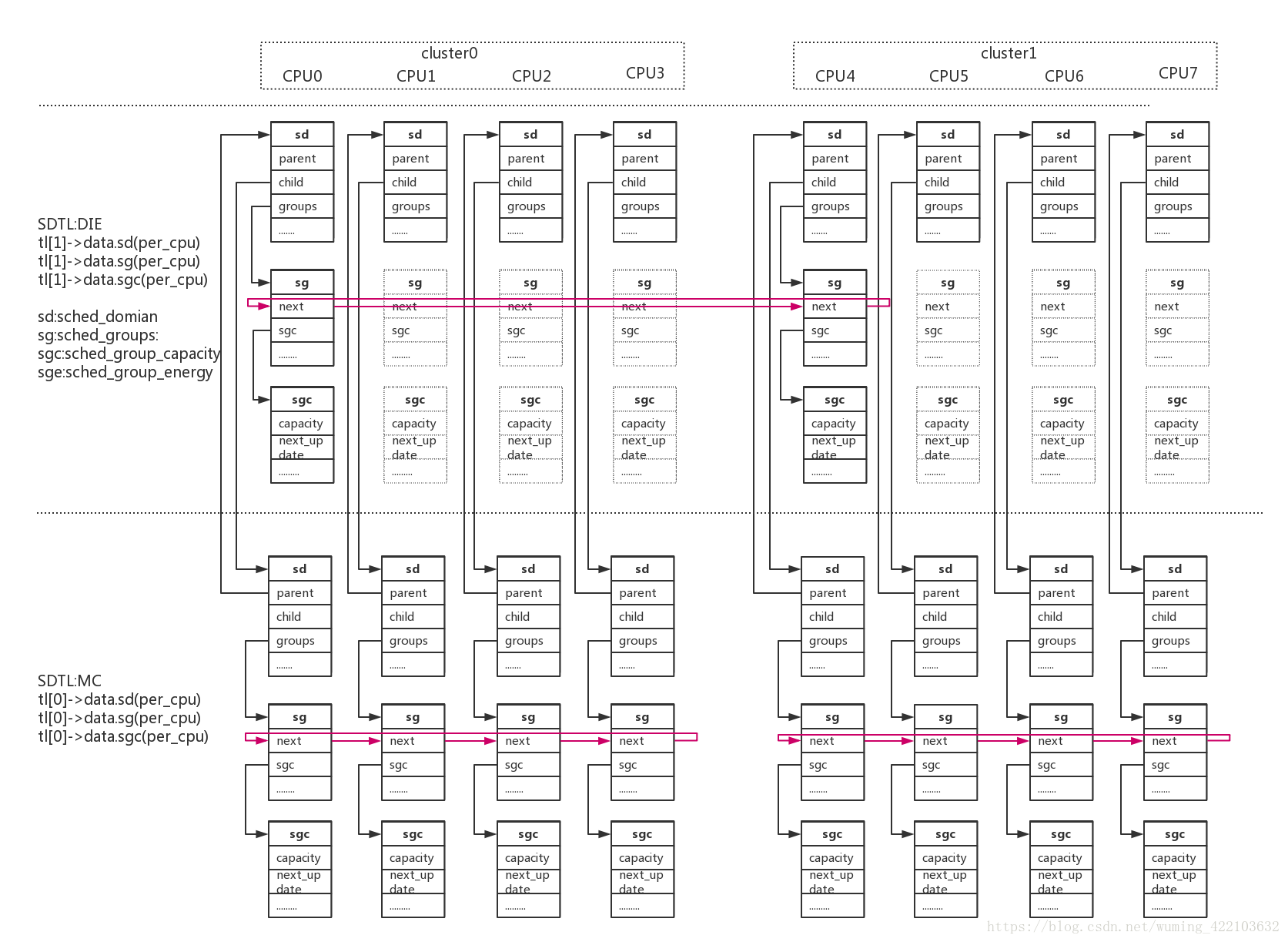

4. For a SoC with a 4+4 CPU layout, the scheduling-domain topology is as follows:

5. Building the cache-related per-CPU variables

```c
// kernel/sched/sched.h
// used in select_idle_cpu/__select_idle_sibling/select_idle_sibling_cstate_aware/set_cpu_sd_state_busy/set_cpu_sd_state_idle/nohz_kick_needed
DECLARE_PER_CPU(struct sched_domain *, sd_llc);
DECLARE_PER_CPU(int, sd_llc_size);				// used by wake_wide() in the wakeup path of fair.c
DECLARE_PER_CPU(int, sd_llc_id);				// cpus_share_cache() compares this value for two CPUs to decide whether they share a cache
DECLARE_PER_CPU(struct sched_domain_shared *, sd_llc_shared);	// set_idle_cores/test_idle_cores
DECLARE_PER_CPU(struct sched_domain *, sd_numa);		// used in task_numa_migrate()
DECLARE_PER_CPU(struct sched_domain *, sd_asym);		// used in nohz_kick_needed() in fair.c
DECLARE_PER_CPU(struct sched_domain *, sd_ea);			// used in find_best_target()
DECLARE_PER_CPU(struct sched_domain *, sd_scs);			// used in compute_energy/mtk_idle_power/mtk_busy_power
```

Update function:

```c
// sched_init_smp --> init_sched_domains --> build_sched_domains --> cpu_attach_domain --> update_top_cache_domain
static void update_top_cache_domain(int cpu)
{
	struct sched_domain_shared *sds = NULL;
	struct sched_domain *sd;
	struct sched_domain *ea_sd = NULL;
	int id = cpu;
	int size = 1;

	/* find the highest domain with SD_SHARE_PKG_RESOURCES and initialize from it */
	sd = highest_flag_domain(cpu, SD_SHARE_PKG_RESOURCES);
	if (sd) {
		id = cpumask_first(sched_domain_span(sd));
		size = cpumask_weight(sched_domain_span(sd));
		sds = sd->shared;
	}

	rcu_assign_pointer(per_cpu(sd_llc, cpu), sd);
	per_cpu(sd_llc_size, cpu) = size;
	per_cpu(sd_llc_id, cpu) = id;
	rcu_assign_pointer(per_cpu(sd_llc_shared, cpu), sds);

	/* the walk ends when parent is NULL; returns NULL if no domain has the flag */
	sd = lowest_flag_domain(cpu, SD_NUMA);
	rcu_assign_pointer(per_cpu(sd_numa, cpu), sd);

	sd = highest_flag_domain(cpu, SD_ASYM_PACKING);
	rcu_assign_pointer(per_cpu(sd_asym, cpu), sd);

	/* walk bottom-up, remembering the last domain whose groups have energy data (sge) */
	for_each_domain(cpu, sd) {
		if (sd->groups->sge)
			ea_sd = sd;
		else
			break;
	}
	rcu_assign_pointer(per_cpu(sd_ea, cpu), ea_sd);

	sd = highest_flag_domain(cpu, SD_SHARE_CAP_STATES);
	rcu_assign_pointer(per_cpu(sd_scs, cpu), sd);
}

/* flags function passed in the MC entry of default_topology */
static inline int cpu_core_flags(void)
{
	return SD_SHARE_PKG_RESOURCES;
}

static inline struct sched_domain *highest_flag_domain(int cpu, int flag)
{
	struct sched_domain *sd, *hsd = NULL;

	for_each_domain(cpu, sd) {	/* search upward from the lowest (child) domain */
		if (!(sd->flags & flag))
			break;
		hsd = sd;
	}

	return hsd;	/* for SD_SHARE_PKG_RESOURCES this is the MC-level sd: DIE lacks the flag */
}
```

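From highest_flag_domain() and the flags function passed for the MC level (cpu_core_flags() returns SD_SHARE_PKG_RESOURCES), the domain obtained is the MC-level one: in the debug output the MC flags (0x823f) include SD_SHARE_PKG_RESOURCES while the DIE flags (0x107f) do not. In other words, the CPUs within a cluster share a cache (presumably the L2 cache), except for the big core CPU7, which is alone in its cluster.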
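The walk in highest_flag_domain() and lowest_flag_domain() can be reproduced in user space on a two-level chain carrying the flag values printed in the debug output (MC 0x823f, DIE 0x107f). The struct and function names below are hypothetical, and the flag constants are the values from 4.x-era include/linux/sched/topology.h, which is an assumption to verify against your tree.

```c
#include <assert.h>
#include <stddef.h>

/* Values as in 4.x-era include/linux/sched/topology.h (verify in your tree). */
#define SD_SHARE_PKG_RESOURCES	0x0200
#define SD_PREFER_SIBLING	0x1000
#define SD_NUMA			0x4000

/* Minimal stand-in for a sched_domain: only the parent link and flags. */
struct sd_model {
	struct sd_model *parent;
	const char *name;
	int flags;
};

/* Same walk as highest_flag_domain(): start at the lowest domain, stop at
 * the first one lacking the flag, return the last one that had it. */
static struct sd_model *highest_flag_domain_model(struct sd_model *base, int flag)
{
	struct sd_model *sd, *hsd = NULL;

	for (sd = base; sd; sd = sd->parent) {
		if (!(sd->flags & flag))
			break;
		hsd = sd;
	}
	return hsd;
}

/* Same walk as lowest_flag_domain(): the first domain that has the flag,
 * or NULL if the walk runs off the top. */
static struct sd_model *lowest_flag_domain_model(struct sd_model *base, int flag)
{
	struct sd_model *sd;

	for (sd = base; sd; sd = sd->parent)
		if (sd->flags & flag)
			break;
	return sd;
}
```

Starting from an MC domain whose parent is DIE, the SD_SHARE_PKG_RESOURCES lookup returns the MC domain. Starting directly from DIE (CPU7's rq->sd) it returns NULL, which matches sd_llc = (null) for CPU7. The SD_NUMA lookup returns NULL for both.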

Debug output of these members for each CPU:

```
cache: sd = 000000007607d14d, size = 4, id = 0, sds= 00000000d3d7a536, sdnuma = (null), sdasym = (null), sdea = 0000000005eb165b, sdscs=000000007607d14d
cache: sd = 0000000073059b52, size = 4, id = 0, sds= 00000000d3d7a536, sdnuma = (null), sdasym = (null), sdea = 000000001b3b27f3, sdscs=0000000073059b52
cache: sd = 000000005b94d1f5, size = 4, id = 0, sds= 00000000d3d7a536, sdnuma = (null), sdasym = (null), sdea = 000000009c9ce4e7, sdscs=000000005b94d1f5
cache: sd = 0000000034f5aa86, size = 4, id = 0, sds= 00000000d3d7a536, sdnuma = (null), sdasym = (null), sdea = 00000000aa75a8a2, sdscs=0000000034f5aa86
cache: sd = 0000000015506191, size = 3, id = 4, sds= 000000001048be02, sdnuma = (null), sdasym = (null), sdea = 00000000375c082c, sdscs=0000000015506191
cache: sd = 0000000013e36405, size = 3, id = 4, sds= 000000001048be02, sdnuma = (null), sdasym = (null), sdea = 00000000f629682d, sdscs=0000000013e36405
cache: sd = 00000000687439ee, size = 3, id = 4, sds= 000000001048be02, sdnuma = (null), sdasym = (null), sdea = 00000000d6ba54d5, sdscs=00000000687439ee
cache: sd = (null), size = 1, id = 7, sds= (null), sdnuma = (null), sdasym = (null), sdea = 00000000b81ad593, sdscs= (null)
```

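(1) NULL means no matching scheduling domain was found.

(2) sd_llc and sd_scs point to the same object; together with cpu_rq(cpu)->sd and the MC-level sd, all four are the same pointer. Since the big core CPU7 has no MC-level domain, both are NULL for it.

(3) sd_llc_size is the number of CPUs in this CPU's cluster (1 for CPU7).

(4) sd_llc_id is the ID of the first CPU in this CPU's cluster.

(5) sd_llc_shared: CPUs in the same cluster point to the same struct sched_domain_shared, which is also what the MC-level domain's sd->shared points to.

(6) sd_numa and sd_asym are NULL because no level in default_topology sets the corresponding flags (SD_NUMA, SD_ASYM_PACKING).

(7) sd_ea points to the same object as each CPU's DIE-level sched_domain.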
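Points (3) and (4), and the way cpus_share_cache() uses sd_llc_id, can be modelled in a few lines. This is a simplified sketch with hypothetical names: the LLC domain span is passed in as a bitmask (0 meaning no LLC domain was found, as for CPU7), and the size/id computation follows the cpumask_weight()/cpumask_first() calls in update_top_cache_domain().

```c
#include <assert.h>
#include <stdbool.h>

#define NR_CPUS 8

static int sd_llc_id[NR_CPUS];
static int sd_llc_size[NR_CPUS];

/*
 * Mirrors the sd_llc_id/sd_llc_size part of update_top_cache_domain():
 * llc_span is the span of the LLC domain as a bitmask, or 0 when
 * highest_flag_domain() found none (then id = cpu and size = 1).
 */
static void update_llc_model(int cpu, unsigned int llc_span)
{
	int id = cpu, size = 1;

	assert(cpu >= 0 && cpu < NR_CPUS);
	if (llc_span) {
		id = -1;
		size = 0;
		for (int i = 0; i < NR_CPUS; i++) {
			if (!(llc_span & (1u << i)))
				continue;
			if (id < 0)
				id = i;		/* like cpumask_first() */
			size++;			/* like cpumask_weight() */
		}
	}
	sd_llc_id[cpu] = id;
	sd_llc_size[cpu] = size;
}

/* Same comparison as the kernel's cpus_share_cache(). */
static bool cpus_share_cache_model(int this_cpu, int that_cpu)
{
	return sd_llc_id[this_cpu] == sd_llc_id[that_cpu];
}
```

Feeding it the spans 0-3, 4-6 and "none" for CPU7 reproduces the size/id columns of the output (4/0, 3/4, 1/7), and CPUs 4 and 6 share a cache while CPUs 3 and 4, or 6 and 7, do not.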

6. Debug print code

```c
/* Put this file under kernel/sched */
#define pr_fmt(fmt) "topo_debug: " fmt

#include <linux/fs.h>
#include <linux/sched.h>
#include <linux/proc_fs.h>
#include <linux/seq_file.h>
#include <linux/string.h>
#include <linux/printk.h>
#include <linux/uaccess.h>	/* copy_from_user() */
#include <asm/topology.h>
#include <linux/cpumask.h>
#include <linux/sched/topology.h>
#include "sched.h"

extern struct cpu_topology cpu_topology[NR_CPUS];
/* requires dropping the static qualifier where sched_domain_topology is defined */
extern struct sched_domain_topology_level *sched_domain_topology;

#define for_each_sd_topology(tl) \
	for (tl = sched_domain_topology; tl->mask; tl++)

struct domain_topo_debug_t {
	int cmd;
};

static struct domain_topo_debug_t dtd;

static void debug_printf_sched_domain(struct seq_file *m,
		struct sched_domain *sd, char *level, int cpu)
{
	if (!sd) {
		if (level)
			seq_printf(m, "TL = %s, cpu = %d, sd = NULL\n", level, cpu);
		else
			seq_printf(m, "sd = NULL\n");
	} else {
		seq_printf(m, "sched_domain sd = %p: parent = %p, child = %p, groups = %p, name = %s, private = %p, shared = %p, span = %*pbl, flags=0x%x\n",
			sd, sd->parent, sd->child, sd->groups, sd->name,
			sd->private, sd->shared,
			cpumask_pr_args(to_cpumask(sd->span)), sd->flags);
	}
}

static void debug_printf_sched_group(struct seq_file *m,
		struct sched_group *sg, char *level, int cpu)
{
	if (!sg) {
		if (level)
			seq_printf(m, "TL = %s, cpu = %d, sg = NULL\n", level, cpu);
		else
			seq_printf(m, "sg = NULL\n");
	} else {
		seq_printf(m, "sched_group sg = %p: next = %p, ref = %d, group_weight = %d, sgc = %p, asym_prefer_cpu = %d, sge = %p, cpumask = %*pbl\n",
			sg, sg->next, atomic_read(&sg->ref), sg->group_weight,
			sg->sgc, sg->asym_prefer_cpu, sg->sge,
			cpumask_pr_args(to_cpumask(sg->cpumask)));
	}
}

static void debug_printf_sched_group_capacity(struct seq_file *m,
		struct sched_group_capacity *sgc, char *level, int cpu)
{
	if (!sgc) {
		if (level)
			seq_printf(m, "TL = %s, cpu = %d, sgc = NULL\n", level, cpu);
		else
			seq_printf(m, "sgc = NULL\n");
	} else {
		/* capacity, min/max_capacity and next_update are unsigned long */
		seq_printf(m, "sched_group_capacity sgc = %p: ref = %d, capacity = %lu, min_capacity = %lu, max_capacity = %lu, next_update = %lu, imbalance = %d, id = %d, cpumask = %*pbl\n",
			sgc, atomic_read(&sgc->ref), sgc->capacity,
			sgc->min_capacity, sgc->max_capacity, sgc->next_update,
			sgc->imbalance, sgc->id,
			cpumask_pr_args(to_cpumask(sgc->cpumask)));
	}
}

static void debug_printf_sched_domain_shared(struct seq_file *m,
		struct sched_domain_shared *sds, char *level, int cpu)
{
	if (!sds) {
		if (level)
			seq_printf(m, "TL = %s, cpu = %d, sds = NULL\n", level, cpu);
		else
			seq_printf(m, "sds = NULL\n");
	} else {
		seq_printf(m, "sched_domain_shared sds = %p: ref = %d, nr_busy_cpus = %d, has_idle_cores = %d, overutilized = %d\n",
			sds, atomic_read(&sds->ref), atomic_read(&sds->nr_busy_cpus),
			sds->has_idle_cores, sds->overutilized);
	}
}

/* Dump what is left in tl->data (sd_data) for one level and one CPU. */
static void debug_printf_sd_sds_sg_sgc(struct seq_file *m,
		struct sched_domain_topology_level *tl, int cpu)
{
	struct sd_data *sdd = &tl->data;

	seq_printf(m, "-------------------------TL = %s, cpu = %d, sdd = %p, %s--------------------------------\n",
		tl->name, cpu, sdd, __func__);

	if (!sdd->sd)
		seq_printf(m, "TL = %s, cpu = %d, sdd->sd = NULL\n", tl->name, cpu);
	else
		debug_printf_sched_domain(m, *per_cpu_ptr(sdd->sd, cpu), tl->name, cpu);

	if (!sdd->sds)
		seq_printf(m, "TL = %s, cpu = %d, sdd->sds = NULL\n", tl->name, cpu);
	else
		debug_printf_sched_domain_shared(m, *per_cpu_ptr(sdd->sds, cpu), tl->name, cpu);

	if (!sdd->sg)
		seq_printf(m, "TL = %s, cpu = %d, sdd->sg = NULL\n", tl->name, cpu);
	else
		debug_printf_sched_group(m, *per_cpu_ptr(sdd->sg, cpu), tl->name, cpu);

	if (!sdd->sgc)
		seq_printf(m, "TL = %s, cpu = %d, sdd->sgc = NULL\n", tl->name, cpu);
	else
		debug_printf_sched_group_capacity(m, *per_cpu_ptr(sdd->sgc, cpu), tl->name, cpu);

	seq_printf(m, "-------------------------------------------------\n");
}

/* Print one domain plus its groups, group capacity and shared data, each line prefixed with path. */
static void debug_printf_sd_chain(struct seq_file *m, struct sched_domain *sd,
		const char *path, int cpu)
{
	if (!sd)
		return;

	seq_printf(m, "%s: ", path);
	debug_printf_sched_domain(m, sd, NULL, cpu);
	seq_printf(m, "%s->groups: ", path);
	debug_printf_sched_group(m, sd->groups, NULL, cpu);
	if (sd->groups) {
		seq_printf(m, "%s->groups->sgc: ", path);
		debug_printf_sched_group_capacity(m, sd->groups->sgc, NULL, cpu);
	}
	seq_printf(m, "%s->shared: ", path);
	debug_printf_sched_domain_shared(m, sd->shared, NULL, cpu);
}

/* Walk the domains attached to the rq: sd, parent, child, parent->parent, child->child. */
static void debug_printf_sd_sds_sg_sgc_cpu_rq(struct seq_file *m, int cpu)
{
	struct rq *rq = cpu_rq(cpu);
	struct sched_domain *sd = rq->sd;

	seq_printf(m, "---------------------cpu=%d, %s----------------------\n", cpu, __func__);

	debug_printf_sd_chain(m, sd, "rq->sd", cpu);
	if (sd) {
		debug_printf_sd_chain(m, sd->parent, "rq->sd->parent", cpu);
		debug_printf_sd_chain(m, sd->child, "rq->sd->child", cpu);
		if (sd->parent)
			debug_printf_sd_chain(m, sd->parent->parent, "rq->sd->parent->parent", cpu);
		if (sd->child)
			debug_printf_sd_chain(m, sd->child->child, "rq->sd->child->child", cpu);
	}

	seq_printf(m, "-------------------------------------------------\n");
}

static void debug_printf_cpu_rq(struct seq_file *m, int cpu)
{
	struct callback_head *callback;
	struct rq *rq = cpu_rq(cpu);

	seq_printf(m, "---------------------cpu=%d, %s----------------------\n", cpu, __func__);
	seq_printf(m, "rq = %p: rd = %p, sd = %p, cpu_capacity = %lu, cpu_capacity_orig = %lu, max_idle_balance_cost = %lu\n",
		rq, rq->rd, rq->sd, rq->cpu_capacity, rq->cpu_capacity_orig,
		rq->max_idle_balance_cost);

	seq_printf(m, "balance_callback: ");
	for (callback = rq->balance_callback; callback; callback = callback->next)
		seq_printf(m, "%pf ", callback->func);
	seq_printf(m, "\n-------------------------------------------------\n");
}

static void debug_printf_root_domain(struct seq_file *m)
{
	struct rq *rq = cpu_rq(0);
	struct root_domain *rd = rq->rd;

	seq_printf(m, "---------------------%s----------------------\n", __func__);
	if (rd)
		seq_printf(m, "refcount = %d, span = %*pbl, max_cpu_capacity.val = %lu, max_cpu_capacity.cpu = %d, max_cap_orig_cpu = %d, min_cap_orig_cpu = %d\n",
			atomic_read(&rd->refcount), cpumask_pr_args(rd->span),
			rd->max_cpu_capacity.val, rd->max_cpu_capacity.cpu,
			rd->max_cap_orig_cpu, rd->min_cap_orig_cpu);
	seq_printf(m, "-------------------------------------------------\n");
}

static void debug_printf_cpu_topology(struct seq_file *m)
{
	int i;
	struct cpu_topology *ct = cpu_topology;

	seq_printf(m, "---------------------%s----------------------\n", __func__);
	for (i = 0; i < NR_CPUS; i++) {
		seq_printf(m, "thread_id = %d, core_id = %d, cluster_id = %d, thread_sibling = %*pbl, core_sibling = %*pbl\n",
			ct->thread_id, ct->core_id, ct->cluster_id,
			cpumask_pr_args(&ct->thread_sibling),
			cpumask_pr_args(&ct->core_sibling));
		ct++;	/* advance to the next CPU */
	}
	seq_printf(m, "-------------------------------------------------\n");
}

/* Caller must hold rcu_read_lock(): the sd_* per-CPU pointers are RCU-protected. */
static void debug_printf_cache(struct seq_file *m, int cpu)
{
	struct sched_domain *sd, *sdnuma, *sdasym, *sdea, *sdscs;
	struct sched_domain_shared *sds;
	int size, id;

	sd = rcu_dereference(per_cpu(sd_llc, cpu));
	size = per_cpu(sd_llc_size, cpu);
	id = per_cpu(sd_llc_id, cpu);
	sds = rcu_dereference(per_cpu(sd_llc_shared, cpu));
	sdnuma = rcu_dereference(per_cpu(sd_numa, cpu));
	sdasym = rcu_dereference(per_cpu(sd_asym, cpu));
	sdea = rcu_dereference(per_cpu(sd_ea, cpu));
	sdscs = rcu_dereference(per_cpu(sd_scs, cpu));

	seq_printf(m, "---------------------%s----------------------\n", __func__);
	seq_printf(m, "cache: sd = %p, size = %d, id = %d, sds= %p, sdnuma = %p, sdasym = %p, sdea = %p, sdscs=%p\n",
		sd, size, id, sds, sdnuma, sdasym, sdea, sdscs);
	seq_printf(m, "-------------------------------------------------\n");
}

static void debug_printf_all(struct seq_file *m, const struct cpumask *cpu_mask)
{
	int cpu;
	struct sched_domain_topology_level *tl;

	for_each_cpu(cpu, cpu_mask) {
		for_each_sd_topology(tl)
			debug_printf_sd_sds_sg_sgc(m, tl, cpu);
	}

	/* separate loop so the output is easier to read; rq->sd and sd_* are RCU-protected */
	rcu_read_lock();
	for_each_cpu(cpu, cpu_mask) {
		debug_printf_cpu_rq(m, cpu);
		debug_printf_sd_sds_sg_sgc_cpu_rq(m, cpu);
		debug_printf_cache(m, cpu);
	}
	rcu_read_unlock();

	debug_printf_root_domain(m);
	debug_printf_cpu_topology(m);
}

static ssize_t domain_topo_debug_write(struct file *file, const char __user *buf,
		size_t count, loff_t *ppos)
{
	int ret, cmd_value;
	char buffer[32] = {0};

	if (count >= sizeof(buffer))
		count = sizeof(buffer) - 1;

	if (copy_from_user(buffer, buf, count)) {
		pr_info("copy_from_user failed\n");
		return -EFAULT;
	}

	ret = sscanf(buffer, "%d", &cmd_value);
	if (ret <= 0) {
		pr_info("sscanf dec failed\n");
		return -EINVAL;
	}

	pr_info("cmd_value=%d\n", cmd_value);
	dtd.cmd = cmd_value;

	return count;
}

static int domain_topo_debug_show(struct seq_file *m, void *v)
{
	switch (dtd.cmd) {
	case 0:
		debug_printf_all(m, cpu_possible_mask);
		break;
	case 1:
		debug_printf_all(m, cpu_online_mask);
		break;
	case 2:
		debug_printf_all(m, cpu_present_mask);
		break;
	case 3:
		debug_printf_all(m, cpu_active_mask);
		break;
	case 4:
		/* cpu_isolated_mask comes from the vendor/Android tree, not mainline */
		debug_printf_all(m, cpu_isolated_mask);
		break;
	default:
		pr_info("dtd.cmd is invalid!\n");
		break;
	}

	return 0;
}

static int domain_topo_debug_open(struct inode *inode, struct file *file)
{
	return single_open(file, domain_topo_debug_show, NULL);
}

static const struct file_operations domain_topo_debug_fops = {
	.open		= domain_topo_debug_open,
	.read		= seq_read,
	.write		= domain_topo_debug_write,
	.llseek		= seq_lseek,
	.release	= single_release,
};

static int __init domain_topo_debug_init(void)
{
	proc_create("domain_topo_debug", S_IRUGO | S_IWUGO, NULL,
		    &domain_topo_debug_fops);
	pr_info("domain_topo_debug probed\n");
	return 0;
}
fs_initcall(domain_topo_debug_init);
```

Debug output:

    # cat domain_topo_debug
    -------------------------TL = MC, cpu = 0, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 0, sdd->sd = NULL
    TL = MC, cpu = 0, sdd->sds = NULL
    TL = MC, cpu = 0, sdd->sg = NULL
    TL = MC, cpu = 0, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 0, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 0, sdd->sd = NULL
    TL = DIE, cpu = 0, sdd->sds = NULL
    TL = DIE, cpu = 0, sdd->sg = NULL
    TL = DIE, cpu = 0, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = MC, cpu = 1, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 1, sdd->sd = NULL
    TL = MC, cpu = 1, sdd->sds = NULL
    TL = MC, cpu = 1, sdd->sg = NULL
    TL = MC, cpu = 1, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 1, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 1, sdd->sd = NULL
    TL = DIE, cpu = 1, sdd->sds = NULL
    TL = DIE, cpu = 1, sdd->sg = NULL
    TL = DIE, cpu = 1, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = MC, cpu = 2, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 2, sdd->sd = NULL
    TL = MC, cpu = 2, sdd->sds = NULL
    TL = MC, cpu = 2, sdd->sg = NULL
    TL = MC, cpu = 2, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 2, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 2, sdd->sd = NULL
    TL = DIE, cpu = 2, sdd->sds = NULL
    TL = DIE, cpu = 2, sdd->sg = NULL
    TL = DIE, cpu = 2, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = MC, cpu = 3, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 3, sdd->sd = NULL
    TL = MC, cpu = 3, sdd->sds = NULL
    TL = MC, cpu = 3, sdd->sg = NULL
    TL = MC, cpu = 3, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 3, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 3, sdd->sd = NULL
    TL = DIE, cpu = 3, sdd->sds = NULL
    TL = DIE, cpu = 3, sdd->sg = NULL
    TL = DIE, cpu = 3, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = MC, cpu = 4, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 4, sdd->sd = NULL
    TL = MC, cpu = 4, sdd->sds = NULL
    TL = MC, cpu = 4, sdd->sg = NULL
    TL = MC, cpu = 4, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 4, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 4, sdd->sd = NULL
    TL = DIE, cpu = 4, sdd->sds = NULL
    TL = DIE, cpu = 4, sdd->sg = NULL
    TL = DIE, cpu = 4, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = MC, cpu = 5, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 5, sdd->sd = NULL
    TL = MC, cpu = 5, sdd->sds = NULL
    TL = MC, cpu = 5, sdd->sg = NULL
    TL = MC, cpu = 5, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 5, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 5, sdd->sd = NULL
    TL = DIE, cpu = 5, sdd->sds = NULL
    TL = DIE, cpu = 5, sdd->sg = NULL
    TL = DIE, cpu = 5, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = MC, cpu = 6, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 6, sdd->sd = NULL
    TL = MC, cpu = 6, sdd->sds = NULL
    TL = MC, cpu = 6, sdd->sg = NULL
    TL = MC, cpu = 6, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 6, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 6, sdd->sd = NULL
    TL = DIE, cpu = 6, sdd->sds = NULL
    TL = DIE, cpu = 6, sdd->sg = NULL
    TL = DIE, cpu = 6, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = MC, cpu = 7, sdd = 000000009e66189d, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = MC, cpu = 7, sdd->sd = NULL
    TL = MC, cpu = 7, sdd->sds = NULL
    TL = MC, cpu = 7, sdd->sg = NULL
    TL = MC, cpu = 7, sdd->sgc = NULL
    -------------------------------------------------
    -------------------------TL = DIE, cpu = 7, sdd = 0000000079bc9f22, debug_printf_sd_sds_sg_sgc--------------------------------
    TL = DIE, cpu = 7, sdd->sd = NULL
    TL = DIE, cpu = 7, sdd->sds = NULL
    TL = DIE, cpu = 7, sdd->sg = NULL
    TL = DIE, cpu = 7, sdd->sgc = NULL
    -------------------------------------------------
    ---------------------cpu=0, debug_printf_cpu_rq----------------------
    rq = 000000003429aaa4: rd = 000000001b6257a5, sd = 00000000d7f4f9d1, cpu_capacity = 230, cpu_capacity_orig = 241, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=1, debug_printf_cpu_rq----------------------
    rq = 00000000ed35f55b: rd = 000000001b6257a5, sd = 00000000b6a75a4c, cpu_capacity = 230, cpu_capacity_orig = 241, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=2, debug_printf_cpu_rq----------------------
    rq = 000000007ab2924d: rd = 000000001b6257a5, sd = 00000000baa86fe9, cpu_capacity = 230, cpu_capacity_orig = 241, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=3, debug_printf_cpu_rq----------------------
    rq = 00000000fccf4c48: rd = 000000001b6257a5, sd = 0000000088de345a, cpu_capacity = 230, cpu_capacity_orig = 241, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=4, debug_printf_cpu_rq----------------------
    rq = 000000002b3d7ce9: rd = 000000001b6257a5, sd = 00000000c5314037, cpu_capacity = 790, cpu_capacity_orig = 917, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=5, debug_printf_cpu_rq----------------------
    rq = 000000000ffd99ea: rd = 000000001b6257a5, sd = 00000000bcdabb79, cpu_capacity = 790, cpu_capacity_orig = 917, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=6, debug_printf_cpu_rq----------------------
    rq = 00000000ecf5161f: rd = 000000001b6257a5, sd = 00000000a7438b3c, cpu_capacity = 790, cpu_capacity_orig = 917, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=7, debug_printf_cpu_rq----------------------
    rq = 000000001e90f615: rd = 000000001b6257a5, sd = 00000000e6bf0308, cpu_capacity = 906, cpu_capacity_orig = 1024, max_idle_balance_cost = 200000
    balance_callback:
    -------------------------------------------------
    ---------------------cpu=0, debug_printf_sd_sds_sg_sgc_cpu_rq----------------------
    rq->sd: sched_domain sd = 00000000d7f4f9d1: parent = 000000002e35d366, child = (null), groups = 00000000020e03b4, name = MC, private = 000000009e66189d, shared = 0000000024396c1d, span = 0-3, flags=0x823f
    rq->sd->groups: sched_group sg = 00000000020e03b4: next = 00000000ab25b4ff, ref = 4, group_weight = 1, sgc = 00000000c0fb3d29, asym_prefer_cpu = 0, sge = 0000000045497311, cpumask = 0
    rq->sd->groups->sgc: sched_group_capacity sgc = 00000000c0fb3d29: ref = 4, capacity = 230, min_capacity = 230, max_capacity = 230, next_update = 71656, imbalance = 0, id = 0, cpumask = 0
    rq->sd->shared: sched_domain_shared sds = 0000000024396c1d: ref = 4, nr_busy_cpus = 1, has_idle_cores = 0, overutilized = 0
    rq->sd->parent: sched_domain sd = 000000002e35d366: parent = (null), child = 00000000d7f4f9d1, groups = 00000000e4ad4cdb, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f
    rq->sd->parent->groups: sched_group sg = 00000000e4ad4cdb: next = 0000000044121b65, ref = 8, group_weight = 4, sgc = 0000000076821083, asym_prefer_cpu = 0, sge = 00000000be40d7aa, cpumask = 0-3
    rq->sd->parent->groups->sgc: sched_group_capacity sgc = 0000000076821083: ref = 8, capacity = 920, min_capacity = 230, max_capacity = 230, next_update = 71662, imbalance = 0, id = 0, cpumask = 0-3
    rq->sd->parent->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0
    -------------------------------------------------
    ---------------------cpu=1, debug_printf_sd_sds_sg_sgc_cpu_rq----------------------
    rq->sd: sched_domain sd = 00000000b6a75a4c: parent = 0000000052738a20, child = (null), groups = 00000000ab25b4ff, name = MC, private = 000000009e66189d, shared = 0000000024396c1d, span = 0-3, flags=0x823f
    rq->sd->groups: sched_group sg = 00000000ab25b4ff: next = 00000000b80ca367, ref = 4, group_weight = 1, sgc = 00000000c7f86843, asym_prefer_cpu = 0, sge = 000000004ba0e6e4, cpumask = 1
    rq->sd->groups->sgc: sched_group_capacity sgc = 00000000c7f86843: ref = 4, capacity = 230, min_capacity = 230, max_capacity = 230, next_update = 71656, imbalance = 0, id = 1, cpumask = 1
    rq->sd->shared: sched_domain_shared sds = 0000000024396c1d: ref = 4, nr_busy_cpus = 1, has_idle_cores = 0, overutilized = 0
    rq->sd->parent: sched_domain sd = 0000000052738a20: parent = (null), child = 00000000b6a75a4c, groups = 00000000e4ad4cdb, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f
    rq->sd->parent->groups: sched_group sg = 00000000e4ad4cdb: next = 0000000044121b65, ref = 8, group_weight = 4, sgc = 0000000076821083, asym_prefer_cpu = 0, sge = 00000000be40d7aa, cpumask = 0-3
    rq->sd->parent->groups->sgc: sched_group_capacity sgc = 0000000076821083: ref = 8, capacity = 920, min_capacity = 230, max_capacity = 230, next_update = 71662, imbalance = 0, id = 0, cpumask = 0-3
    rq->sd->parent->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0
    -------------------------------------------------
    ---------------------cpu=2, debug_printf_sd_sds_sg_sgc_cpu_rq----------------------
    rq->sd: sched_domain sd = 00000000baa86fe9: parent = 00000000ad441716, child = (null), groups = 00000000b80ca367, name = MC, private = 000000009e66189d, shared = 0000000024396c1d, span = 0-3, flags=0x823f
    rq->sd->groups: sched_group sg = 00000000b80ca367: next = 00000000d5827f1a, ref = 4, group_weight = 1, sgc = 00000000186b5ec8, asym_prefer_cpu = 0, sge = 00000000ad61ee0b, cpumask = 2
    rq->sd->groups->sgc: sched_group_capacity sgc = 00000000186b5ec8: ref = 4, capacity = 230, min_capacity = 230, max_capacity = 230, next_update = 71655, imbalance = 0, id = 2, cpumask = 2
    rq->sd->shared: sched_domain_shared sds = 0000000024396c1d: ref = 4, nr_busy_cpus = 1, has_idle_cores = 0, overutilized = 0
    rq->sd->parent: sched_domain sd = 00000000ad441716: parent = (null), child = 00000000baa86fe9, groups = 00000000e4ad4cdb, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f
    rq->sd->parent->groups: sched_group sg = 00000000e4ad4cdb: next = 0000000044121b65, ref = 8, group_weight = 4, sgc = 0000000076821083, asym_prefer_cpu = 0, sge = 00000000be40d7aa, cpumask = 0-3
    rq->sd->parent->groups->sgc: sched_group_capacity sgc = 0000000076821083: ref = 8, capacity = 920, min_capacity = 230, max_capacity = 230, next_update = 71662, imbalance = 0, id = 0, cpumask = 0-3
    rq->sd->parent->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0
    -------------------------------------------------
    ---------------------cpu=3, debug_printf_sd_sds_sg_sgc_cpu_rq----------------------
    rq->sd: sched_domain sd = 0000000088de345a: parent = 00000000f40b8d8f, child = (null), groups = 00000000d5827f1a, name = MC, private = 000000009e66189d, shared = 0000000024396c1d, span = 0-3, flags=0x823f
    rq->sd->groups: sched_group sg = 00000000d5827f1a: next = 00000000020e03b4, ref = 4, group_weight = 1, sgc = 00000000cd39f15f, asym_prefer_cpu = 0, sge = 000000002fe36ed1, cpumask = 3
    rq->sd->groups->sgc: sched_group_capacity sgc = 00000000cd39f15f: ref = 4, capacity = 230, min_capacity = 230, max_capacity = 230, next_update = 71661, imbalance = 0, id = 3, cpumask = 3
    rq->sd->shared: sched_domain_shared sds = 0000000024396c1d: ref = 4, nr_busy_cpus = 1, has_idle_cores = 0, overutilized = 0
    rq->sd->parent: sched_domain sd = 00000000f40b8d8f: parent = (null), child = 0000000088de345a, groups = 00000000e4ad4cdb, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f
    rq->sd->parent->groups: sched_group sg = 00000000e4ad4cdb: next = 0000000044121b65, ref = 8, group_weight = 4, sgc = 0000000076821083, asym_prefer_cpu = 0, sge = 00000000be40d7aa, cpumask = 0-3
    rq->sd->parent->groups->sgc: sched_group_capacity sgc = 0000000076821083: ref = 8, capacity = 920, min_capacity = 230, max_capacity = 230, next_update = 71662, imbalance = 0, id = 0, cpumask = 0-3
    rq->sd->parent->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0
    -------------------------------------------------
    ---------------------cpu=4, debug_printf_sd_sds_sg_sgc_cpu_rq----------------------
    rq->sd: sched_domain sd = 00000000c5314037: parent = 000000009aaa92f2, child = (null), groups = 0000000021c86a5c, name = MC, private = 000000009e66189d, shared = 000000007b30bb71, span = 4-6, flags=0x823f
    rq->sd->groups: sched_group sg = 0000000021c86a5c: next = 
000000007e6c07b8, ref = 3, group_weight = 1, sgc = 00000000ae0ee0b8, asym_prefer_cpu = 0, sge = 00000000e1287c6b, cpumask = 4 rq->sd->groups->sgc: sched_group_capacity sgc = 00000000ae0ee0b8: ref = 3, capacity = 790, min_capacity = 790, max_capacity = 790, next_update = 71257, imbalance = 0, id = 4, cpumask = 4 rq->sd->shared: sched_domain_shared sds = 000000007b30bb71: ref = 3, nr_busy_cpus = 1, has_idle_cores = 0, overutilized = 0 rq->sd->parent: sched_domain sd = 000000009aaa92f2: parent = (null), child = 00000000c5314037, groups = 0000000044121b65, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f rq->sd->parent->groups: sched_group sg = 0000000044121b65: next = 000000000679d876, ref = 8, group_weight = 3, sgc = 000000006f38e38f, asym_prefer_cpu = 0, sge = 0000000000aea201, cpumask = 4-6 rq->sd->parent->groups->sgc: sched_group_capacity sgc = 000000006f38e38f: ref = 8, capacity = 2370, min_capacity = 790, max_capacity = 790, next_update = 71260, imbalance = 0, id = 4, cpumask = 4-6 rq->sd->parent->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0 ------------------------------------------------- ---------------------cpu=5, debug_printf_sd_sds_sg_sgc_cpu_rq---------------------- rq->sd: sched_domain sd = 00000000bcdabb79: parent = 000000005357fe0e, child = (null), groups = 000000007e6c07b8, name = MC, private = 000000009e66189d, shared = 000000007b30bb71, span = 4-6, flags=0x823f rq->sd->groups: sched_group sg = 000000007e6c07b8: next = 000000006871b1c7, ref = 3, group_weight = 1, sgc = 000000008745da85, asym_prefer_cpu = 0, sge = 00000000ff253c30, cpumask = 5 rq->sd->groups->sgc: sched_group_capacity sgc = 000000008745da85: ref = 3, capacity = 790, min_capacity = 790, max_capacity = 790, next_update = 70617, imbalance = 0, id = 5, cpumask = 5 rq->sd->shared: sched_domain_shared sds = 000000007b30bb71: ref = 3, nr_busy_cpus = 1, has_idle_cores = 0, 
overutilized = 0 rq->sd->parent: sched_domain sd = 000000005357fe0e: parent = (null), child = 00000000bcdabb79, groups = 0000000044121b65, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f rq->sd->parent->groups: sched_group sg = 0000000044121b65: next = 000000000679d876, ref = 8, group_weight = 3, sgc = 000000006f38e38f, asym_prefer_cpu = 0, sge = 0000000000aea201, cpumask = 4-6 rq->sd->parent->groups->sgc: sched_group_capacity sgc = 000000006f38e38f: ref = 8, capacity = 2370, min_capacity = 790, max_capacity = 790, next_update = 71260, imbalance = 0, id = 4, cpumask = 4-6 rq->sd->parent->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0 ------------------------------------------------- ---------------------cpu=6, debug_printf_sd_sds_sg_sgc_cpu_rq---------------------- rq->sd: sched_domain sd = 00000000a7438b3c: parent = 00000000779b3b0b, child = (null), groups = 000000006871b1c7, name = MC, private = 000000009e66189d, shared = 000000007b30bb71, span = 4-6, flags=0x823f rq->sd->groups: sched_group sg = 000000006871b1c7: next = 0000000021c86a5c, ref = 3, group_weight = 1, sgc = 0000000043aa756f, asym_prefer_cpu = 0, sge = 000000000c95e6d7, cpumask = 6 rq->sd->groups->sgc: sched_group_capacity sgc = 0000000043aa756f: ref = 3, capacity = 790, min_capacity = 790, max_capacity = 790, next_update = 70116, imbalance = 0, id = 6, cpumask = 6 rq->sd->shared: sched_domain_shared sds = 000000007b30bb71: ref = 3, nr_busy_cpus = 1, has_idle_cores = 0, overutilized = 0 rq->sd->parent: sched_domain sd = 00000000779b3b0b: parent = (null), child = 00000000a7438b3c, groups = 0000000044121b65, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f rq->sd->parent->groups: sched_group sg = 0000000044121b65: next = 000000000679d876, ref = 8, group_weight = 3, sgc = 000000006f38e38f, asym_prefer_cpu = 0, sge = 0000000000aea201, cpumask = 4-6 
rq->sd->parent->groups->sgc: sched_group_capacity sgc = 000000006f38e38f: ref = 8, capacity = 2370, min_capacity = 790, max_capacity = 790, next_update = 71260, imbalance = 0, id = 4, cpumask = 4-6 rq->sd->parent->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0 ------------------------------------------------- ---------------------cpu=7, debug_printf_sd_sds_sg_sgc_cpu_rq---------------------- rq->sd: sched_domain sd = 00000000e6bf0308: parent = (null), child = (null), groups = 000000000679d876, name = DIE, private = 0000000079bc9f22, shared = 000000001c246b7a, span = 0-7, flags=0x107f rq->sd->groups: sched_group sg = 000000000679d876: next = 00000000e4ad4cdb, ref = 8, group_weight = 1, sgc = 0000000011c34dc4, asym_prefer_cpu = 0, sge = 00000000287ffa86, cpumask = 7 rq->sd->groups->sgc: sched_group_capacity sgc = 0000000011c34dc4: ref = 8, capacity = 906, min_capacity = 906, max_capacity = 906, next_update = 70126, imbalance = 0, id = 7, cpumask = 7 rq->sd->shared: sched_domain_shared sds = 000000001c246b7a: ref = 8, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0 ------------------------------------------------- ---------------------debug_printf_cache---------------------- cache: sd = 00000000d7f4f9d1, size = 4, id = 0, sds= 0000000024396c1d, sdnuma = (null), sdasym = (null), sdea = 000000002e35d366, sdscs=00000000d7f4f9d1 ------------------------------------------------- ---------------------debug_printf_cache---------------------- cache: sd = 00000000b6a75a4c, size = 4, id = 0, sds= 0000000024396c1d, sdnuma = (null), sdasym = (null), sdea = 0000000052738a20, sdscs=00000000b6a75a4c ------------------------------------------------- ---------------------debug_printf_cache---------------------- cache: sd = 00000000baa86fe9, size = 4, id = 0, sds= 0000000024396c1d, sdnuma = (null), sdasym = (null), sdea = 00000000ad441716, sdscs=00000000baa86fe9 ------------------------------------------------- 
---------------------debug_printf_cache---------------------- cache: sd = 0000000088de345a, size = 4, id = 0, sds= 0000000024396c1d, sdnuma = (null), sdasym = (null), sdea = 00000000f40b8d8f, sdscs=0000000088de345a ------------------------------------------------- ---------------------debug_printf_cache---------------------- cache: sd = 00000000c5314037, size = 3, id = 4, sds= 000000007b30bb71, sdnuma = (null), sdasym = (null), sdea = 000000009aaa92f2, sdscs=00000000c5314037 ------------------------------------------------- ---------------------debug_printf_cache---------------------- cache: sd = 00000000bcdabb79, size = 3, id = 4, sds= 000000007b30bb71, sdnuma = (null), sdasym = (null), sdea = 000000005357fe0e, sdscs=00000000bcdabb79 ------------------------------------------------- ---------------------debug_printf_cache---------------------- cache: sd = 00000000a7438b3c, size = 3, id = 4, sds= 000000007b30bb71, sdnuma = (null), sdasym = (null), sdea = 00000000779b3b0b, sdscs=00000000a7438b3c ------------------------------------------------- ---------------------debug_printf_cache---------------------- cache: sd = (null), size = 1, id = 7, sds= (null), sdnuma = (null), sdasym = (null), sdea = 00000000e6bf0308, sdscs= (null) ------------------------------------------------- ---------------------debug_printf_root_domain---------------------- refcount = 8, span = 0-7, max_cpu_capacity.val = 906, max_cpu_capacity.cpu = 7, max_cap_orig_cpu = 7, min_cap_orig_cpu = 0 ------------------------------------------------- ---------------------debug_printf_cpu_topology---------------------- thread_id = -1, core_id = 0, cluster_id = 0, thread_sibling = 0, core_sibling = 0-3 thread_id = -1, core_id = 1, cluster_id = 0, thread_sibling = 1, core_sibling = 0-3 thread_id = -1, core_id = 2, cluster_id = 0, thread_sibling = 2, core_sibling = 0-3 thread_id = -1, core_id = 3, cluster_id = 0, thread_sibling = 3, core_sibling = 0-3 thread_id = -1, core_id = 0, cluster_id = 1, 
thread_sibling = 4, core_sibling = 4-6 thread_id = -1, core_id = 1, cluster_id = 1, thread_sibling = 5, core_sibling = 4-6 thread_id = -1, core_id = 2, cluster_id = 1, thread_sibling = 6, core_sibling = 4-6 thread_id = -1, core_id = 0, cluster_id = 2, thread_sibling = 7, core_sibling = 7 -------------------------------------------------

7. The Qcom BSP shows the same state.

8. The sched_domain sd->flags bitmask is tested as an on/off switch in many places in the code and appears to be effectively constant. These flags are also exported through /proc/sys/kernel/sched_domain/cpuX/domainY/flags.

III. Debugging the effects of CPU isolation and offline

1. An isolated CPU's sched_group->group_weight is 0. For example, when CPU4 in the mid cluster is isolated, CPU4's sched_group->group_weight = 0, and the mid cluster's DIE-level sched_group->group_weight = 2 (3 - 1 = 2).

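2. An offline CPU has rq->sd = NULL, which detaches it from the entire scheduling-domain hierarchy, because a CPU reaches its domains through rq->sd. Its cache-related per-CPU pointers all become NULL as well (they are restored once the CPU is brought back online). The group_weight of the little cluster's DIE-level sched_group likewise no longer counts the offline CPU.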

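3. When CPU7, the only big core, is isolated, the singly linked list formed by the DIE-level sched_group.next pointers does not change. When CPU7 is taken offline, the DIE-level sched_group.next pointers of the little and mid clusters point at each other, and no big-core group remains.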

4. When a CPU is isolated or taken offline, the capacity in its DIE-level sd->groups->sgc changes as well: it becomes the sum of the capacities of the CPUs in that cluster that are online and not isolated.

Effect of offlining CPU2:

---------------------cpu=2, debug_printf_cpu_rq----------------------
rq = 000000001f8a123c: rd = 0000000035a93b24, sd = (null), cpu_capacity = 230, cpu_capacity_orig = 241, max_idle_balance_cost = 200000
balance_callback:
-------------------------------------------------
---------------------cpu=2, debug_printf_sd_sds_sg_sgc_cpu_rq----------------------
-------------------------------------------------
---------------------debug_printf_cache----------------------
cache: sd = (null), size = 1, id = 2, sds= (null), sdnuma = (null), sdasym = (null), sdea = (null), sdscs= (null)
-------------------------------------------------

Effect of additionally isolating CPU3 (with CPU2 still offline):

---------------------cpu=3, debug_printf_cpu_rq----------------------
rq = 00000000d72c33eb: rd = 0000000014388aae, sd = 0000000005fa314e, cpu_capacity = 230, cpu_capacity_orig = 241, max_idle_balance_cost = 200000
balance_callback:
-------------------------------------------------
---------------------cpu=3, debug_printf_sd_sds_sg_sgc_cpu_rq----------------------
rq->sd: sched_domain sd = 0000000005fa314e: parent = 000000009e86ff7e, child = (null), groups = 000000000018df41, name = MC, private = 0000000076e60833, shared = 000000005f941234, span = 0-1,3
rq->sd->groups: sched_group sg = 000000000018df41: next = 0000000000f13ee1, ref = 3, group_weight = 0, sgc = 000000003d6525d0, asym_prefer_cpu = 0, sge = 00000000ab7de946, cpumask = 3
rq->sd->groups->sgc: sched_group_capacity sgc = 000000003d6525d0: ref = 3, capacity = 230, min_capacity = 230, max_capacity = 230, next_update = 8909791, imbalance = 0, id = 3, cpumask = 3
rq->sd->shared: sched_domain_shared sds = 000000005f941234: ref = 3, nr_busy_cpus = 2, has_idle_cores = 0, overutilized = 0
rq->sd->parent: sched_domain sd = 000000009e86ff7e: parent = (null), child = 0000000005fa314e, groups = 0000000052c6c8c4, name = DIE, private = 000000002e14f724, shared = 000000000392d96b, span = 0-1,3-6
rq->sd->parent->groups: sched_group sg = 0000000052c6c8c4: next = 00000000cf354652, ref = 6, group_weight = 2, sgc = 00000000de302455, asym_prefer_cpu = 0, sge = 0000000042cf348e, cpumask = 0-1,3
rq->sd->parent->groups->sgc: sched_group_capacity sgc = 00000000de302455: ref = 6, capacity = 460, min_capacity = 230, max_capacity = 230, next_update = 8912959, imbalance = 0, id = 0, cpumask = 0-1,3
rq->sd->parent->shared: sched_domain_shared sds = 000000000392d96b: ref = 6, nr_busy_cpus = 0, has_idle_cores = 0, overutilized = 0
-------------------------------------------------
---------------------debug_printf_cache----------------------
cache: sd = 0000000005fa314e, size = 3, id = 0, sds= 000000005f941234, sdnuma = (null), sdasym = (null), sdea = 000000009e86ff7e, sdscs=0000000005fa314e
-------------------------------------------------

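5. After CPU7 is isolated, max_cpu_capacity.cpu = 7; after CPU7 is taken offline, max_cpu_capacity.cpu = 4. However, these experiments do not look very reliable, and the span contents are not reliable either.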

root_domain after isolating CPU7:
---------------------debug_printf_root_domain----------------------
refcount = 8, span = 0-7, max_cpu_capacity.val = 1024, max_cpu_capacity.cpu = 7, max_cap_orig_cpu = 7, min_cap_orig_cpu = 0
-------------------------------------------------

root_domain after offlining CPU2 and CPU7 and isolating CPU3:
---------------------debug_printf_root_domain----------------------
refcount = 6, span = 0-1,3-6, max_cpu_capacity.val = 790, max_cpu_capacity.cpu = 4, max_cap_orig_cpu = 4, min_cap_orig_cpu = 0
-------------------------------------------------

root_domain after offlining CPU3 and isolating CPU7:
---------------------debug_printf_root_domain----------------------
refcount = 7, span = 0-2,4-7, max_cpu_capacity.val = 790, max_cpu_capacity.cpu = 5, max_cap_orig_cpu = 7, min_cap_orig_cpu = 0
-------------------------------------------------

This output also appeared once:
---------------------debug_printf_root_domain----------------------
refcount = 3, span = 0-1, max_cpu_capacity.val = 906, max_cpu_capacity.cpu = 7, max_cap_orig_cpu = -1, min_cap_orig_cpu = -1
-------------------------------------------------

6. No matter how CPUs are isolated or taken offline, the values in the global cpu_topology[NR_CPUS] array do not change.