1. Import the following images on every node:

    [root@node1 DNS]# ll
    total 59816
    -rw-r--r--. 1 root root  8603136 Nov 25 18:13 exechealthz-amd64.tar.gz
    -rw-r--r--. 1 root root 47218176 Nov 25 18:13 kubedns-amd64.tar.gz
    -rw-r--r--. 1 root root  5424640 Nov 25 18:13 kube-dnsmasq-amd64.tar.gz
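The import step can be sketched as a small loop over the archives. This is only a sketch: it assumes Docker is the container runtime on each node and that the archives sit in one directory. The `load_images` function name and the `DRY_RUN` variable are hypothetical helpers introduced here for illustration, not part of the original procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: load every *.tar.gz image archive found in a directory.
# Assumption: Docker is the runtime on the node.
# Set DRY_RUN=1 to print the commands instead of running them.
load_images() {
  local dir="${1:-.}" f
  for f in "$dir"/*.tar.gz; do
    [ -e "$f" ] || continue          # skip when the glob matched nothing
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "docker load -i $f"
    else
      docker load -i "$f"
    fi
  done
}
```

Run on each node, for example, as `load_images /root/DNS` (the path is a placeholder); `docker images` should then list the three images.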

Prepare the following files:

    [root@manager ~]# cat kubedns-deployment.yaml
    # Copyright 2016 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # TODO - At some point, we need to rename all skydns-*.yaml.* files to kubedns-*.yaml.*
    # Should keep target in cluster/addons/dns-horizontal-autoscaler/dns-horizontal-autoscaler.yaml
    # in sync with this file.

    # Warning: This is a file generated from the base underscore template file: skydns-rc.yaml.base

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
    spec:
      replicas: 3
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        rollingUpdate:
          maxSurge: 10%
          maxUnavailable: 0
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
            scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
        spec:
          containers:
          - name: kubedns
            image: hub.c.163.com/allan1991/kubedns-amd64:1.9
            resources:
              # TODO: Set memory limits when we've profiled the container for large
              # clusters, then set request = limit to keep this container in
              # guaranteed class. Currently, this container falls into the
              # "burstable" category so the kubelet doesn't backoff from restarting it.
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            livenessProbe:
              httpGet:
                path: /healthz-kubedns
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /readiness
                port: 8081
                scheme: HTTP
              # we poll on pod startup for the Kubernetes master service and
              # only setup the /readiness HTTP server once that's available.
              initialDelaySeconds: 3
              timeoutSeconds: 5
            args:
            - --kube-master-url=http://192.168.10.220:8080 # change this to your own master IP
            - --domain=cluster.local.
            - --dns-port=10053
            - --config-map=kube-dns
            # This should be set to v=2 only after the new image (cut from 1.5) has
            # been released, otherwise we will flood the logs.
            - --v=0
            # {{ pillar['federations_domain_map'] }}
            env:
            - name: PROMETHEUS_PORT
              value: "10055"
            ports:
            - containerPort: 10053
              name: dns-local
              protocol: UDP
            - containerPort: 10053
              name: dns-tcp-local
              protocol: TCP
            - containerPort: 10055
              name: metrics
              protocol: TCP
          - name: dnsmasq
            image: hub.c.163.com/allan1991/kube-dnsmasq-amd64:1.4
            livenessProbe:
              httpGet:
                path: /healthz-dnsmasq
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            args:
            - --cache-size=1000
            - --no-resolv
            - --server=127.0.0.1#10053
            - --log-facility=-
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
            resources:
              requests:
                cpu: 150m
                memory: 10Mi
          # - name: dnsmasq-metrics
          #   image: gcr.io/google_containers/dnsmasq-metrics-amd64:1.0
          #   livenessProbe:
          #     httpGet:
          #       path: /metrics
          #       port: 10054
          #       scheme: HTTP
          #     initialDelaySeconds: 60
          #     timeoutSeconds: 5
          #     successThreshold: 1
          #     failureThreshold: 5
          #   args:
          #   - --v=2
          #   - --logtostderr
          #   ports:
          #   - containerPort: 10054
          #     name: metrics
          #     protocol: TCP
          #   resources:
          #     requests:
          #       memory: 10Mi
          - name: healthz
            image: hub.c.163.com/allan1991/exechealthz-amd64:1.2
            resources:
              limits:
                memory: 50Mi
              requests:
                cpu: 10m
                # Note that this container shouldn't really need 50Mi of memory. The
                # limits are set higher than expected pending investigation on #29688.
                # The extra memory was stolen from the kubedns container to keep the
                # net memory requested by the pod constant.
                memory: 50Mi
            args:
            - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null
            - --url=/healthz-dnsmasq
            - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1:10053 >/dev/null
            - --url=/healthz-kubedns
            - --port=8080
            - --quiet
            ports:
            - containerPort: 8080
              protocol: TCP
          dnsPolicy: Default  # Don't use cluster DNS.
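The `--kube-master-url` argument in the deployment must point at your own API server before the file is applied. A minimal sketch of making that edit from the command line follows. The `set_master_url` function is a hypothetical helper, and it assumes the URL contains no `|` or `&` characters, since those are special in the `sed` expression.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the --kube-master-url value in a manifest.
# Assumption: the new URL contains no '|' or '&' characters.
set_master_url() {
  local file="$1" url="$2"
  # Replace everything up to the next space, so a trailing comment survives.
  sed "s|--kube-master-url=[^ ]*|--kube-master-url=${url}|" "$file" > "${file}.tmp" \
    && mv "${file}.tmp" "$file"
}
```

For example, `set_master_url kubedns-deployment.yaml http://<your-master-ip>:8080`, where the placeholder stands for your own master address and port.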

    [root@manager ~]# cat kubedns-svc.yaml
    # Copyright 2016 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # This file should be kept in sync with cluster/images/hyperkube/dns-svc.yaml

    # TODO - At some point, we need to rename all skydns-*.yaml.* files to kubedns-*.yaml.*

    # Warning: This is a file generated from the base underscore template file: skydns-svc.yaml.base

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "KubeDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.10.10.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP

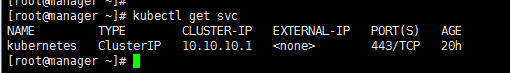

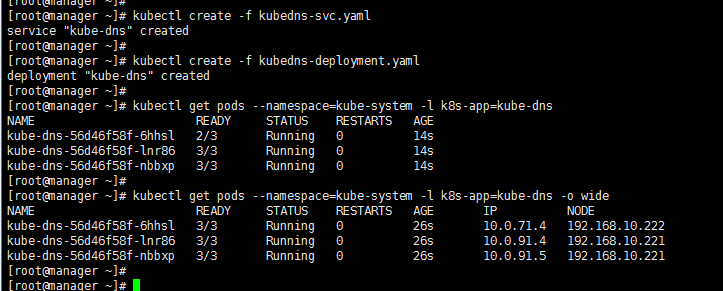

Create the Service and Deployment, then check the result:

    [root@manager ~]# kubectl create -f kubedns-svc.yaml
    service "kube-dns" created
    [root@manager ~]# kubectl create -f kubedns-deployment.yaml
    deployment "kube-dns" created
    [root@manager ~]# kubectl get pods --namespace=kube-system -l k8s-app=kube-dns
    NAME                       READY     STATUS    RESTARTS   AGE
    kube-dns-56d46f58f-6hhsl   2/3       Running   0          14s
    kube-dns-56d46f58f-lnr86   3/3       Running   0          14s
    kube-dns-56d46f58f-nbbxp   3/3       Running   0          14s
    [root@manager ~]# kubectl get pods --namespace=kube-system -l k8s-app=kube-dns -o wide
    NAME                       READY     STATUS    RESTARTS   AGE       IP          NODE
    kube-dns-56d46f58f-6hhsl   3/3       Running   0          26s       10.0.71.4   192.168.10.222
    kube-dns-56d46f58f-lnr86   3/3       Running   0          26s       10.0.91.4   192.168.10.221
    kube-dns-56d46f58f-nbbxp   3/3       Running   0          26s       10.0.91.5   192.168.10.221
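In the first listing one pod is still at 2/3 and only reaches 3/3 a few seconds later, so it can help to check the READY column mechanically rather than by eye. The following is a rough sketch; `all_ready` is a hypothetical helper that reads `kubectl get pods` output on standard input and assumes the default column layout shown above, with READY as the second column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every pod line on stdin shows n/n in
# the READY column. Assumes the default `kubectl get pods` table layout.
all_ready() {
  awk 'NR > 1 {                       # skip the header row
         split($2, r, "/")            # READY looks like "2/3"
         if (r[1] != r[2]) bad = 1
         n++
       }
       END { if (n == 0 || bad) exit 1 }'
}
```

A possible use is `kubectl get pods --namespace=kube-system -l k8s-app=kube-dns | all_ready && echo "kube-dns is ready"`, repeated until it succeeds.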

Verify the result: