The disk filled up once, which left many HDFS blocks with only a single replica.

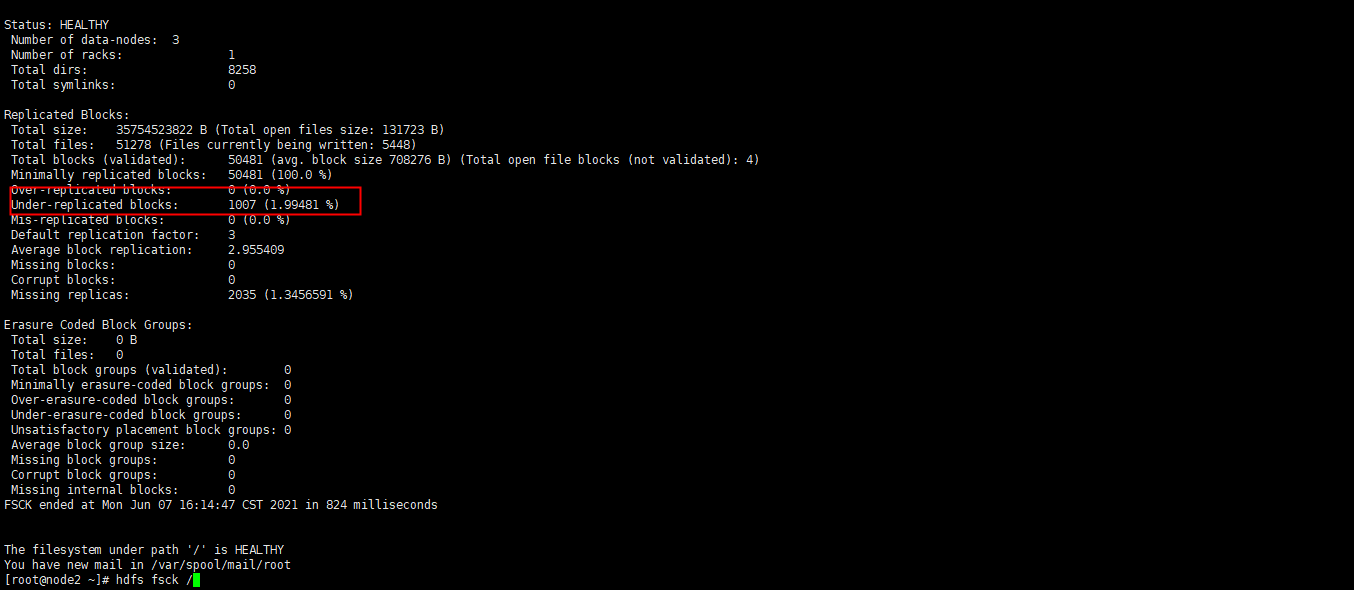

Check the replica information.

Run hdfs fsck /

1007 blocks have fewer than 3 replicas.
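To get a count like this without reading the whole report, the per-block lines can be tallied. This is a minimal sketch, assuming fsck prints one line containing `Under replicated` per affected block (the same text the grep further down relies on). `count_under_replicated` is a hypothetical helper name, and it reads the report from stdin so it can also be tried on a saved report.

```shell
#!/bin/bash
# Hypothetical helper: count report lines that mention an under-replicated block.
# Reads fsck output on stdin. grep -c exits non-zero when nothing matches,
# so "|| true" keeps the function's exit status at 0 in that case.
count_under_replicated() {
  grep -c 'Under replicated' || true
}

# Usage (requires a running cluster):
#   hdfs fsck / | count_under_replicated
```

Because a file with several affected blocks may appear on several lines, this count is per block, not per file.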

In addition, the HBase RegionServer fails to start with this error:

File /apps/hbase/data/data/default/RECOMMEND.HOT_GOODS_RECOMMEND/3c3424f8a720878ebd969d90b0b376b9/recovered.edits/0000000000000027303-node1%2C16020%2C1621934159558.1622652845389.temp could only be written to 0 of the 1 minReplication nodes. There are 3 datanode(s) running and no node(s) are excluded in this operation.

Try repairing these replicas.

First, write the paths of the files with under-replicated blocks to /tmp/under_replicated_files

hdfs fsck / | grep 'Under replicated' | awk -F':' '{print $1}' >> /tmp/under_replicated_files
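The command above appends with `>>`, so running it again adds the same paths a second time, and a file with several affected blocks may be listed more than once. A small sketch of a de-duplicating variant follows. `list_under_replicated_files` is a hypothetical helper name, and it assumes each affected line looks like `<path>: Under replicated ...` with no `:` inside the path itself.

```shell
#!/bin/bash
# Hypothetical helper: turn fsck output (stdin) into a de-duplicated list of paths.
# Same grep/awk as above, plus sort -u to drop repeated paths.
list_under_replicated_files() {
  grep 'Under replicated' | awk -F':' '{print $1}' | sort -u
}

# Usage (requires a running cluster); '>' overwrites the list on each run:
#   hdfs fsck / | list_under_replicated_files > /tmp/under_replicated_files
```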

Write a script to repair them in bulk

    #!/bin/bash
    # Recover the lease on each file listed in /tmp/under_replicated_files
    while IFS= read -r line; do
      hdfs debug recoverLease -path "$line" < /dev/null
    done < /tmp/under_replicated_files
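As far as the HDFS documentation describes it, `hdfs debug recoverLease` recovers the write lease on a file; it does not by itself change the file's replication. A commonly used alternative for under-replicated files is to re-apply the target replication factor with `hdfs dfs -setrep`, which asks the NameNode to schedule the missing copies. The sketch below assumes a target of 3 replicas (matching the fsck result above) and a list file with one path per line. `set_replication_from_list` is a hypothetical helper name, not something shipped with Hadoop.

```shell
#!/bin/bash
# Hypothetical helper: re-apply a replication factor to every path in a list file.
# $1 = list file (one HDFS path per line), $2 = target replicas (default 3, an assumption).
set_replication_from_list() {
  local list_file="$1" replicas="${2:-3}" path
  while IFS= read -r path || [ -n "$path" ]; do
    [ -z "$path" ] && continue          # skip blank lines
    echo "Fixing $path"
    # </dev/null keeps the command from consuming the loop's input
    hdfs dfs -setrep "$replicas" "$path" < /dev/null
  done < "$list_file"
}

# Usage (requires a running cluster):
#   set_replication_from_list /tmp/under_replicated_files 3
```

Re-replication still needs free space on the DataNodes, so this only helps once the full disk has been cleaned up.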

After the repair finishes, run hdfs fsck / again to check the state of the blocks.