Caused by: java.lang.reflect.InvocationTargetException at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:423) at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp$$anonfun$run$2.apply(RecommendAnalysisApp.scala:33) at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp$$anonfun$run$2.apply(RecommendAnalysisApp.scala:33) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.mutable.HashSet.foreach(HashSet.scala:78) at scala.collection.TraversableLike$class.map(TraversableLike.scala:234) at scala.collection.mutable.AbstractSet.scala$collection$SetLike$$super$map(Set.scala:46) at scala.collection.SetLike$class.map(SetLike.scala:92) at scala.collection.mutable.AbstractSet.map(Set.scala:46) at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp$.run(RecommendAnalysisApp.scala:33) at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp.run(RecommendAnalysisApp.scala) ... 11 more Caused by: java.sql.SQLException: ERROR 2006 (INT08): Incompatible jars detected between client and server. Ensure that phoenix-[version]-server.jar is put on the classpath of HBase in every region server: Can't find method newStub in org.apache.phoenix.coprocessor.generated.MetaDataProtos$MetaDataService! at org.apache.phoenix.exception.SQLExceptionCode$Factory$1.newException(SQLExceptionCode.java:494) at org.apache.phoenix.exception.SQLExceptionInfo.buildException(SQLExceptionInfo.java:150) at org.apache.phoenix.query.ConnectionQueryServicesImpl.checkClientServerCompatibility(ConnectionQueryServicesImpl.java:1326) at org.apache.phoenix.query.ConnectionQueryServicesImpl.ensureTableCreated(ConnectionQueryServicesImpl.java:1162) at org.apache.phoenix.query.ConnectionQueryServicesImpl.createTable(ConnectionQueryServicesImpl.java:1501) at org.apache.phoenix.query.DelegateConnectionQueryServices.createTable(DelegateConnectionQueryServices.java:119) at org.apache.phoenix.schema.MetaDataClient.createTableInternal(MetaDataClient.java:2721) at org.apache.phoenix.schema.MetaDataClient.createTable(MetaDataClient.java:1114) at org.apache.phoenix.compile.CreateTableCompiler$1.execute(CreateTableCompiler.java:192) at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:408) at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:391) at org.apache.phoenix.call.CallRunner.run(CallRunner.java:53) at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:390) at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:378) at org.apache.phoenix.jdbc.PhoenixStatement.executeUpdate(PhoenixStatement.java:1806) at org.apache.phoenix.query.ConnectionQueryServicesImpl$12.call(ConnectionQueryServicesImpl.java:2569) at org.apache.phoenix.query.ConnectionQueryServicesImpl$12.call(ConnectionQueryServicesImpl.java:2532) at org.apache.phoenix.util.PhoenixContextExecutor.call(PhoenixContextExecutor.java:76) at org.apache.phoenix.query.ConnectionQueryServicesImpl.init(ConnectionQueryServicesImpl.java:2532) at org.apache.phoenix.jdbc.PhoenixDriver.getConnectionQueryServices(PhoenixDriver.java:255) at org.apache.phoenix.jdbc.PhoenixEmbeddedDriver.createConnection(PhoenixEmbeddedDriver.java:150) at org.apache.phoenix.jdbc.PhoenixDriver.connect(PhoenixDriver.java:221) at java.sql.DriverManager.getConnection(DriverManager.java:664) at java.sql.DriverManager.getConnection(DriverManager.java:208) at org.apache.phoenix.mapreduce.util.ConnectionUtil.getConnection(ConnectionUtil.java:113) at org.apache.phoenix.mapreduce.util.ConnectionUtil.getInputConnection(ConnectionUtil.java:58) at org.apache.phoenix.mapreduce.util.PhoenixConfigurationUtil.getSelectColumnMetadataList(PhoenixConfigurationUtil.java:354) at org.apache.phoenix.spark.PhoenixRDD.toDataFrame(PhoenixRDD.scala:118) at org.apache.phoenix.spark.SparkSqlContextFunctions.phoenixTableAsDataFrame(SparkSqlContextFunctions.scala:39) at com.wanmi.sbc.dw.spark.recommend.db.PhoenixDb.select(PhoenixDb.scala:40) at com.wanmi.sbc.dw.spark.recommend.CommonDimensionData.user_recommend_goods_data$lzycompute(CommonDimensionData.scala:222) at com.wanmi.sbc.dw.spark.recommend.CommonDimensionData.user_recommend_goods_data(CommonDimensionData.scala:222) at com.wanmi.sbc.dw.spark.recommend.CommonDimensionData.<init>(CommonDimensionData.scala:254) at com.wanmi.sbc.dw.spark.recommend.DataAnalysis.<init>(DataAnalysis.scala:16) at com.wanmi.sbc.dw.spark.recommend.hot.HotGoodsRecommend.<init>(HotGoodsRecommend.scala:19) ... 26 more Caused by: java.lang.IllegalArgumentException: Can't find method newStub in org.apache.phoenix.coprocessor.generated.MetaDataProtos$MetaDataService! at org.apache.hadoop.hbase.util.Methods.call(Methods.java:47) at org.apache.hadoop.hbase.protobuf.ProtobufUtil.newServiceStub(ProtobufUtil.java:1537) at org.apache.hadoop.hbase.client.HTable$12.call(HTable.java:996) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) Caused by: java.lang.NoSuchMethodException: org.apache.phoenix.coprocessor.generated.MetaDataProtos$MetaDataService.newStub(org.apache.hadoop.hbase.shaded.com.google.protobuf.RpcChannel) at java.lang.Class.getMethod(Class.java:1786) at org.apache.hadoop.hbase.util.Methods.call(Methods.java:39)

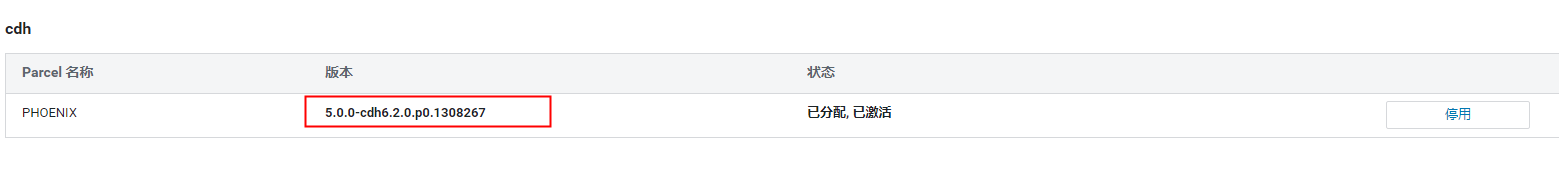

使用parall包安装的phoniex

在cdh集群执行spark读取phoniex数据是报上面错误

spark-shell --jars hdfs://hdfs地址:8020/spark/yarn/phoenix-core-5.0.0-HBase-2.0.jar,hdfs://hdfs地址:8020/spark/yarn/phoenix-spark-5.0.0-HBase-2.0.jar,hdfs://hdfs地址:8020/spark/yarn/hppc-0.7.2.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core-3.0.4.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core-3.1.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core-3.2.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core4-4.2.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-api-0.6.0.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-core-0.6.0.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-api-0.14.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-core-0.14.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/twill-zookeeper-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-api-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-common-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-core-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-discovery-api-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-discovery-core-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/disruptor-3.3.6.jar,hdfs://hdfs地址:8020/spark/yarn/antlr4-runtime-4.7.jar,hdfs://hdfs地址:8020/spark/yarn/antlr-2.7.7.jar,hdfs://hdfs地址:8020/spark/yarn/antlr-runtime-3.4.jar,hdfs://hdfs地址:8020/spark/yarn/antlr-runtime-3.5.2.jar

使用idea开发环境没问题

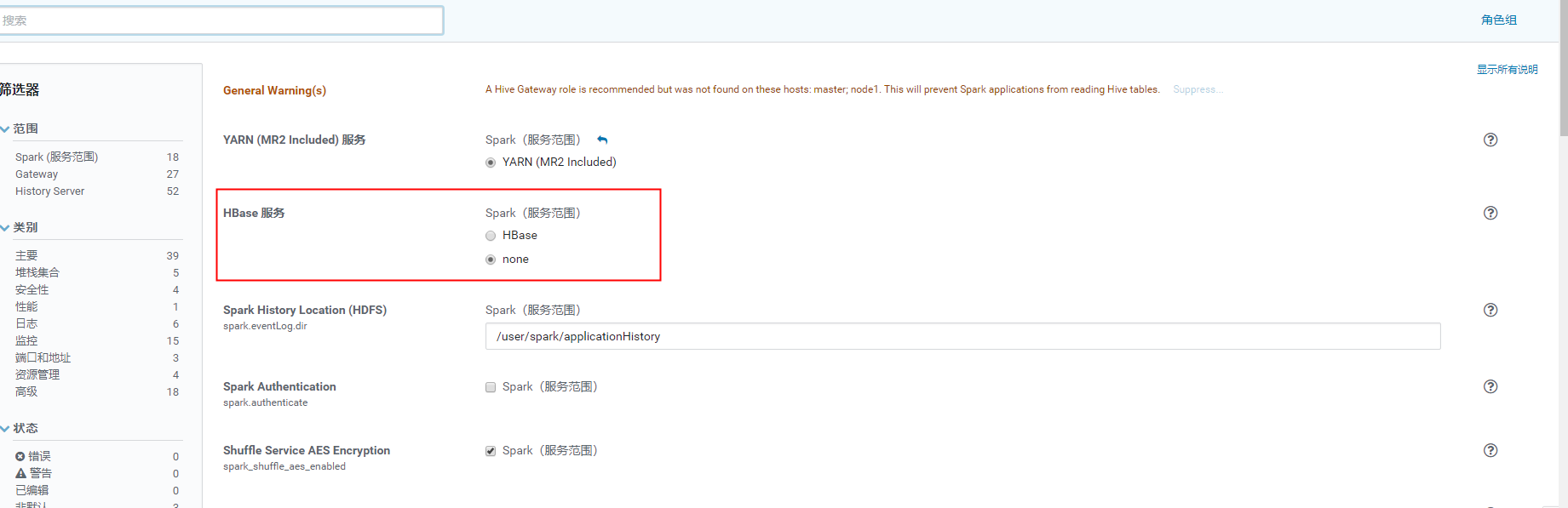

排查了1天,最大可能是cdh的 hbase的jar包依赖冲突的问题

选择将hbase服务依赖去掉,spark启动不加载hbase的classpath

将hbase相关jar copy到spark的classpath ,在运行代码成功了

cp $HBASE_HOME/lib/hbase-* $SPARK_HOME/jars

val df= spark.read.format("org.apache.phoenix.spark").options(Map("table" -> "RECOMMEND.HOT_GOODS_RECOMMEND", "zkUrl" -> "master:2181", "skipNormalizingIdentifier" -> "true")).load()

成功读取

太南了!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!